Variations

We live in the thrall of technology and our lives seem to be “technologically textured for most of our waking moments” (Ihde, 1983, p.3).

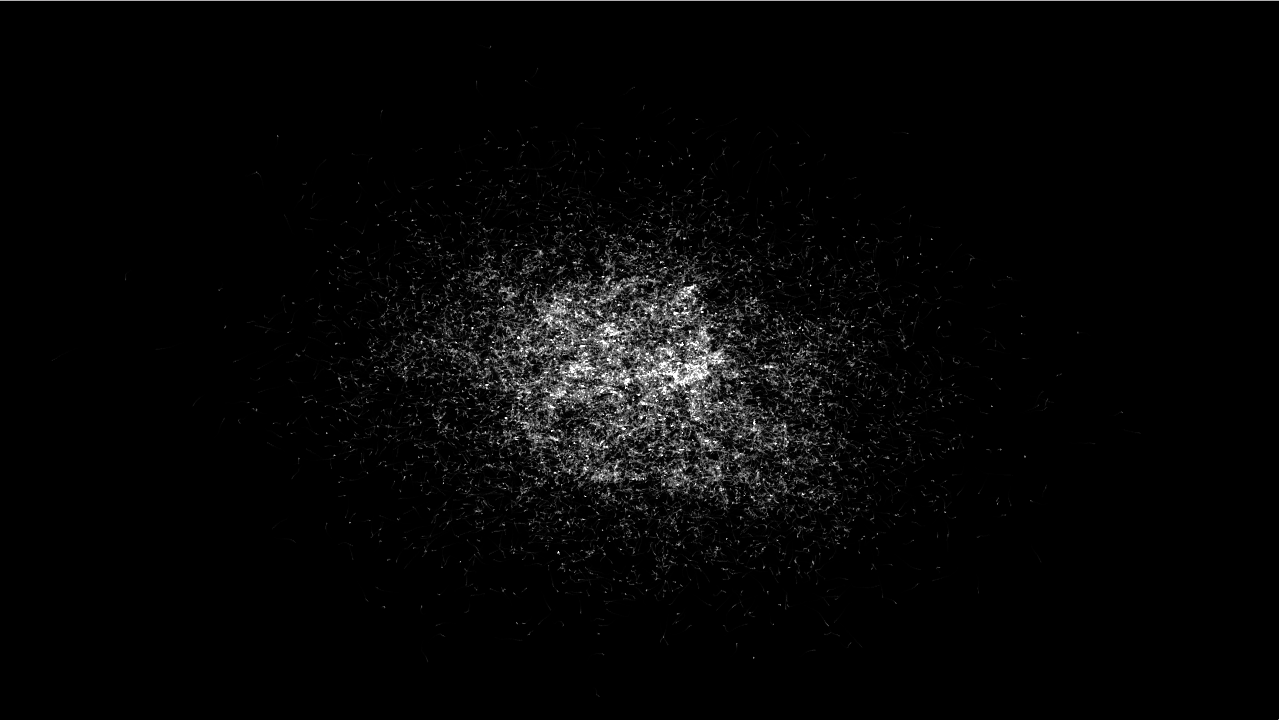

Variations is a real-time audiovisual dialogue between the machine and the human being. Behind this dialogue lies a process that links personal memories and emotions to one another. The performance translates this concept into the rhythm of the visuals: some of them are generative, responding to the frequencies of the sound design and negotiating the boundary of randomness. Variations also explores the impact of technology on human action.

produced by: Ioannis Kanelis

Background

I view this project as a chance to challenge myself by creating a live audiovisual performance and to experiment with the interaction between visuals and different variations of sound. I am particularly interested in audiovisual performances because they establish a strong sense of interaction between the observer and what is being observed. As Massumi proposed, “interactivity can make the useful less boring and the serious more engaging”. This is also what I am trying to achieve with the “Variations” performance. For the visual part of the project I was aesthetically inspired by visual artists such as Ryoji Ikeda, Robert Henke, Carsten Nicolai, and Ryo Ikeshiro, whose performances are simultaneously simple and complex. The sounds were mainly influenced by sound artists and musicians such as Tim Hecker, Emptyset, and Iannis Xenakis. The main idea for the content of this project was inspired by the beauty that can evolve from generating particles and the variety of their behaviours.

Project Description

This project is divided into three different parts or types of interaction between the human and the machine. The first part consists of genetic algorithms which are responsible for generating sounds from a music software programme (Ableton/Max for Live). In this section, particles are created in order to achieve the goal of finding a target. When particles make contact with the target, this generates a specific sound. When particles hit the existing obstacle, a different sound is generated and the obstacle flashes. Each time the algorithm runs, the particles become smarter and avoid the obstacle in order to hit the target.
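The evolutionary step described above can be sketched roughly as follows. This is a minimal illustration in plain C++, not the project's code: the `Particle` layout, the fitness weights (x10 for reaching the target, x0.1 for hitting the obstacle), and the single-point crossover are all assumptions, loosely following the Smart Rockets idea. The simulation that moves each particle and sets `hitTarget` and `hitObstacle` is left out.

```cpp
#include <cassert>
#include <cstddef>
#include <random>
#include <vector>

struct Vec2 { float x, y; };

struct Particle {
    std::vector<Vec2> genes;      // one steering force per frame
    Vec2 pos = {0.0f, 0.0f};      // position at the end of the run
    bool hitTarget = false;
    bool hitObstacle = false;
};

// Closer to the target is fitter. The reward and penalty factors
// are illustrative assumptions, not values from the project.
float fitness(const Particle& p, Vec2 target) {
    float dx = p.pos.x - target.x;
    float dy = p.pos.y - target.y;
    float f = 1.0f / (1.0f + dx * dx + dy * dy);
    if (p.hitTarget)   f *= 10.0f;
    if (p.hitObstacle) f *= 0.1f;
    return f;
}

// Builds the next generation: fitness-proportional parent selection,
// single-point crossover, then per-gene random mutation.
// Assumes every particle carries the same number of genes.
std::vector<Particle> nextGeneration(const std::vector<Particle>& pop,
                                     Vec2 target,
                                     float mutationRate,
                                     std::mt19937& rng) {
    assert(!pop.empty());
    std::vector<float> weights;
    for (const auto& p : pop) weights.push_back(fitness(p, target));

    std::discrete_distribution<std::size_t> pick(weights.begin(), weights.end());
    std::uniform_real_distribution<float> unit(0.0f, 1.0f);
    std::uniform_real_distribution<float> force(-1.0f, 1.0f);

    std::vector<Particle> next;
    for (std::size_t i = 0; i < pop.size(); ++i) {
        const Particle& a = pop[pick(rng)];
        const Particle& b = pop[pick(rng)];
        const std::size_t n = a.genes.size();
        std::uniform_int_distribution<std::size_t> cutDist(0, n);
        const std::size_t cut = cutDist(rng);

        Particle child;
        for (std::size_t g = 0; g < n; ++g) {
            Vec2 gene = (g < cut) ? a.genes[g] : b.genes[g];
            if (unit(rng) < mutationRate) gene = Vec2{force(rng), force(rng)};
            child.genes.push_back(gene);
        }
        next.push_back(child);
    }
    return next;
}
```

Because parents are drawn in proportion to fitness, particles that reached the target pass on their steering forces far more often than those that hit the obstacle, which is what makes later generations appear "smarter".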

In the second part of this project I play the sounds myself, and the visuals are created by an algorithm that interacts with these sounds.

Finally, the third part of the performance is the opposite of the second. Here the existing visuals affect the sounds: I choose what to play according to the visuals’ movement. The interesting thing about this part of the performance is that the visuals have a largely random output, so the sounds have to be produced and played live.

The main idea of this project is that there is a specific process behind the dialogue between personal memories and emotions and the way they are connected to each other. Therefore, I view this project and the output of my performance as my attempt to combine pain with beauty. I wanted to emphasize the co-existence of death, darkness, light, beauty and life, and to show that for me none of these elements repel or neutralize one another.

Process

I began by building the four scenes of this project as separate apps in Xcode. For the first scene I ported the well-known Smart Rockets example from the book “The Nature of Code” from Java to C++. This algorithm sends two different OSC messages to the music software programme (Ableton/Max for Live) in order to generate different sounds that are tied to particular conditions. One of the messages requests Ableton to play a sample when the particles hit the obstacle, and the other requests it to play a different sample when they hit the target.
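To make the two messages concrete, here is a rough sketch of how such an OSC message is laid out on the wire under OSC 1.0. In an openFrameworks app an addon such as ofxOsc would normally take care of this; the stdlib-only encoder below only illustrates the format. The addresses `/variations/obstacle` and `/variations/target` and the single integer argument are hypothetical, since the actual messages are not documented here, and sending the packet over UDP is omitted.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <string>
#include <vector>

// Appends an OSC string: the characters, a terminating null, then
// zero padding so the total length is a multiple of four bytes.
void appendOscString(std::vector<std::uint8_t>& out, const std::string& s) {
    out.insert(out.end(), s.begin(), s.end());
    const std::size_t pad = 4 - (s.size() % 4);  // 1 to 4 nulls
    out.insert(out.end(), pad, std::uint8_t{0});
}

// Encodes a message with one int32 argument:
// address pattern, type tag string ",i", then the big-endian value.
std::vector<std::uint8_t> encodeOscInt(const std::string& address,
                                       std::int32_t value) {
    assert(!address.empty() && address[0] == '/');
    std::vector<std::uint8_t> packet;
    appendOscString(packet, address);
    appendOscString(packet, ",i");
    const std::uint32_t bits = static_cast<std::uint32_t>(value);
    for (int shift = 24; shift >= 0; shift -= 8) {
        packet.push_back(static_cast<std::uint8_t>((bits >> shift) & 0xFF));
    }
    return packet;
}

// The two collision events described in the text.
enum class HitEvent { Obstacle, Target };

// Hypothetical address patterns; the receiving Max for Live patch
// would have to listen on whatever addresses are actually chosen.
std::string addressFor(HitEvent e) {
    return e == HitEvent::Obstacle ? "/variations/obstacle"
                                   : "/variations/target";
}
```

Calling `encodeOscInt(addressFor(HitEvent::Target), 1)` yields a packet that a UDP socket could send to the port the receiving patch listens on. Keeping one address per event lets the receiver route each collision to its own sample.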

For the second scene I used the FFT code from https://github.com/tado, which contains a few FFT routines, including a real FFT routine that is almost twice as fast as a normal complex FFT. Based on this FFT analysis, I then created different visuals using particles and sine and cosine movements. The same FFT code is also used in the third scene, which creates visuals based on Lissajous curves.
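As an illustration of the Lissajous scene, a curve of this kind follows x(t) = A sin(at + δ), y(t) = A sin(bt). The sketch below samples such a curve in plain C++. How the project actually couples the FFT output to the curve is not described, so `amplitudeFromMagnitude`, which scales the figure by a normalised band magnitude, is a hypothetical mapping.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct Point { float x, y; };

// Samples one full period (t from 0 to 2*pi) of the curve
//   x = amplitude * sin(a * t + delta),  y = amplitude * sin(b * t).
std::vector<Point> lissajous(float a, float b, float delta,
                             float amplitude, std::size_t samples) {
    assert(samples >= 2);
    const float twoPi = 6.28318530718f;
    std::vector<Point> pts;
    pts.reserve(samples);
    for (std::size_t i = 0; i < samples; ++i) {
        const float t = twoPi * static_cast<float>(i)
                              / static_cast<float>(samples - 1);
        pts.push_back({amplitude * std::sin(a * t + delta),
                       amplitude * std::sin(b * t)});
    }
    return pts;
}

// Hypothetical audio mapping: a band magnitude, clamped to [0, 1],
// scales the radius of the figure so louder input draws a larger curve.
float amplitudeFromMagnitude(float magnitude, float maxRadius) {
    const float m = std::min(std::max(magnitude, 0.0f), 1.0f);
    return m * maxRadius;
}
```

For example, `lissajous(3.0f, 2.0f, 1.5707963f, amplitudeFromMagnitude(level, 200.0f), 256)` would give 256 points of a 3:2 figure whose size follows a hypothetical `level` value taken from one FFT band. Changing the ratio of `a` to `b` changes the number of lobes.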

The fourth and final scene shows particles moving randomly, driven by a vector field algorithm retrieved from https://github.com/tado. This scene does not react to sound. Instead, the interaction takes place between the visuals I receive (the random movement of the particles) and the sounds I choose to produce in response to what I see.
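The general idea of moving particles through a vector field can be sketched as below. This is not the algorithm from the repository mentioned above: filling the grid with random unit vectors, the grid dimensions, and the wrap-around at the edges are all assumptions made for illustration.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <random>
#include <vector>

struct Vec2 { float x, y; };

// A grid of direction vectors covering a rectangular canvas.
class VectorField {
public:
    VectorField(std::size_t cols, std::size_t rows, float cellSize,
                std::mt19937& rng)
        : cols_(cols), rows_(rows), cellSize_(cellSize), cells_(cols * rows) {
        assert(cols > 0 && rows > 0 && cellSize > 0.0f);
        // Assumption: each cell gets a random unit vector.
        std::uniform_real_distribution<float> angle(0.0f, 6.2831853f);
        for (auto& c : cells_) {
            const float a = angle(rng);
            c = Vec2{std::cos(a), std::sin(a)};
        }
    }

    float width() const  { return static_cast<float>(cols_) * cellSize_; }
    float height() const { return static_cast<float>(rows_) * cellSize_; }

    // Returns the direction stored in the cell containing p.
    Vec2 sample(Vec2 p) const {
        return cells_[index(p.y, rows_) * cols_ + index(p.x, cols_)];
    }

private:
    // Maps a coordinate to a cell index, clamped to the grid.
    std::size_t index(float v, std::size_t count) const {
        const float cell = std::floor(std::max(v, 0.0f) / cellSize_);
        return std::min(static_cast<std::size_t>(cell), count - 1);
    }

    std::size_t cols_, rows_;
    float cellSize_;
    std::vector<Vec2> cells_;
};

// Wraps v into [0, limit] so particles re-enter on the opposite side.
float wrap(float v, float limit) {
    v = std::fmod(v, limit);
    return v < 0.0f ? v + limit : v;
}

// Moves a particle one step along the field direction at its position.
void step(Vec2& p, const VectorField& field, float speed) {
    const Vec2 d = field.sample(p);
    p.x = wrap(p.x + d.x * speed, field.width());
    p.y = wrap(p.y + d.y * speed, field.height());
}
```

In a draw loop, `step` would be called once per frame for every particle. A field that also changes over time, for instance one driven by noise, would keep the motion from settling into fixed paths.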

In order to control all of these different scenes and to introduce variations in each of them, I am using the ofxMidi addon, which connects one of my MIDI controllers to the openFrameworks app. A second MIDI controller is connected to the music software programme (Ableton/Max for Live).

Challenges & Further Development

The challenges I faced at the beginning of this project had to do with understanding the genetic algorithm and how to manipulate it. Other challenges lay in combining my four different apps into one main app. Additionally, it was quite challenging to figure out what type of visuals to use in order to create a solid and aesthetically coherent output. In the end, I found that the main challenge is to keep the computer from crashing or slowing down while different programmes such as Ableton and openFrameworks run simultaneously. This is still causing me some issues that I hope to be able to resolve.

The final challenge mentioned above is also one of the main further developments that I would propose. It would be a great achievement if different programmes could run at once on the same computer without causing it to crash, so that performers in this field would not have to use more than one computer to perform. For me personally, a further development would be to reimplement the algorithm in a single environment that incorporates both visuals and sounds, such as Max/MSP Jitter.

Source code:

http://gitlab.doc.gold.ac.uk/comp_arts_2015/sandbox/tree/master/students/Yannis/VariationsAVfinal

References

Brouwer, Joke, Arjen Mulder, Brian Massumi, Detlef Mertins, Lars Spuybroek, Moortje Marres, and Christian Hubler. 2007. Interact Or Die. Rotterdam: V2 Pub./NAi Publishers.

https://github.com/shiffman/The-Nature-of-Code/issues

https://github.com/tado

https://github.com/danomatika/ofxMidi