The Metaplasm

The Metaplasm is a mixed reality piece consisting of a computational leather prosthetic and an augmented computer vision app. The shoulder garment sends the wearer's movement data to the app, which mixes a phantom augmentation of floating images into the live camera feed.

produced by: Panja Göbel

Introduction

I was inspired by a section in Wendy Chun's Updating to Remain the Same about phone users feeling their mobile phones vibrate when no call was coming in. The book likens this phenomenon to phantom limb syndrome, in which amputees often feel strong sensations in their missing limb, seemingly at random and without explanation. I was curious about how we could use technology to illuminate subconscious internal thought patterns: an altered state of some sort, with the potential to unlock areas within our brain.

Concept and background research

Since the success of the smartphone and its suite of social apps, wearable tech gadgets have started to bleed into our daily lives in their drive to help us perform better. But they are also subtly changing our behaviours, turning us into performance junkies and making us lose touch with our previous internal selves. I was interested in exploring what our internal selves might look like, so I began to research artists who work with prosthetics to hack their bodies in different ways and create new sensations.

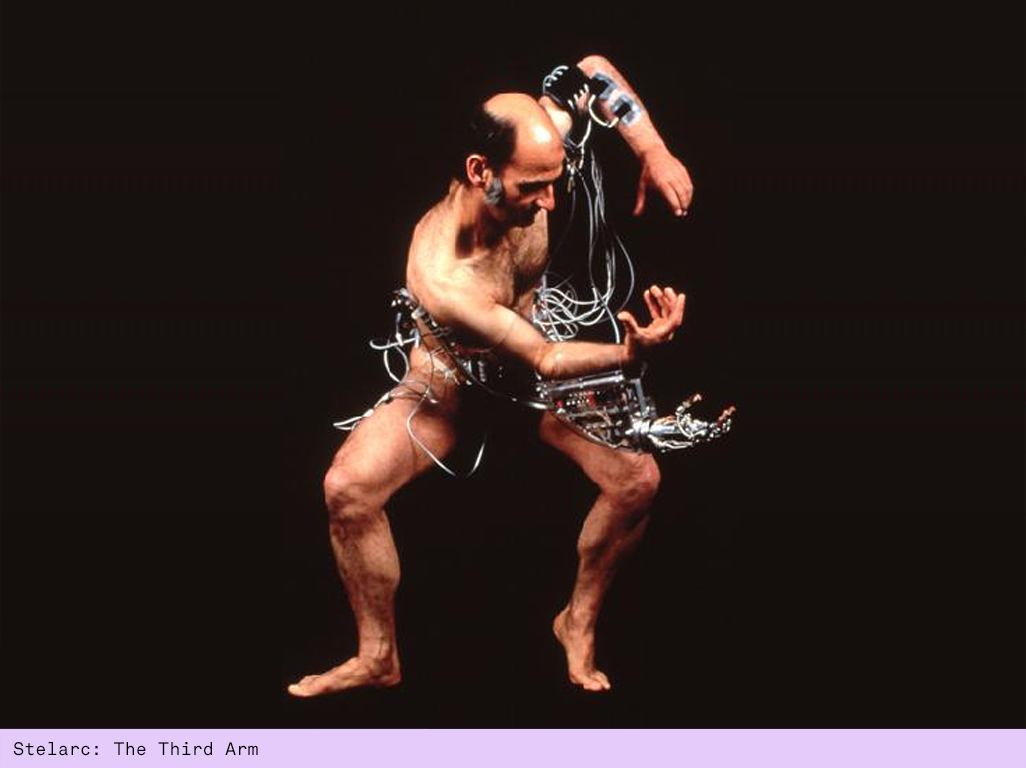

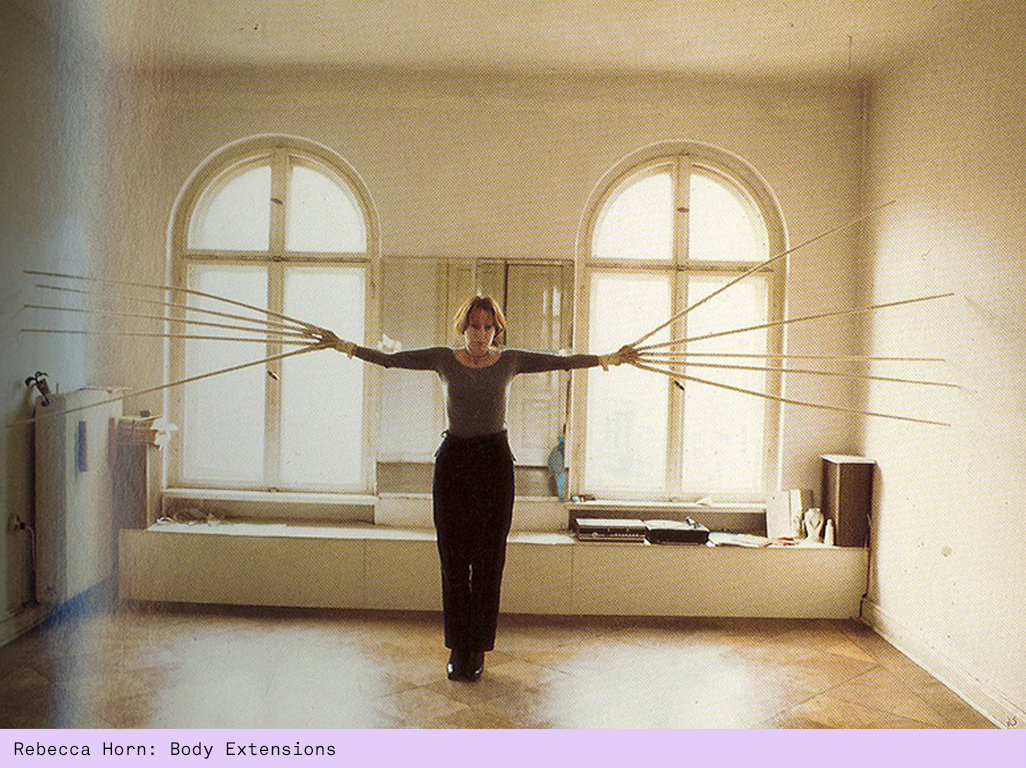

The Australian-Cypriot performance artist Stelarc uses his body as a rich interaction platform, visibly hacking it with electronics to blur the boundaries between man and machine. In his Third Hand project, a cyborg-like robotic arm attachment is controlled by EMG signals from other parts of the performer's body. Rebecca Horn's body sculptures and extensions are shaped by imagining the body as a machine. In her Extensions series, Horn's armatures recall 19th-century orthopaedic prosthetics supporting an injured body. Her armatures redirect the body's purpose, turning it into an instrument and often evoking a feeling of torture.

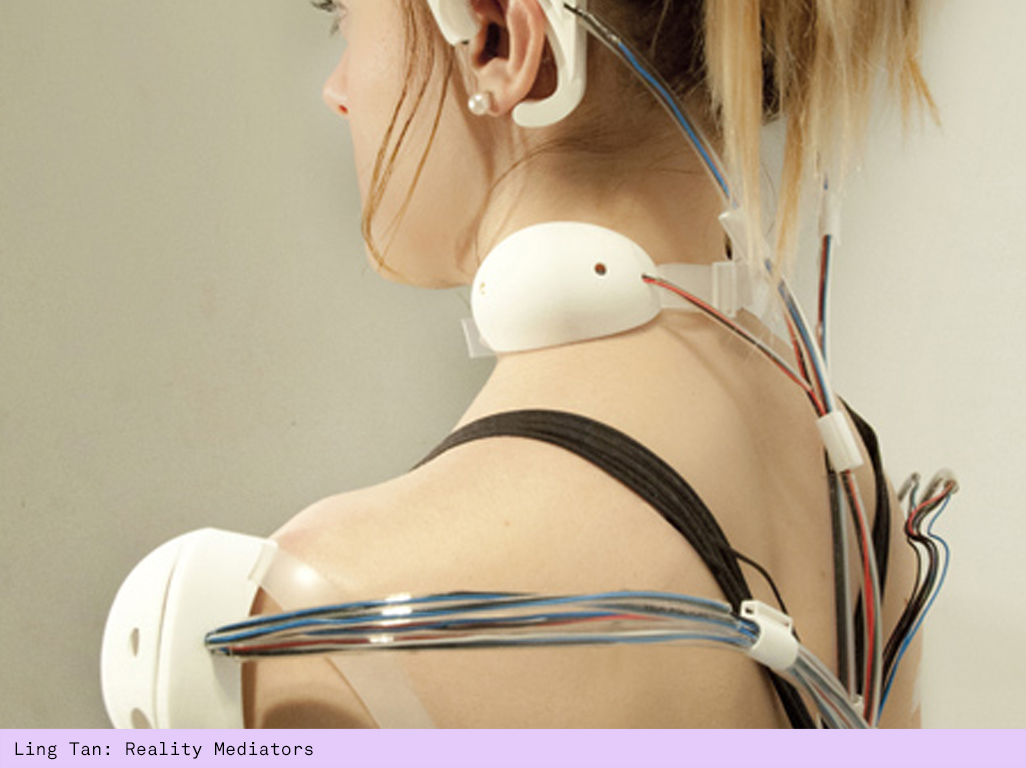

Ling Tan's Reality Mediators are wearable prosthetics that cause unpleasant sensations when the wearer's brain activity or muscle movement is low. Her project is a direct comment on the effect current wearables have on our perception of the world around us and on the way these products alter our daily behaviours.

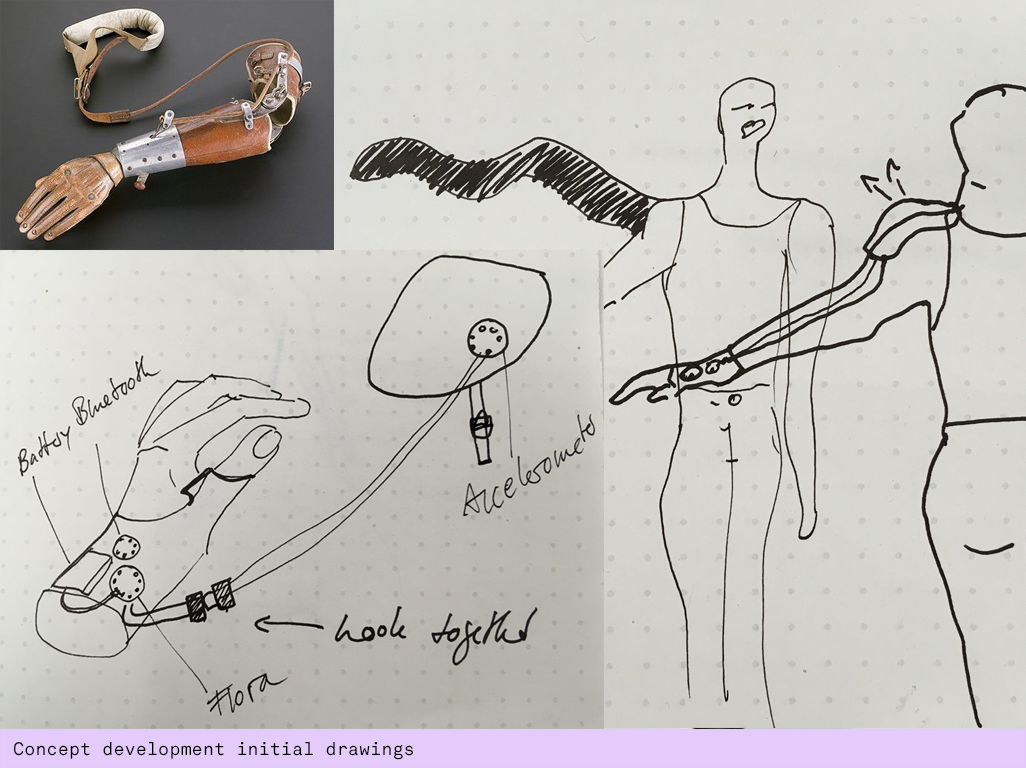

I decided to design a shoulder prosthetic that communicates with a computer vision app to let its wearer play with an augmented trail of images. It was important to me to present the experience as a clash of two worlds. The old world was represented by the prosthetic, which took great inspiration from Victorian prosthetics; I researched their look at the Wellcome Collection. But I also wanted the prosthetic to look really attractive and contemporary, and here I took visual inspiration from Olga Noronha's Ortho Prosthetics series.

Rather than being a tool to help the physical body function better, the prosthetic was meant to unlock and help navigate an internal monologue of thought images, represented by the projection. The images featured the stuff in our heads, ranging from online shopping activity to things we see out and about. The camera feed places the wearer at the centre, surrounded by a crude collection of stuff.

While reading Donna Haraway's The Companion Species Manifesto, I came across a great section where she talks about metaplasms. She explains: "I use metaplasm to mean the remodelling of dog and human flesh, remolding the codes of life, in the history of companion-species relating." Whilst Haraway explores the blurred boundaries in relationships between dogs and humans, the term felt like a very fitting title for an experience that aims to illuminate the internal phantoms that accumulate inside us and that we have no idea how to control.

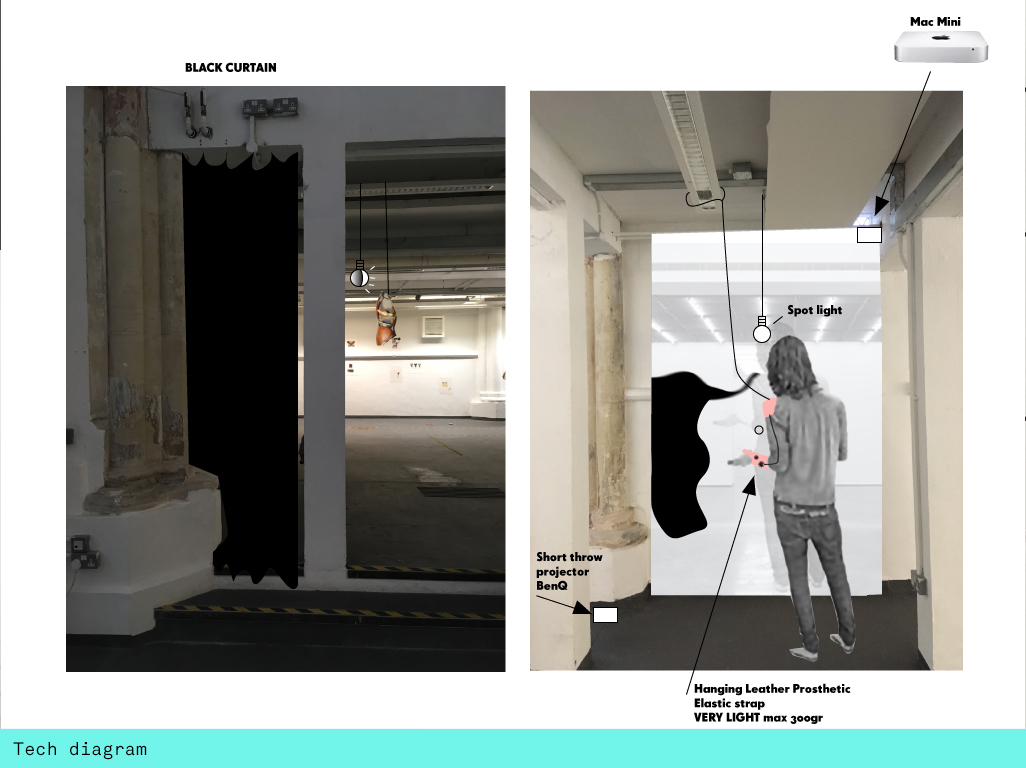

Technical

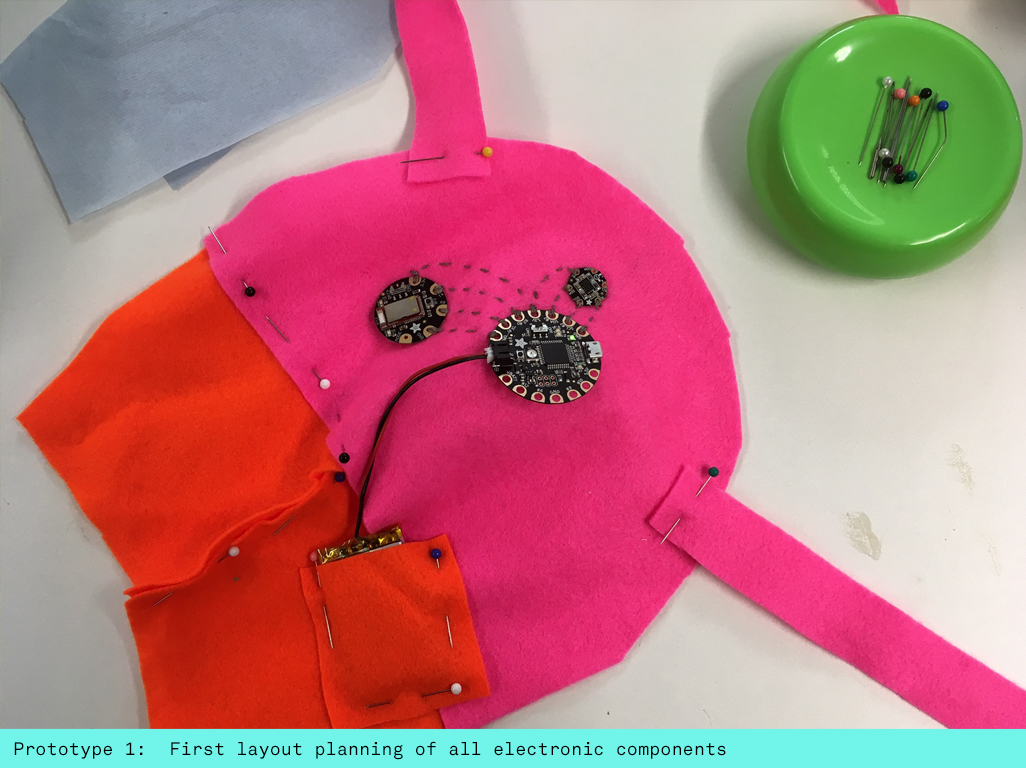

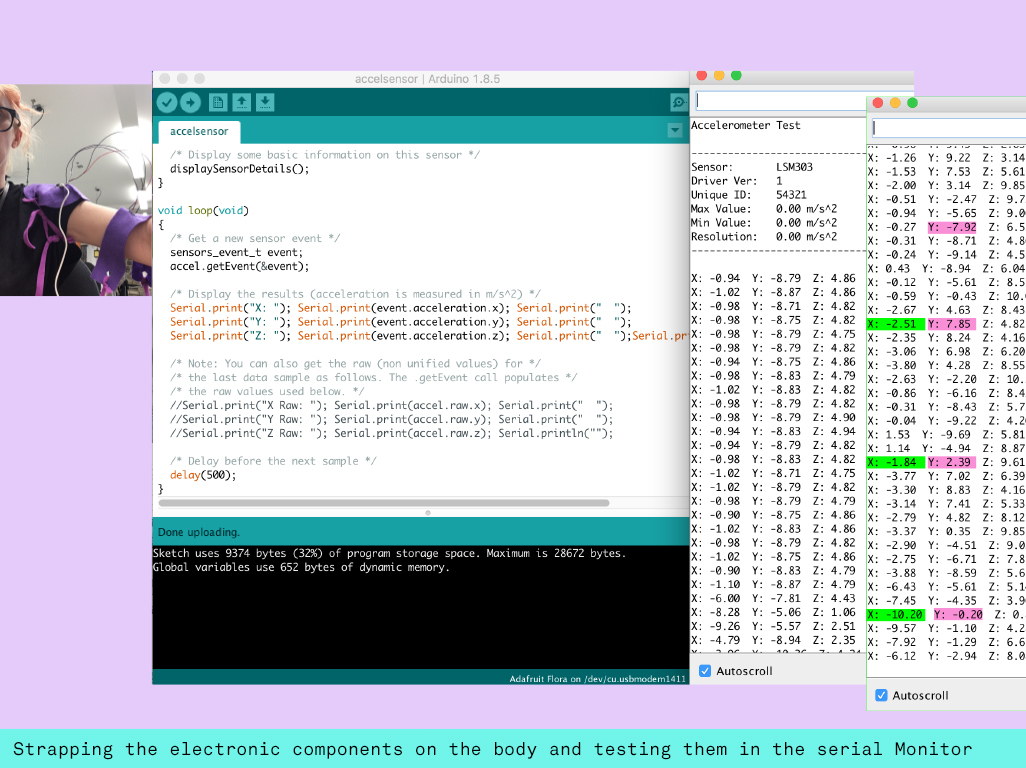

I began by dividing my project into two parts: the physical prosthetic and the augmented computer vision app. Having worked with the latter in my last project, I decided to concentrate on the physical aspects first. I chose the Arduino-compatible Adafruit Flora board with the LSM303 accelerometer/compass sensor. I also initially planned to make the whole experience wireless and ordered the Bluefruit LE Bluetooth module and a rechargeable LiPo battery pack. It was important to get the components onto my body as soon as possible to test where they should sit to work best. I laid them out on some cheap felt and sewed them on with conductive thread, following the helpful guides on the Adafruit website. Although I got the Bluetooth communication working immediately, I struggled to add the accelerometer as well. The connections seemed temperamental, so I removed the Bluetooth module to simplify communication between the components. Later I got some advice that made me rethink using Bluetooth in the church venue, and I decided that a 3 m micro USB cable would suit my aesthetic while making the experience more reliable. At this point I also switched to soldering silicone wires onto the board instead of using the less conductive thread.

Now my connections were very stable and I got clear differences in the readings when moving my arm. I used the accelsensor example that came with the LSM303 library and tested my movements in the Serial Monitor. I kept both the previous and the current readings so that a difference value for the acceleration could be calculated by subtracting the previous value from the current one. However, I found it hard to separate the x, y and z values from each other and to link them to understandable directions with the sensor sitting on the lower arm. To save time, I added the absolute values of all the differences together to create a single agitation value, which was then sent to the app.

I tested the communication between the electronics and openFrameworks with ofSerial to make sure the app received the values correctly, before parking the tech development to concentrate on the design of the prosthetic.
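The agitation calculation and the serial hand-off can be sketched in plain C++, so the logic can be checked without the board attached. This is a minimal sketch under assumptions: it treats each LSM303 reading as three floats, and it assumes a simple line protocol of one ASCII number per line, as Arduino's `Serial.println` would send it. The names `Reading`, `AgitationMeter`, `encodeLine` and `LineParser` are hypothetical and are not taken from the project's code or from the Adafruit or openFrameworks APIs.

```cpp
#include <cassert>
#include <cmath>
#include <optional>
#include <stdexcept>
#include <string>

// One accelerometer sample (x, y, z), e.g. as reported by the LSM303.
struct Reading {
    float x = 0.0f;
    float y = 0.0f;
    float z = 0.0f;
};

// Keeps the previous sample and turns each new one into a single
// "agitation" value: the sum of the absolute per-axis differences.
class AgitationMeter {
public:
    // Returns |dx| + |dy| + |dz| relative to the previous sample.
    // The very first sample has no predecessor, so it yields 0.
    float update(const Reading& current) {
        float agitation = 0.0f;
        if (hasLast_) {
            agitation = std::fabs(current.x - last_.x) +
                        std::fabs(current.y - last_.y) +
                        std::fabs(current.z - last_.z);
        }
        last_ = current;
        hasLast_ = true;
        return agitation;
    }

private:
    Reading last_;
    bool hasLast_ = false;
};

// Hypothetical wire format: one agitation value per line as ASCII text.
std::string encodeLine(float agitation) {
    return std::to_string(agitation) + "\n";
}

// App side: accumulate incoming bytes and emit a value once a full line
// has arrived. Partial lines are kept until the next call.
class LineParser {
public:
    std::optional<float> feed(char byte) {
        if (byte == '\r') return std::nullopt;          // println sends "\r\n"
        if (byte != '\n') {
            buffer_ += byte;
            return std::nullopt;
        }
        std::string line;
        line.swap(buffer_);                             // take the line, reset buffer
        if (line.empty()) return std::nullopt;
        try {
            return std::stof(line);
        } catch (const std::exception&) {               // invalid_argument / out_of_range
            return std::nullopt;                        // drop garbled lines
        }
    }

private:
    std::string buffer_;
};
```

On the app side, each byte returned by ofSerial's `readByte()` could be passed to `LineParser::feed`. Summing absolute differences, rather than keeping the axes separate, mirrors the single direction-free value described in the text.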

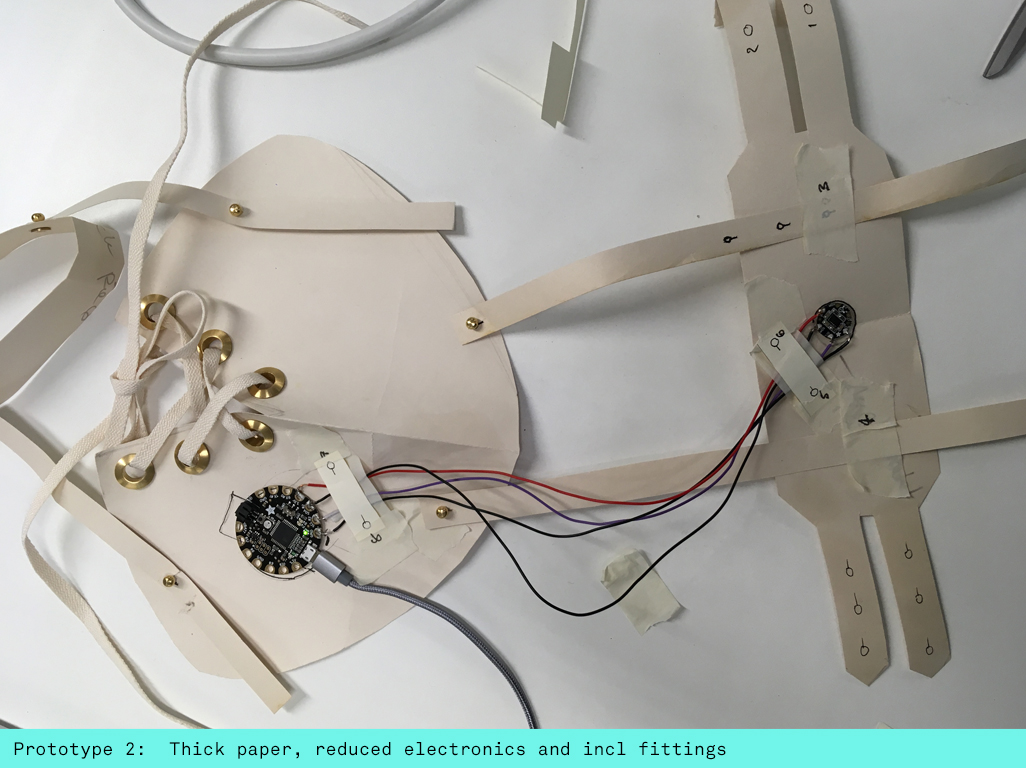

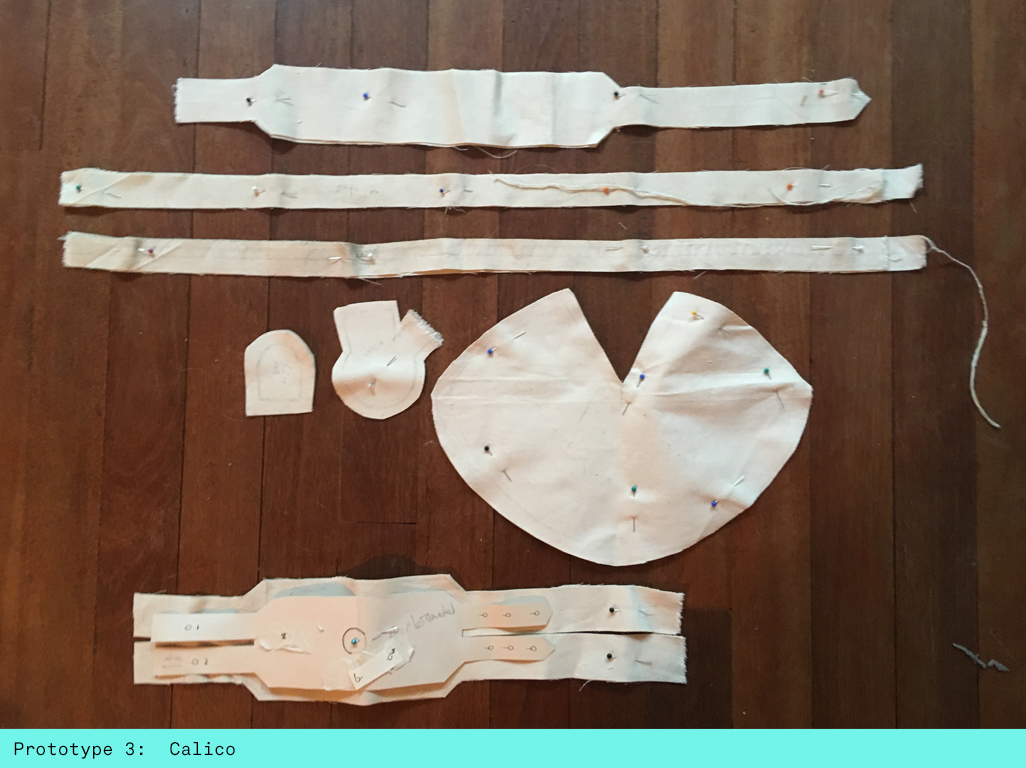

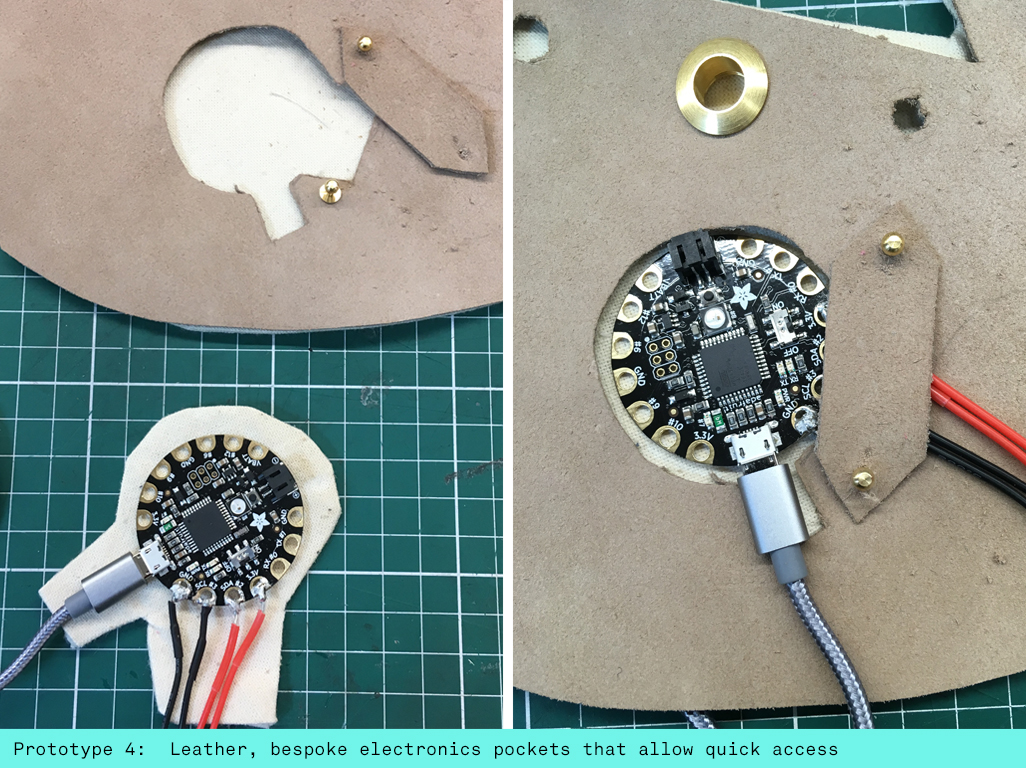

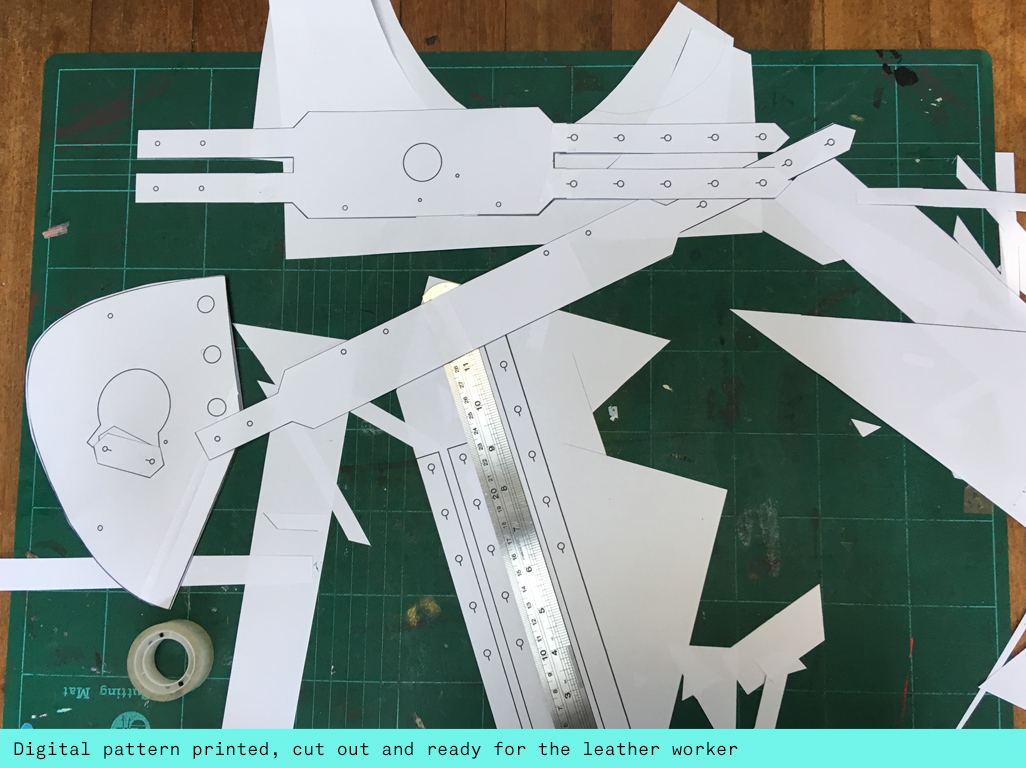

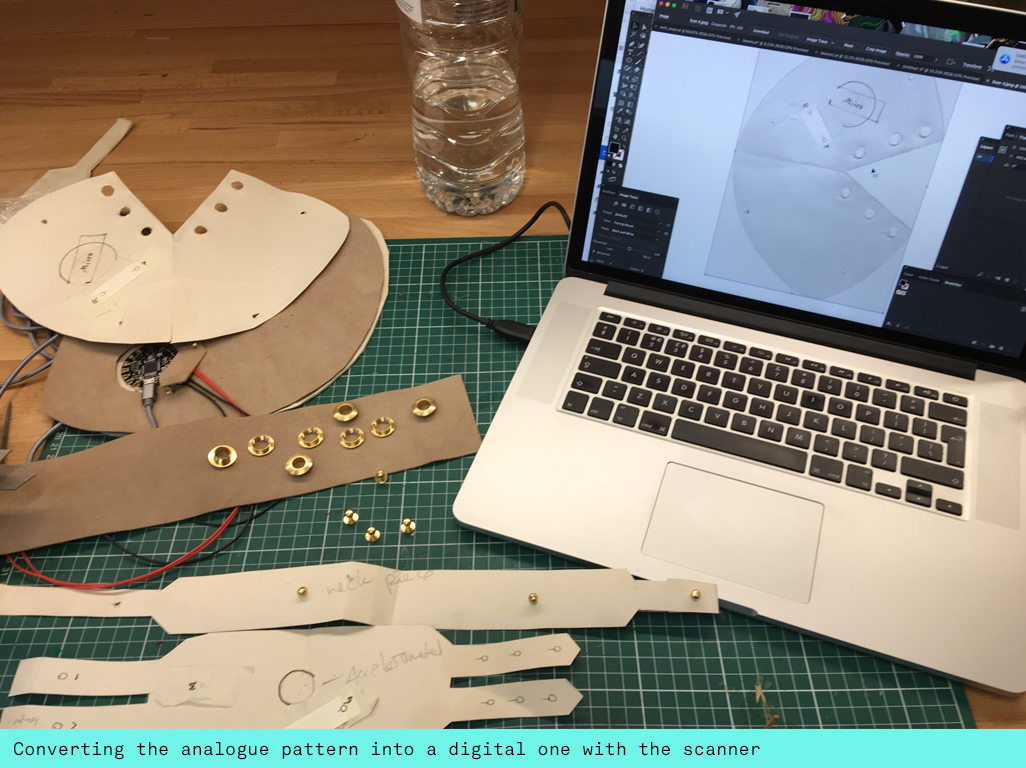

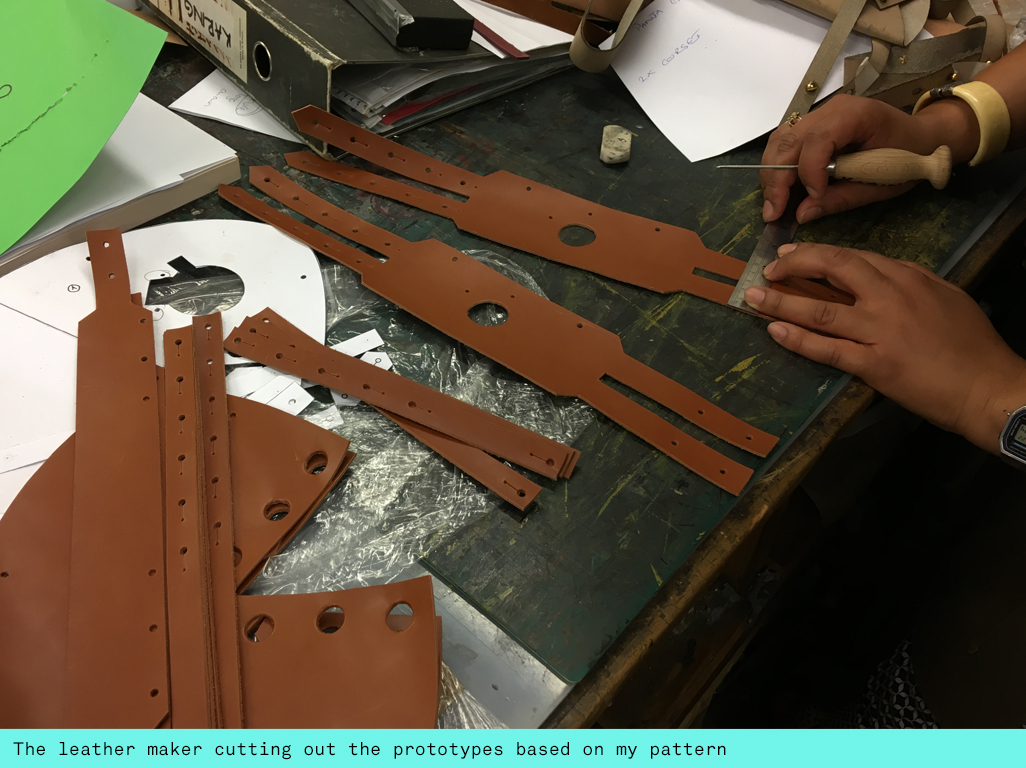

From my initial tests with the electronics I knew that I needed the Flora board on the shoulder and the accelerometer lower down on the arm. I wanted to make the prosthetic as adjustable as possible to accommodate as many different body sizes as possible. I started by cutting my first pattern from thick wallpaper lining paper, fitting it to a size 8 dressmaker's dummy that I have at home, and created plenty of adjustable straps to adapt the distances between neck, shoulder and lower arm. From there I moved on to cutting it out of dressmaker's calico and sewing it together to make my next prototype. In the meantime I found a leather workshop that sold amazing fittings such as brass Sam Browne studs and brass eyelets to pin the leather to the bottom layer of calico and make adjustable fittings. There I also found cheap leather offcuts to make my next prototype.

Once I was fairly happy with my paper pattern, it was time to digitise it by scanning it on a flatbed scanner and then tracing and tidying the lines in Illustrator. At this point I also created the exact openings for the electronics. I wanted to create little pockets that would let me whip the electronics out quickly and replace them if they broke. I placed the Flora board and the accelerometer on small pieces of calico, sewing the connections down so they were better protected. I also designed little leather flaps to cover and further protect the areas where the wires were soldered onto the board. With a digital pattern I was able to laser cut the leather as a last test before my final prototype. The laser cutter charred the edges of the leather, producing an overwhelming barbecue-charcoal smell that I didn't want in my final piece. I assembled the prototype to see whether I needed to make any final changes to the digital pattern. Finally, I was able to have a leather worker cut out my final piece from my printed pattern, using the assembled prototype as a reference.

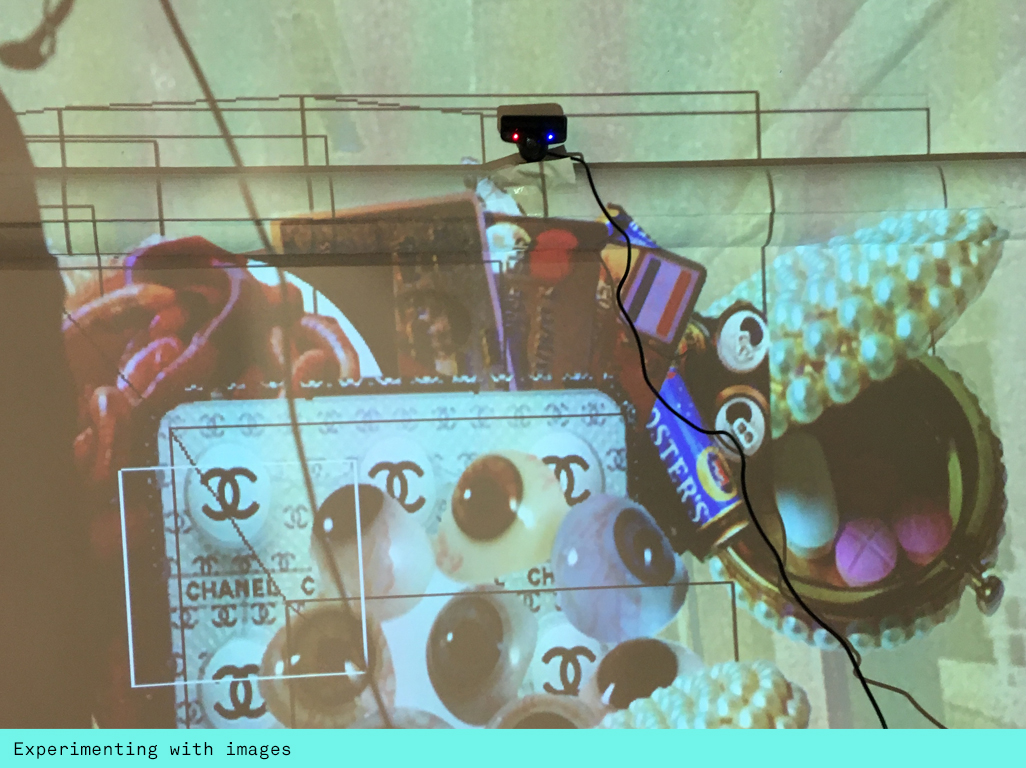

It was time to move on to creating the augmented phantom. For this I used Vanderlin's ofxBox2d addon to give the floating augmented images real physics behaviour. I started from its 'joint' example, which consists of several joints, lines and an anchor, and combined it with the openCV haarfinder example so that, once the app detected a face, it could hang the joint chain onto the user's shoulder. I created a separate class for the Box2D trail and fed in my movement data through a variable that affected the trail. I drew black outline squares and little white circles to mirror the aesthetic of the computer vision app and tested my movements. At this point I brought in some random images and stored them in a vector so they could be drawn into the outline squares at the same x/y positions. While playing around with it, I decided that it would be nice if the images reloaded at random, adding an element of surprise as well as control for the user. I built an algorithm that reloaded the vector whenever the user's agitation data accelerated the trail to the top of the screen. This worked pretty well, and by this point it was time to test it on location.
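The glue between face detection, movement data and the image reload can be sketched independently of the physics engine. This is an illustration under stated assumptions rather than the project's code: the shoulder offset factors, the `gain` parameter, the `topBand` threshold and all class and function names are hypothetical, and the Box2D simulation itself (bodies, joints, applying the force) is left to ofxBox2d. Screen coordinates follow openFrameworks, with the origin at the top left and y growing downwards.

```cpp
#include <algorithm>
#include <cassert>
#include <numeric>
#include <random>
#include <vector>

// Minimal stand-ins for the detected face rectangle and a screen point.
struct Rect { float x, y, width, height; };
struct Vec2 { float x, y; };

// Hypothetical mapping from a face bounding box to a shoulder anchor:
// offset sideways and below the face. The 1.5 factors are guesses.
Vec2 shoulderAnchor(const Rect& face) {
    return { face.x + face.width * 1.5f, face.y + face.height * 1.5f };
}

// Turns agitation into an upward force on the trail (negative y = up).
float liftForce(float agitation, float gain) {
    return -gain * agitation;
}

// Holds which image is drawn in each outline square and reshuffles the
// assignment once each time the trail enters the top band of the screen.
class ImageTrail {
public:
    ImageTrail(int squares, int imageCount, float topBand, unsigned seed)
        : topBand_(topBand), rng_(seed), pool_(imageCount) {
        assert(squares > 0 && squares <= imageCount);
        std::iota(pool_.begin(), pool_.end(), 0);   // image ids 0..imageCount-1
        slots_.resize(squares);
        reload();
    }

    // Call once per frame with the highest (smallest y) point of the trail.
    // Returns true only on the frame the trail enters the band, so holding
    // the trail at the top does not reload on every frame.
    bool update(float trailTopY) {
        const bool atTop = trailTopY < topBand_;
        const bool reloaded = atTop && !wasAtTop_;
        if (reloaded) reload();
        wasAtTop_ = atTop;
        return reloaded;
    }

    const std::vector<int>& slots() const { return slots_; }

private:
    // Random permutation of all images; the first few fill the squares,
    // so no image appears twice in the trail at once.
    void reload() {
        std::shuffle(pool_.begin(), pool_.end(), rng_);
        std::copy_n(pool_.begin(), slots_.size(), slots_.begin());
    }

    float topBand_;
    std::mt19937 rng_;
    std::vector<int> pool_;
    std::vector<int> slots_;
    bool wasAtTop_ = false;
};
```

Making the reload edge-triggered is a design choice: a level-triggered check would reshuffle the images on every frame the trail stayed near the top, which would read as flicker rather than surprise.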

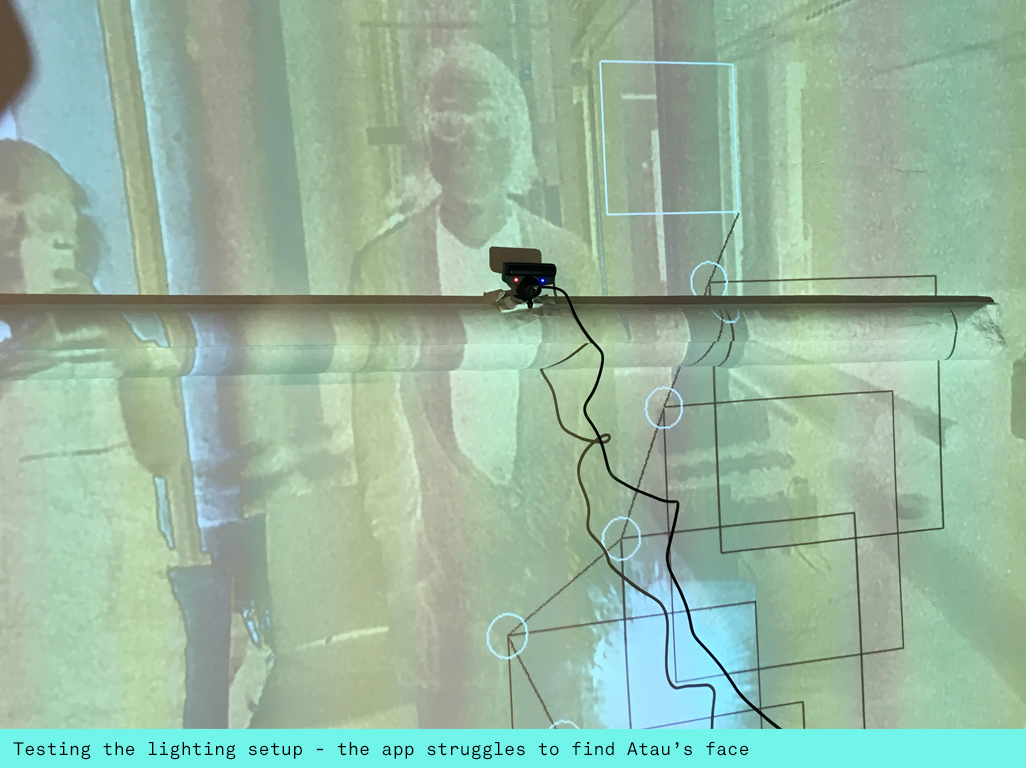

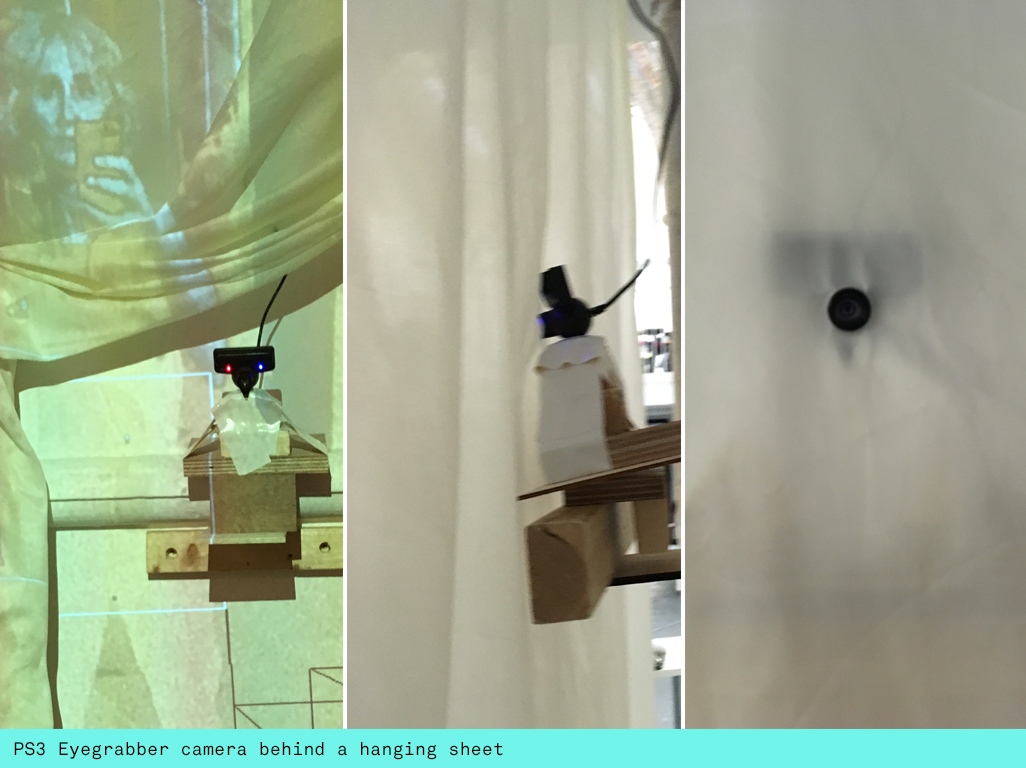

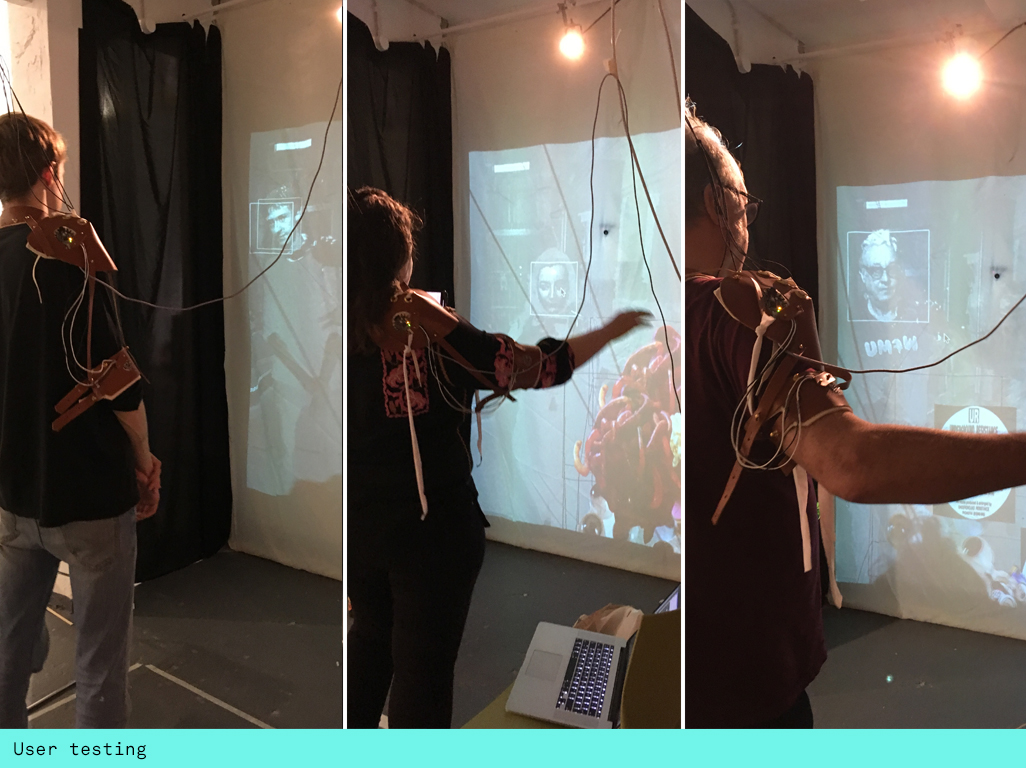

I knew my installation set-up was going to be challenging, as I had to project onto a full-height wall while meeting the lighting needs of a computer vision app. My spot was at the edge of the church's big space, at the beginning of an area that was meant to be semi-dark but received all the light from the big space. The BenQ short-throw projector was surprisingly powerful, giving a crisp, bright projection in a semi-dark space. I fitted a PS3 Eye camera on the projection wall and a spotlight further up, shining at the user and towards the projector. I hung a sheet over the wall, hiding the camera except for a small hole for the lens. I hung my shoulder prosthetic from the ceiling with a thin bungee cord, allowing it to be pulled down to different heights without any accidents. Now I was ready to test the interaction space and the position of the lamp by bringing in players of different heights throughout the day over several days. Although everyone's lighting requirements in the big open space were eventually agreed and fitted in with my own, I found the experience quite frustrating, as the natural daylight changed throughout the day and I couldn't do anything about it. I accepted these shortcomings and carried on refining the app. For this I produced 50 images representing daily life and modern-world memes, and loaded them into the app to give enough variation to delight users. Then I created a screenshot facility to record interactions: it took a screenshot whenever the app detected a face and then every 20 seconds of interaction, so I wouldn't end up with too many. I also designed a fixing hook for the bungee so users could unhook the prosthetic from the elastic and not get tangled up in it while moving about.
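The screenshot rule (capture when a face appears, then every 20 seconds of interaction) amounts to a small timer. A minimal sketch, assuming a per-frame face-visible flag and a time in seconds such as openFrameworks' `ofGetElapsedTimef()`; the class name `ScreenshotScheduler` is hypothetical, and actually saving the frame (for example with `ofSaveScreen()`) is left out.

```cpp
#include <cassert>

// Decides when to save a screenshot: once when a face first appears, then
// every `interval` seconds while the face stays in view.
class ScreenshotScheduler {
public:
    explicit ScreenshotScheduler(float interval) : interval_(interval) {
        assert(interval > 0.0f);
    }

    // Call once per frame; returns true when a screenshot should be taken.
    bool shouldCapture(bool faceVisible, float now) {
        if (!faceVisible) {              // interaction over: reset for the next visitor
            inInteraction_ = false;
            return false;
        }
        if (!inInteraction_ || now - lastShot_ >= interval_) {
            inInteraction_ = true;       // first sighting or interval elapsed
            lastShot_ = now;
            return true;
        }
        return false;
    }

private:
    float interval_;
    float lastShot_ = 0.0f;
    bool inInteraction_ = false;
};
```

Because the face tracking flickers, a single missed detection here counts as the end of an interaction and triggers a fresh screenshot when the face returns; a short grace period before resetting would reduce such duplicates.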

Future development

Testing the prosthesis on well over 100 users over three days, and observing each person interact with it, convinced me to make the experience wireless next time. It was a real joy to witness the different interaction behaviours users came up with. Some wanted to dance, some were play-acting, some tried Leap Motion-style gestures to grab the images and some were simply terrified. Overseeing this process and closely engaging with over 40 users on opening night alone was fascinating, if very tiring. I briefly considered hiring someone, but I wouldn't have had the time to train them to say the right things or ask the right questions. It was great to engage so closely with people, dressing different body shapes and constantly learning what could be done differently. Unsurprisingly, many users suggested a more personalised experience, i.e. using their own images. However, once we talked through what this would mean in terms of a privacy opt-in and a public projection of their online activity, they quickly understood that take-up would be low and that the experience would most likely look very different. I hope my conceptual approach gave them enough food for thought to imagine what images might be floating in their own heads. I also came across some interesting potential use cases from my audience. One visitor who worked with paraplegic children suggested that the experience had potential as a training app to reinvigorate a lazy arm, and I had a similar comment from an Alexander Technique teacher. I'm interested in exploring this further and looking into integrating sensory feedback or linking the prosthetic to another device.

Self evaluation

Overall I'm really happy with the prosthetic. It fitted most body sizes, with a few exceptions. I would like to make a few adaptations but am otherwise very happy with its look and feel. If I had had more time, I would have integrated a small vibration motor in the shoulder to add sensory feedback when the user reloaded the images or pulled in a particular image. Next time I would definitely opt for a more controlled lighting set-up with a bigger interaction space, potentially even a huge monitor, and a wireless prosthetic. Despite these constraints, I was surprised how many users enjoyed playing with the images, ignoring the obvious glitchiness of the face tracking.

References

Wendy Hui Kyong Chun, Updating to Remain the Same

Donna Haraway, The Companion Species Manifesto: Dogs, People, and Significant Otherness

Rebecca Horn, Body Art, Performance and Installations

Stelarc, Third Hand

Wellcome Collection, Prosthetics

Ling Tan, Reality Mediators

Adafruit, Getting Started with the Flora

Kobakant, Hard/Soft Connections, http://www.kobakant.at/DIY/?p=1272

Vanderlin, ofxBox2d: Box2D wrapper for openFrameworks, https://github.com/vanderlin/ofxBox2d

Box2D manual, http://box2d.org/manual.pdf

Solarised filter applied to the camera feed (OpenGL glTexImage2D reference), https://www.khronos.org/registry/OpenGL-Refpages/gl2.1/xhtml/glTexImage2D.xml