Sing a Picture

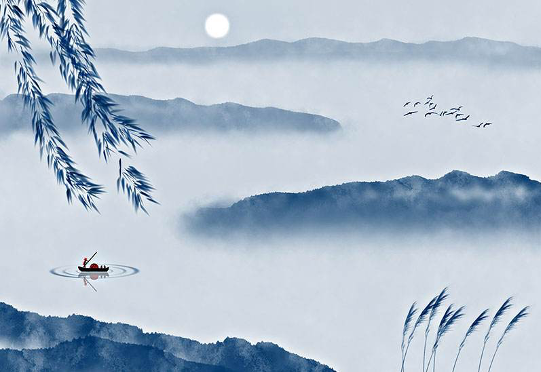

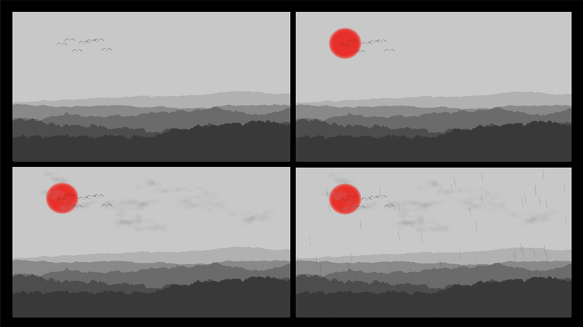

“Sing a Picture” is an interactive work in which the audience controls a set of instruments and a dynamic painting. What happens when a Chinese-style painting of distant mountains collides with a very different kind of sound: dynamic Western band music?

Produced by: Junqiao Luo

Introduction

The work can be divided into three parts: audio, visualization and interaction. It is built mainly in openFrameworks and uses the audio files of five instruments from one song. The painting contains five corresponding elements, each of which is driven by one of the sounds. Users interact with the work through the keyboard or a Leap Motion controller, switching each instrument on or off. This shows the charm of the music and, at the same time, turns what the instruments "sing" into a painting. The piece demonstrates how a band plays as an ensemble and lets everyone experience that charm firsthand.

Concept and background research

Interestingly, the initial idea came to me while washing dishes a few months ago. When the sounds of chopsticks, dishes, knives and running water mixed together, the effect was amazing, even though each was a simple everyday sound. Then I came across a cappella, which uses different human voices to sing a song, and then the band, which needs different instruments. To make sure the final result would sound good, band audio was the best choice, because the instruments in a song share a common rhythm. On their own the instruments may sound unrelated, but when they play together the effect can be very diverse. The idea of building such a work and visualizing it appealed to me; it could be very cool.

As for the Chinese-style painting, I grew up in a Chinese cultural background, and I consider it one of the representative styles of Eastern culture. Chinese ink painting should hold aesthetic value for everyone. Music and painting are art forms with almost no barrier to appreciation: no matter where you come from, and regardless of age or gender, they can strike a chord. Therefore, after studying many classic paintings and the characteristics of ink painting, I chose landscape painting as the visual subject.

After studying similar past examples, I found that multi-audio input and Chinese-style visuals were poorly covered in the openFrameworks environment. I referred to and tried a number of methods, such as several addons and similar Processing examples. After overcoming many difficulties, the overall style of the finished work basically matches my expectations. Through this work, I achieved a breakthrough in multi-channel audio input processing and realistic image rendering in openFrameworks.

Technical


Hardware: Leap Motion

Software: openFrameworks, Wekinator, GarageBand

My work has three main parts: audio, painting and interaction. For the audio part, I used five audio files, one per instrument. Each sounds like a separate melody on its own, but combined they produce an amazing effect. The painting part has five elements: mountains, birds, sun, cloud and rain. Each of these elements is mapped to one of the five audio tracks, and this mapping is the core of the interaction.
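The mapping can be pictured as a simple lookup table. The C++ below is a minimal sketch rather than code from the project; the enum names and, in particular, the instrument-to-element pairing are hypothetical, because the write-up does not say which instrument drives which element.

```cpp
#include <array>
#include <cassert>
#include <cstddef>

// The five stems listed in the text; Count is a sentinel used to size arrays.
enum class Instrument { Guitar, Claps, Bass, Drums, Ukulele, Count };

// The five painting elements listed in the text.
enum class Element { Mountains, Birds, Sun, Cloud, Rain };

constexpr std::size_t kNumInstruments = static_cast<std::size_t>(Instrument::Count);

// HYPOTHETICAL pairing: the write-up does not say which stem drives which element.
constexpr std::array<Element, kNumInstruments> kElementFor = {
    Element::Birds,      // Guitar
    Element::Rain,       // Claps
    Element::Mountains,  // Bass
    Element::Sun,        // Drums
    Element::Cloud,      // Ukulele
};

// Looks up which painting element a given stem's spectrum should drive.
Element elementFor(Instrument instrument) {
    assert(instrument != Instrument::Count);
    return kElementFor[static_cast<std::size_t>(instrument)];
}
```

Keeping the pairing in one table means a different instrument-to-element assignment only requires editing five lines.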

First, I edited the selected guitar, claps, bass, drums and ukulele audio files in GarageBand and exported them with the same length and rhythm so that they match one another.
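Lining the stems up comes down to simple arithmetic: at a tempo of T beats per minute, one bar of b beats lasts b × 60 / T seconds, so every exported stem should contain the same number of samples and a whole number of bars. The sketch below checks this; the tempo, time signature and 44.1 kHz sample rate used in the example are assumptions, since the text does not state them.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Length of one bar in samples: beatsPerBar * 60 / bpm seconds, times the sample rate.
std::size_t samplesPerBar(double bpm, int beatsPerBar, int sampleRate) {
    assert(bpm > 0.0 && beatsPerBar > 0 && sampleRate > 0);
    const double seconds = beatsPerBar * 60.0 / bpm;
    return static_cast<std::size_t>(seconds * sampleRate + 0.5);  // round to nearest sample
}

// True if every stem has the same sample count and that count is a whole number of bars,
// so the stems stay in step when they are started together or looped.
bool stemsAligned(const std::vector<std::size_t>& stemLengths, std::size_t barLength) {
    assert(barLength > 0);
    if (stemLengths.empty()) return true;
    const std::size_t first = stemLengths.front();
    if (first % barLength != 0) return false;
    for (std::size_t len : stemLengths) {
        if (len != first) return false;
    }
    return true;
}
```

For example, at an assumed 120 BPM in 4/4 and 44.1 kHz, one bar is 4 × 60 / 120 = 2 s, or 88,200 samples, so five two-bar stems should each be exactly 176,400 samples long.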

Then, using the ofxSoundObjects addon, I obtained the spectrum of each of the five input sounds. This differs from the built-in ofSoundGetSpectrum() function: ofxSoundObjects can produce a separate spectrum for each audio file, whereas ofSoundGetSpectrum() only returns a single spectrum of all the audio playing at once. This was also the most difficult problem in my work. I created five player objects to store the spectra, so that the full spectrum of each file could be shown separately. In this way, the full spectra of the five audio files and one real-time spectrum could be displayed at the same time. However, the real-time spectrum had another problem: it only reflected the first audio file played and ignored the others.
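Why separate analysis matters can be shown without openFrameworks. The sketch below computes one magnitude spectrum per stem with a naive discrete Fourier transform; it stands in for the FFT analysis an addon such as ofxSoundObjects performs and does not reproduce that addon's API. Analysing each stem's buffer on its own keeps the instruments apart, whereas a single spectrum of the mixed output blends them together.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Magnitude spectrum of one mono buffer using a naive O(n^2) DFT.
// Bin k corresponds to frequency k * sampleRate / n; magnitudes are divided by n.
std::vector<float> magnitudeSpectrum(const std::vector<float>& samples, std::size_t numBins) {
    const std::size_t n = samples.size();
    assert(n > 0 && numBins <= n / 2 + 1);
    const double twoPi = 6.283185307179586;
    std::vector<float> mags(numBins, 0.0f);
    for (std::size_t k = 0; k < numBins; ++k) {
        double re = 0.0;
        double im = 0.0;
        for (std::size_t t = 0; t < n; ++t) {
            const double angle = twoPi * static_cast<double>(k * t) / static_cast<double>(n);
            re += samples[t] * std::cos(angle);
            im -= samples[t] * std::sin(angle);
        }
        mags[k] = static_cast<float>(std::sqrt(re * re + im * im) / static_cast<double>(n));
    }
    return mags;
}

// One spectrum per stem: each buffer is analysed on its own, so a loud drum stem
// cannot mask the ukulele's bins the way it would in a spectrum of the summed mix.
std::vector<std::vector<float>> perTrackSpectra(const std::vector<std::vector<float>>& stems,
                                                std::size_t numBins) {
    std::vector<std::vector<float>> spectra;
    spectra.reserve(stems.size());
    for (const auto& stem : stems) {
        spectra.push_back(magnitudeSpectrum(stem, numBins));
    }
    return spectra;
}
```

A real application would use an FFT instead of this quadratic loop; the naive version is used only because it fits in a few lines and needs no library.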

Since there is no suitable function for obtaining the real-time spectrum of each audio file, I created a buffer: in each frame the values are calculated and stored in the buffer, and the buffer is cleared again every frame. In this way, the real-time data of a given audio file can be passed on to the picture, which creates the interaction.
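A per-frame buffer of this kind might look like the minimal sketch below: the audio side appends one stem's samples as they are played, and once per video frame the drawing code reads a level and clears the buffer. The class name is hypothetical, and using RMS as the computed value is an assumption, since the text does not say which value is calculated. Because an openFrameworks audio callback runs on a different thread from draw(), the sketch guards the buffer with a mutex.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <mutex>
#include <vector>

// Collects one stem's samples between two video frames, then reports a level and resets.
// The audio thread calls push(); the drawing code calls takeFrameLevel() once per frame.
class FrameLevelBuffer {
public:
    void push(const float* samples, std::size_t count) {
        assert(samples != nullptr || count == 0);
        std::lock_guard<std::mutex> lock(mutex_);
        buffer_.insert(buffer_.end(), samples, samples + count);
    }

    // RMS of everything received since the previous call; the buffer is then cleared.
    float takeFrameLevel() {
        std::lock_guard<std::mutex> lock(mutex_);
        if (buffer_.empty()) return 0.0f;
        double sumSquares = 0.0;
        for (float s : buffer_) sumSquares += static_cast<double>(s) * s;
        const double rms = std::sqrt(sumSquares / static_cast<double>(buffer_.size()));
        buffer_.clear();
        return static_cast<float>(rms);
    }

private:
    std::mutex mutex_;
    std::vector<float> buffer_;
};
```

One buffer per stem (five in total) would give each painting element its own per-frame level.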

Another serious problem was latency. No matter how I started the audio and the painting together or set the frame rate, a delay between the sound and the current spectrum seemed inevitable. After thinking about it for a few days, I concluded that it was probably caused by the time the program itself needs to run and by the frame rate not being fully controllable. Through many calculations and tests based on the timeline and the frame count, I eventually arrived at the most accurate formula I could find.
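The formula itself is not given in the text, so the following is only a hypothetical alternative: derive the analysis frame to display from the audio playback position rather than from a count of drawn frames, and subtract a fixed output-latency offset. Because the index depends on elapsed audio time, a slow or dropped video frame does not accumulate drift. The hop size and latency values are assumptions that would still need to be measured on each machine.

```cpp
#include <cassert>
#include <cstddef>

// Chooses the precomputed analysis frame that matches what is currently audible.
// playbackSeconds: position reported by the audio player.
// hopSeconds: spacing between precomputed analysis frames (assumed constant).
// latencySeconds: HYPOTHETICAL output-latency offset, to be measured per machine.
std::size_t analysisFrameAt(double playbackSeconds, double hopSeconds,
                            double latencySeconds, std::size_t numFrames) {
    assert(hopSeconds > 0.0 && numFrames > 0);
    const double heardSeconds = playbackSeconds - latencySeconds;  // audio lags the player position
    if (heardSeconds <= 0.0) return 0;
    const double frame = heardSeconds / hopSeconds;
    if (frame >= static_cast<double>(numFrames - 1)) return numFrames - 1;  // clamp at the end
    return static_cast<std::size_t>(frame);  // truncate to the frame that has started
}
```

With this approach, only the latency offset is machine-specific, which is a single value to calibrate rather than a whole formula.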

To make my Chinese-style painting look better, I made many attempts. Finally, inspired by a Processing sketch, I used various random calculations and drawing functions so that each frame is a unique painting. It is made up of five elements (mountains, sun, birds, cloud and rain), each mapped to one of the five audio tracks.
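As an illustration of such a random drawing calculation, the sketch below generates a mountain ridge with midpoint displacement, a common procedural-terrain technique. It is a substitute chosen for this example, not necessarily what the referenced Processing sketches do. Seeding the generator differently each frame gives a new ridge every frame, and scaling `amplitude` by the mountains' audio level (a hypothetical coupling) would tie the shape to the music.

```cpp
#include <cassert>
#include <cstddef>
#include <random>
#include <vector>

// Midpoint displacement: returns 2^levels + 1 ridge heights. Both endpoints stay at
// baseHeight; each midpoint is the average of its neighbours plus a random offset, and
// each finer level's offset range is multiplied by roughness (0.5 halves it).
std::vector<float> mountainRidge(int levels, float baseHeight, float amplitude,
                                 float roughness, unsigned seed) {
    assert(levels >= 0 && levels < 20);
    const std::size_t n = (std::size_t{1} << levels) + 1;
    std::vector<float> h(n, baseHeight);
    std::mt19937 rng(seed);
    std::uniform_real_distribution<float> dist(-1.0f, 1.0f);
    float range = amplitude;
    for (std::size_t step = n - 1; step > 1; step /= 2) {
        for (std::size_t i = step / 2; i < n; i += step) {
            h[i] = 0.5f * (h[i - step / 2] + h[i + step / 2]) + range * dist(rng);
        }
        range *= roughness;
    }
    return h;
}
```

The returned heights can be joined into a filled polygon; drawing several ridges with decreasing contrast is one way to suggest the layered distance of an ink landscape.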

Interaction is achieved through the Leap Motion and the keyboard. After training with machine learning, the Leap Motion input can tell which gesture corresponds to which command. Keyboard interaction is more intuitive and accurate: the keys “q”, “w”, “e” and “r” toggle whether the corresponding sound is added. As a result, users can interact with the work, hear different musical effects from different combinations of instruments, and watch a dynamic Chinese-style painting.
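Both input paths can end in the same toggle logic. In the minimal sketch below, the four keys named above each select a stem, and a gesture classifier's output label (for example, a class number produced by a tool such as Wekinator) is mapped to a stem by a hypothetical 1-to-5 rule. The fifth stem's key is not named in the text, so it is left unmapped. All names are illustrative, and the assumption that every stem starts muted is labelled in the code.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <optional>

constexpr std::size_t kNumStems = 5;

// Tracks which stems are currently audible; keyboard and gesture input both call toggle().
class StemMixer {
public:
    // Keys named in the text. The fifth stem's key is not given, so it is unmapped here.
    static std::optional<std::size_t> stemForKey(char key) {
        switch (key) {
            case 'q': return 0;
            case 'w': return 1;
            case 'e': return 2;
            case 'r': return 3;
            default:  return std::nullopt;
        }
    }

    // HYPOTHETICAL: classifier labels 1..5 map to stems 0..4.
    static std::optional<std::size_t> stemForGestureClass(int label) {
        if (label >= 1 && label <= static_cast<int>(kNumStems)) {
            return static_cast<std::size_t>(label - 1);
        }
        return std::nullopt;
    }

    void toggle(std::size_t stem) {
        assert(stem < kNumStems);
        audible_[stem] = !audible_[stem];
    }

    bool isAudible(std::size_t stem) const { return audible_.at(stem); }

    // Volume to hand to the stem's player: muted stems keep playing at zero volume.
    float volumeFor(std::size_t stem) const { return isAudible(stem) ? 1.0f : 0.0f; }

private:
    std::array<bool, kNumStems> audible_{};  // ASSUMPTION: all stems start muted
};
```

Muting a stem by setting its volume to zero, rather than stopping its player, is one way to keep all five stems aligned when they are switched back on.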

Future development

Several things could make the work better. First, I would like to enhance the interaction. Although the current interaction is simple and direct, full-body interaction would be cooler in an exhibition. Second, a 3D version could give a better visualization, and more visual variety would improve the experience. Finally, I could add other styles of audio, or even a cappella, to make it more colorful.

Self-evaluation

Overall, I have roughly completed the intended design, including the band music and the dynamic painting. Although audio-visual work was completely new to me, the development process taught me to work through many problems, such as how to handle multiple audio inputs, how to draw a realistic image in openFrameworks, and how to work with the real-time spectrum. There are still some weaknesses, however. My way of calculating the real-time spectrum is inflexible: if the computer changes, the formula may no longer work. It is also time-consuming and not perfectly accurate. I believe there should be a more general way to solve this problem.

References

- https://github.com/roymacdonald/ofxSoundObjects

- https://www.gamedev.net/tutorials/_/technical/game-programming/simple-clouds-part-1-r2085/

- https://www.openprocessing.org/sketch/941956

- https://www.openprocessing.org/sketch/188947