rewind 2.0

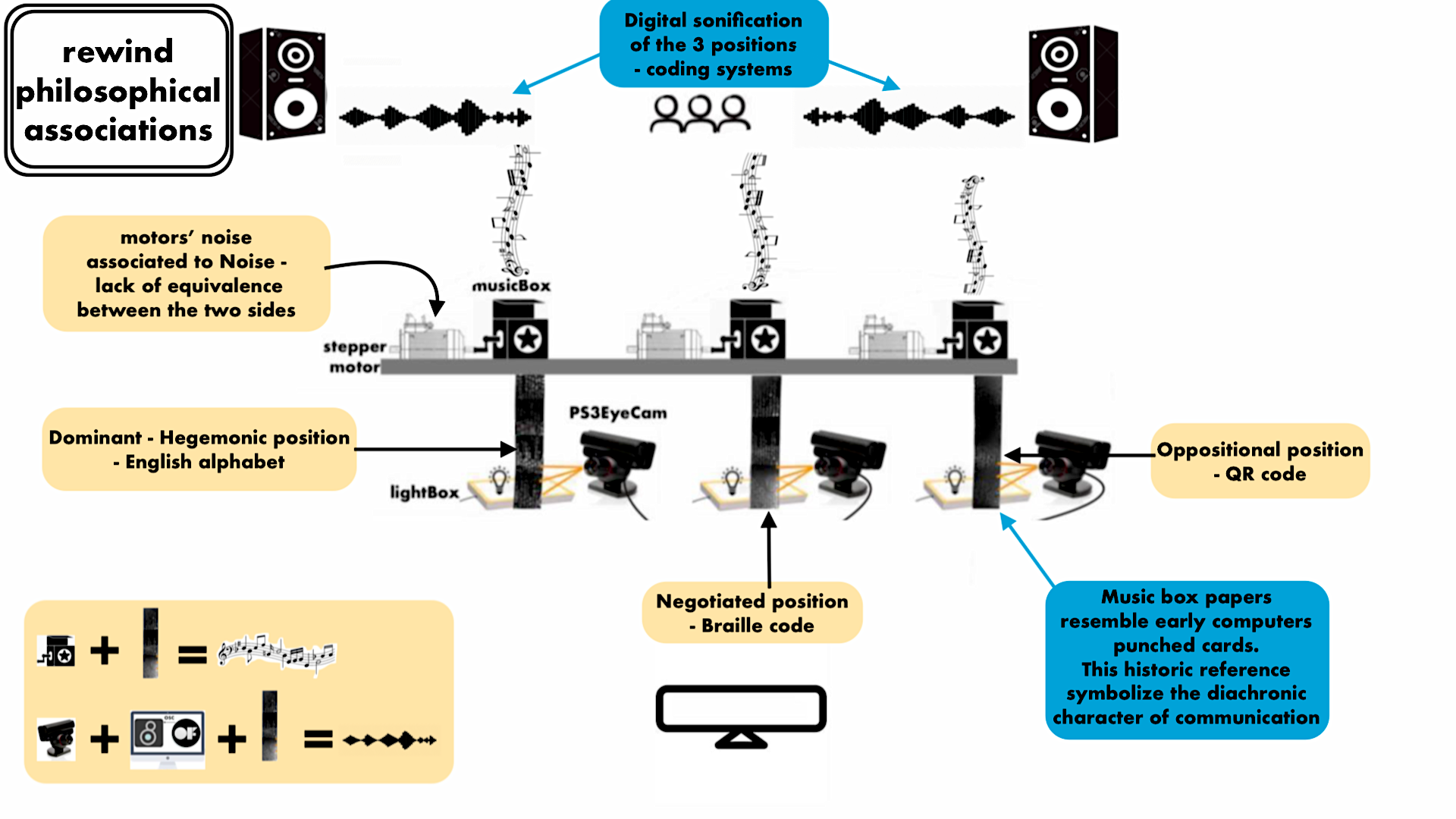

rewind 2.0 is a sound-weaving, digital-to-analog-to-digital sequencer. Re-imagining Stuart Hall’s encoding/decoding model of communication for the present, it metaphorically questions meaning, subjectivity, chance and introspection, and underlines the symbiotic relationship between man and machine.

produced by: Petros Velousis

Introduction

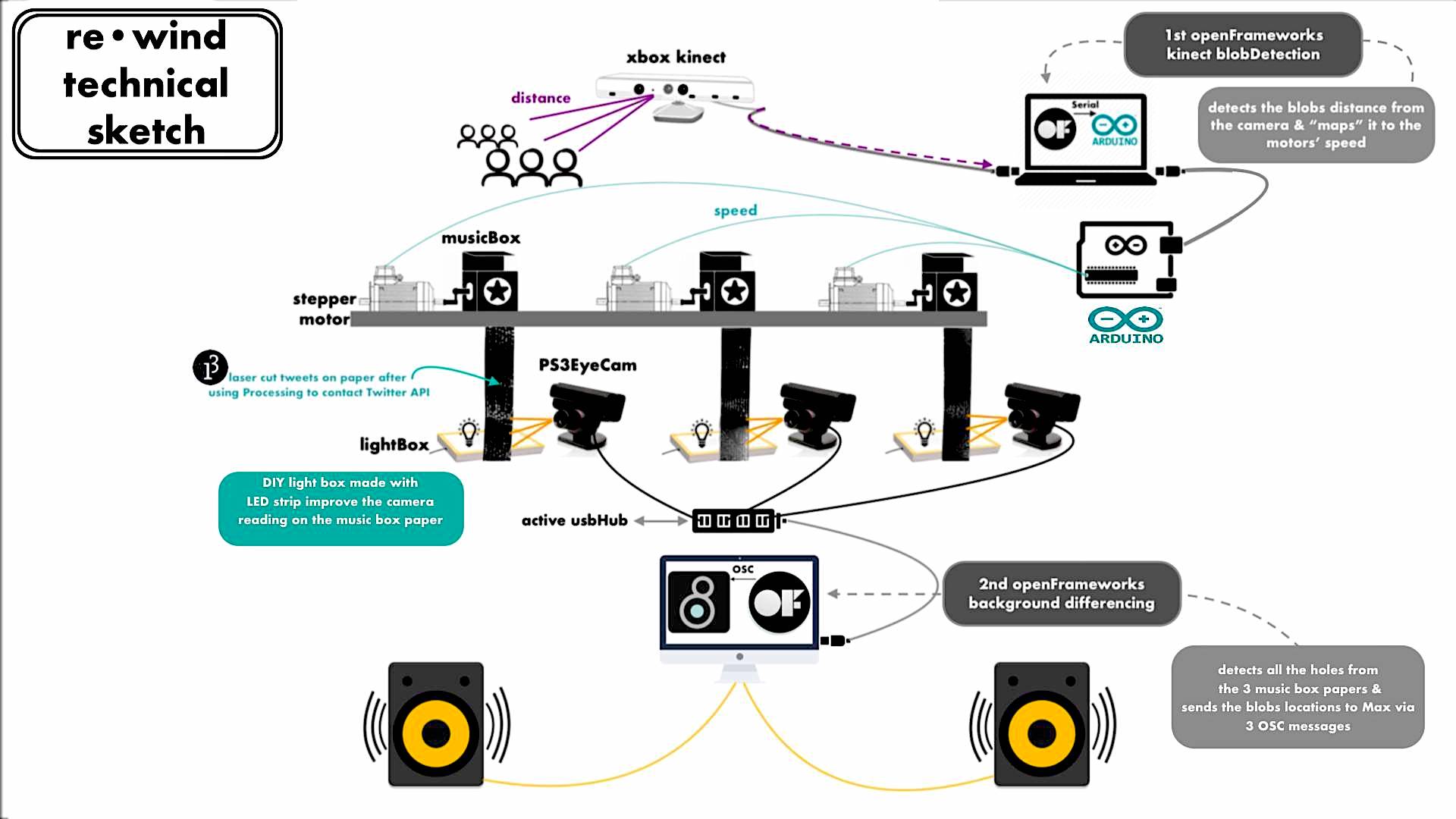

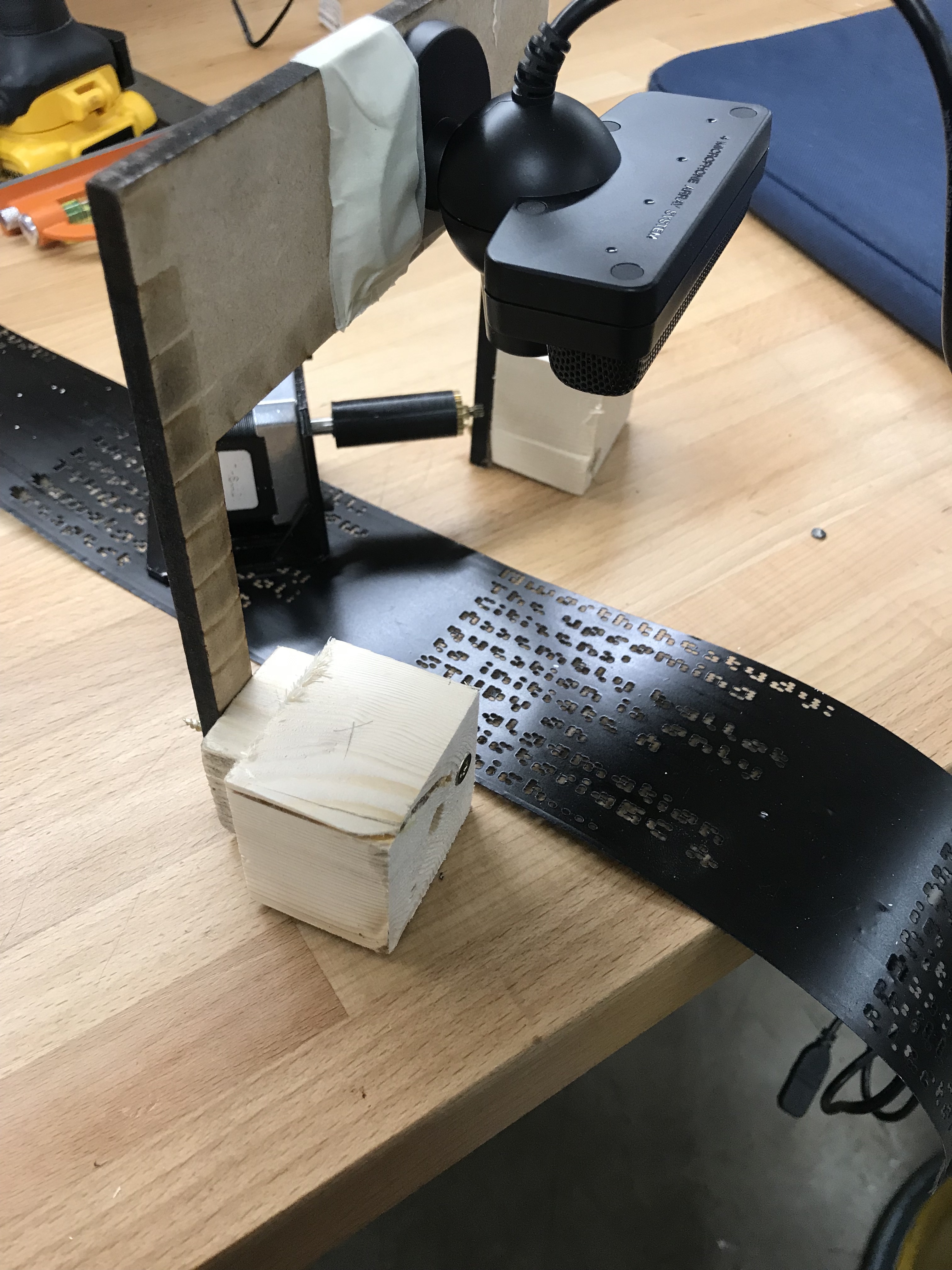

An algorithm fetches tweets with the hashtag #amalgamation from the Twitter API and draws each one in the English alphabet, Braille and QR codes. The three interpretations, which hold the same encoded messages, are laser cut as holes into three card paper rolls. As the perforated papers pass through the three music boxes, their holes trigger musical notes; as they exit, three PS3 cameras scan and detect the holes. The scanning result is sent to Max MSP, where each paper is individually and digitally sonified. Thus, the encoded messages become the common musical score for both the analog and the digital machine, resulting in a musical dialogue between the two.

Decoded in succession, the message is conveyed through music, the universal language that attempts to unify the means in use. The viewer is placed at the centre of a semantic chaos that evokes the confusion of the Tower of Babel. rewind 2.0 draws attention to the freedom of listening, which incorporates the capacity and imaginary of physical resistances. By listening to oneself, one can deepen one's conscience and consciousness and, in turn, listen to others. Moreover, the viewer interacts through movement, affecting the speed of the motors that crank the music boxes and thereby contributing to the unification of the decoding process. The outcome is a musical composition that will never be performed the same way twice.

Concept and background research

Artistic inspiration

As a music composer I have used music boxes, along with other toy instruments, to a great extent and in quite “conventional” ways. At the beginning of my computational arts practice, I was lucky enough to encounter Quadrature’s “Twelve”,

https://gizmodo.com/a-twelve-track-sequencer-that-plays-analogue-music-boxe-1548739406

an artwork of 12 motor-driven, fixed-melody small music boxes, where the user can individually switch them on or off, as well as control their speed, in order to produce random and interesting melodies. This work became a great influence on me, mostly technically, showing me new and inspiring ways in which I could employ music boxes in my new practice.

Moreover, I was inspired by Kathy Hinde’s “Music Box Migrations”,

http://kathyhinde.co.uk/music-box-migration/

in the way, and the extent to which, she associates the medium with philosophical concepts.

Theoretical background

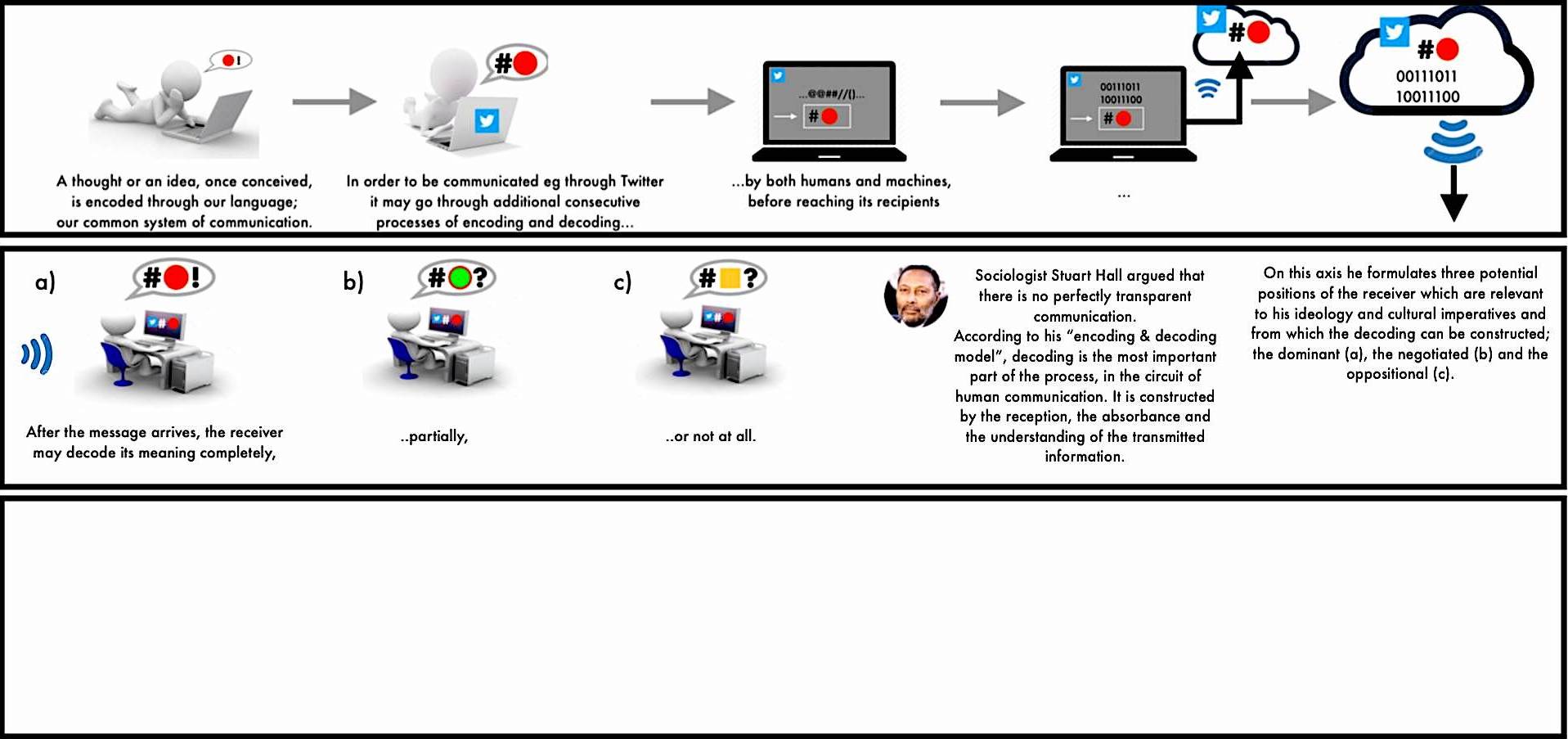

Communication is an integral part of almost every moment of our everyday lives. Whether it is interpersonal, inner/esoteric or, in our times, even between machines, it helps us understand each other as well as ourselves. It consists of a source and a target, a sender and a receiver, a person who encodes a message and a person who decodes it, together with a common language or coding system that makes the communication feasible.

On the other hand, not all people who speak the same language perceive or interpret things in the same way, because not all people share the same cultural and ideological imperatives. Moreover, each language comprises its own connotations, figures of speech, metaphors, idioms etc. These parameters make it clear that communication is a remarkably subjective field.

Sociologist Stuart Hall deals with this subject in extenso in his 1973 theory, the “encoding and decoding model of communication”, which is the main conceptual foundation of ‘rewind 2.0’.

In the following lines I will attempt to highlight concisely the main points of Hall’s theory. The detailed version, with all of the theory’s associations to my work, is given in my theory module report: https://compartsblog.doc.gold.ac.uk/index.php/work/re-thinking-re-wind/

Hall argues that meaning is not statically determined by the encoder/producer and that there is no ‘perfectly equivalent circuit’ or ‘perfectly transparent communication’, pointing out the subjectivity that underlies communication. Furthermore, he notes that noise, distortions or misunderstandings ‘arise precisely from the lack of equivalence between the two sides’. Hall believed that decoding is the most important part of the communicative exchange and that it is constructed through the reception, the absorption and the understanding of the transmitted information.

On this axis he formulates three potential positions of the receiver from which the decoding can be constructed: the dominant-hegemonic, the negotiated and the oppositional.

In the dominant-hegemonic position, the encoder succeeds in conveying his/her message to the receiver fully and directly, and the receiver decodes it exactly the way it was encoded.

This position was associated with the English alphabet tweets. The international popularity of this encoding system leaves little room for misinterpretation and it is therefore used symbolically, with the intention that the audience acknowledges its legitimacy to encode a message that has already been signified.

In the negotiated position, there is a mixture of acceptance and rejection from the decoder's point of view, but he/she nevertheless adequately understands the dominant definition and the signification of the message. While understanding the meaning, his/her opinions differ to a certain extent, and he/she retains his/her own interpretations and negotiated versions in local, situational conditions.

This position was associated with the Braille code tweets. Braille was developed as the medium through which blind people interpret the visual. Lacking vision, they depend on their other senses, specifically touch, to receive the visual aspect of the broadcast message. This adds another layer to the message and renders the communication mediated. In turn, the fact that the message is re-formed and produced through this mediated form of communication can create connotations, in the sense of less fixed, associative meanings. In addition, the encoding system itself corresponds to a specific group within the audience that is able to understand the initial message's denotation. These aspects can stimulate certain levels of partially controlled misinterpretation, intended to create noise in the decoding: more changeable and conventionalized meanings, interpreted through diverse receptions that clearly vary from instance to instance. This renders the Braille code the negotiated position, provoking a mixture of adaptive and oppositional elements.

In the oppositional position, the receiver decodes the message in a globally contrary way. Although understanding both the literal and the connotative aspects of the message, he/she chooses to completely reject it, detotalize it and reproduce it within his/her own alternative referential framework. This can be because the decoder dissociates from the view, because it does not reflect commonly accepted views of society, or simply because he/she does not hold the same view.

This position was associated with the QR code tweets. A QR code is a type of matrix barcode, essentially a machine-readable optical label. In practice, QR codes often contain data for a locator, identifier or tracker that points to a website or application. In this regard, QR codes can be characterized as visual representations of the 'language of the machine', relating both the process of storing information in a digital space and the symmetrical image of black and white dots to the 0 and 1 of binary code. The use of the QR code is therefore connected to the oppositional position, as human beings are incapable of interpreting the message without the intervention of technology.

Since the publication of the encoding/decoding theory in 1973, the way we communicate has changed. With the rise of social media, technology and computers have become mediators in social intercourse, presenting and emphasizing the synergetic relationship between man and machine. Whereas in the natural form of communication the meaning of the message is encoded by the sender and decoded by the receiver, there is now a significant extension of the encoding and decoding chain. The initial, naturally encoded message is decoded by the computer once it is typed and then undergoes additional digital encryptions and decryptions along the encoding and decoding routes. It “travels” from the computer to the cloud server and then to the recipient’s computer, before he/she reads it and tries to understand/decode its meaning.

Among my intentions was to create a circuit of successive and diverse encodings in order to point out, in an exaggerated manner, communication’s complexity and subjectivity. Additionally, I wanted to highlight the long encoding/decoding “chain” of the message in the man-machine era, together with the parallel fragmentation of the actual meaning and, at the same time, its amalgamation through its musical decoding.

Technical

Processing

The two submitted Processing programs were used to contact the Twitter API, get 15 tweets under a certain hashtag and store them as PDF files. The first (Twitter_API_with_textBox.pde) was used for saving the tweets in the English alphabet and in Braille, using two fonts made up of dots. The saved PDF files were then used in Illustrator in order to be laser cut on the music box card papers.

The second (Twitter_API___QRCode.pde) is based on the first one, modified to create a QR code for every tweet it gets. In other words, it embeds each tweet in a newly generated QR code. Neither program is meant to run during the installation.
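To illustrate how a tweet's text can become a pattern of holes, here is a minimal Python sketch that converts letters into Braille dot positions on a grid. It is not the Processing code used for the project, which relied on dot-based fonts. The function names, the `cell_pitch` spacing and the choice to skip characters outside a-z are assumptions made for this example.

```python
# Dot numbers (1-6) for the first ten letters of the Braille alphabet.
BASE = {
    "a": (1,), "b": (1, 2), "c": (1, 4), "d": (1, 4, 5), "e": (1, 5),
    "f": (1, 2, 4), "g": (1, 2, 4, 5), "h": (1, 2, 5), "i": (2, 4),
    "j": (2, 4, 5),
}


def build_braille_table():
    """Derive the full a-z table from the first ten letters."""
    table = dict(BASE)
    # k-t repeat a-j with dot 3 added.
    for src, dst in zip("abcdefghij", "klmnopqrst"):
        table[dst] = BASE[src] + (3,)
    # u, v, x, y, z repeat a-e with dots 3 and 6 added.
    for src, dst in zip("abcde", "uvxyz"):
        table[dst] = BASE[src] + (3, 6)
    # w does not follow the pattern.
    table["w"] = (2, 4, 5, 6)
    return table


BRAILLE = build_braille_table()

# (column, row) of each dot inside one 2x3 Braille cell.
DOT_POSITION = {1: (0, 0), 2: (0, 1), 3: (0, 2),
                4: (1, 0), 5: (1, 1), 6: (1, 2)}


def braille_holes(text, cell_pitch=3):
    """Return (x, y) grid positions of the holes for `text`.

    cell_pitch is a hypothetical spacing: each character occupies two
    dot columns plus one empty column. Characters outside a-z (spaces,
    digits, punctuation) leave an empty cell in this sketch.
    """
    holes = []
    for index, char in enumerate(text.lower()):
        for dot in BRAILLE.get(char, ()):
            col, row = DOT_POSITION[dot]
            holes.append((index * cell_pitch + col, row))
    return holes


print(braille_holes("ab"))
```

The resulting coordinates could then be scaled to the paper's dimensions before being exported for laser cutting.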

openFrameworks

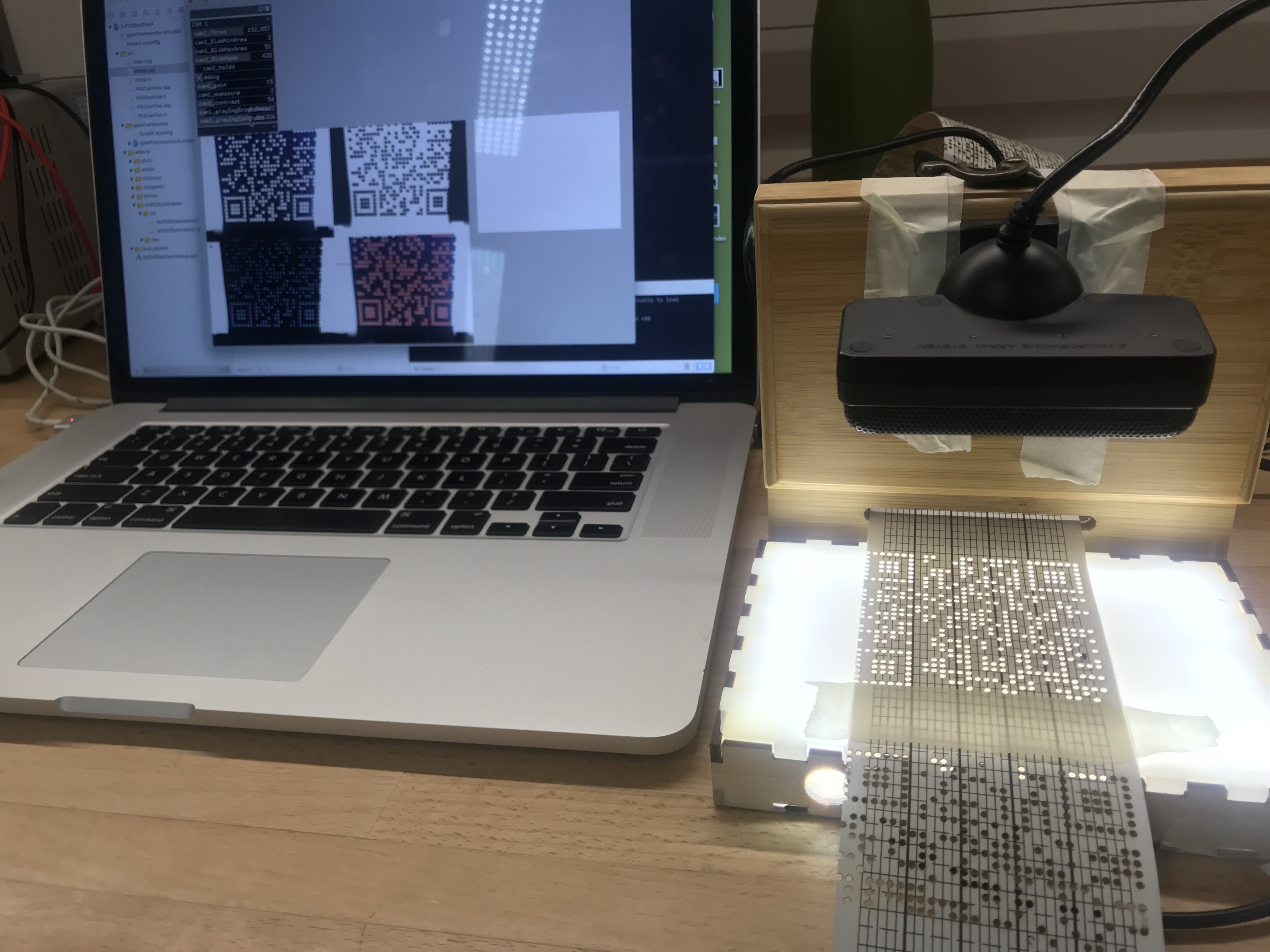

There are three projects in total. The first one (QR_Convert_To_Circles) was used offline to turn the square pixels of each QR code into circles. After manually loading each QR code PNG file and looping through the image, I replaced each rectangle with a circle: I coloured the rectangles white so that they could not be seen and, using the “myRect.getCenter()” command, drew a black circle on top of every rectangle's center. I then saved the results as PDF files to be used in Illustrator for laser cutting the card papers with the “circled” QR codes.
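The square-to-circle substitution can be sketched in a few lines of Python. This is an illustration rather than the openFrameworks project itself: the text-based module matrix, the `module_size` and the `fill` ratio are assumptions chosen for the example.

```python
def modules_to_circles(matrix, module_size=10.0, fill=0.8):
    """Turn the dark modules of a QR-like matrix into circles.

    matrix      -- list of strings, '#' marks a dark module (hypothetical format)
    module_size -- side length of one square module, in drawing units
    fill        -- circle diameter as a fraction of the module size
    Returns a list of (center_x, center_y, radius) tuples.
    """
    radius = module_size * fill / 2
    circles = []
    for row, line in enumerate(matrix):
        for col, cell in enumerate(line):
            if cell == "#":
                # Same idea as taking the center of each rectangle.
                center_x = (col + 0.5) * module_size
                center_y = (row + 0.5) * module_size
                circles.append((center_x, center_y, radius))
    return circles


sample = [
    "#.#",
    ".#.",
    "#.#",
]
for circle in modules_to_circles(sample):
    print(circle)
```

Keeping `fill` below 1.0 leaves a bridge of paper between neighbouring holes, which matters once the pattern is cut rather than printed.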

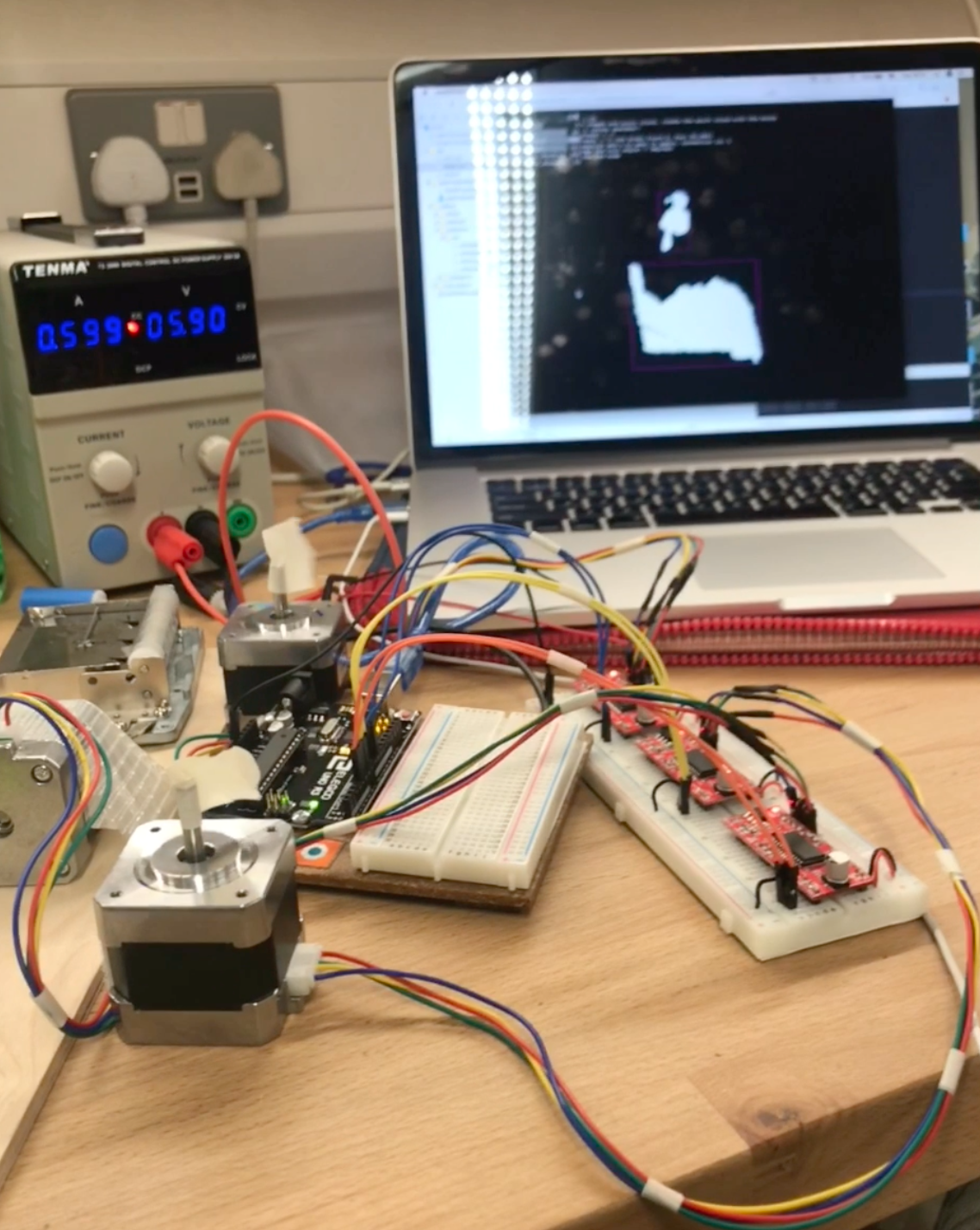

The second openFrameworks file (motors testing with Kinect_V) was used during the installation to run a Kinect grid lab example, look for “human”-size blobs, track the found blob’s depth distance (the viewer’s proximity to the camera) and map it to the speed of the motors. Since the motors were run from an Arduino, the ofxMotors addon made the serial communication between Arduino and openFrameworks easier.
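The depth-to-speed mapping can be sketched as a clamped linear map, similar in spirit to openFrameworks' `ofMap` with clamping enabled. The depth range, the speed range and the choice that a closer viewer means a faster motor are all assumptions made for this Python illustration, not values taken from the installation.

```python
def map_clamped(value, in_min, in_max, out_min, out_max):
    """Linearly map value from the input range to the output range,
    clamping to the output range when value falls outside the input."""
    if in_min == in_max:
        raise ValueError("input range must not be empty")
    t = (value - in_min) / (in_max - in_min)
    t = max(0.0, min(1.0, t))
    return out_min + t * (out_max - out_min)


def depth_to_speed(depth_mm, near_mm=500, far_mm=4000, slow=100, fast=1000):
    """Map a viewer's distance to a motor speed in steps per second.

    All four range values are hypothetical. A viewer at near_mm gives
    the fast speed, a viewer at far_mm (or beyond) gives the slow one.
    """
    return map_clamped(depth_mm, near_mm, far_mm, fast, slow)


for depth in (300, 500, 2250, 4000, 6000):
    print(depth, depth_to_speed(depth))
```

Clamping keeps the motors inside a safe speed range even when the depth reading jumps outside the expected distances.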

The third openFrameworks file (3xPS3BlobTrack) was used during the installation to run the 3 PS3Eye cameras that scanned the music box papers and detected their holes. It was based on the background differencing example, and the code for each camera was placed in a separate class. In each class I used pointers to call some of the ofxPS3EyeGrabber addon’s functions (setGain(), setContrast(), setExposure(), setAutoGain(), setAutoWhiteBalance()) as well as ofxCv’s grayImage.brightnessContrast(brightness, contrast) function, which helped me fine tune the camera image and achieve better blob tracking results. All of these functions were exposed in the ofxGui so that they were easily controllable when I wanted to re-tune the images under different light conditions. Another modification to the background differencing example code was to load a “pre-shot” picture of the surface where the camera scanning would take place (a picture of the surface without the papers) and use that for the differencing. Finally, every tracked blob that fell within the boundaries of the red slit line I drew on top of the video would send its ‘y’ location to Max MSP via OSC (3 OSC messages). Organising the code in separate classes made it neat and easy to navigate, with ofApp.cpp used only for calling the classes’ functions and the ofxGui.
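As a rough illustration of the last step, the Python sketch below keeps only the blobs whose centroid lies inside a vertical slit and packs each 'y' value into an OSC message with a single float argument. It does not reproduce the openFrameworks code: the slit boundaries, the blob list and the OSC address `/paper/1/y` are hypothetical, and actually sending the bytes over UDP is left out.

```python
import struct


def blobs_on_slit(centroids, slit_left, slit_right):
    """Return the y positions of blobs whose x lies inside the slit."""
    return [y for (x, y) in centroids if slit_left <= x <= slit_right]


def osc_string(text):
    """Encode an OSC string: ASCII bytes, null terminated,
    padded with nulls to a multiple of four bytes."""
    data = text.encode("ascii")
    return data + b"\x00" * (4 - len(data) % 4)


def osc_float_message(address, value):
    """Build an OSC message carrying one 32-bit big-endian float."""
    return osc_string(address) + osc_string(",f") + struct.pack(">f", value)


# Hypothetical blob centroids (x, y) from one camera frame.
centroids = [(10, 5), (50, 20), (52, 80), (90, 40)]

for y in blobs_on_slit(centroids, slit_left=48, slit_right=54):
    message = osc_float_message("/paper/1/y", float(y))
    print(y, message)
```

Using one address per camera would let the receiving patch route the three papers independently.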

Max MSP

In Max MSP (ReWind Blob Sound.maxpat) I received the 3 OSC messages and, for each one, filtered the values to avoid overloading, as there were some hundreds of blobs tracked in each tweet. Then I mapped the range of the incoming values to the range of MIDI note numbers I wanted to use and routed the signal to 3 envelopes that switched between each other at time intervals that I could control and tune. Finally, the signal was routed to 4 oscillators, giving me the choice to use whichever combination I wanted.
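The mapping from a blob's 'y' location to a pitch can be sketched as follows. This Python example only illustrates the arithmetic, not the Max patch: the image height of 480 pixels and the note range 48 to 84 are assumed values, and the frequency conversion is the standard equal-temperament formula with A4 (MIDI note 69) at 440 Hz.

```python
def y_to_midi(y, image_height=480, low_note=48, high_note=84):
    """Map a y position (0..image_height) to a MIDI note number.

    The height and the note range are hypothetical; values outside
    the image are clamped to the ends of the note range.
    """
    t = max(0.0, min(1.0, y / image_height))
    return round(low_note + t * (high_note - low_note))


def midi_to_hz(note):
    """Convert a MIDI note number to a frequency in Hz (A4 = 440 Hz)."""
    return 440.0 * 2 ** ((note - 69) / 12)


for y in (0, 240, 480):
    note = y_to_midi(y)
    print(y, note, midi_to_hz(note))
```

Rounding to whole note numbers quantizes the scanned positions to semitones; skipping the rounding would give continuous pitch instead.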

The dominant (English alphabet) input was assigned to a drone in order to have polyphony and to be the least annoying, most familiar sound, thus conceptually matching our familiarity with this coding system.

The negotiated (Braille code) input was assigned to a plain sawtooth and a cosine oscillator with slow-ish attack envelopes that changed quickly over time.

The oppositional (QR code) input was assigned to a square waveform oscillator with very fast attack envelopes that changed slowly over time, in order to match the digital “beep” sound of the computer/machine.

Arduino

The Arduino code that ran the 3 stepper motors was based on the AccelStepper example by Mike McCauley. It was slightly modified to be compatible with the ofxMotors openFrameworks addon. Regarding the hardware, I used 3 EZ Driver chips in series to build the circuit that ran the stepper motors.

Fabrication

For the needs of the final project I used most of the fabrication techniques I learned during my studies. Apart from the music boxes, the motors, the cameras, the papers and the cables, I constructed almost everything else. I made 3 wooden light boxes with LED strips and a custom-made box to hide the Kinect in, laser cut all 3 paper rolls (1.5 m, approx. 1500-2500 holes each, approx. 1 hour of fine-detail laser cutting each), and designed and 3D printed the motors’ mounts so that they could be attached to the music boxes. Finally, the most ambitious task was the construction “from scratch” of the piece of furniture that would host my units for the exhibition and somehow look appealing. Moreover, there was a lot of painting on every bit that I constructed. Many hours, almost days, were spent on trial and error, with many mistakes made and lots of stress and frustration, but in the end everything turned into a big, rewarding feeling.

Future development

One of the technical additions to this work that I have already thought of as a future development is a mechanism that will punch the holes in the music box paper in real time and will be connected to the Processing program that gets the tweets from the Twitter API. This will add autonomy to the overall system/artwork and save me hours of laser cutting the holes in the papers. It is something that I started looking into at the beginning of the summer term, but it needed more time to realize.

One more addition for the future would be the translation of the same messages into Morse code, which would be used to flash lights.
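A possible starting point for such a translation is sketched below in Python. It uses the standard International Morse timing (a dot lasts one unit, a dash three, with gaps of one, three and seven units between symbols, letters and words). The function names and the decision to ignore characters outside a-z are assumptions for this example, and driving an actual light is left out.

```python
MORSE = {
    "a": ".-", "b": "-...", "c": "-.-.", "d": "-..", "e": ".",
    "f": "..-.", "g": "--.", "h": "....", "i": "..", "j": ".---",
    "k": "-.-", "l": ".-..", "m": "--", "n": "-.", "o": "---",
    "p": ".--.", "q": "--.-", "r": ".-.", "s": "...", "t": "-",
    "u": "..-", "v": "...-", "w": ".--", "x": "-..-", "y": "-.--",
    "z": "--..",
}


def to_flashes(text):
    """Translate text into a list of (light_on, duration_units) pairs.

    Characters outside a-z are ignored in this sketch.
    """
    flashes = []
    for word_index, word in enumerate(text.lower().split()):
        if word_index > 0:
            flashes.append((False, 7))      # gap between words
        codes = [MORSE[char] for char in word if char in MORSE]
        for letter_index, code in enumerate(codes):
            if letter_index > 0:
                flashes.append((False, 3))  # gap between letters
            for symbol_index, symbol in enumerate(code):
                if symbol_index > 0:
                    flashes.append((False, 1))  # gap between symbols
                flashes.append((True, 1 if symbol == "." else 3))
    return flashes


print(to_flashes("et"))
```

Multiplying each duration by a chosen unit length in milliseconds would give the on and off times for a light.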

Another much easier one (perhaps under a different conceptual framework) would be the integration of machine learning by adding a set of gestures. Each one will assign different speed values to the motors and the viewer will be more actively involved.

Self evaluation

Overall I am very happy with the result I achieved. I regard my project as highly complex both technically and conceptually, and it was hard to get all the elements working together. I know that I pushed my limits to the extreme, given my knowledge and experience in the field. Delivering what I initially proposed (to myself) was extremely rewarding.

On the cons, I think I have to figure out another way of joining the motors to the music boxes, other than adjusting a gear on the motor’s mount. This caused the music box mechanism to wear out quite easily, after a couple of days, and I had to use (and damage) my spares. Additionally, I wasn’t entirely happy with the way the Kinect affected the motors’ speed, as it was very sensitive to the depth distance readings, causing the motors to stutter when an abrupt movement was detected.

On the pros, I have to mention the blob detection of the papers’ hundreds of holes, some of which were only 2 pixels big! In the beginning it seemed an impossible objective to achieve, but the result was a relief. Moreover, I am glad with the overall fabrication I did, which included the wide range of different things mentioned above, as before entering the course my relevant knowledge and craftsmanship level were far below average. I am also pleased that I used APIs without having been taught them in the course, and that I used Max MSP for the first time. Furthermore, apart from the audience’s positive feedback, I truly appreciate the fact that the whole process helped me consolidate my knowledge and beat fear by taking one step at a time.

References

“Mastering Open Frameworks : Creative Coding Demystified” by Denis Perevalov

ofBook, “Image Processing and Computer Vision”

LaBelle, B. (2018): Sonic Agency: Sound and Emergent Forms of Resistance. Goldsmiths Press, London

Durham, M. G. & Kellner D. M. (2001): Media and Cultural Studies, Key works. Part II: Social Life and Cultural Studies, 13 Encoding / Decoding, Stuart Hall. Revised Edition, Blackwell Publishing Ltd. Available online: https://we.riseup.net/assets/102142/appadurai.pdf

Davis, H. (2004): Understanding Stuart Hall. SAGE.

Teel A. (2017): The Application of Stuart Hall’s Audience Reception Theory to Help Us Understand #WhichLivesMatter? Online article: https://medium.com/@ateel/the-application-of-stuart-halls-audience-reception-theory-to-help-us-understand-whichlivesmatter-3d4e9e10dae5

All Computer Vision lectures of WCC (Theo)

https://www.youtube.com/watch?v=gwS6irtGK-c Processing code reference

https://www.youtube.com/watch?v=7-nX3YOC4OA Processing code reference

https://faculty.georgetown.edu/irvinem/theory/SH-Encoding-Decoding.pdf

https://en.wikipedia.org/wiki/Encoding/decoding_model_of_communication