Results May Vary

Results May Vary is an interactive performance influenced in real-time by the audience's recollections of the lockdown.

created by: Clemence Debaig

Concept

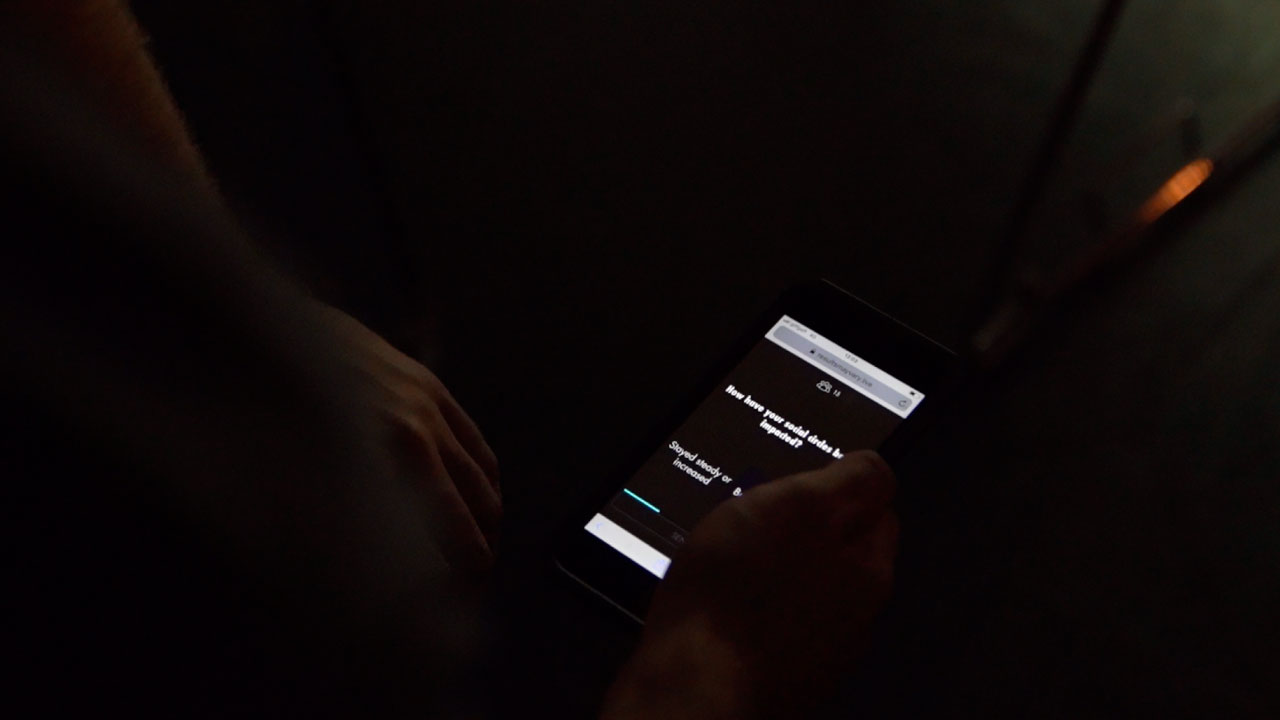

Results May Vary acts as a ‘performative survey’, portraying the collective memories of the specific audience interacting in the moment. During the performance, the audience is invited to reflect on their emotional journey through lockdown by answering a series of questions. With branching narratives and direct interactions with the performance, each performance is unique.

Results May Vary originated from a series of in-depth interviews, collecting real-life stories. The performance aims at highlighting the thoughts and feelings of this pandemic. It invites the audience to empathise with the diversity of experiences and circumstances and offers a space to reflect on what has been missed, enjoyed and learned, and how it will influence our approach to the new normal.

The performance uses computer vision effects and audio samples that are affected in real-time by the audience input. Thanks to a custom user interface, with live-stream and interactive elements, presented side-by-side, audience members can enjoy the performance directly in the browser, from the comfort of their home, or directly on-site while interacting on their phone.

Results May Vary is presented in a 15-minute format as a series of work-in-progress scenes building towards a longer piece.

Created by Clemence Debaig

Performed by Lisa Ronkowski

Music by Christina Karpodini

Voices from original interviews: Andrew C., Ashley C., Chloe B., Clemence D., Elmer Z., Jack J., Kelly H., Kristia M., Micha N., Sophie O., Theo A.

Process and background research

In-depth interviews

The project originated from ten in-depth interviews conducted remotely. The participants were selected to cover a diversity of circumstances (e.g. single and isolated, working full-time while looking after toddlers, furloughed, looking after family members, etc.). Each interview lasted about one hour and explored topics ranging from the practical challenges of the situation to their emotional journey during the lockdown.

The initial idea was to draw common themes from the interviews to create a traditional narrative structure for the final piece. However, the richness of the interviews highlighted that the diversity of experiences was the topic to cover. Each discussion was full of raw emotions and personal stories, which led to the use of audio from the original interviews as the main soundtrack for the piece, humanising the cold survey aspect and anchoring it into reality.

While talking with the different participants, it also became clear that the state of isolation didn’t give them many opportunities to be aware of what was happening elsewhere, creating a need for a common space to share experiences and generate empathy.

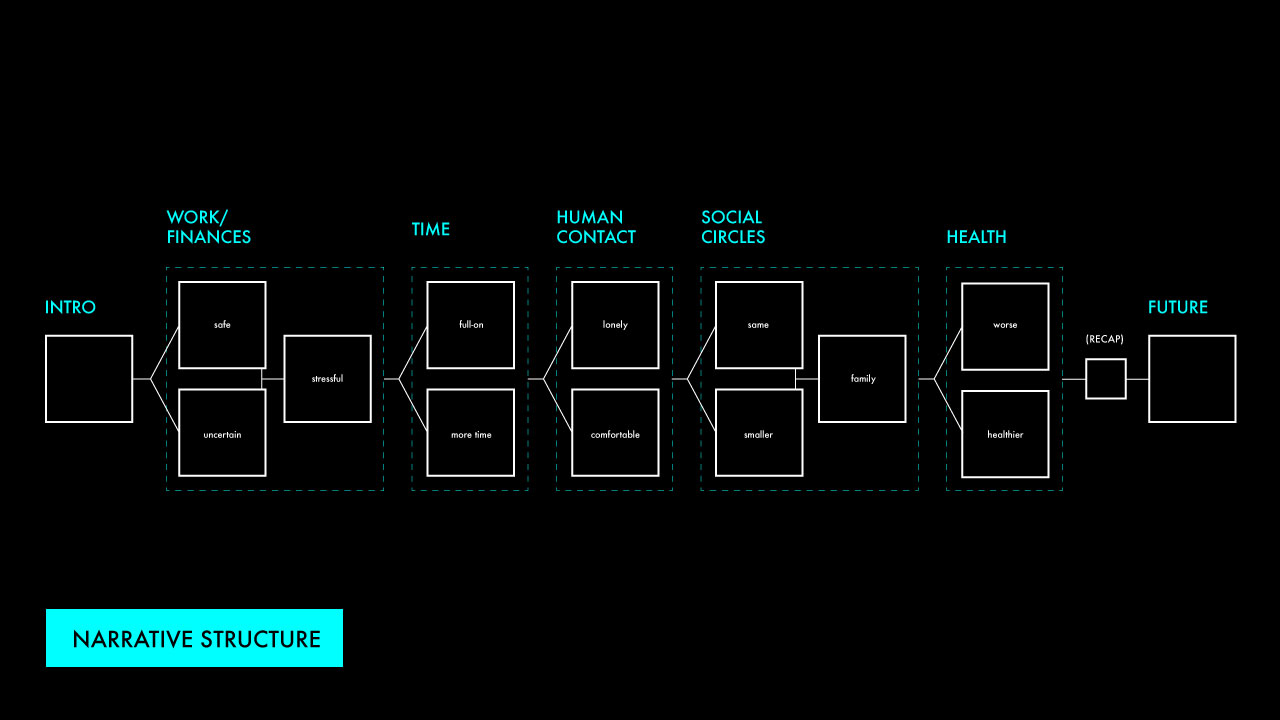

Thematic analysis and performance structure

All the interviews were thoroughly analysed, leading to a thematic grouping of the key insights. The thematic analysis helped identify the common topics of concern between participants and helped create a narrative structure for the piece.

Three main findings have influenced the structure:

- The interview itself helped the participants process their own feelings and made them wonder how others would answer.

This led to the idea of creating a digital communal experience where the audience could go through a similar process together by answering a series of questions about their own experience.

- Experiences were varied but, at a theme level, they were very polarised rather than spread along a range between two extremes.

For example, in relation to how busy they had been, it was either really “full-on”, or they had much more time - but nothing in between. This led to the idea of branching narratives, with simple choices between two options.

- Everyone had taken some time to reflect on themselves and would like to make changes in their lives when approaching the new normal.

This shaped the end goal of the piece. The audience would have the opportunity to write a promise to themselves, some sort of ‘never forget’ statement they can make themselves accountable for.

Based on those key principles, the piece is structured as follows:

- INTRO - A common introduction that anchors the piece in the pandemic context

- 5 THEMES - Each theme starts with a vote that leads to 2 possible scenes

- Work/finances

- Time

- Human contact

- Social circles

- Healthy habits

- THE FUTURE - A common ending where everyone gets to write a promise to themselves
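The structure above can be sketched as a simple majority vote per theme. This is only an illustration, not the logic used in the Max MSP patch: the option labels `"A"` and `"B"`, the function names and the tie-breaking rule (ties go to the first option) are all assumptions.

```python
from collections import Counter

# Theme names come from the piece; the option labels "A"/"B" are placeholders.
THEMES = ["Work/finances", "Time", "Human contact", "Social circles", "Healthy habits"]


def pick_scene(votes, options=("A", "B")):
    """Return the option with the most votes.

    Assumption: ties (including no votes at all) go to the first option.
    """
    counts = Counter(vote for vote in votes if vote in options)
    # max() keeps the first option it sees when counts are equal.
    return max(options, key=lambda option: counts[option])


def run_performance(votes_per_theme):
    """Build the list of scenes for one performance from the audience votes."""
    path = ["INTRO"]
    for theme in THEMES:
        scene = pick_scene(votes_per_theme.get(theme, []))
        path.append(f"{theme} > {scene}")
    path.append("THE FUTURE")
    return path


example_path = run_performance({"Time": ["A", "B", "B"]})
print(example_path)
```

Each call to `run_performance` yields one of the possible routes through the piece, always starting with the intro and ending with the common final scene.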

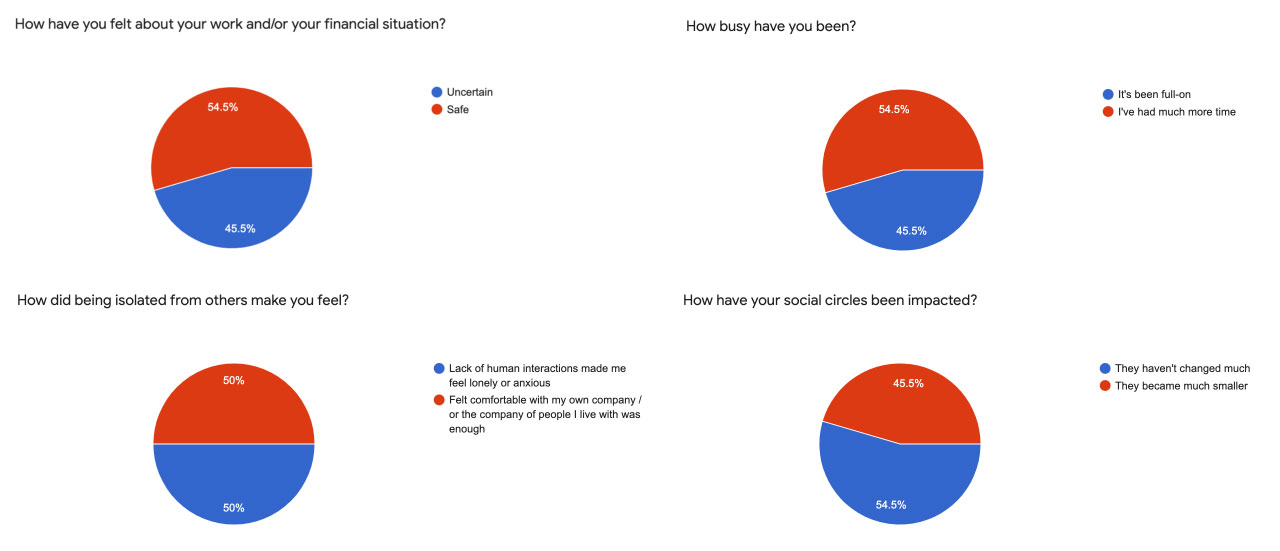

Confirming the data

Considering how the concept would impact the technical architecture, it was key to validate its structure before starting any technical prototypes. Using a simple Google Form with conditional logic, all the questions and content were tested very early on with a larger group of people (30 participants).

This helped fine-tune the questions and options. It also helped measure how long it would take to go through the entire experience and control the timings for a 15-minute piece. Answering the questions alone took an average of 8 minutes, which was perfect to combine with a live performance where moments could be dedicated to more passive content.

The really interesting piece of data that also came out of the survey was that all the ‘voting’ questions at the beginning of each theme were oscillating around the 50% mark. This confirmed the first assumptions coming from the qualitative research. It would certainly create some really interesting outcomes when presented to different audiences. There would be a chance for any option to be picked, leading to unique combinations from one performance to the other.
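To give a sense of scale, the short sketch below counts the possible routes through five two-way votes. The labels `"A"` and `"B"` are placeholders, and treating each vote as an even coin flip is a simplification based on the survey results hovering around 50%.

```python
from itertools import product

THEME_COUNT = 5  # five themes, each opening with a two-way vote

# Every possible sequence of choices across the five votes.
paths = list(product("AB", repeat=THEME_COUNT))
print(len(paths))  # 32

# Simplifying assumption: each vote is an independent 50/50 split,
# so every path is equally likely.
probability_per_path = 0.5 ** THEME_COUNT
print(probability_per_path)  # 0.03125
```

Under that assumption, any given combination of scenes would come up in roughly 3% of performances.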

Questioning the notions of liveness in digital performances and designing meaningful interactions

In parallel to exploring the specific topics of the piece, the lockdown has been a fantastic opportunity to observe and research how the performing industry is reinventing itself through digital channels. Formats and models are still to be created. Unfortunately, most of the content rapidly made available resembled a bad DIY TV experience more than the rich communal experience we are familiar with in physical venues. This also led to questioning the notions of liveness in this new format. What makes it live? Why should it be watched at a certain time instead of on YouTube later?

The only references that came close to a shared live experience made the most of live interactions, using game and immersive theatre mechanics, such as the Social Sorting Experiment by The Smartphone Orchestra, The Telelibrary by Yannick Trapman-O'Brien and Smoking Gun by Fast Familiar.

Keeping those principles in mind, particular attention has been paid to designing meaningful interactions for each scene. On the audience side, the interactions are consistent from one scene to another (answering questions about their own experience), but the way they affect the performance in real-time is tailored to the specific topic or emotion being portrayed. To that end, visual and audio metaphors have been carefully crafted to represent a specific topic and to allow for scalable interactions that adapt to different audience sizes.

Process anchored in iterations and user testing

Results May Vary only exists if there is an audience to interact in real-time, so iterations and user testing have been key in the process. After the first survey prototype mentioned above, four rounds of testing took place, organised as follows:

- Playtest 1:

First third of the content in a proof of concept state, focus on testing the key interaction mechanics and the concept of the piece

- Playtest 2:

Full piece, still rough around the edges, focus on the narrative structure, key messages and new interactions

- Playtest 3:

Full piece, still a few details to polish, focus on all the timings, also test of the mobile version for on-site audience

- Playtest 4:

Public rehearsal, in show conditions

Technology

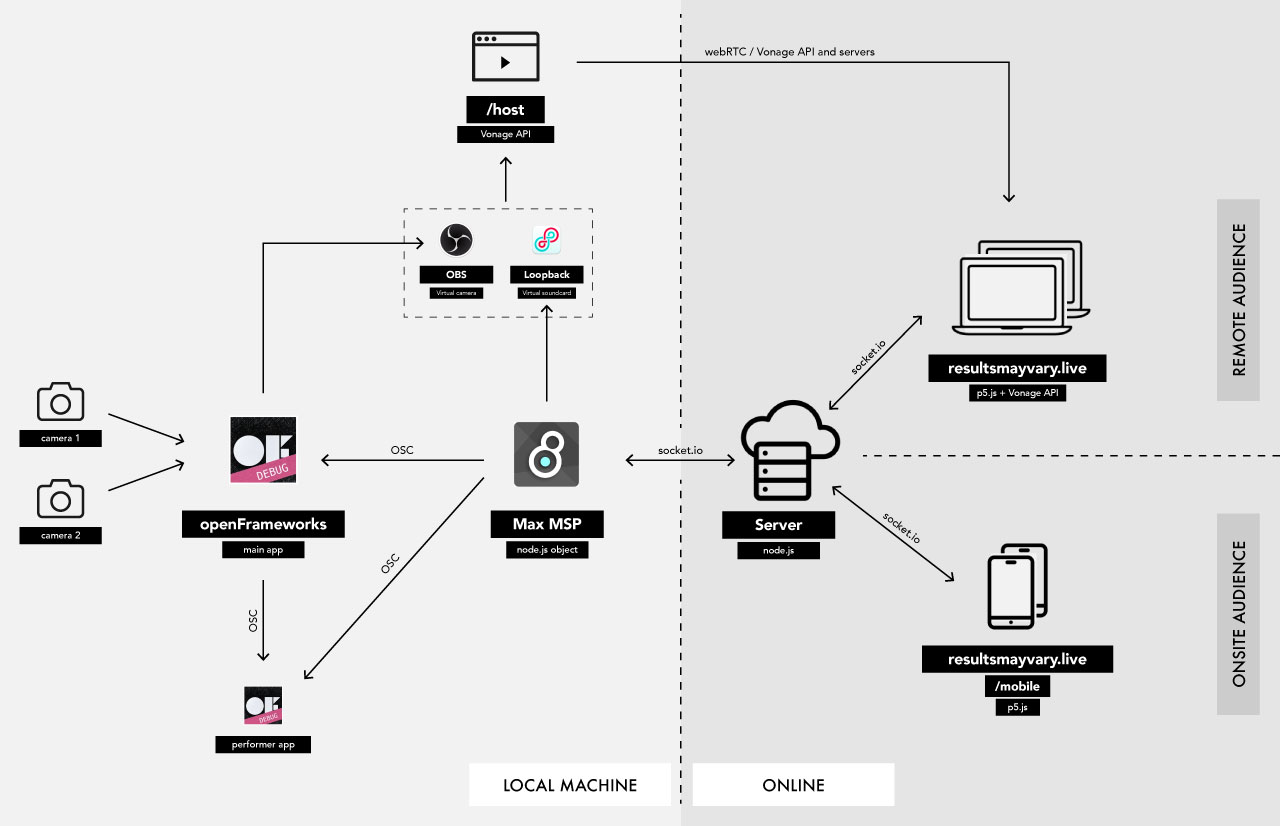

Architecture overview

The system is made of three key components:

- Max MSP at the core - ‘Digital stage manager’

The patch acts as a digital stage manager, passing information back and forth between the different systems: via OSC to openFrameworks, via socket.io (and the node.js object) to the remote server (running node.js as well) which controls what is displayed on the web app for the audience.

Max MSP is also used to handle the full timeline and the audio.

- openFrameworks - Visuals

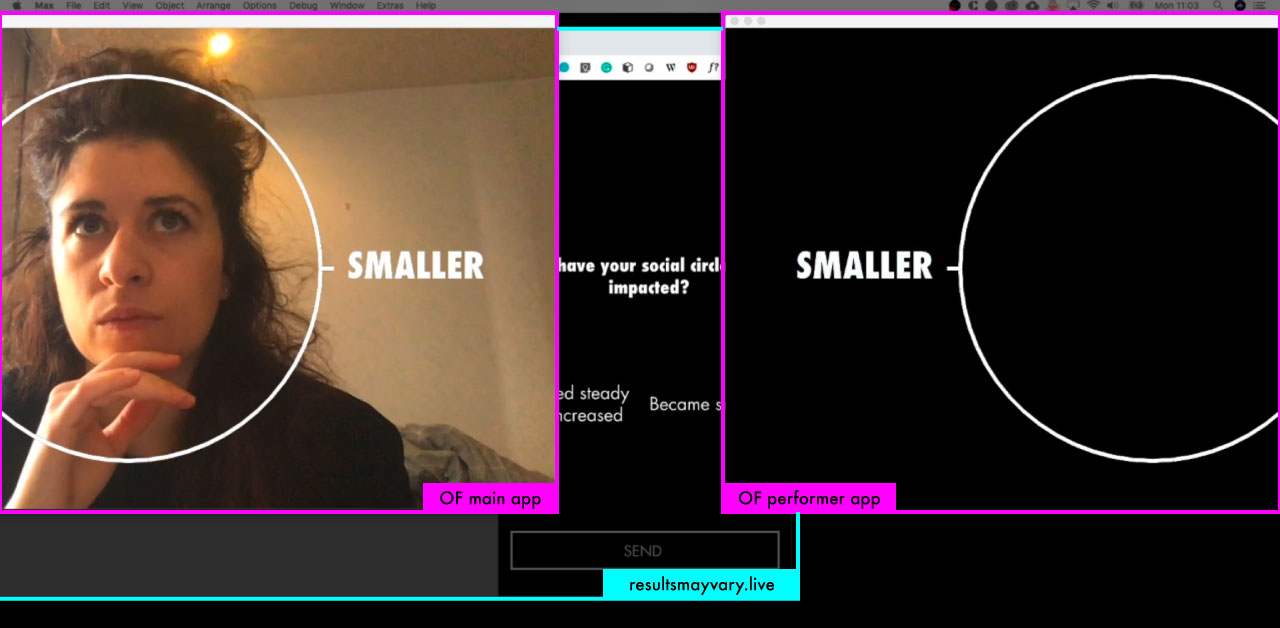

Two openFrameworks apps are running in parallel. One handles all the visuals with computer vision algorithms, which is the view live-streamed to the audience. The other one acts as a simplified prompter for the performer to react live to the audience input.

- Remote server and web apps - Audience interactions

This is made of a remote server in node.js using socket.io to receive data from the web apps and broadcast out to the web apps as well as Max MSP. There are then two web apps, one for the desktop/remote experience, offering a side by side view of the live stream and the user interface, the other one being purely mobile for the onsite audience.
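For readers unfamiliar with OSC, the link between Max MSP and openFrameworks carries small binary packets over the network. The sketch below builds one such packet by hand following the OSC 1.0 layout (padded address, padded type tags, big-endian arguments). It is not taken from the project: the address `/mode` and the argument value are hypothetical.

```python
import struct


def _osc_string(text):
    """Encode an OSC string: ASCII, null-terminated, padded to a multiple of 4 bytes."""
    data = text.encode("ascii") + b"\x00"
    padding = (-len(data)) % 4
    return data + b"\x00" * padding


def osc_message(address, *args):
    """Build a minimal OSC message supporting int, float and str arguments."""
    type_tags = ","
    payload = b""
    for arg in args:
        if isinstance(arg, bool):
            raise TypeError("bool arguments are not handled in this sketch")
        if isinstance(arg, int):
            type_tags += "i"
            payload += struct.pack(">i", arg)  # 32-bit big-endian integer
        elif isinstance(arg, float):
            type_tags += "f"
            payload += struct.pack(">f", arg)  # 32-bit big-endian float
        elif isinstance(arg, str):
            type_tags += "s"
            payload += _osc_string(arg)
        else:
            raise TypeError(f"unsupported OSC argument: {arg!r}")
    return _osc_string(address) + _osc_string(type_tags) + payload


# Hypothetical message asking the visuals app to switch to scene 3.
packet = osc_message("/mode", 3)
print(packet)  # b'/mode\x00\x00\x00,i\x00\x00\x00\x00\x00\x03'
```

A packet like this would typically be sent over UDP to the port the receiving app listens on. In practice Max MSP and openFrameworks handle the encoding themselves, so this is only meant to show what travels between them.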

As the interconnection between the systems is directly linked to the content and the timings, the following spreadsheet has really facilitated the cross-platform development.

Max MSP - Timeline, data flow and audio

Max MSP holds the entire structure of the piece and acts as the central “brain” of the performance. It contains all the timed elements and conditional logic, parses messages and communicates between systems.

The patch includes a node.js object that is directly connected to the remote server via socket.io. In parallel, it communicates with openFrameworks via OSC. There are two types of processes happening between the systems:

- New ‘mode’, at the beginning of a scene:

Messages are sent out via OSC to change the visual effects on openFrameworks, and, in parallel, messages are sent out via socket.io to update the questions asked to the audience on the web app.

- Processing the audience’s input:

Max MSP receives the audience’s input from the remote server via socket.io and processes it before sending OSC messages out to openFrameworks. In most cases, this is to scale up or down some of the interactions to accommodate for various audience sizes. Audio effects are also added at this stage.
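One way to picture the scaling step is to turn raw response counts into a fraction of the audience, then map that fraction onto the range an effect expects. This is a sketch of the general idea rather than the contents of the patch; the function names and the 5 to 45 frame range are made up for the example.

```python
def normalise_input(active_responses, audience_size):
    """Convert a raw response count into a 0.0-1.0 fraction of the audience."""
    if audience_size <= 0:
        return 0.0
    clamped = max(0, min(active_responses, audience_size))
    return clamped / audience_size


def scale_to_range(fraction, low, high):
    """Map a 0.0-1.0 fraction onto the parameter range of a visual or audio effect."""
    return low + fraction * (high - low)


# 3 of 12 audience members picked an option: the same 25% would drive the
# effect identically with 30 of 120.
fraction = normalise_input(3, 12)
trail_length = scale_to_range(fraction, 5, 45)  # hypothetical range in frames
print(fraction, trail_length)  # 0.25 15.0
```

Working with fractions rather than counts means a scene should behave the same way for a dozen viewers as for a hundred.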

openFrameworks - visual metaphors

The main app running in openFrameworks handles the visual effects that are affected in real-time by the audience interaction. Each scene represents a topic or an emotion selected by the audience, and uses carefully selected visual metaphors. Two cameras are connected and used by the app, allowing for different points of view.

Examples of metaphors and algorithms used:

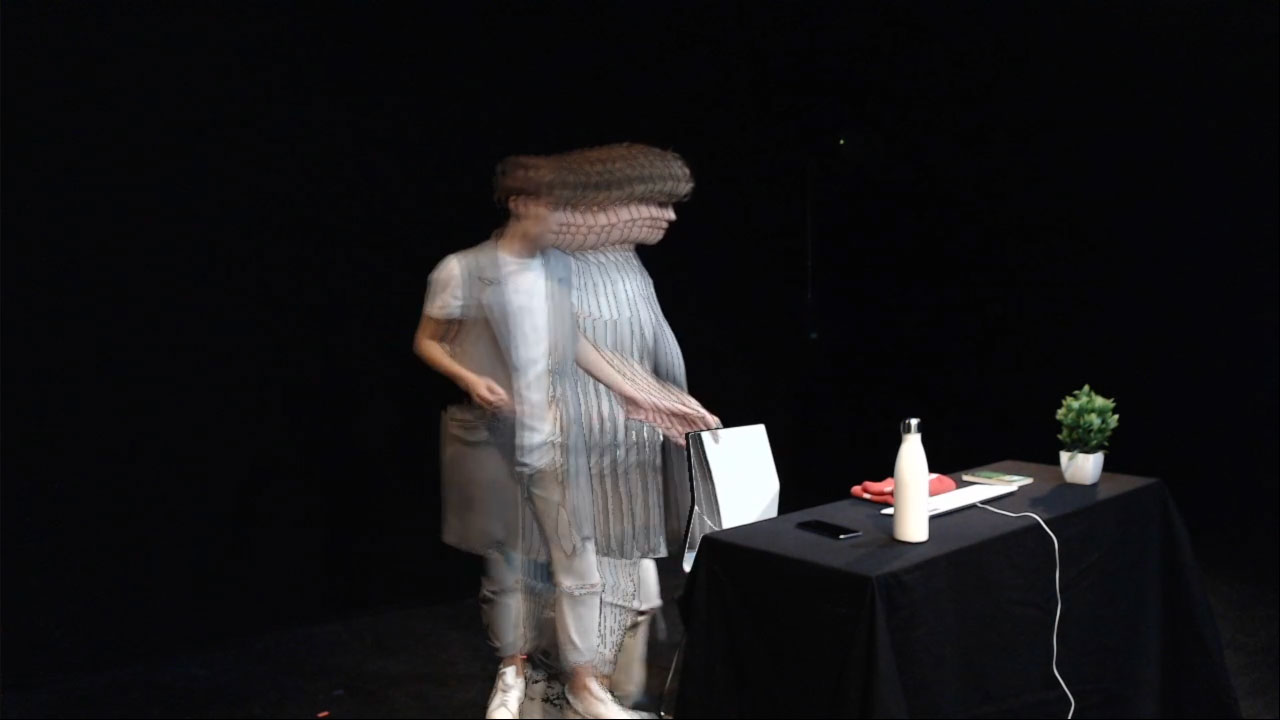

- Work/Finances > Uncertain

Using a mix of frame differencing (recoloured in red) and image cropping with brightness and saturation modified over time. This gives a feeling of reality shrinking and external elements becoming threatening.

- Time > More time

Using background differencing and frame buffer. It displays a trail of images, giving a feeling of the time being stretched (this is also combined with the audio being slowed down accordingly). With the audience input, the trail and the audio are more or less stretched.

- Time > Full-on

Using background differencing and recording loops at a certain interval. It duplicates the performer in space in real-time.
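As a rough illustration of two of the building blocks named above, the sketch below shows frame differencing on tiny greyscale frames and a fixed-length frame buffer of the kind that can produce a trail. It uses plain Python lists instead of camera images, and the threshold and buffer length are arbitrary values, not those used in the piece.

```python
from collections import deque


def frame_difference(previous, current, threshold=30):
    """Return a binary mask: 1 where a pixel changed by more than the threshold."""
    return [
        [1 if abs(cur - prev) > threshold else 0 for prev, cur in zip(prev_row, cur_row)]
        for prev_row, cur_row in zip(previous, current)
    ]


def motion_amount(mask):
    """Fraction of pixels flagged as changed, between 0.0 and 1.0."""
    total = sum(len(row) for row in mask)
    return sum(sum(row) for row in mask) / total if total else 0.0


previous = [[10, 10, 10], [10, 10, 10]]
current = [[10, 200, 10], [10, 10, 90]]
mask = frame_difference(previous, current)
print(mask)  # [[0, 1, 0], [0, 0, 1]]

# A fixed-length buffer keeps only the most recent frames; drawing all of
# them at once gives a trail. A longer buffer means a longer trail.
trail = deque(maxlen=3)
for frame_id in range(1, 6):
    trail.append(frame_id)
print(list(trail))  # [3, 4, 5]
```

In a sketch like this, audience input could change the buffer length to stretch or shorten the trail.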

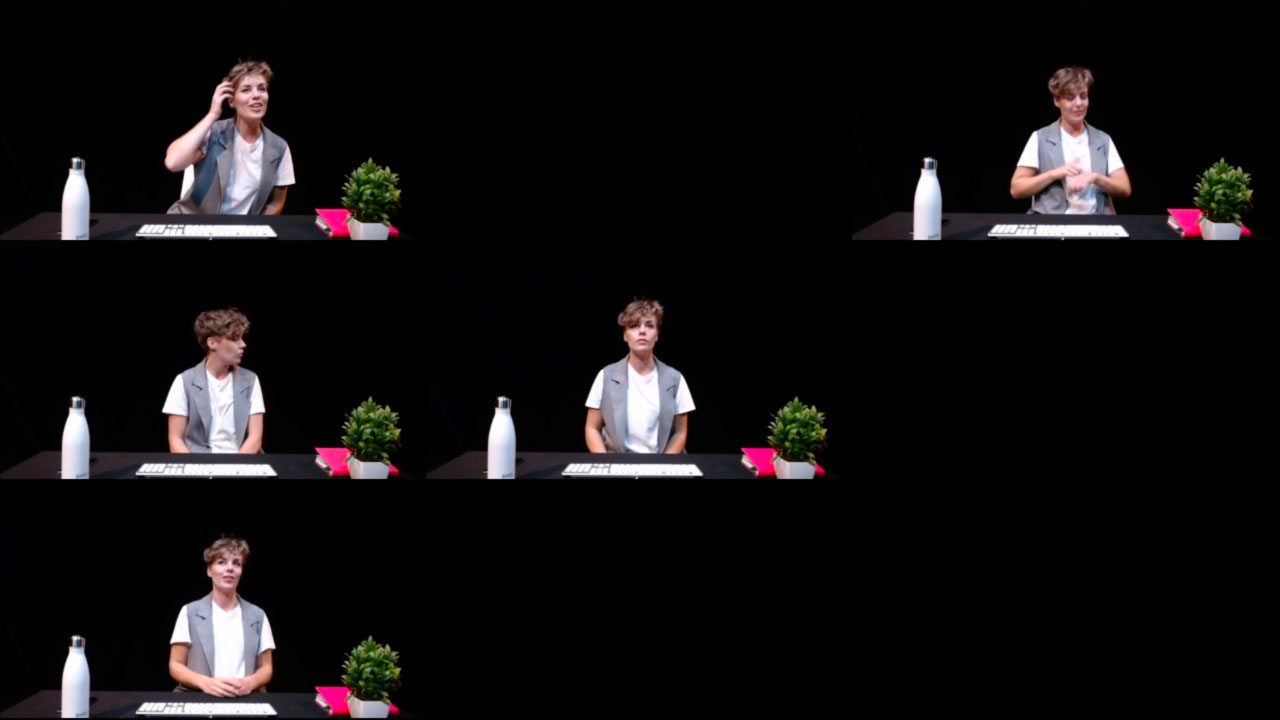

- Social circles > Smaller

Using a large frame buffer displayed in a 3x3 grid, this gives the feeling of several people being on a zoom call. The user input makes some tiles disappear as we go.
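The grid layout itself comes down to a little arithmetic. The sketch below computes the rectangles for a 3x3 grid and hides a share of them; the 1280x720 resolution, the function names and the rule for which tiles disappear (last tiles first) are assumptions made for the example.

```python
def tile_rects(width, height, rows=3, cols=3):
    """Return (x, y, w, h) rectangles for a rows x cols grid, row by row."""
    tile_w, tile_h = width // cols, height // rows
    return [
        (col * tile_w, row * tile_h, tile_w, tile_h)
        for row in range(rows)
        for col in range(cols)
    ]


def visible_tiles(rects, hidden_fraction):
    """Drop tiles from the end of the list as the hidden fraction grows.

    Assumption: the number of hidden tiles is rounded down.
    """
    fraction = max(0.0, min(1.0, hidden_fraction))
    hidden = int(fraction * len(rects))
    return rects[: len(rects) - hidden]


rects = tile_rects(1280, 720)
print(rects[0], rects[-1])  # (0, 0, 426, 240) (852, 480, 426, 240)
print(len(visible_tiles(rects, 0.5)))  # 5
```

Each remaining rectangle could then show a different frame from the buffer, which is what produces the video-call look.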

A second openFrameworks app also runs in parallel, acting as an aid for the performer to know which options are selected and to react to the audience input in real-time. It receives OSC messages from Max MSP at the same time as the main app. The main app also sends some OSC messages to update positions and sizes when paragraphs are generated.

Optimising for an online experience: combining broadcast and UI in the browser

Results May Vary has primarily been designed for a remote audience. Two main components needed to be considered: how to ‘watch’ the performance and how to interact.

After experimenting with several platforms and tools, such as a multi-screen setup with Zoom on one side and smartphones on the other, none seemed seamless enough. Even if Zoom solved potential latency issues, asking the audience to use different devices creates barriers. On the other hand, using mainstream embeddable services like YouTube and Twitch limits the types of interactions as the latency is around 5 seconds.

Thanks to the help of the brilliant Joe McAlistair who solved this issue for his own theatre company Fast Familiar, new technologies like WebRTC and video conferencing APIs have been explored. After a few prototypes, the company Vonage seemed to be the best solution as it offers a very easy to use and reliable API for video conferencing and interactive broadcast.

The Vonage API is already optimised for applications using a node.js server, which made it really easy to combine with socket.io for all the other communication needs of the project. The final version of the web app uses two divs, one on the left for the live-stream with Vonage and one on the right containing a p5.js sketch for the user interface.

To control the live-stream, the app is accessible on a different URL for the host to start and stop the broadcast. In parallel, OBS Virtual Camera is used to capture and live-stream the openFrameworks view, and Loopback is used to stream the audio from Max MSP.

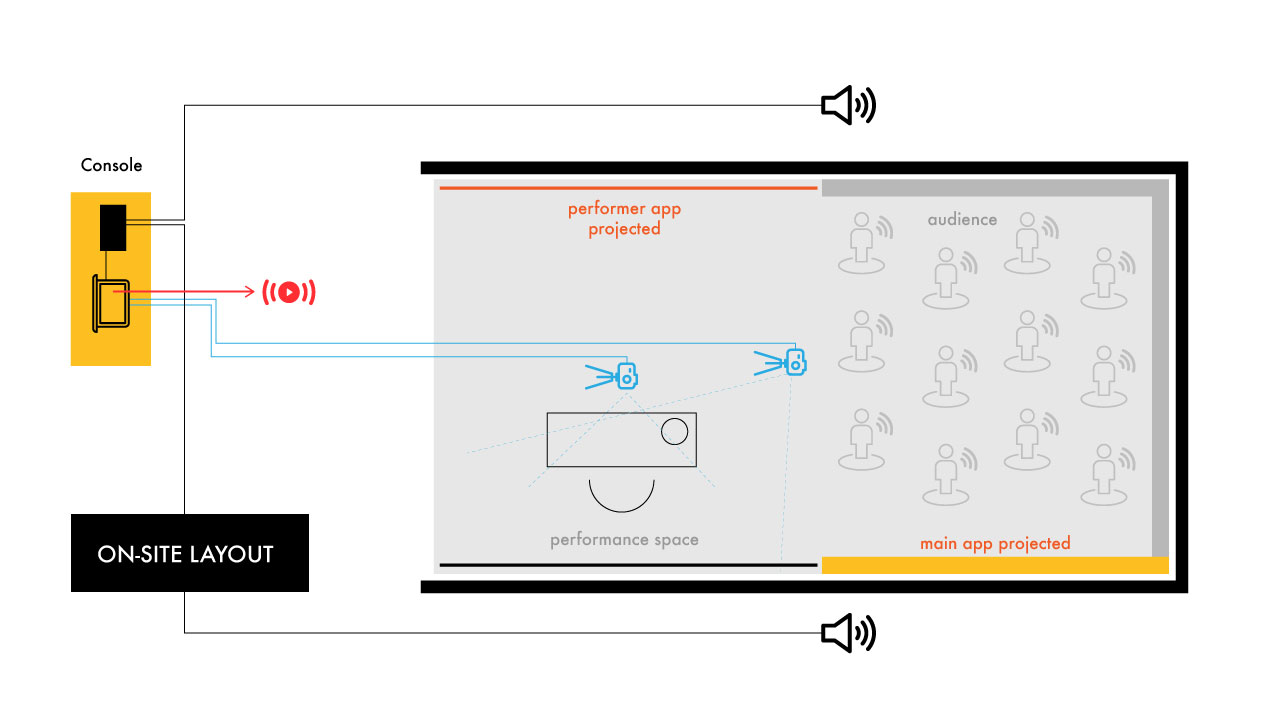

Considering an onsite experience: mobile interactions and room layout

As Results May Vary had originally been designed with a remote audience in mind, when it was confirmed that external people would be allowed on-site, it became an interesting challenge to think about what an on-site experience would look like.

On-site audiences interact with their mobile phones. They can see a version of the final images projected on one side with a 'behind the scenes' view of the live performance at the same time.

The mobile page uses the same p5.js sketch as the desktop version, but only contains one HTML ‘div’ element, so that the live-stream is completely excluded.

Future development

A future version of Results May Vary would last around 40 minutes. This would allow for a better balance between active and passive states. It would also give more time for some scenes and topics to be developed in more detail.

The first interviews were done at the beginning of July. Now, almost three months later, it is apparent that people have adapted, and their circumstances have changed - and continue to change. In a longer version of Results May Vary, it would be really interesting to portray that evolution and really explore the journey people have been on.

Some people have been in touch after the performance to share their own stories, adding a lot of rich and personal content to the original ten interviews. Results May Vary could also evolve into a full platform where people can share their stories, driving conversations before and after performances and serving as a content bank for an ever-evolving performance.

Self-evaluation

I am grateful that I have been able to tackle a delicate but almost universal topic at the exact moment of its relevance. It’s very rare to be able to cover a topic that almost everyone can relate to. The downside, in its current form, is that the performance has an expiration date and won’t be relevant in a few months, or even weeks. Nevertheless, it is a fantastic starting point to evolve into something bigger.

I feel that the project has been very ambitious, especially considering that with all the options, there are about 25 minutes of content. Each technical component is not necessarily complex on its own, but connecting all the systems, scaling them up and juggling different programming languages has been a very interesting challenge.

A few things didn’t quite work as planned. I do feel that the online experience is more interesting and optimised than the on-site one, but driving traffic online is more challenging in the middle of a 4-day festival. If I had to recreate the performance mainly for an on-site audience, I would think about it differently. For example, adding cues for when to use the phone, and integrating the visuals into the live performance instead of a side by side view.

Developing for the web opened the door to a world of unknowns: hosting services, DNS, SSL certificates, cross-browser compatibility, and so on. I am very glad that I started looking into those challenges. It has been a really steep learning curve to step out of the controlled environment that a physical installation might offer.

Additionally, creating web apps for mobile devices also raised all sorts of issues that have not necessarily been polished as much as I would have liked. The main challenge was around testing on all screen sizes and operating systems. For example, I found a bug one day before the show on one specific iPhone model that was impossible to reproduce and very hard to debug without access to the actual device.

Lastly, I would remember to bring my own network for future projects, or at least to have some sort of control over it. During all my tests, the live-streaming solution with Vonage was seamless with very good image quality. But it happened that we had installed the live-streaming station for the full event next to G05, using the same router as my setup. As a result, the bandwidth was not enough for both live-streams which downgraded the quality of my own stream.

In conclusion, I am very grateful for Results May Vary because it helped me think about my art practice beyond the MA and allowed me to explore new formats, think about the future of live performances and how I would position my own practice in this new context.

References

Inspiration

Simon Katan - Clamour

The Smartphone Orchestra - Social Sorting Experiment (online)

Tim Casson - Choreocracy

Philippe Decoufle - Opticon

Philippe Decoufle - Le Bal Perdu

Literature

Ursu, M.F., Kegel, I.C., Williams, D. et al. ShapeShifting TV: interactive screen media narratives. Multimedia Systems 14, 115–132 (2008). https://doi.org/10.1007/s00530-008-0119-z

Steve Benford and Gabriella Giannachi. Performing mixed reality. MIT Press, 2011.

Mixed reality theatre: New ways to play with reality — Nesta. URL: https://www.nesta.org.uk/blog/mixed-reality-theatre-new-ways-play-reality/.

Alice Saville. Immersive theatre, and the consenting audience - Exeunt Magazine. 2019. URL: http://exeuntmagazine.com/features/immersive-theatre-consent-audience-barzakh/.

NoPro Podcast 258: The One Where We Talk About Twitch, And Other Things. No Proscenium. URL: https://noproscenium.com/nopro-podcast-258-the-one-where-we-talk-about-twitch-and-other-things-24a6107160b9

Nicky Donald. Bete Noire: A ludo-gonzoid model for Mixed Reality LARP. June 2020

Code

Computer Vision examples from Theodoros Papatheodorou, Goldsmiths University

ofxParagraph addon that simplified all the text layout

Node.js / p5.js starter kit from Andy Lomas, Goldsmiths University

Vonage API and sample app for interactive broadcast

MaxMSP - node for Max documentation

The video of the full performance that happened on Thursday 17 September 2020 is available below (duration 15 minutes):

Special thanks

Lisa Ronkowski for being an incredible performer.

Christina Karpodini for composing some beautiful music and running the piece in G05 while I was away.

Joe McAlistair for spending the time to share his knowledge about web conferencing solutions.

Sarah Wiseman for a brilliant mentoring session at the beginning of the project.

Rob Hall for all his help on-site, for his patience and for being a genuinely brilliant human.