Queer Surveillance: Biometric system failures and the illegible non-binary bodies

In a world of biometric surveillance regulated by heteronormative and cisnormative standards, what kinds of prejudice and oppression are imposed on othered queer groups as they are monitored, misclassified, and controlled? How do we envision an inclusive system that embraces collective stylings of bodies and environments and refusals of flawed algorithmic governance?

produced by: Betty Li

Introduction

In the era of automation, biometric technology is used pervasively to classify bodies for a variety of predictive purposes. The virtual body, rendered as information and data within such systems of power, goes beyond representation: it alters the ontology of our physicality. The policing of bodies offers an expansive view of social control, bias, and power. Surveillance renders bodies as sexual and gendered subjects through complex mechanisms of power (Foucault, 1990). However, gender and sexuality, important identity categories in the conversation about biometric surveillance, remain largely unaddressed. How do the oppressive ideological models of the current biometric system render queerness? As Kathryn Conrad (2009) puts it, “Surveillance techniques, themselves so intimately tied to information systems, put normative pressure on non-normative bodies and practices, such as those of queer and genderqueer subjects.”

Biometric system failures

Queer entities are made illegible and unidentifiable to data’s seeing eye. Marginalized groups, such as queer people who do not conform to dominant conventions of gendered grooming and dress, are computed as “biometric system failures” (Kafer and Grinberg, 2019). Lauren E. Bridges argues that digital failure reveals how data itself is a flawed concept, prone to political abuse and social engineering that protect the interests of the powerful (Bridges, 2021). Biometric security and screening systems, for example, have revolutionized border crossings, yet nonbinary, gender-nonconforming, transgender, and queer border crossers may have very different experiences as their bodies are scanned, catalogued, and scrutinized for irregularity. Immigration and refugee agents in Canada operate under a legal regime made up of a patchwork of statutes, court rulings, departmental guidelines, and memoranda. Queer asylum seekers who attempt to cross the Canadian border face a high risk of rejection because their gender identity places them outside the narrow confines of Western patterns of heteronormativity; their queerness marks their bodies and identities as different and therefore subject to greater scrutiny (Hodge et al., 2019).

At the same time, transgender Uber drivers find themselves at risk of being suspended from the platform over photo inconsistencies. Uber requires drivers to upload selfies for its security feature, Real-Time ID Check, to verify their identity, and transgender drivers are flagged when a selfie does not match the other photos on file. Queer faces are rendered illegible during gender transition by Uber’s non-inclusive design, and drivers can lose their jobs almost instantly as a result (Urbi, 2018). As artist Zach Blas puts it in discussing his work Facial Weaponization Suite, “facial detection technologies are driven by ever obsessive and paranoid impulses to know, capture, calculate, categorize, and standardize human face,” yet they are only equipped to know the “truth” of a binary worldview: any variations, outliers, or blemishes are erased, blurred, and bent to conform to this one dominant truth (Blas, 2013).

Janey, an Uber driver who was kicked off the app while going through her transition. (Uber also insisted that she include her birth name on her profile.)

Internalized surveillance also makes queer bodies hyper-visible

While normative logics of classification and quantification exclude and further marginalize queer lives by rendering them invisible, those same lives are also hyper-visible as “the othered.” The systematic binary oppression of surveillance technology has been internalized as a state of mind. This form of internalized surveillance is often illustrated by the spatial layout of the Panopticon, in which prisoners are arranged around a guard tower and cannot see whether they are being watched (the guard may not be looking in their direction, or there may in fact be no guard in the tower). Because they can never know whether they are being scrutinized, they learn to behave as if they are always under surveillance (Dubrofsky and Magnet, 2015). The coded gaze of those who have the power to mold artificial intelligence makes non-conforming entities hyper-visible and self-conscious.

Designing queer-identifiable biometric systems might further deepen and justify injustice

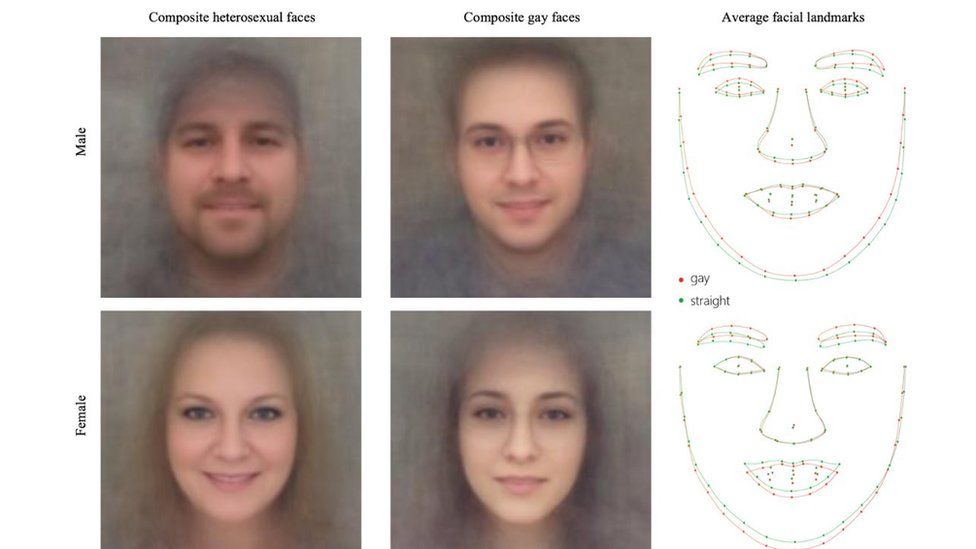

Will the normative power pressed on queer bodies be alleviated if identification systems take non-binary groups into account? Will minoritarian groups be less vulnerable if a new biometric technology is designed to detect and categorize them precisely? Computable queer data could instead be used against queer groups and leave them even more exposed. Authoritarian governments, personal adversaries, and predatory companies could use the data to control, alienate, and market to these groups, or to build further predictive models that work to their own advantage.

In 2017, a Stanford University study claimed that an algorithm could accurately distinguish between gay and straight men 81 percent of the time based on headshots. The study used people’s online dating photos and tested the algorithm only on white users, claiming that not enough people of color could be found (Samuel, 2019). The algorithm was criticized for the binary view of sexuality on which it was built, but a greater worry was that this “algorithmic gaydar” would make gay communities an easy target for anti-gay governments, or serve a brutal regime’s efforts to identify and persecute people it believed to be gay. Sarah Myers West, one of the authors of the AI Now report, stated that algorithms like the “algorithmic gaydar” are based on pseudoscience, and that studying physical appearance as a proxy for character is reminiscent of the dark history of “race science.” “We see these systems replicating patterns of race and gender bias in ways that may deepen and actually justify injustice,” warned Kate Crawford, a co-author of the report, noting that facial recognition services have been shown to ascribe more negative emotions (like anger) to black people than to white people because human bias creeps into the training data (Samuel, 2019).

How do we address the “failed” queer body and its illegibility within the binary cistems of surveillance when the power matrix does not work entirely to its disadvantage? How do we contest repressive orders and picture other futures where more fluid and inclusive expressions of identity can coexist? Research scientist Meredith Whittaker thinks these biased algorithms should be abandoned rather than fixed. She also raises several pointed questions: “We need to look beyond technical fixes for social problems. We need to ask: Who has power? Who is harmed? Who benefits? And ultimately, who gets to decide how these tools are built and which purposes they serve?”

In an ideal world, queer groups could design their own biometric systems, at a technical and global scale, that resist the surveillance and standardized identification of emerging neoliberal technologies. However, changing the existing models of algorithmic governance means challenging the power apparatus and societies of control, which would not be easy. How can we work around the current binary identificatory regimes and still build meaningful resistance? Can we look at our “failed” queer bodies from a different perspective and use them to gain autonomy and empowerment?

The "gaydar" by Stanford University

An alternative approach: reading digital failure as "unbecoming" good data and talking back

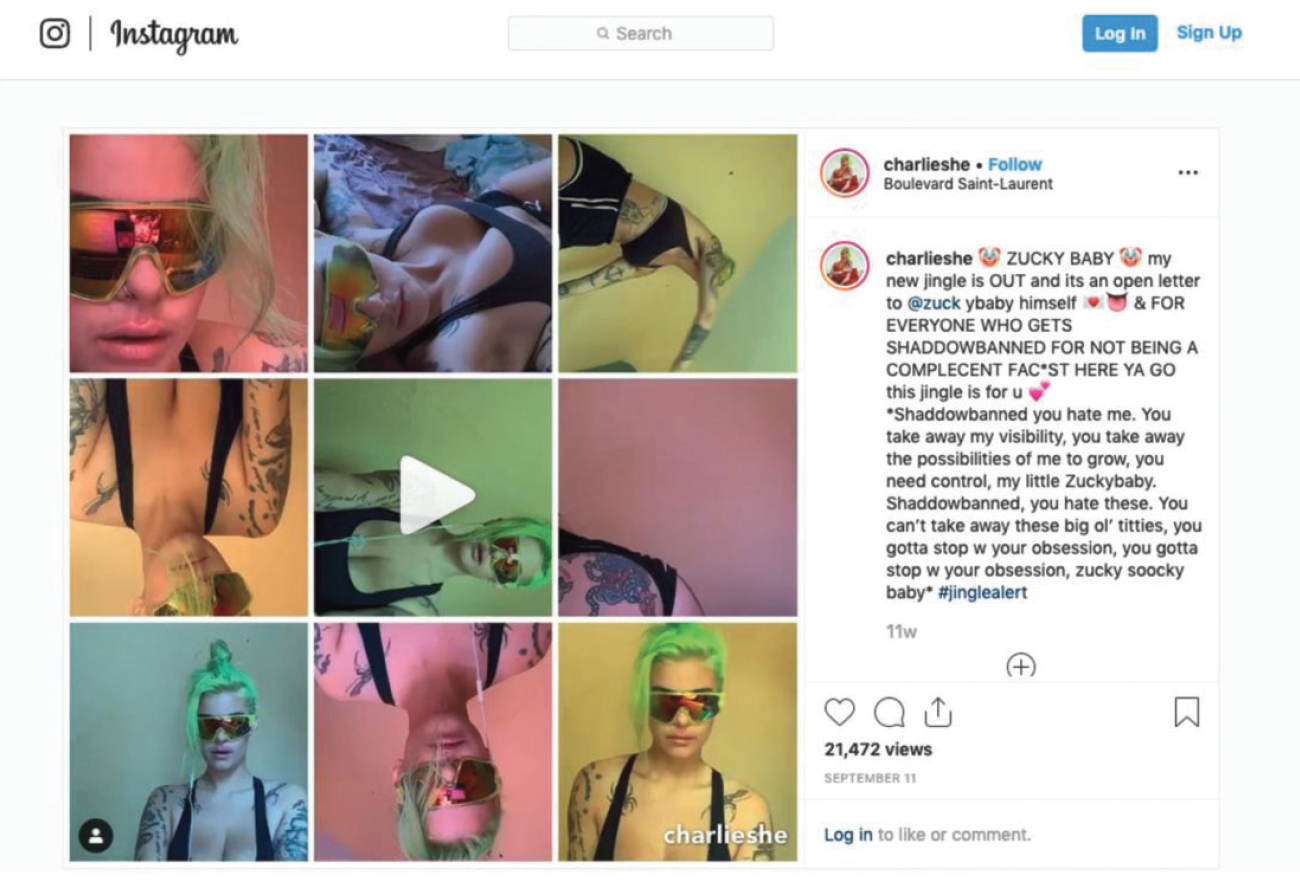

Drawing on Haraway, Bridges argues that digital failure is a process of unbecoming a “good” data subject that challenges data’s “God’s eye view” (Haraway, 1988) and pushes past the margins of legibility, knowability, and thinkability to interrogate what is made illegible, unknowable, and unthinkable to data’s seeing eye. Bridges adds, “Digital failure centers an ontology of difference and unbecoming the data subject through entropic, fugitive and queer data, and offers pleasure and playfulness that toys with its panoptic watchers, and ‘talk back’ with teasing irreverence at the algorithm from the subjugated position” (Bridges, 2021). @Charlieshe, a queer Instagram influencer, talks back at the damaging effects of shadowbanning on marginalized communities through playful and erotic posts that speak directly to content moderators and platform owners. She reads Instagram for the inequities in its content moderation, which categorizes queer bodies as sexual and often leads to take-downs and shadowbanning. For example, she posted a song in response to shadowbanning dedicated to “Zucky baby,” a diminutive nickname for Mark Zuckerberg, the founder of Facebook and owner of Instagram.

Reading these moments of failure as cybernetic ruptures, where preexisting biases and structural flaws make themselves known (Bridges, 2021), rather than trying to fix the flawed systems of governance, is a smart alternative way to speak back to our digitally captured selves, negotiate directly with the powerful, and open up possibilities for nonnormative creativity and play.

@CharlieShe posted a song in response to shadowbanning dedicated to “Zucky baby”

Instagram filter "bad data" by me

Bibliography

Conrad, Kathryn. 2009. Surveillance, Gender, and the Virtual Body in the Information Age. Surveillance & Society 6(4). https://doi.org/10.24908/ss.v6i4.3269

Kafer, Gary, and Daniel Grinberg. 2019. Editorial: Queer Surveillance. Surveillance & Society 17(5): 592-601.

Lyon, David. 2003. Surveillance as Social Sorting: Privacy, Risk, and Digital Discrimination. New York: Routledge.

Blas, Zach. 2013. Escaping the Face: Biometric Facial Recognition and the Facial Weaponization Suite. Media-N, Journal of the New Media Caucus 9(2). ISSN 2159-6891.

Magnet, Shoshana. 2011. When Biometrics Fail: Gender, Race, and the Technology of Identity. Durham, NC: Duke University Press.

Foucault, Michel. 1990. The History of Sexuality, Vol. 1: An Introduction. Translated by Robert Hurley. London: Penguin.

Dubrofsky, Rachel E., and Shoshana Amielle Magnet. 2015. Introduction: Feminist Surveillance Studies: Critical Interventions. In Feminist Surveillance Studies, edited by Rachel E. Dubrofsky and Shoshana Amielle Magnet.

Bridges, Lauren E. 2021. Digital Failure: Unbecoming the “Good” Data Subject through Entropic, Fugitive, and Queer Data. Big Data & Society. https://doi.org/10.1177/2053951720977882

Hodge, E., H. Hallgrímsdóttir, and M. Much. 2019. Performing Borders: Queer and Trans Experiences at the Canadian Border. The Social Sciences 8: 201.

Kornstein, Harris. 2019. Under Her Eye: Digital Drag as Obfuscation and Countersurveillance. Surveillance & Society 17(5): 681-698. https://doi.org/10.24908/ss.v17i5.12957

Urbi, J. 2018, August 13. Some transgender drivers are being kicked off Uber's app. https://www.cnbc.com/2018/08/08/transgender-uber-driver-suspended-tech-oversight-facial-recognition.html

Samuel, S. 2019, April 19. Some AI just shouldn't exist. Retrieved May 08, 2021, from https://www.vox.com/future-perfect/2019/4/19/18412674/ai-bias-facial-recognition-black-gay-transgender

Haraway, Donna. 1988. Situated Knowledges: The Science Question in Feminism and the Privilege of Partial Perspective. Feminist Studies 14(3): 575–599.