op('pantomimus')

Op(‘pantomimus’) is an immersive storytelling performance about schizophrenia, based on face projection mapping.

The entire project is made with TouchDesigner.

Produced By: Chia Yang Chang

Mask Design: Ray Zheng

Performer: Kristia Morabito

Audio: Christina Karpodini

Concept

This project is a further development of 'SPYOO', my project for the term 2 creative coding workshop. It aims to create an immersive, live storytelling performance using face hacking technology. The narrative draws on the experience of a schizophrenia patient's family, who witness the patient suffering between reality and illusion.

Pantomimus was a masked dancer who performed in the ancient Roman theatre. His performance lacked speech, and communication during the scenes was made through dance and body movements. Based on that, in this performance the audience is immersed in a story about schizophrenia, and the performer’s dance is enhanced with face hacking technology and projections.

Schizophrenia is still a very misunderstood disease; people tend to discredit patients' realities or even show no interest in understanding them. Yet it is a very sad illness that affects not only the people who have it but also all of their friends and families. Schizophrenia is a severe mental disorder in which people interpret reality abnormally. It may cause some combination of hallucinations, delusions, and extremely disordered thinking and behaviour that impairs daily functioning. People who have no experience with schizophrenia patients might think that they want attention or might be possessed. However, it is not something they can control.

The experience integrates a computational instrument with the performer, who recreates the original story through a non-speaking performance. The goal of this project is to let the audience experience the point of view of a person who is going through these moments. I want to bring awareness to the suffering of these patients and of the people around them.

Technical

Pipeline

In drawing up the pipeline for the project, I started from a small one and grew it into a larger one. Eventually, it became a collaborative project involving mask design, performance and music composition. I referenced many contemporary performance artists such as Gillian Wearing, Chris Burden and Franko B. Based on the work of these amazing artists, this project attempts to capture fear and painful images in order to raise awareness of mental disorder.

The face hacking code is referenced from DBraun’s TD-FaceCHOP for TouchDesigner. Using this code requires working through the installation of OpenCV, dlib and CMake. However, the installation of dlib and CMake did not go smoothly.

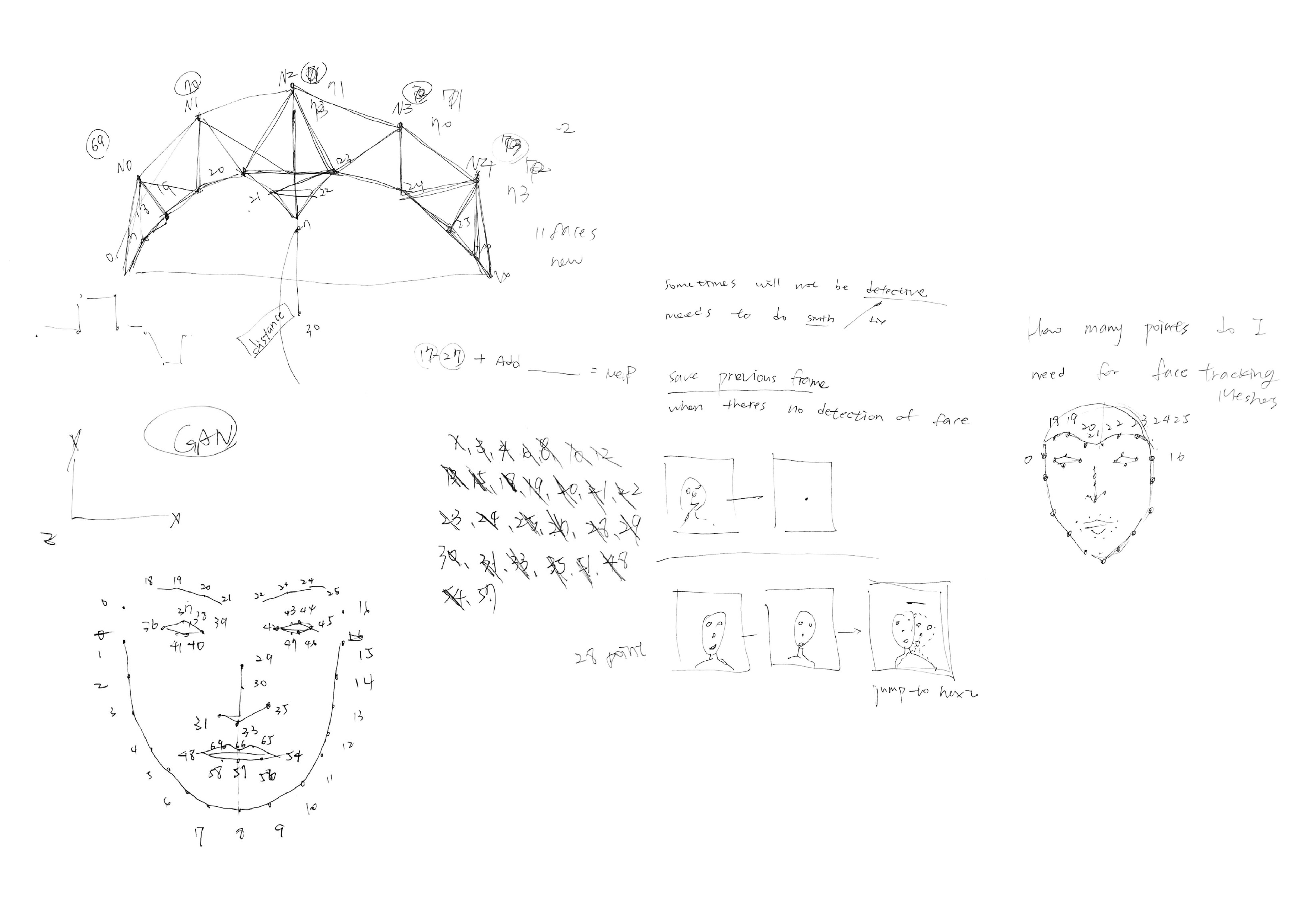

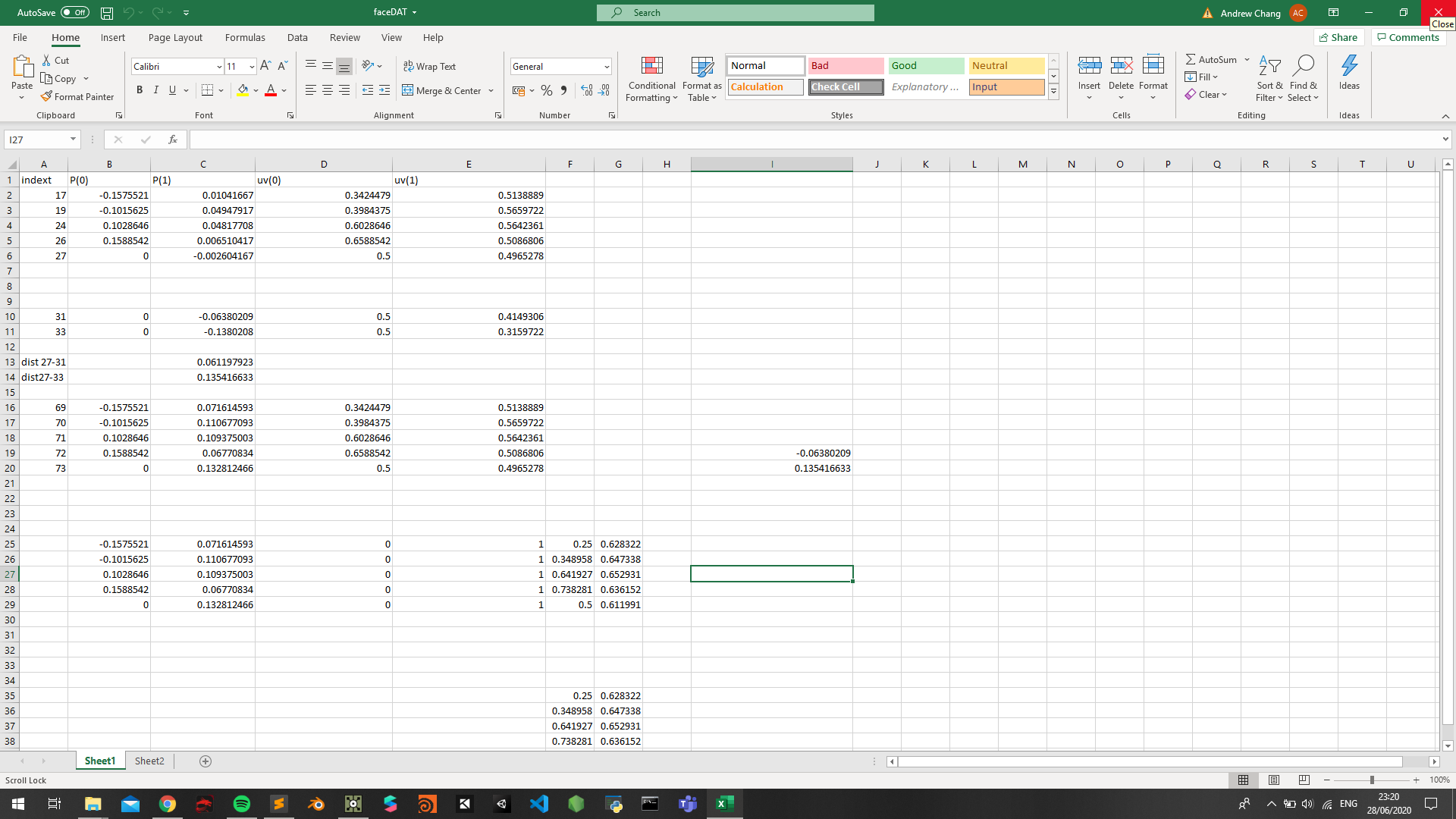

The pedromartins face tracking algorithm only supports 68 face landmark points, which run from the eyebrows to the chin. To create a bigger canvas on the face, I created a few extra points based on the original vertices and added a new ordering for the face meshes. This not only gives me more canvas to project visuals on but also makes it easier for the audience to see what is projected on the face.
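As a rough illustration of extending a 68-point landmark set, the sketch below adds ten forehead points by pushing the eyebrow points away from the chin, then lists triangles that join the eyebrows to the new points. It is not the code used in the project. The index ranges assume the common iBUG 68-point ordering, and the names `extend_landmarks`, `forehead_triangles` and the `lift` parameter are hypothetical.

```python
import math

# Index ranges follow the common iBUG 68-point layout (an assumption here).
BROW_INDICES = range(17, 27)   # both eyebrows, ten points
CHIN_INDEX = 8                 # bottom of the jawline


def extend_landmarks(points, lift=0.35):
    """Return the 68 input points plus 10 hypothetical forehead points.

    points: list of 68 (x, y) tuples.
    lift:   forehead offset as a fraction of the brow-to-chin distance.
    """
    if len(points) != 68:
        raise ValueError("expected 68 landmarks")

    brows = [points[i] for i in BROW_INDICES]
    brow_cx = sum(p[0] for p in brows) / len(brows)
    brow_cy = sum(p[1] for p in brows) / len(brows)
    chin_x, chin_y = points[CHIN_INDEX]

    # "Up" is the direction from the chin to the brow centre, so the
    # extra points follow the head when it tilts.
    dx, dy = brow_cx - chin_x, brow_cy - chin_y
    height = math.hypot(dx, dy)
    if height == 0:
        raise ValueError("degenerate face: chin and brows coincide")
    ux, uy = dx / height, dy / height

    offset = lift * height
    forehead = [(x + ux * offset, y + uy * offset) for x, y in brows]
    return list(points) + forehead   # new points get indices 68..77


def forehead_triangles():
    """Index triples for a triangle strip between brows and forehead."""
    triangles = []
    for i in range(9):
        brow_a, brow_b = 17 + i, 18 + i
        top_a, top_b = 68 + i, 69 + i
        triangles.append((brow_a, brow_b, top_a))
        triangles.append((brow_b, top_b, top_a))
    return triangles
```

Appending the new points after index 67 leaves the original ordering untouched, so any mesh that already references the 68 tracked points keeps working and only the forehead strip needs new triangle indices.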

Visual

The visuals in this project reference several face projection mapping projects and surrealist artists. There are five phases of visuals, based on the different feelings the character goes through, which build up the emotion of the narrative.

1. Opening – Telling the audience to prepare for the show and briefly showing a few images.

2. Refraction – Refracting the true feelings of the patient, showing the other side of them.

3. Projection – Starting to project others onto themselves.

4. Madness – Out of control, having a delusion.

5. Confusion – Feeling stable sometimes but still getting confused between reality and illusion.

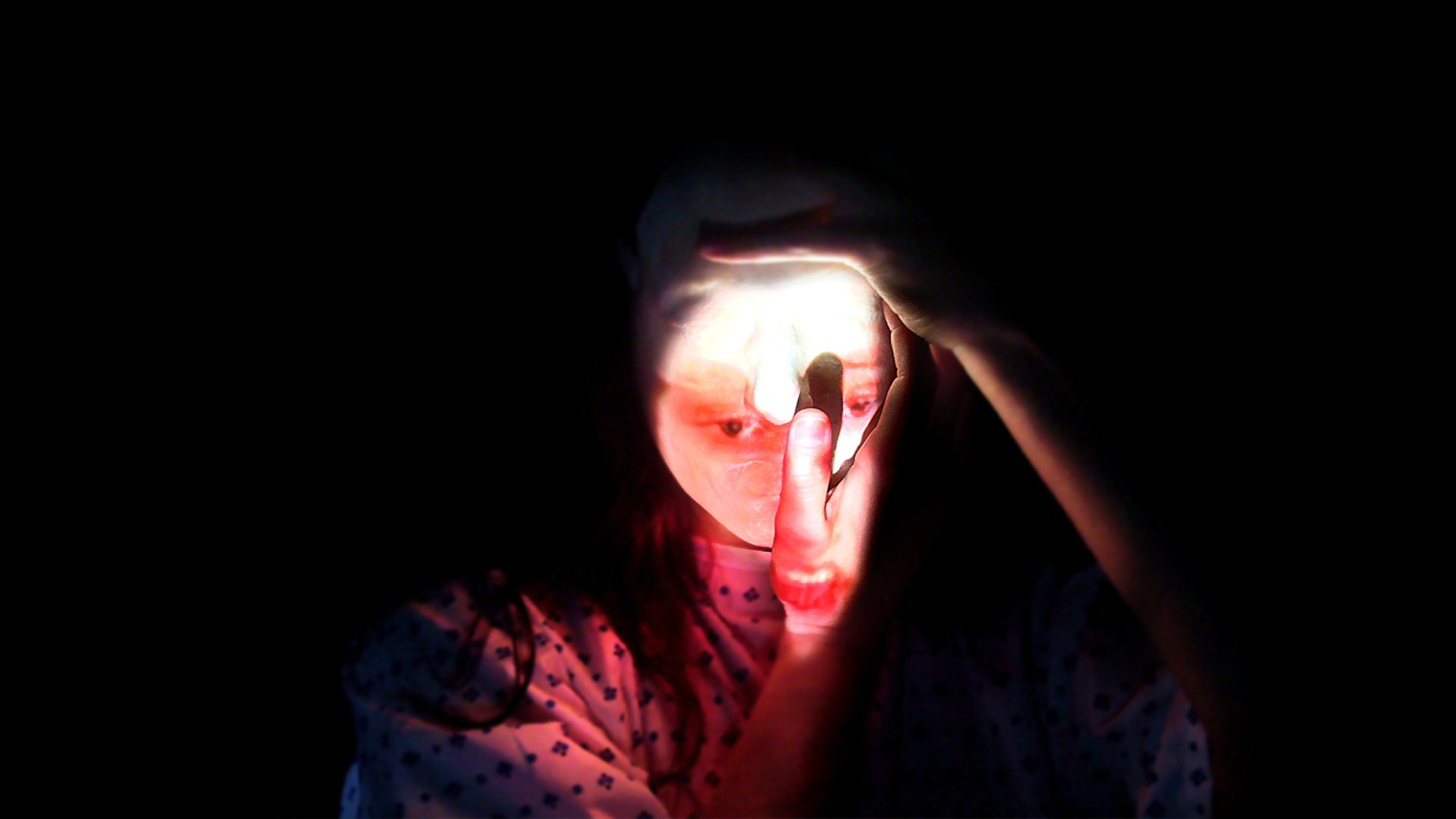

The visual scenes are based on my experience of seeing the patient through all the different phases of the symptoms. For the Refraction part, I chose a more harmonious way to express what is hidden behind the face. In this effect, you can see many combinations of different expressions refracting, which creates a more surrealistic image of the illusion of feelings.

Moving on to the Projection part, I used images from Generated Photos, which uses AI to generate human faces. I then used these images as input data for StyleGAN in RunwayML, together with my performer's face. This presents the patient using their own perspective to project other people's identities onto themselves. These fake faces therefore express how they twist the truth into their imagination and pretend to have the perfect life that they think others have.

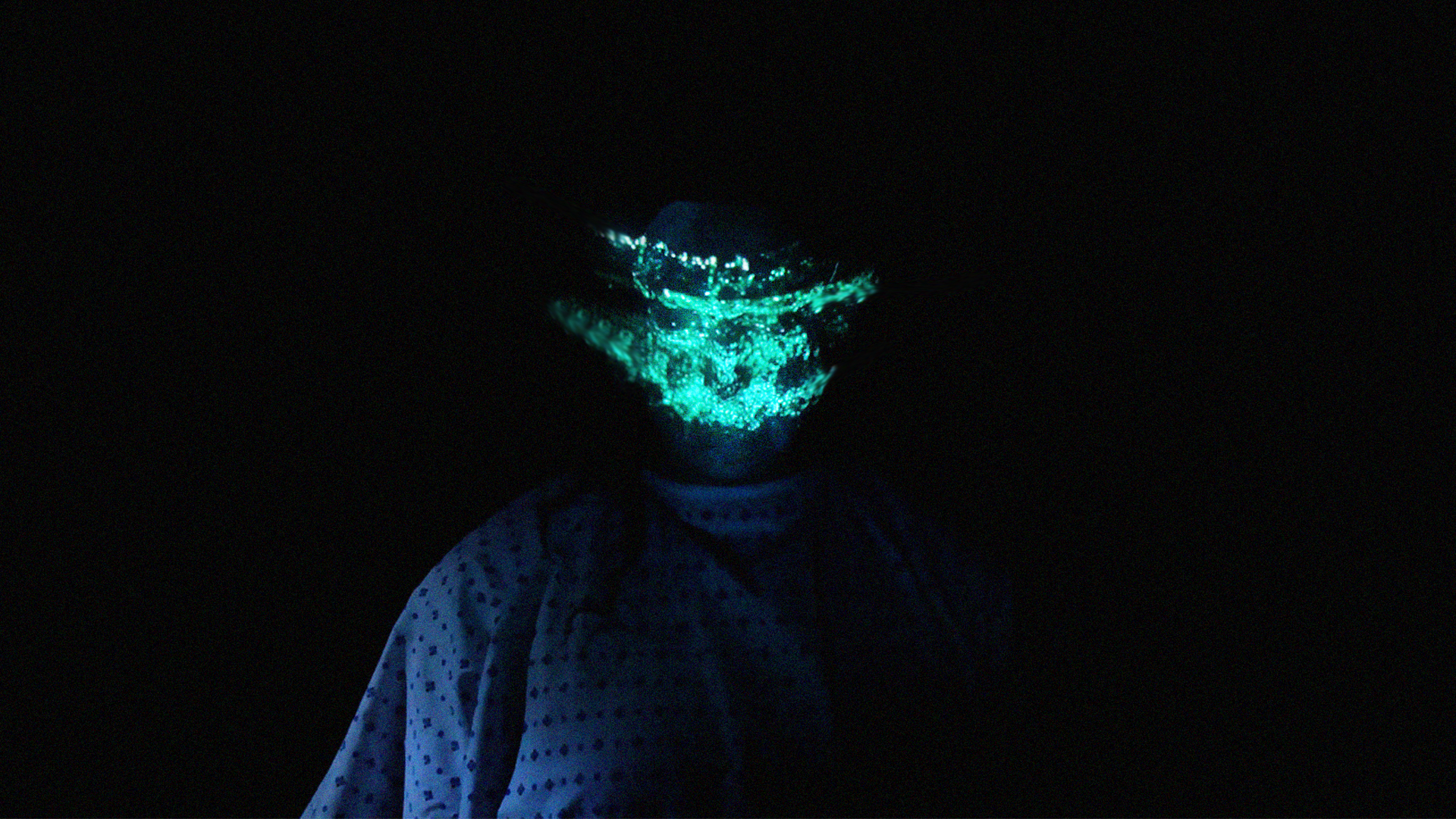

The Madness part tries to recreate the paranoia they feel when the symptoms get worse. They suffer from losing control of their thoughts and even from hallucinations, hearing voices that are not real. In many of the scenes, I used a black jelly material effect to present the patient's fear. During the performance, the black parts not only give the audience a scary feeling but also show that the patient is scared and afraid of losing control.

The last part, Confusion, illustrates the most painful process that I saw in the patient. Sometimes the patient becomes more stable when they take their pills on time. However, even with pills they can still lose control and go back to the madness. They know there is something wrong with them, but they just cannot control it.

The images of a face covered by hands, as a protective mechanism, show that we all want to hide our true feelings. Yet even when covered by hands, the lunatic expression is still exposed to the public.

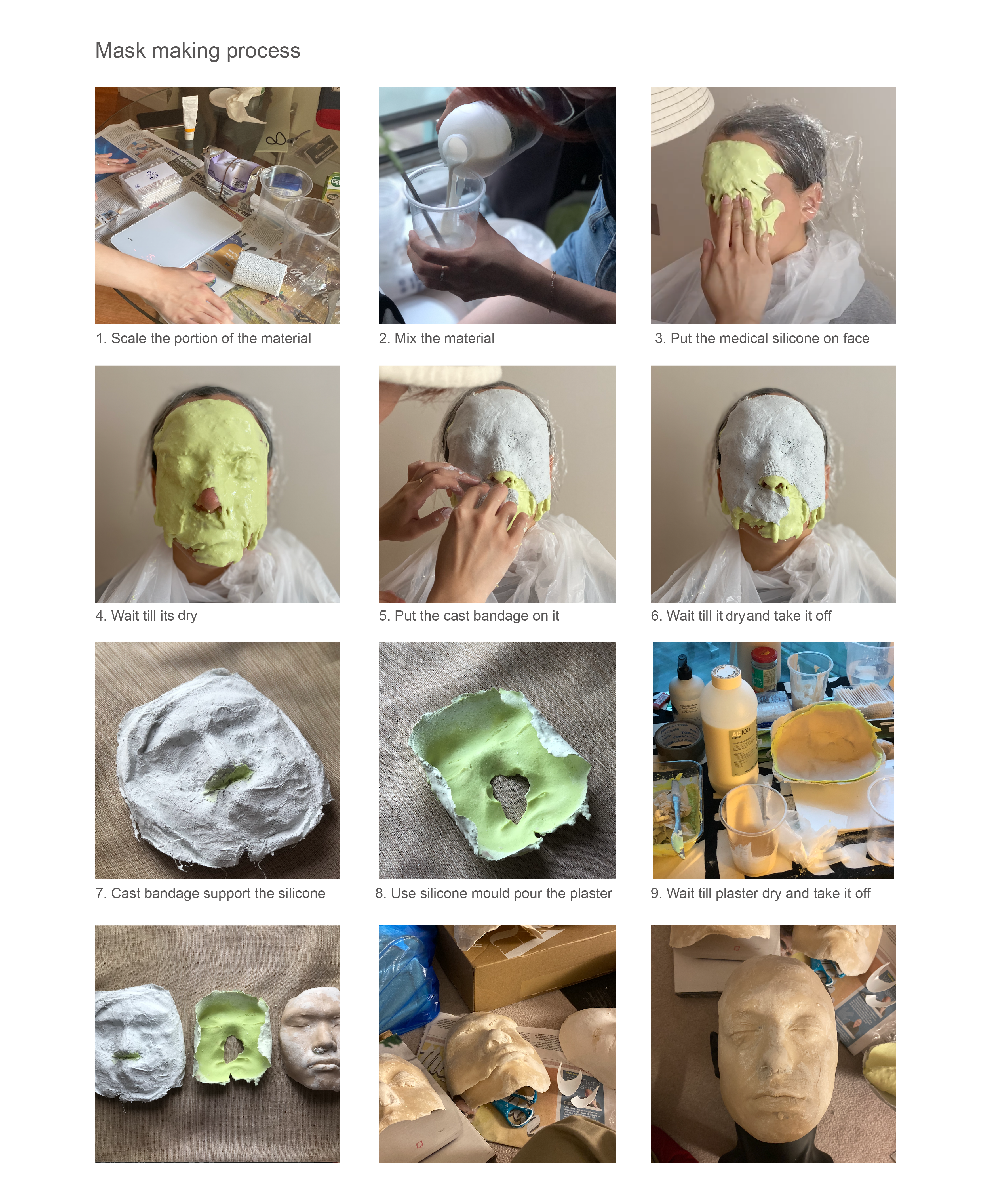

2. Mask Design

The mask uses different visual convergences and materials to develop the characteristics of the main role, who suffers from disorders and delusions, in a mechanical vision. The components of this role were extracted and revised through testing into several versions.

The portrayal that we set first is mainly of a mental illness patient with abnormal behaviour. Artificial treatment can sometimes be cold, but humans hold temperature, or warmth, both physically and mentally, which means the outfit should work with the natural body itself to resonate with the audience. Also, because her personal ideation is influenced by others' expectations and social norms, the face mask is designed as a platform on which to project those conflicting gears. Therefore, the performer's face is replicated by moulding, preserving details as an artificial shell but leaving the true self vague, wrapped, even breathless.

3. Choreography

To make the process more flexible, my performer and I chose to combine choreography and improvisation. To start, I introduced the narrative of the character to the performer. Then the performer started to project the character onto herself and improvised based on the references we found. Afterwards, we looked back at some recordings and discussed which parts of the performance fit the effects and the narrative.

This performance is a challenge for my performer. During the show she has to wear the mask that we made, so she cannot see, and she has to keep her face towards the Kinect and her feet fixed in a specific spot. This means that she can only react to the audio and to her memory of the visuals.

The strap that fixes the mask is a bit tight and hurts her face a little.

For rehearsals, we used Zoom meetings to rehearse remotely. To let the performer know which visuals would be projected and how the face hacking works, I screen-grabbed the Zoom meeting window, used it as an input to track her face, applied the filter to her, and sent the output back to Zoom via Spout.

4. Audio

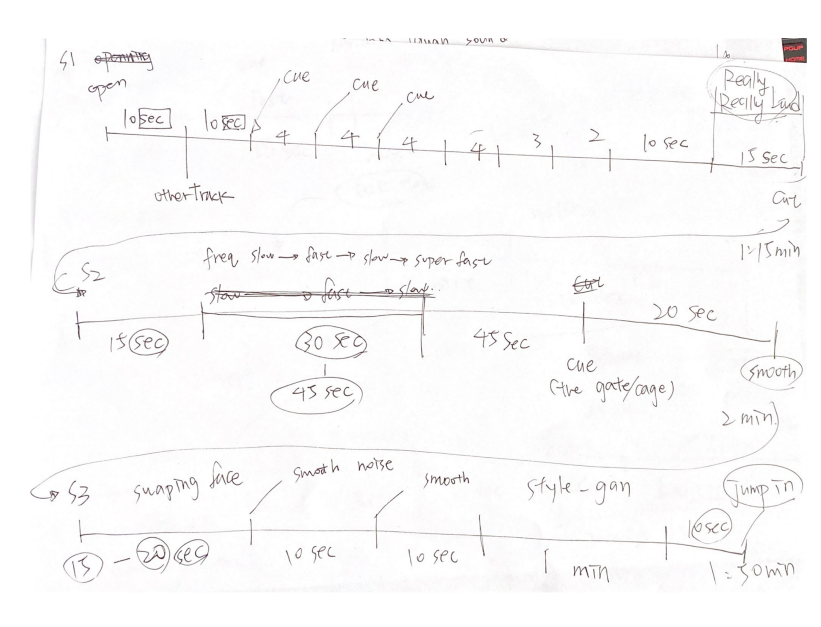

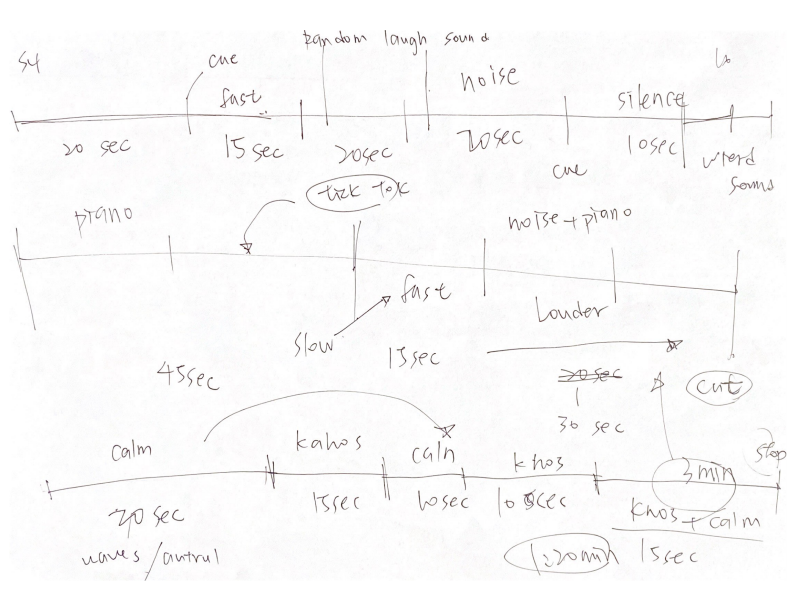

I lack experience in music composition, so I found many audio references, such as 404.zero, and many different audio samples. I wrote a draft of the timeline, the cues and the transitions between scenes for the music composer.

Matching the visuals with the audio and piecing the different parts together was also difficult.

I kept testing different visual effects and images against audio with the feeling I was looking for, then sent them to the music composer. Based on the references we gathered, the visuals and the timeline draft, the music composer created audio that fits the narrative. For some parts of the music, I gave her the concept of what the visuals might be and what I wanted, and she created the audio first; I then created the images based on the audio.

Difficulty

Many effects can be added through 3D models and 2D images, but one of the tricky parts is the computational expense. Face tracking is extremely demanding on the GPU. Originally, I wanted to create fully real-time rendered face hacking visuals. However, for the stability of the whole show and the frame rate, I chose to make half of the effects real-time and half of the visuals pre-rendered.

Even so, all of these visual effects are still combined with the real-time performance.

Mask and the computer vision

The trickiest part is making sure the computer can still recognise the mask as a human face. I tried many devices and illuminators, but the algorithm that I used is still not very sensitive in detection. We made many versions of the mask, but some ideas were cancelled because of detection problems.

Equipment testing

Two critical parts of this project are the IR camera and the projector. I tested several pieces of equipment, such as the RealSense F200 and D435 and the Kinect V2, to see which works best in the dark.

The RealSense can actually get clearer images in the dark, and it works really well with the infrared illuminator. However, I found two weird things about the RealSense D435 and the Kinect V2.

The D435 is designed for motion capture and depth images, so when I turned on the infrared function, it emitted many dots that interfered with the image. To capture clearer images in the dark it also picks up visible light, which is a big problem when projecting onto the face.

The Kinect V2 works perfectly, but it only picks up the infrared light from the Kinect itself and is not affected by the illuminator that I use. This means the subject needs to be closer to the device to be detected.

Further Development

In this project, I found it really fascinating to see how technology gives a more immersive experience to live performance. Face hacking creates more dynamic expression for the audience. It brings the big white screen onto the performer’s face, so that people can tell the character’s story through their face.

Meanwhile, I am collaborating with a fashion photographer who is experimenting with face projection mapping on a model for a collection. This project uses 2D UV mapping for the textures of most visuals. However, I would love to move to the next level with 3D tracking; I have already created some 3D face rigs to experiment with 3D facial motion capture through the FaceTraker. I would also like to try different tools for face hacking, such as SparkAR, Blender, Unity and Unreal4.

Self Evaluation

Overall, I learned so much from this project. I have been planning it for over six months. I am really glad that I could develop the beta version from last term's project into an immersive performance.

Looking through many face hacking projects, most of them involve many people and take more than six months to develop. Therefore, I am really happy that I could finish this giant project, which people thought was too big to finish before the degree show.

Telling a story within 12 minutes is difficult, but I am glad that most of the audience could feel what the patient suffers from and the experience that I had.

I cried during the rehearsal. For me, this project is not only a demonstration of the skills we learned but also therapy. I used to misunderstand the patient and be impatient with them. I have seen how all of my family has suffered from this mental disease. I used to be ashamed of telling this story. Now, I am glad that it is part of my life and I am willing to share it with others.

Reference

DBraun - TD-FaceCHOP

https://github.com/DBraun/TD-FaceCHOP

DBraun - PhaserCHOP

https://github.com/DBraun/PhaserCHOP

Generated Photo

https://generated.photos/

Paul Lacroix

http://paul-lacroix.com/

Paul Lacroix - PARTED - Body Tracking and Projection Mapping

https://vimeo.com/416554307

Paul Lacroix - EXISDANCE - Real Time Tracking & Projection Mapping

https://www.pics.tokyo/en/works/exisdance

WOW|INORI - PRAYER-

https://www.youtube.com/watch?v=3Aos1Z2htDU&list=PLUYQlc8Q_-GObge0Z5TRtfniUeMyYO4DU&index=5

Kat Von D - Live Face Projection Mapping

https://www.youtube.com/watch?v=KYbI6gIDnKQ

Chris Burden - Shot

https://www.youtube.com/watch?v=drZIWs3Dl1k

Franko B - Aktion 398

http://www.franko-b.com/Aktion_398.html

Gillian Wearing

http://www.artnet.com/artists/gillian-wearing/

Special Thanks

I really appreciate having so many friends help with this project.

This is not a one-man project, but a team project. Without my incredible team helping me, it would have just become a light show.

Thank you Ray for helping me so much and suffering a lot for this project, and do not forget the drama we had.

Thank you Kristia for always being so kind and patient with me. It is such an honour to work with you. You are the best dancer that I have ever had.

Thank you Christina for bearing with me so much. I am terrible at expressing the concepts that I want, but you can always make incredible audio that is exactly what is in my mind.

Thank you Rob for filming this project. I cannot imagine what my video would have become without you. "You saved my life. I am eternally grateful."

Thank you Clem for introducing me to such an amazing performer. You are always the role model that I keep learning from.

Thanks to my sister Melody, who inspired me throughout the whole project and made me the person I am now.

Thanks to my parents, I love you both. But mom, please do not be too whiny. I still love you.