Networked Veil

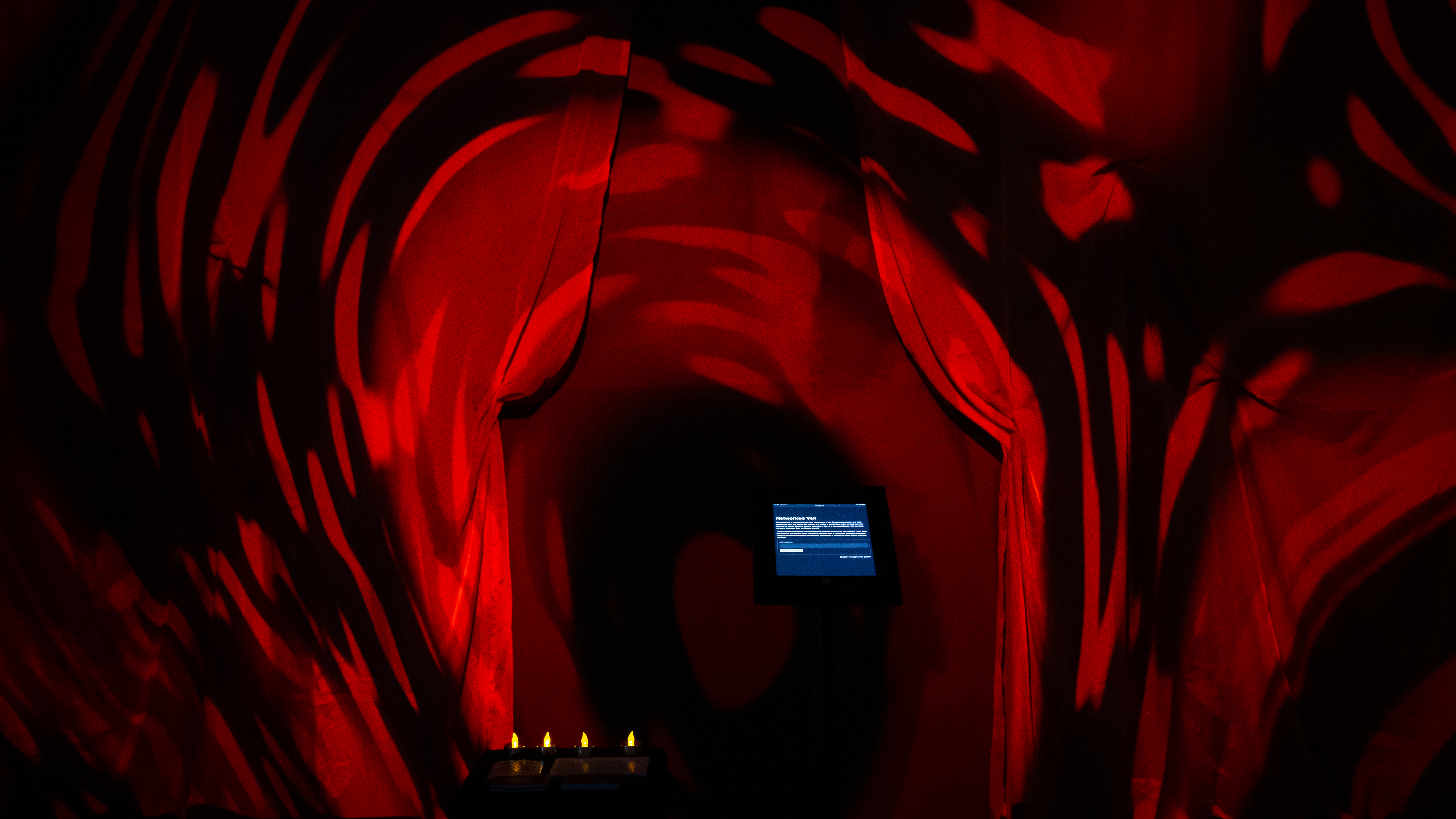

Networked Veil is an interactive projection which creates new digital landscapes based on the emotional content of textual messages on grief.

produced by: Ana Catarina Rodrigues Macedo

Introduction

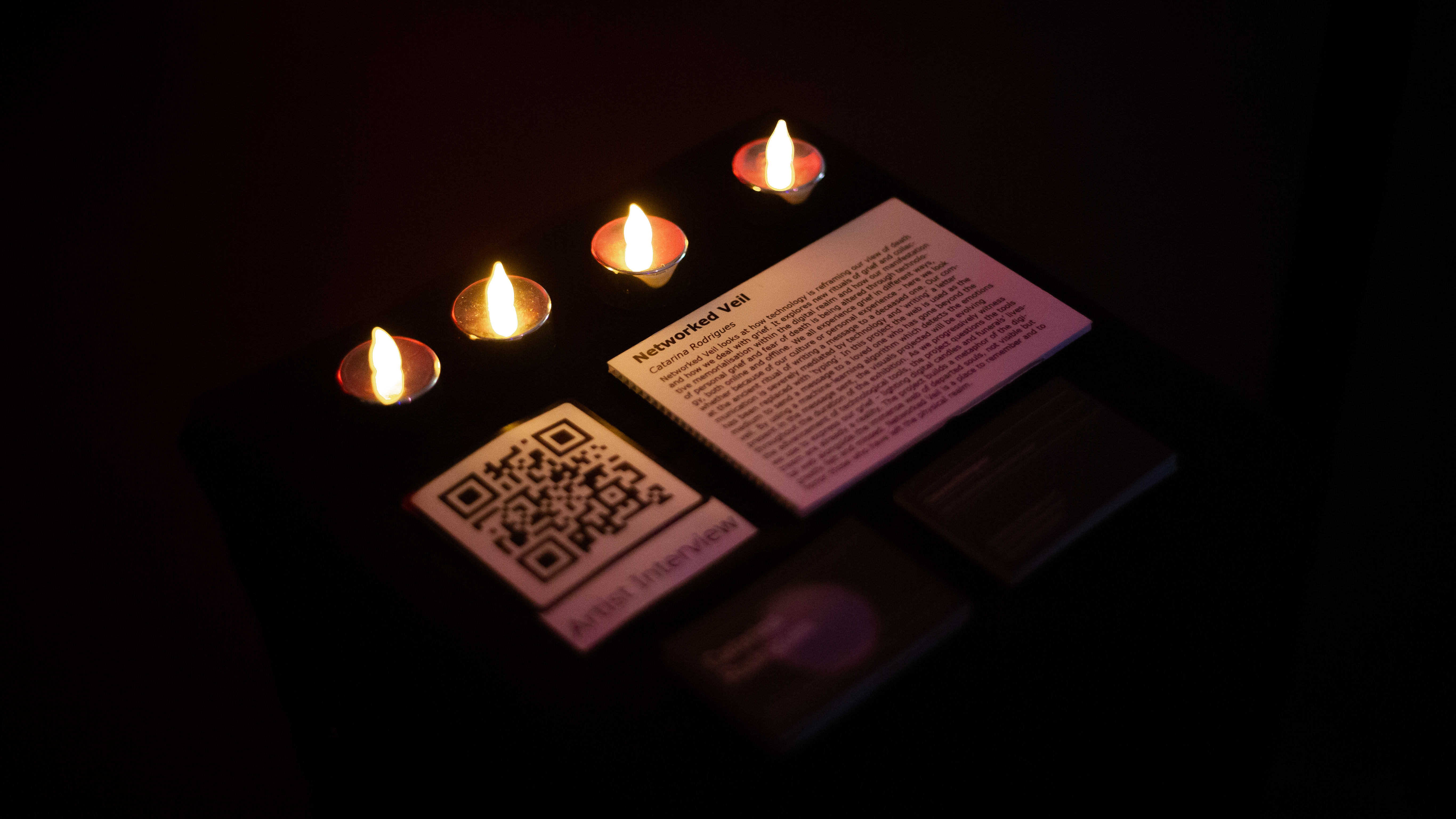

The Networked Veil project looks at how technology is reframing our view of death and how we deal with grief. The public is invited to send a message to a deceased loved one, which triggers a particular visual related to the emotions found in the message. As grief encompasses many emotional stages, a machine-learning Python library is used to identify the emotions present in the text message and their scores. The projection gathers all our tributes and memories, so it never looks the same over the duration of the exhibition. In memory of all it was and what will never be.

Concept and background research

For this project, I was drawn to new rituals of grief and collective memorialisation within the digital realm, and to how technology is altering the way we manifest personal grief and fear of death. In Networked Veil we look at the ancient ritual of writing a message to a deceased loved one. Written text is one of the many ways in which we give physical expression to what we feel when we grieve. Our communication is heavily mediated by technology, and ‘writing a letter’ has been replaced with ‘typing’. In this project I use that action to send a message. As we progressively witness the advancement of technological tools, I question what tools we will use to express our grief – lighting digital candles and funeral live streams are already a reality.

The emotional energy current can feel fluid and spacious like in a deep state of meditation bliss of equanimity. The emotional energy vibration can be like ripples on a lake, not disturbing, but feeling sensations nonetheless present. The emotional energy vibration can be like a torrent river in a state of panic or fear. The emotional energy vibration can be like earthquake tremors such as in a state of anxiety. The emotional energy vibration can be intensifying charged current like in the state of anger. Emotional energy can be like a tsunami wave that hits you with full force like in the state of grief pulling you down and under. Shawna Freshwater

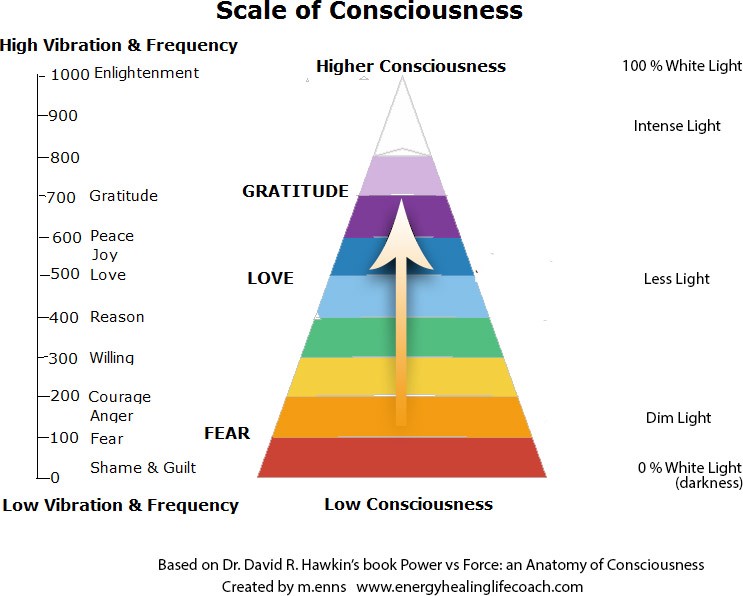

I wanted to build a metaphor linking the digital web with the immense web of departed souls, not visible but greatly felt and missed. In this interactive projection, a new digital landscape is created using the emotions found in a text message sent by the viewer. The colours that we see in the projection are connected to the emotions. For this, I drew inspiration from the emotional frequencies on the scale of consciousness. As we can see in the image below, the highest-frequency emotion is joy, associated with violet hues, and the lowest is sadness, associated with red. This was the most accurate way of mapping the emotion input to the colour parameters, as it is intrinsically related to how we sense emotions in our body - joy and gratefulness bring us high waves of energy, whereas sadness leaves us depleted of energy and motivation.

Image: Paintings by Ana Montiel

The visuals are inspired by fluidity and by how people often say that grief sometimes feels like an ocean. As grief is associated with water (the emotional world) and is always moving, either backwards or forwards (like a wave), I wanted to bring a sense of continuous movement to the projected visuals. Besides that, some elements of the visuals, such as noise, speed and sine wave, change according to the emotion scores. I want the piece to keep evolving, so it never looks the same. I was inspired by the works of Ana Montiel, whose paintings have the fluidity and colour scheme I was looking for.

Image: Paintings by Ana Montiel

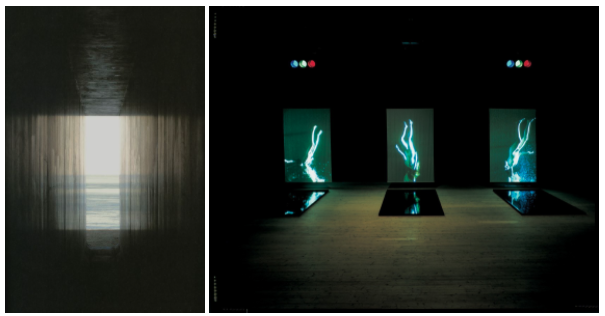

The title Networked Veil is inspired by the expression “beyond the veil”, which refers to the unknown state of life after death. I wanted to bring the idea of a veil into the visuals, so I decided to project onto a set of two curtains. They are semi-transparent and are set up to resemble the curtains we find in a window. The fragility of the fabric and the way the visuals become fragmented on the curtains relate to how our memories become fragmented over time when we grieve. Hiroshi Sugimoto's Go'o Shrine, on the Japanese island of Naoshima, inspired me to look at a landscape through fabric and to reflect on what’s visible or invisible. Additionally, Bill Viola’s work inspired me, as the artist deals with similar subject matter.

Image: (left) Go'o Shrine-Hiroshi Sugimoto; (right) Bill Viola

Our messages and memory will forever live in bits and pixels. As Sherry Turkle states "(we are) wired into existence through technology" - but what happens when our "offline existence" vanishes? And how do we witness these events through technology? New forms of consciousness and avenues of connectivity are being established with online memorials as the Internet allows people to find new means to maintain relationships with the departed, which feeds into the idea of the Internet as a “techno-spiritual system”.

One of my intentions is to entice viewers into a self-reflective state – how does it feel when they are writing and sending the message? Fake, emotional, indifferent? How does it feel to see their text input become part of the projection?

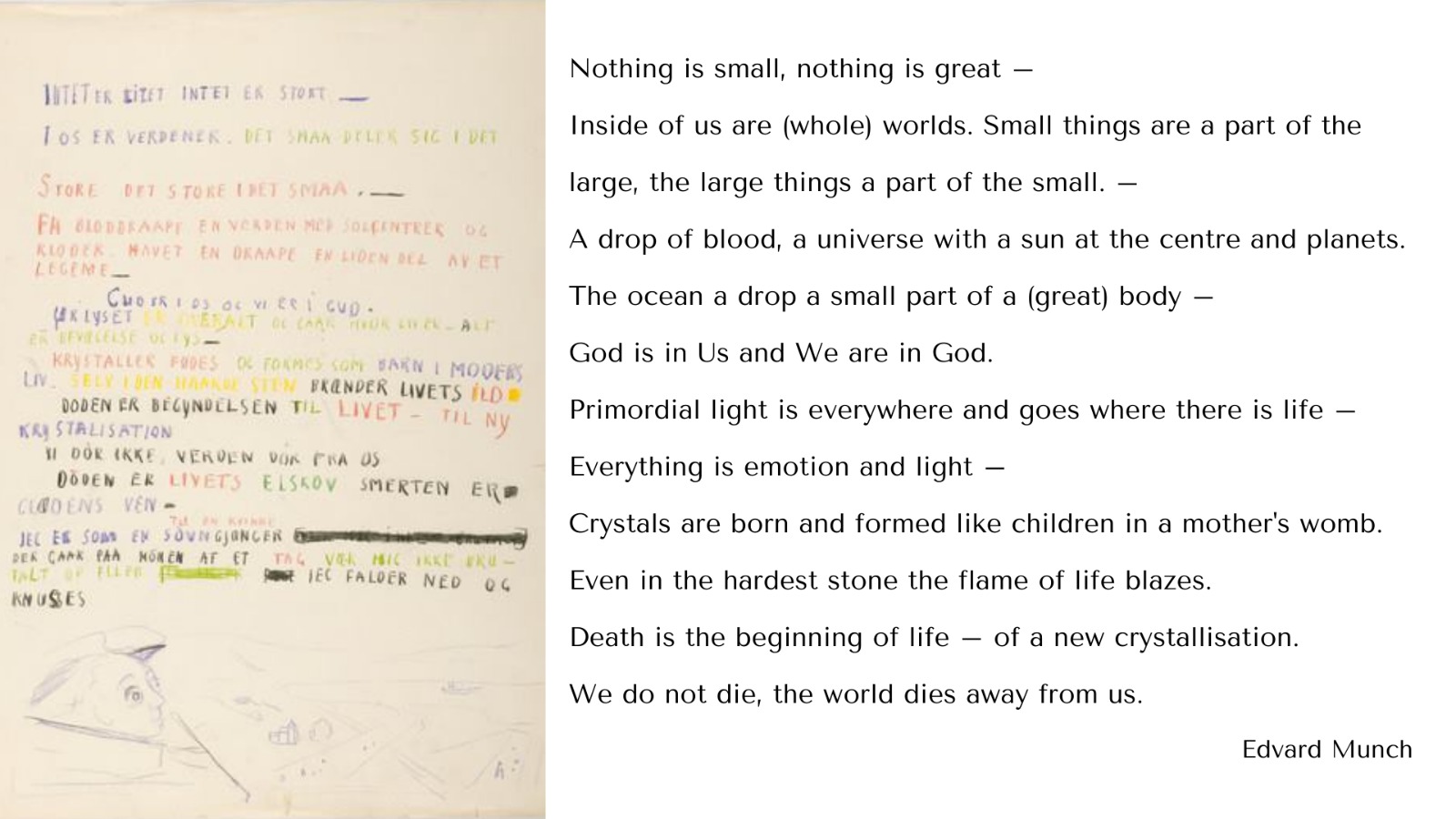

I was very inspired by the work of Carl Jung, who talks about accepting mortality and grief as an initiation into the self, and about the growth we can achieve when we embrace grief. Finally, the text that made me embark on this project was by the painter Edvard Munch, who says “everything is emotion and light”. This inspired me to work on an interactive projection affected by emotion, and therefore light.

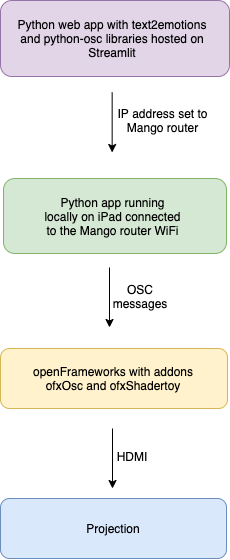

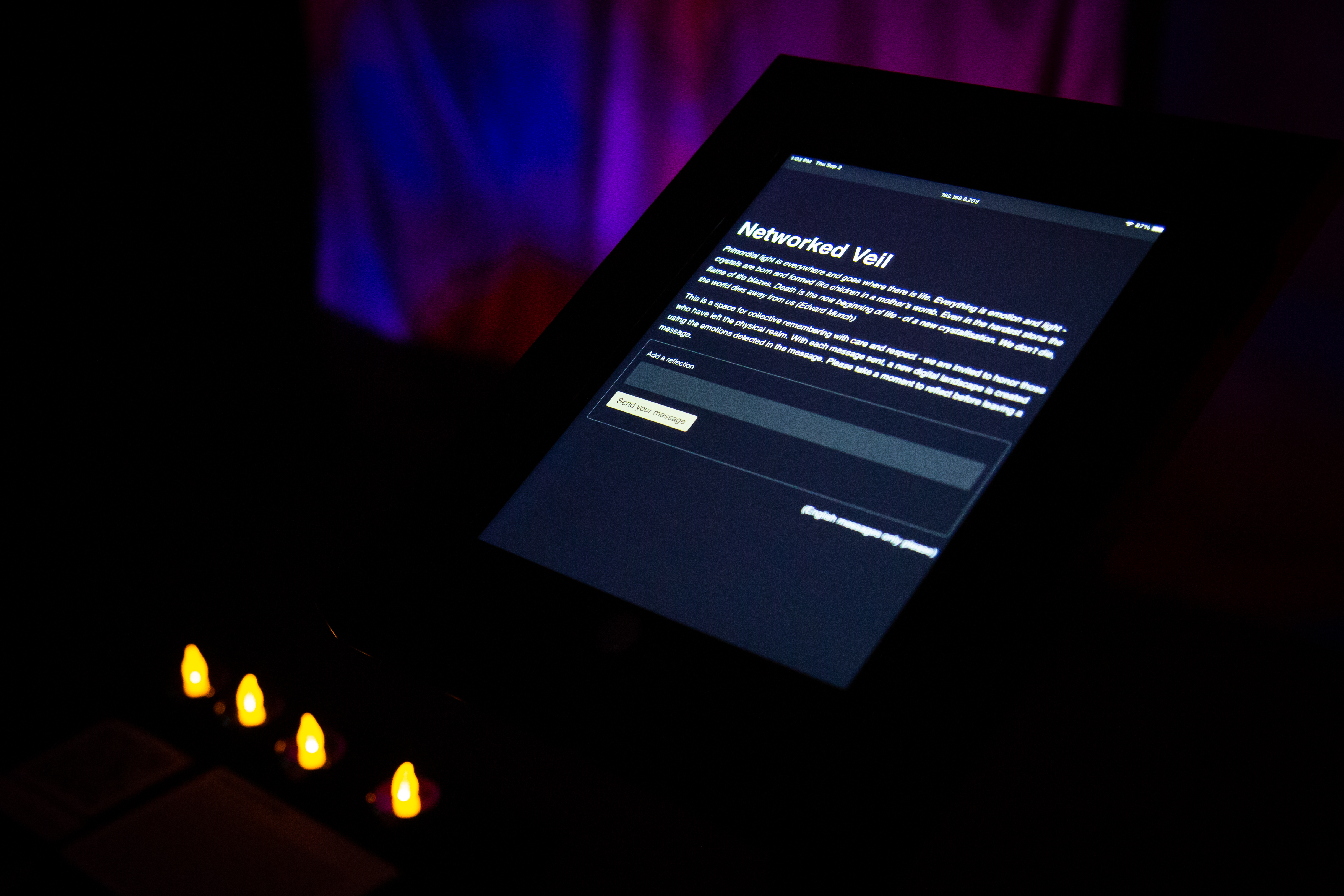

Technical

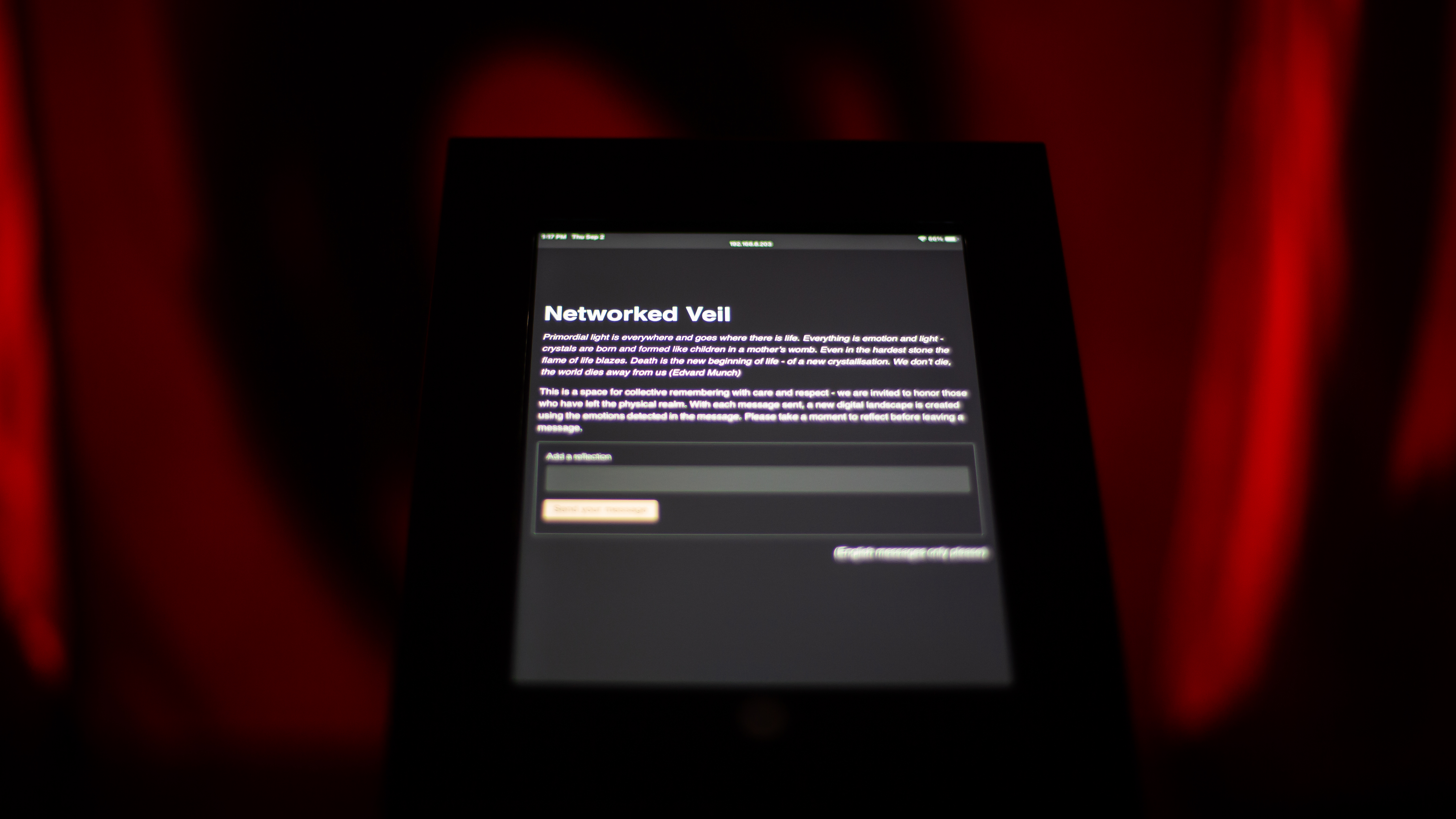

The first part of the project consists of a web app built around a Python library named text2emotion, which processes textual data, recognises the emotions embedded in it, and provides the output in the form of a dictionary. It recognises five emotion categories: Happy, Angry, Sad, Surprise and Fear. As I wanted to create a web app with a text input, I decided to use Streamlit, an open-source Python framework for building web apps for machine learning and data projects, which enabled me to build a custom web app using Python. The goal was for the public to write a message and then send it. The viewer accesses the app on an iPad, and when a message is sent, the emotion categories and their scores (between 0 and 1) are sent to openFrameworks via OSC messages. I used the Python library python-osc to implement the client and send the messages, and the addon ofxOsc to receive them in openFrameworks. To avoid connectivity issues, I used a GL.iNet GL-MT300N-V2 router, which created a guest WiFi network and allowed me to connect the iMac (where the web app was running locally from Visual Studio Code) and the iPad (where the user could access the web app in the browser) to the same network.
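
The hand-off from text2emotion to OSC described above could look roughly like the sketch below. It is not the project's actual code: the `/emotion/<name>` address pattern, the host and the port are assumptions made for illustration, and the helper names are hypothetical. Only `te.get_emotion` and `SimpleUDPClient.send_message` are real library calls.

```python
from typing import Dict, List, Tuple

# The five categories reported by text2emotion, as listed in the text above.
EMOTIONS = ("Happy", "Angry", "Surprise", "Sad", "Fear")


def to_osc_messages(scores: Dict[str, float]) -> List[Tuple[str, float]]:
    """Turn an emotion dictionary into (address, value) pairs.

    The "/emotion/<name>" address pattern is hypothetical.
    """
    messages = []
    for name in EMOTIONS:
        value = float(scores.get(name, 0.0))
        # Clamp defensively so the receiver can rely on the 0..1 range.
        value = min(1.0, max(0.0, value))
        messages.append((f"/emotion/{name.lower()}", value))
    return messages


def send_scores(scores: Dict[str, float],
                host: str = "127.0.0.1", port: int = 9000) -> None:
    """Send each score as one OSC message (host and port are placeholders)."""
    # Imported lazily so to_osc_messages works without python-osc installed.
    from pythonosc.udp_client import SimpleUDPClient

    client = SimpleUDPClient(host, port)
    for address, value in to_osc_messages(scores):
        client.send_message(address, value)


def analyse_and_send(message: str) -> Dict[str, float]:
    """Detect emotions in a message with text2emotion and forward them."""
    import text2emotion as te  # third-party; returns a dict keyed by emotion

    scores = te.get_emotion(message)
    send_scores(scores)
    return scores
```

In a Streamlit script, a function like `analyse_and_send` would typically be called when the viewer presses the send button. Sending one message per emotion keeps the receiving side simple, although a single message carrying all five values would work as well.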

Image: iPad screenshot of the web app

Once the emotion categories and their scores arrive via OSC, the openFrameworks app processes them and creates a new digital landscape with each message sent. The app uses the addon ofxShadertoy, which sets up and loads shaders from Shadertoy. I decided to use shaders as the main visual component as I’ve always been drawn to OpenGL and thought this project would be the best way to further develop my shader skills. Additionally, working with shaders allowed me to achieve the aesthetic I was looking for: wavy, fluid-like lines, spirals and curves. On Shadertoy I found a few interesting examples and built my own shaders from there.
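
For readers curious about what actually travels between the web app and openFrameworks, the following sketch shows the byte layout of an OSC message carrying a single float, following the OSC 1.0 encoding rules. python-osc and ofxOsc handle this internally, so the hand-written encoder is purely illustrative, and the `/emotion/sad` address is an assumption rather than the address used in the project.

```python
import struct
from typing import Tuple


def osc_string(text: str) -> bytes:
    """Encode an OSC string: ASCII, null-terminated, padded to 4 bytes."""
    data = text.encode("ascii") + b"\x00"
    padding = (-len(data)) % 4
    return data + b"\x00" * padding


def encode_float_message(address: str, value: float) -> bytes:
    """Build an OSC message with one float32 argument (type tag ",f")."""
    return osc_string(address) + osc_string(",f") + struct.pack(">f", value)


def decode_float_message(packet: bytes) -> Tuple[str, float]:
    """Read back the address and value of a single-float OSC message."""
    end = packet.index(b"\x00")           # first null ends the address
    address = packet[:end].decode("ascii")
    (value,) = struct.unpack(">f", packet[-4:])  # big-endian float32
    return address, value


packet = encode_float_message("/emotion/sad", 0.5)
address, value = decode_float_message(packet)
```

Each part of the packet is aligned to four bytes, which is why the address and the type tag are padded with null bytes before the big-endian float.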

One of the challenges in the project was differentiating the emotional content of the messages, as text2emotion only recognises five emotions, which is not the most accurate representation of the emotions we feel. To work around this, I mapped the emotion scores to different visual elements so that each message would create a distinct visual. I was able to pass parameters from openFrameworks to the shaders by using uniforms, the global shader variables. For example, two messages containing the same emotions, Fear and Angry, but with different scores, would create two different visuals. Each visual was based on the most predominant emotion, so the main colour was directly related to that emotion, as previously mentioned (see scale of consciousness).
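
The mapping idea can be sketched in a few lines. This is an illustration in Python, not the openFrameworks code used in the piece. The uniform names (`u_colour`, `u_speed`, `u_noise`, `u_wave`), the value ranges and the choice of which emotion drives which element are all assumptions. Only the two colour anchors (joy towards violet, sadness towards red) come from the text, and the remaining emotions fall back to a neutral placeholder.

```python
from typing import Dict, Tuple

Colour = Tuple[float, float, float]

# RGB values in the 0..1 range. Only these two anchors are taken from the text.
BASE_COLOURS: Dict[str, Colour] = {
    "Happy": (0.56, 0.0, 1.0),  # violet
    "Sad": (1.0, 0.0, 0.0),     # red
}
NEUTRAL: Colour = (0.5, 0.5, 0.5)  # placeholder for the other emotions


def lerp(low: float, high: float, t: float) -> float:
    """Linearly interpolate between low and high for t in 0..1."""
    return low + (high - low) * t


def dominant_emotion(scores: Dict[str, float]) -> str:
    """Return the emotion with the highest score."""
    return max(scores, key=scores.get)


def shader_parameters(scores: Dict[str, float]) -> Dict[str, object]:
    """Map emotion scores to hypothetical shader uniform values."""
    top = dominant_emotion(scores)
    return {
        # The main colour follows the most predominant emotion.
        "u_colour": BASE_COLOURS.get(top, NEUTRAL),
        # The individual scores modulate movement (assumed pairing).
        "u_speed": lerp(0.2, 2.0, scores.get("Angry", 0.0)),
        "u_noise": lerp(0.0, 1.0, scores.get("Fear", 0.0)),
        "u_wave": lerp(0.1, 1.0, scores.get("Sad", 0.0)),
    }
```

With a mapping of this kind, two messages that share the same dominant emotion keep the same main colour, while their differing scores still produce different speed, noise and wave values.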

Future development

The project will naturally be developed further as I intend to continue my research on techno-spiritual systems. I imagine the piece having another interactive component based on computer vision, in which the public can change the visuals in real time with their body language, thus going beyond the limitations of textual messages. I’d also use an emotion detection algorithm that can interpret languages other than English, as text2emotion can only understand English messages. Given that my first language is not English and that I want my work to reach a wider and more diverse audience, I’d like to expand the language scope of Networked Veil, as emotions such as grief might not feel the same when we reflect on them in a language that is not our mother tongue. This also made me think about how our emotional state is influenced by the language we choose to express it in, which is another starting point for a future project.

Self evaluation

My initial plan was to allow users to access the web app directly from their own smartphones; however, I wasn’t able to make it work in time for the final exhibition. Nevertheless, I think having only one device helped the public to focus their attention, as it wasn’t their own device, and avoided the WiFi connection issues that could have arisen if users had to scan a QR code to open the app in their browser.

My goal has always been to create an immersive and interactive projection based on topics such as grief and how we honour the deceased. I wanted to work with text as an input and to have visuals as an output - in this case the messages are suspended in the cloud of an infinite web. The development of the web app was very insightful and gave me the chance to learn more about Python and machine learning, skills I will apply to future projects.

References

Acheampong, F.A et al. “Text-based emotion detection: Advances, challenges, and opportunities”. 28 May 2020. Engineering Reports. https://onlinelibrary.wiley.com/doi/full/10.1002/eng2.12189

Streamlit: https://streamlit.io/

The Book of Shaders: https://thebookofshaders.com/

Python library text2emotion: https://shivamsharma26.github.io/text2emotion/

Python library python-osc: https://pypi.org/project/python-osc/

Shawna Freshwater, Understanding Emotions. https://spacioustherapy.com/understanding-emotions/

Munch, E. “The Private Journals of Edvard Munch: We Are Flames Which Pour Out of the Earth”. University of Wisconsin Press, 2005

Addons on openFrameworks:

ofxShadertoy: https://github.com/tiagosr/ofxShadertoy

ofxOsc: https://github.com/hideyukisaito/ofxOsc

Shaders:

Lava flow - https://www.shadertoy.com/view/llsBR4

Light circles - https://www.shadertoy.com/view/MlyGzW

Platform 3 - https://www.shadertoy.com/view/tdtSWN

Slime puddles - https://www.shadertoy.com/view/tlsSzj

Northern Lights Blazing Bright - https://www.shadertoy.com/view/wdf3Rf

Orange - https://www.shadertoy.com/view/XdsfD8