__________________________________________________________________________________________

MOVEMENT FLOW DYNAMICS

___________________________________________________________________________________________

by Arturas Bondarciukas

Interactive and abstract human movement exploration installation - questioning conscious intentionality, computer algorithms and human motion

___________________________________________________________________________________________

INTRODUCTION

With the recent proliferation of machine learning algorithms, there have been increasing attempts to analyse human movement using computational models, create tools to visualise motion, and even build generative systems that output choreographed dances learned from observation. But these have been mainly aimed at professional dancers and choreographers, requiring computer scientists to build specialised tools from scratch. In these solution-focused endeavours, a playful exploration of motion and digital interaction is limited.

The aim of this project is not to solve the existing problems in building computational models that can identify personal movement variation, or even to address current machine learning techniques. Rather, it is to explore human movement, and what constitutes it, through a series of interactions and interpretations, while inviting the audience to take part. This work is based on the philosophical premise of rejecting the mind/brain identity theory and defending non-compatibilist free will.

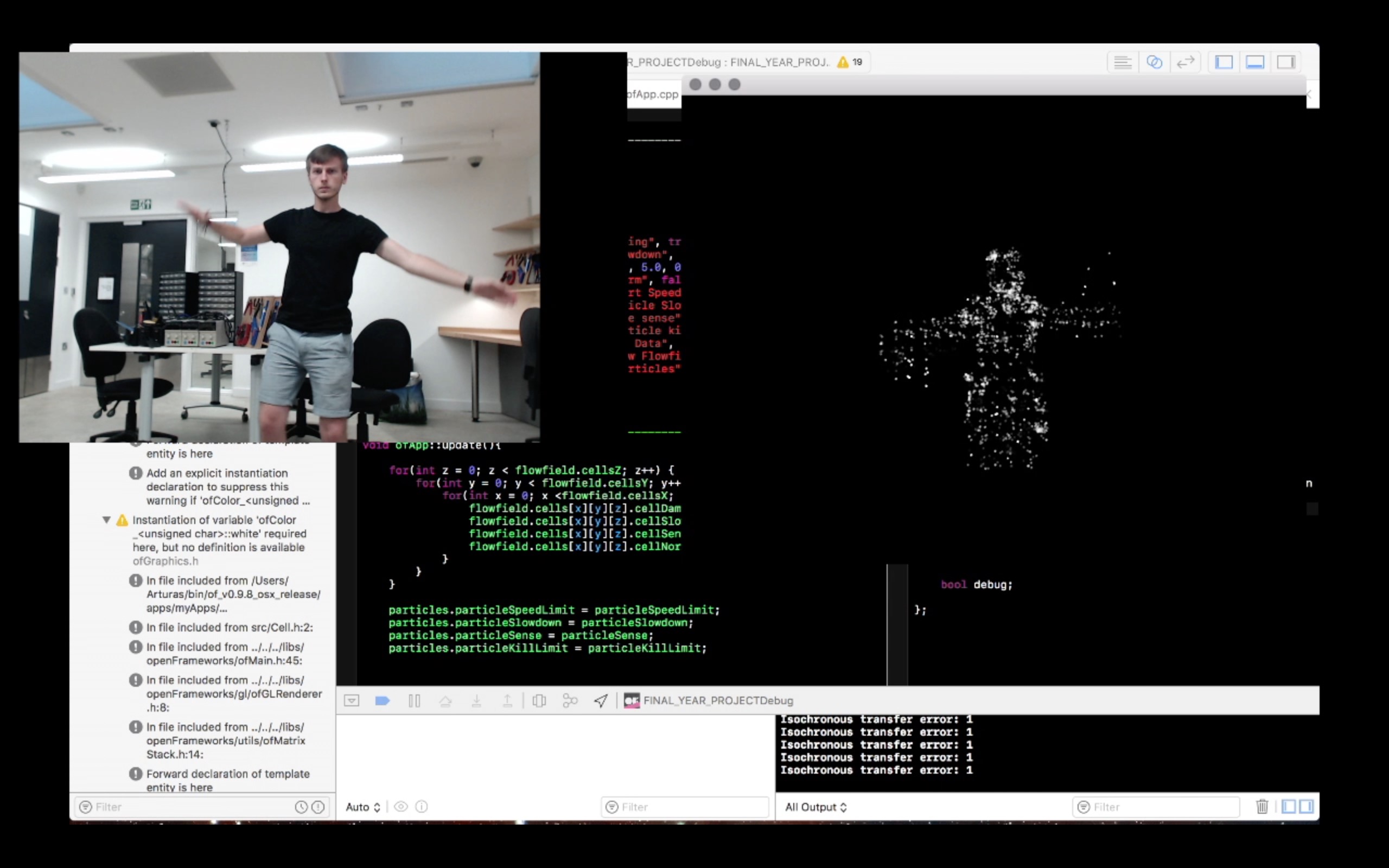

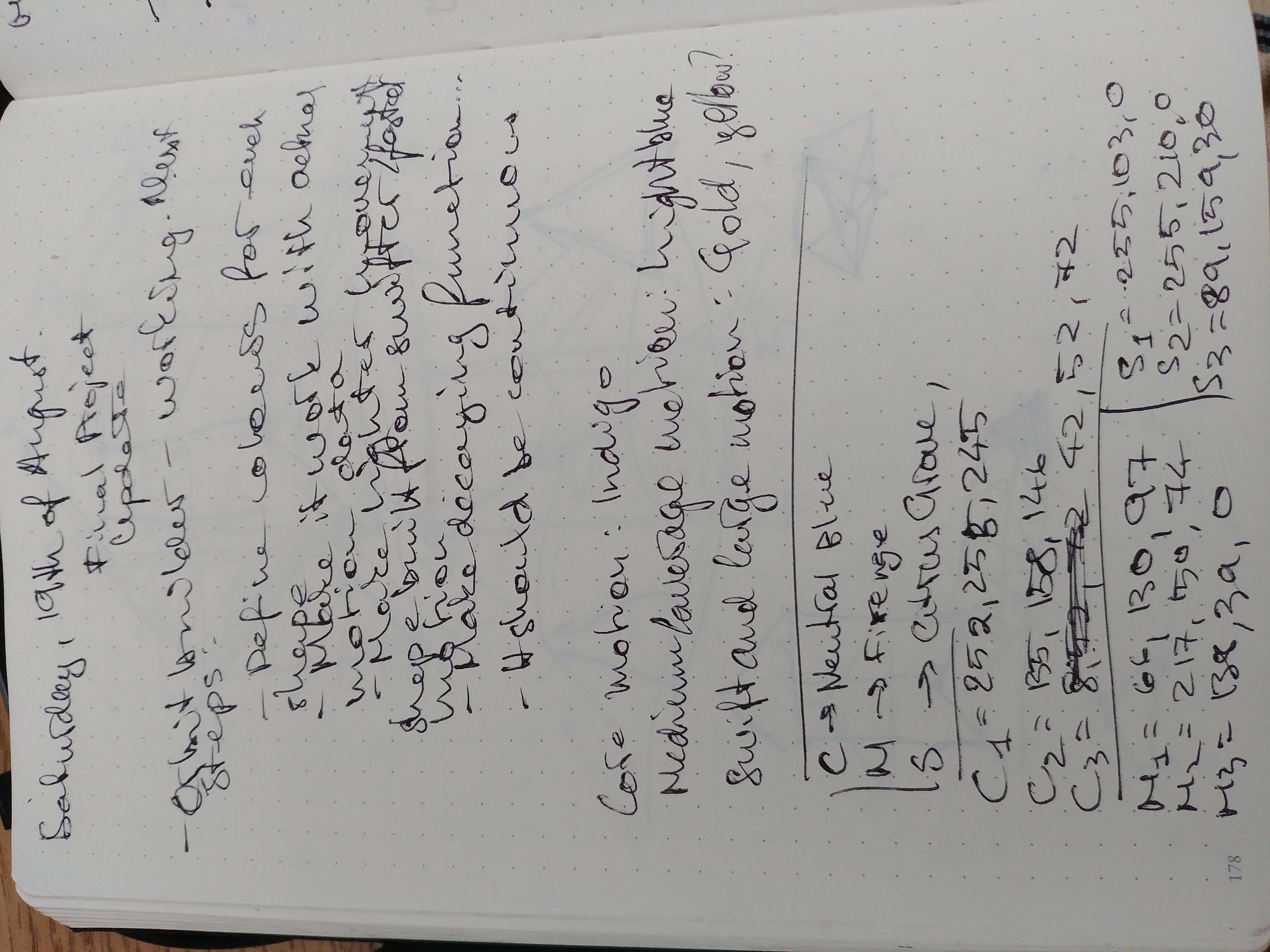

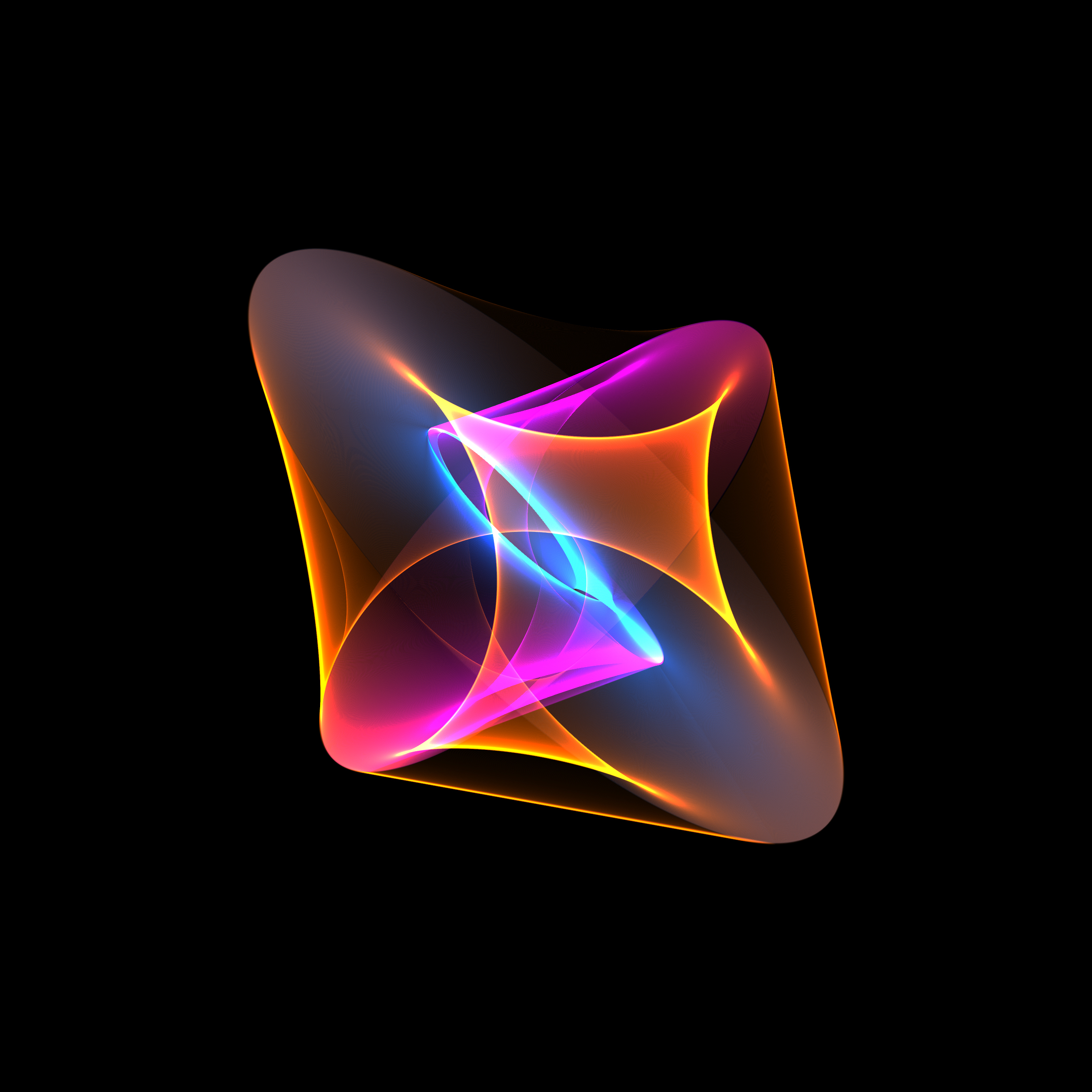

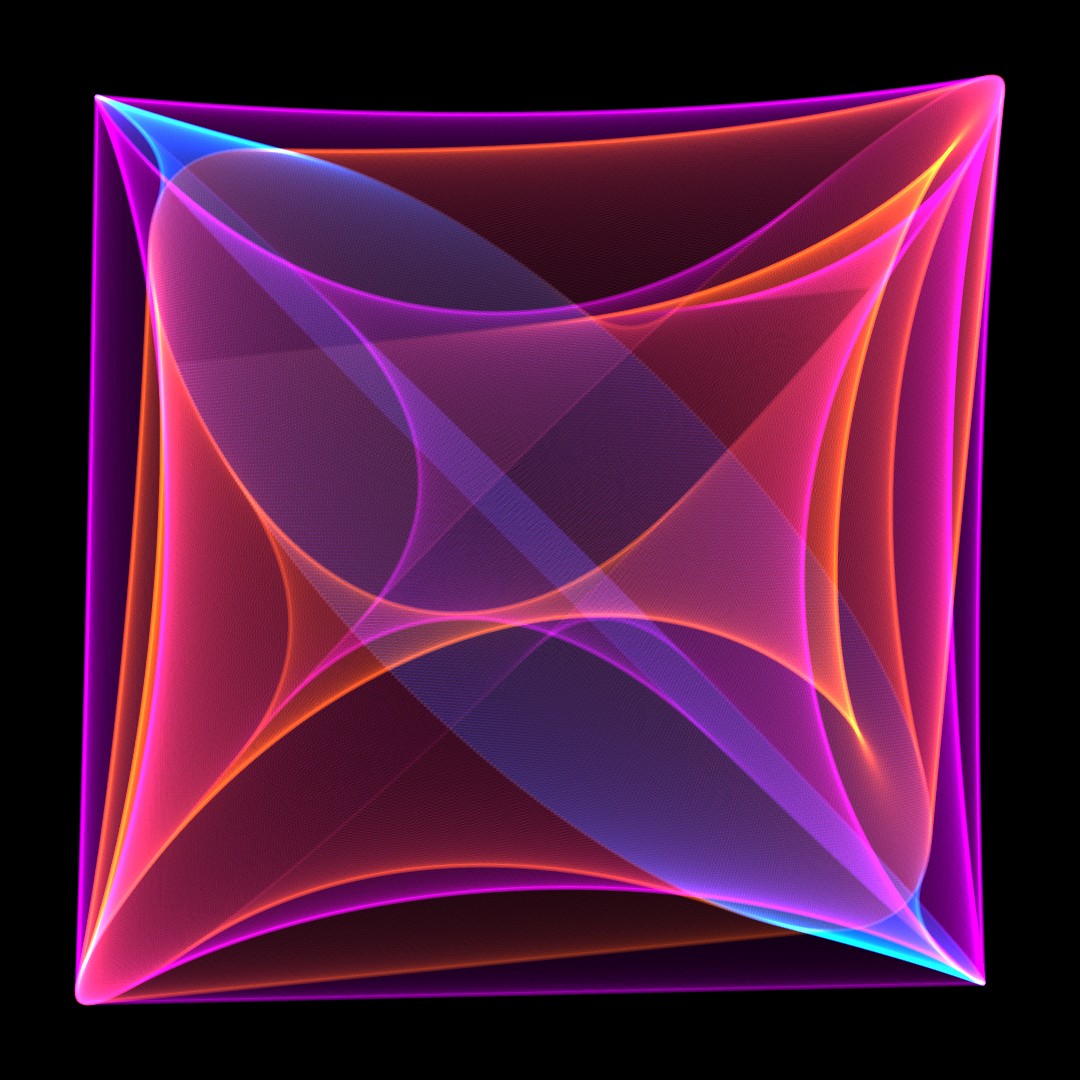

Using a three-dimensional capture camera, the audience can interact and play in the ‘particle field’, where individual shapes interact and are propelled by the velocities of movement. The movements are captured as flow-fields, indicating the relative motion in space. These flow-fields are then used as training information for a probabilistic prediction algorithm that renders a sequence of movement, which is ultimately interpreted as a series of visuals. Three types of movement are represented as different colours and shapes. The ‘core’ motion, emanating from the centre of the body, is associated with darker colours, involving a large portion of the body to produce big and slow movements. These then give way to lighter movements represented as brighter colours, and finally, swift and large motions are represented as light colours. Each shape produced is unique, yet all are inextricably linked to each other, representing all the encountered movements as one.

__________________________________________________________________________________________________

CONCEPT AND BACKGROUND RESEARCH

Concept

It may be worth mentioning, without straying too far from our primary focus, the origins of this project and what stimulated its conception. My incandescent enthusiasm for science and philosophy led me to explore the field of artificial intelligence, and the various themes that emanate from it, including mind/brain identity theory and the nature of free will.

Attempts to explain consciousness purely in material terms tend to deny the obvious facts of personal conscious experience and action, and to make large, dogmatic and highly speculative claims for themselves. Daniel Dennett, a proponent of eliminative materialism, tried to justify the existence of consciousness in his book Consciousness Explained, stating that conscious states are ‘nothing more than’ brain-behaviour. He did not make a very convincing case, thus earning his book the nickname 'Consciousness Explained Away' within intellectual circles (Ward, 2009).

The complete diagnosis of all conscious experience in naturalistic terms involves the error of equating correlation with identity. Is consciousness the product of brain activity, or does it have its own distinctive reality? Indeed, all events in consciousness are correlated with neural events; this leads many materialists to infer that consciousness is the product of brain activity. This misses an obvious point. For example, neurochemical activity can be correlated with a mental image of an apple, but it is not itself the mental image of an apple.

Notwithstanding, the mind/brain identity theory, at one time widely favoured by philosophers of mind and still safeguarded by some, is untenable. Further, the more recent naturalistic alternatives based on emergent properties, such as dual attributes and functionalism, are also indefensible. They are forms of epiphenomenalism, according to which the direct contents of consciousness (the qualia) are the products of brain function but have in themselves no executive power. But if consciousness cannot affect behaviour, why has it evolved? No one has been able to answer this question adequately – for if consciousness merely reflects the activity of the brain, and makes no behavioural difference, it wouldn’t have any survival value or evolutionary advantage.

The consequence of all this is that the nature of consciousness remains a mystery, and has been dubbed by many leading neurophysiologists as ‘the hard problem’. This entails that, as well as a physical reality, there may be a non-physical reality, which would be more sympathetic to the theistic worldview.

Recent advances in artificial intelligence research have accentuated that consciousness is very closely associated with the structure and activity of the brain, and have raised the possibility of processing neurocomputational information in complex but purely physical computers. This remarkable feat has led some scientists to postulate human minds as information-processing systems, and relegate conscious states as mere superfluous add-ons to our brains.

According to Ward (2009), these discoveries ‘do not change the basic insight of most classical philosophers that consciousness, intellectual understanding and morally responsible action, are important and irreducible properties of the real world’. Highly sophisticated computers may out-wit human cognitive speed in processing information, but they are not conscious. Neither do they understand or reflect on the programs that operate on their hardware, or deliberate over difficult moral dilemmas. A computer could be programmed to produce behaviour which simulates responses of love, anger, compassion and fear, but it is science fiction to imagine that the computer itself experiences these emotional states. Emotional states do not only arise from storing and editing information, but also from our capacity to comprehend its meaning.

There is an even more fundamental point to be made, and John Hick puts it well: ‘The more successfully [artificial intelligence] demonstrates that computers, which totally lack consciousness, can model human intelligence, the more definitively it shows that the brain as a computer cannot explain the existence of consciousness. For they model human behaviour without being conscious’ (Hick, 2010).

Computational neuroscientists are in the habit of assuming that we’re essentially computers; but if that’s the case, consciousness would be a superfluous add-on with no function. If it does not exercise cognitive control, by monitoring behaviours that facilitate the attainment of chosen goals via the brain, consciousness becomes functionless and inexplicable. Ironically, by trying to demonstrate a materialist account of consciousness, cognitive science increasingly establishes a powerful argument against it. My initial study objectives aimed to highlight the erroneous syllogism of naturalistic thinkers by showing that consciousness is not a prerequisite of passing the Turing test.

At the start of this project the main premise was to devise an algorithm capable of multiple outputs, so that it could be adapted to output predictions, suggestions and even generate a routine from learned movement content, such as dance. The objectives for the project in its preliminary form can be outlined as follows:

1) To create an algorithmic dance piece based on a choreography directed and performed by dancers. The outcome of both pieces would be presented side-by-side to the public to determine if the algorithmic choreography can be distinguished from the one directed by a person.

2) To develop an autonomous system that would output suggestions for the analysed dance piece.

3) To analyse efficiency, flexibility and other aspects of the performers. The output would be a systematic numerical rating of the dancer’s traits.

4) To develop a predictive system that would classify and output the next most likely move based on pre-set criteria, such as the most efficient move, dance-style appropriate move, or a probabilistic model of improvisation.

Computers can be modelled in their software to simulate human choreography, or at a more sophisticated level, pre-programmed to play games such as Go or chess against world-class players. Some people would assume that the more successfully artificial intelligence is able to simulate human intelligence, the closer it comes to becoming conscious. However, the more a computer can simulate conscious behaviour, the more evident it becomes that consciousness is not a prerequisite for apparently intelligent behaviour, which further demonstrates that the existence of consciousness is a mystery. This initial set of objectives is sympathetic to the view that humans possess a degree of conscious free will, as opposed to complete physical determinism.

Movement, dance and algorithms

Machine Learning

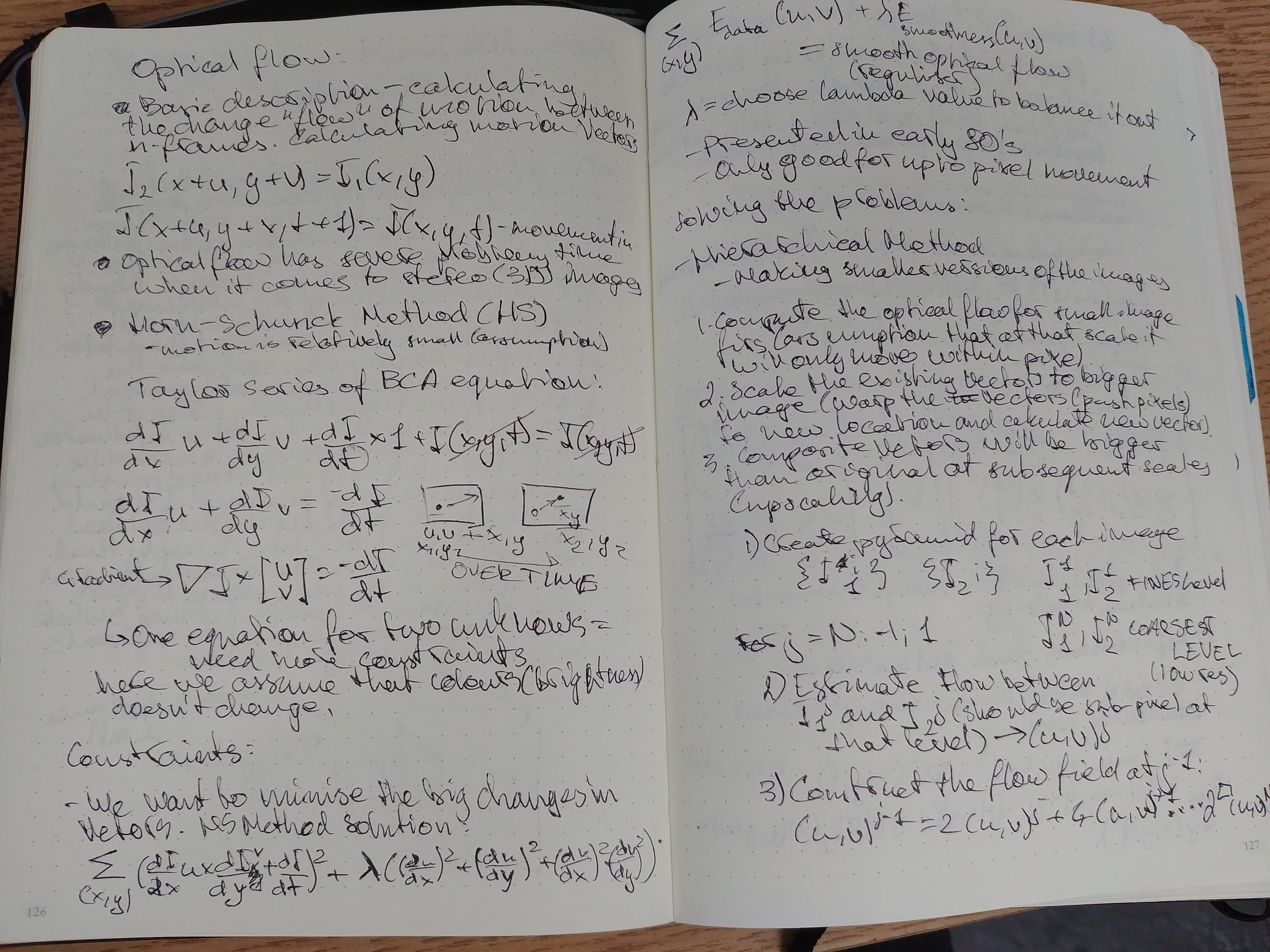

Optical Flow

___________________________________________________________________________________________________

TECHNICAL

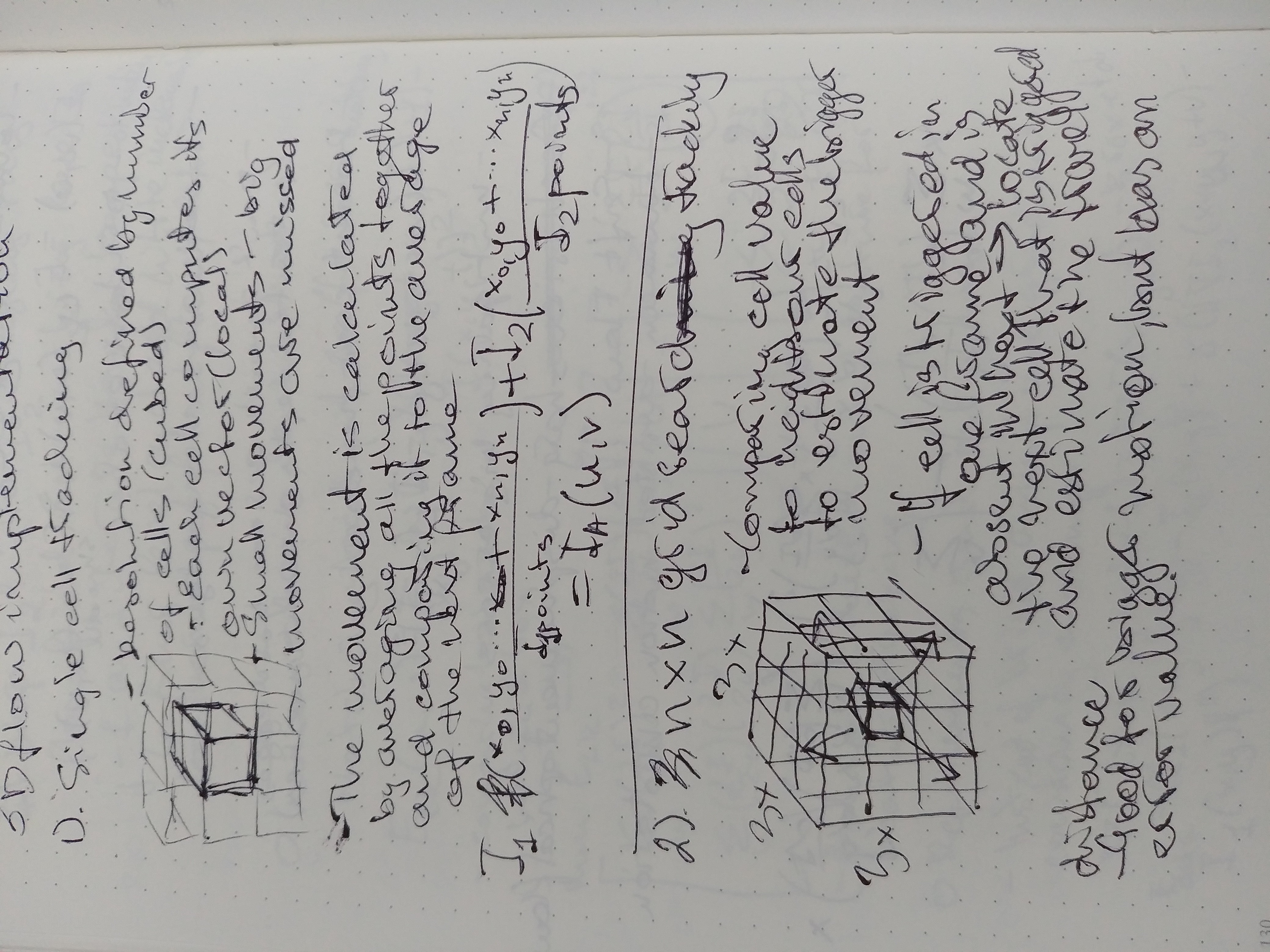

Volumetric Motion Flow Field

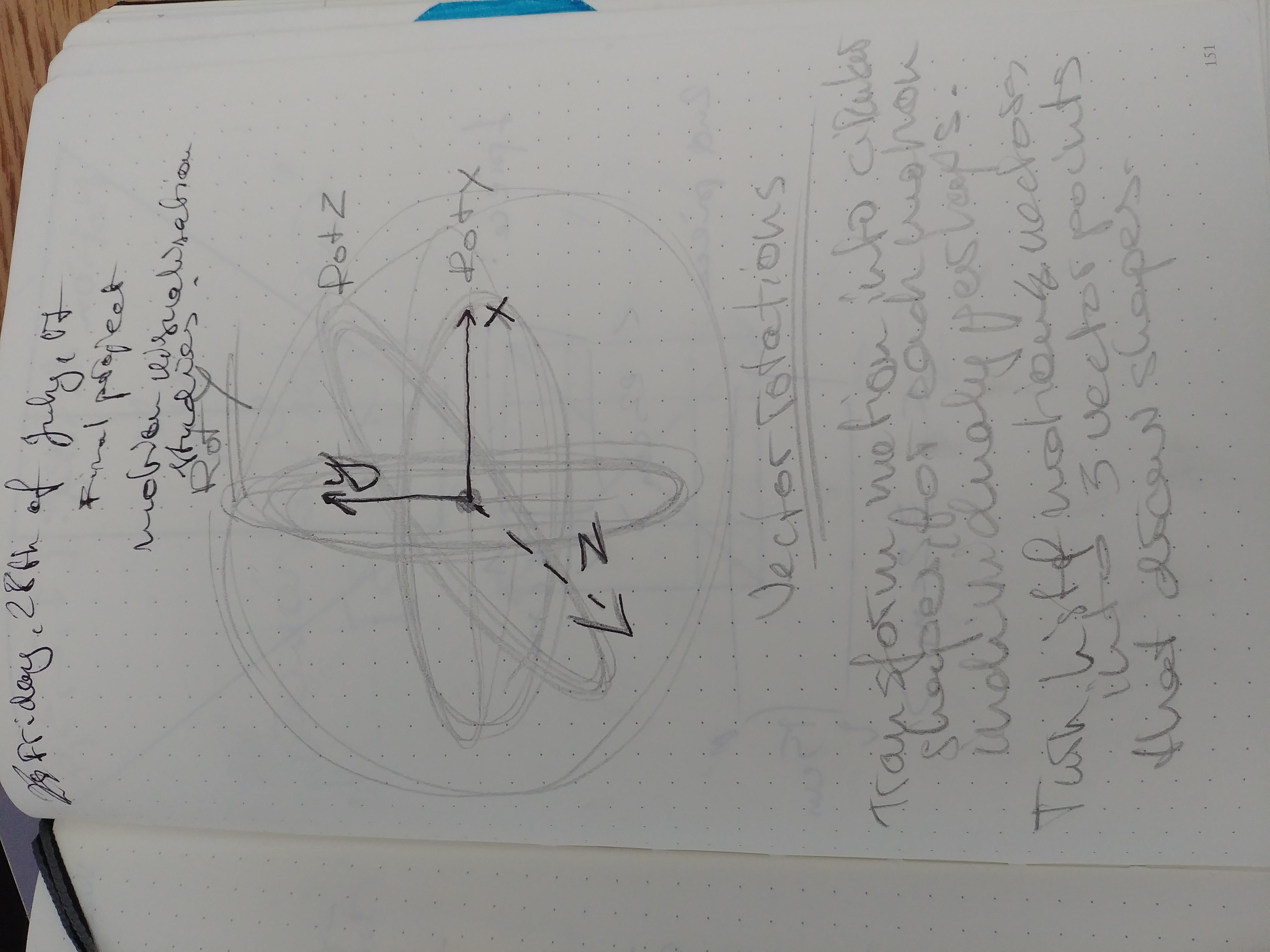

The foundation of my piece (and research) was to find a way to capture movement information without using Kinect skeleton tracking. I wanted to learn how to use optical flow in three dimensions and to understand it better, and I also wanted to avoid skeleton tracking because of Kinect version 1 issues when interfacing with a Mac (sloppy SDK ports and non-existent support for macOS). I therefore set out to work out how to capture motion flow fields. Below is a breakdown of how it works.

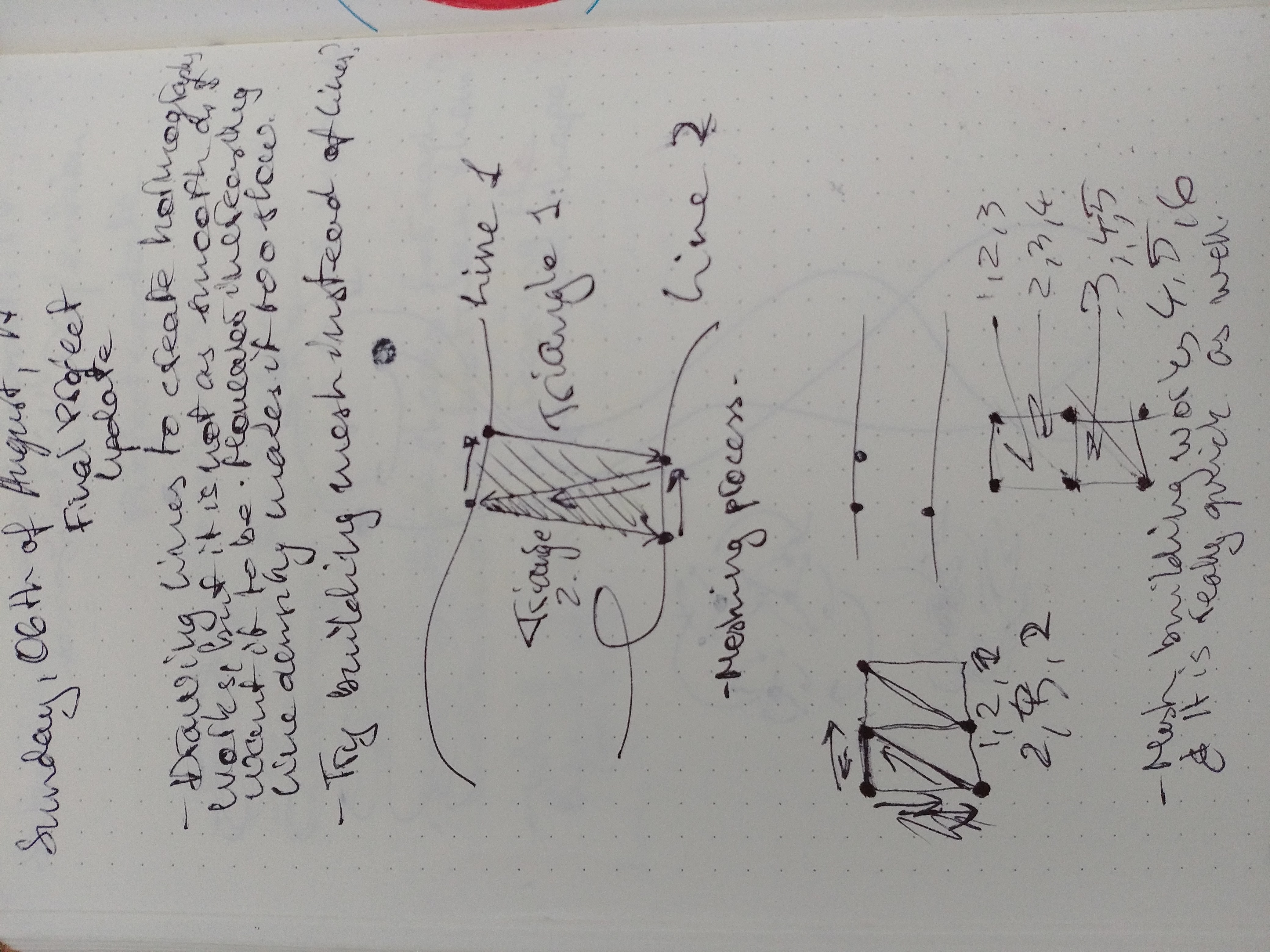

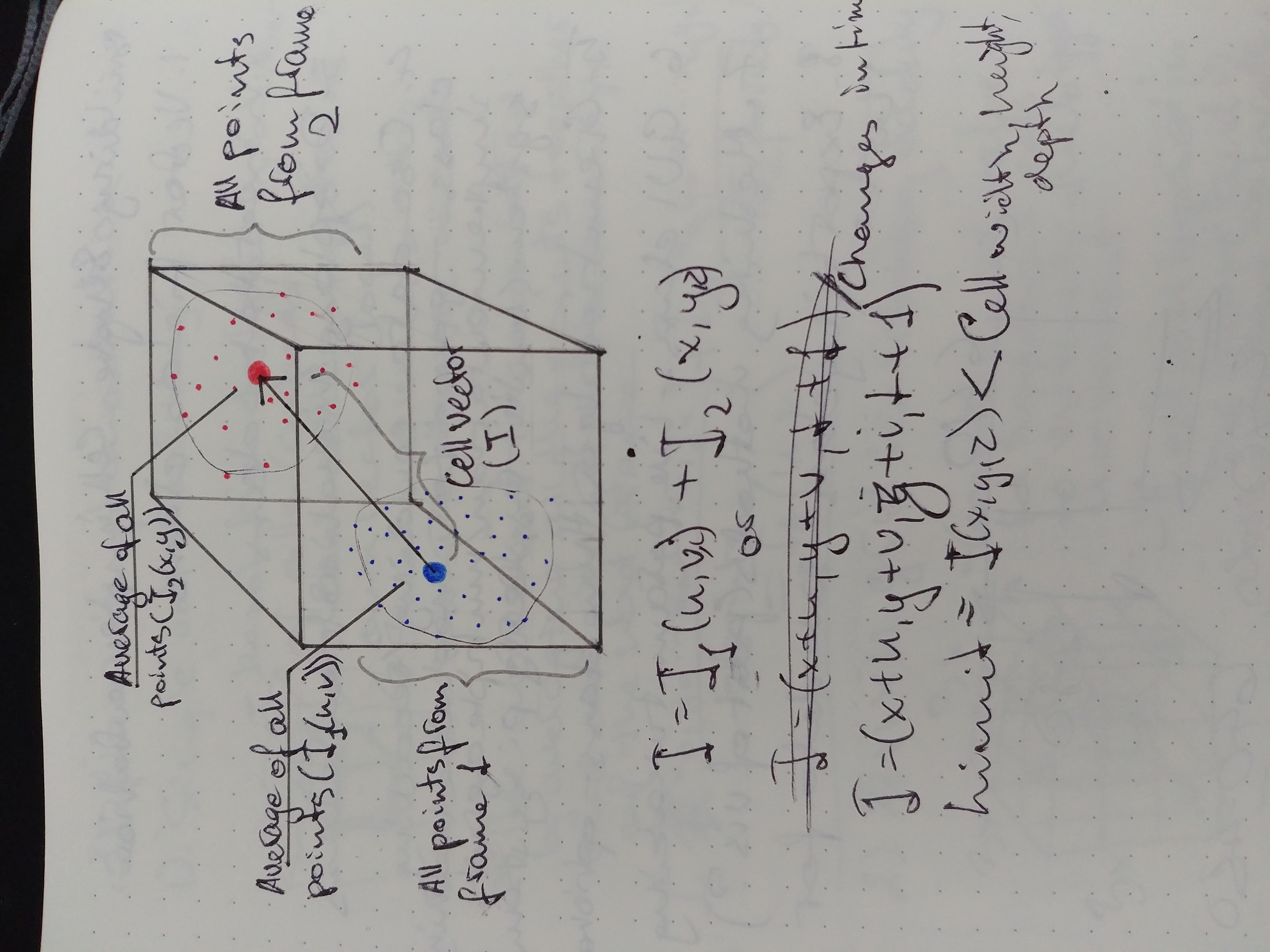

Each individual pixel from the Kinect is converted into a 3-dimensional vector and stored in an array. These vectors are then passed into a corresponding cell (in this case a volume of 40 x 40 x 40 cells) that stores the vectors of the current frame and of the next frame. Inside the cell (a separate class within the program) the average locations of all the vectors for the current and next frames are calculated and compared, from which a heading vector is calculated.
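The per-cell averaging described above can be sketched in a few lines of Python. This is a minimal illustration and not the code used in the installation: the function names, the dictionary-based storage and the `volume` side length are all assumptions made for the example, and the Kinect points are replaced by plain `(x, y, z)` tuples.

```python
from collections import defaultdict

def cell_index(point, grid, volume):
    """Map a 3D point to integer cell coordinates, or None if it lies outside the volume."""
    size = volume / grid  # edge length of one cell (assumed cubic volume)
    idx = tuple(int(c // size) for c in point)
    return idx if all(0 <= i < grid for i in idx) else None

def mean_point_per_cell(points, grid, volume):
    """Average location of the points that fall into each occupied cell."""
    sums = defaultdict(lambda: [0.0, 0.0, 0.0, 0])
    for p in points:
        idx = cell_index(p, grid, volume)
        if idx is None:
            continue
        acc = sums[idx]
        acc[0] += p[0]
        acc[1] += p[1]
        acc[2] += p[2]
        acc[3] += 1
    return {idx: (sx / n, sy / n, sz / n) for idx, (sx, sy, sz, n) in sums.items()}

def flow_field(current_points, next_points, grid=40, volume=4.0):
    """Heading per cell: mean location in the next frame minus mean location in the current one.

    Only cells occupied in BOTH frames get a heading, so motion that leaves
    a cell between frames is not captured.
    """
    cur = mean_point_per_cell(current_points, grid, volume)
    nxt = mean_point_per_cell(next_points, grid, volume)
    return {
        idx: tuple(n - c for n, c in zip(nxt[idx], cur[idx]))
        for idx in cur
        if idx in nxt
    }
```

In this sketch a point that crosses a cell boundary between two frames produces no heading at all, because the cell it left is empty in the next frame and the cell it entered was empty in the current one.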

The limitations of this approach can be broken down into several linked categories (changing one parameter affects the other aspects of the flow field capture): 1) performance – increasing the cell count increases the time needed to perform all the calculations; 2) resolution – smaller cells provide a more detailed view of the motion at the cost of missing the bigger movements, and vice versa; 3) movement magnitude – motion heading is only calculated for movement within cell bounds. Some of these limitations can be mitigated by implementing closest-neighbour comparison, at the cost of performance.

This motion flow field capture implementation is used for drawing all the visuals in my project, from the interactive particle field to the Markov-chain classification and the abstract shapes generated from the movement in the final piece.

The performance of the class is fairly reasonable (for a 40 x 40 x 40 flow field volume) after switching some of the parameters to pointers instead of variables. For better debugging, I have used the ‘ofxGui’ add-on, which allows quick tweaking of the parameters, as I found that debugging is what takes me the longest. The next step would be to optimise the code further by switching more of the variables to pointers, as there is a performance hit when exchanging entire blocks of information between classes.

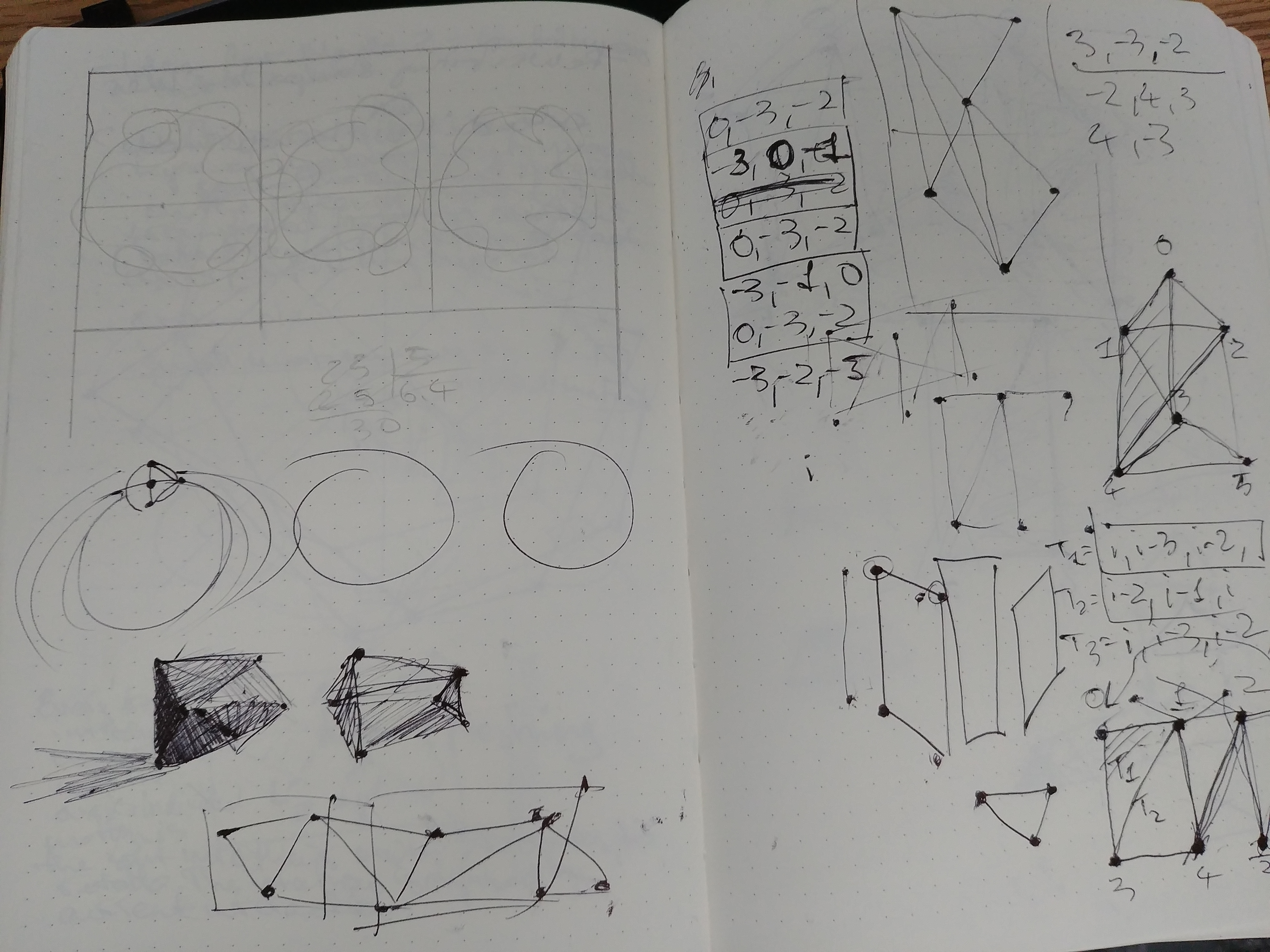

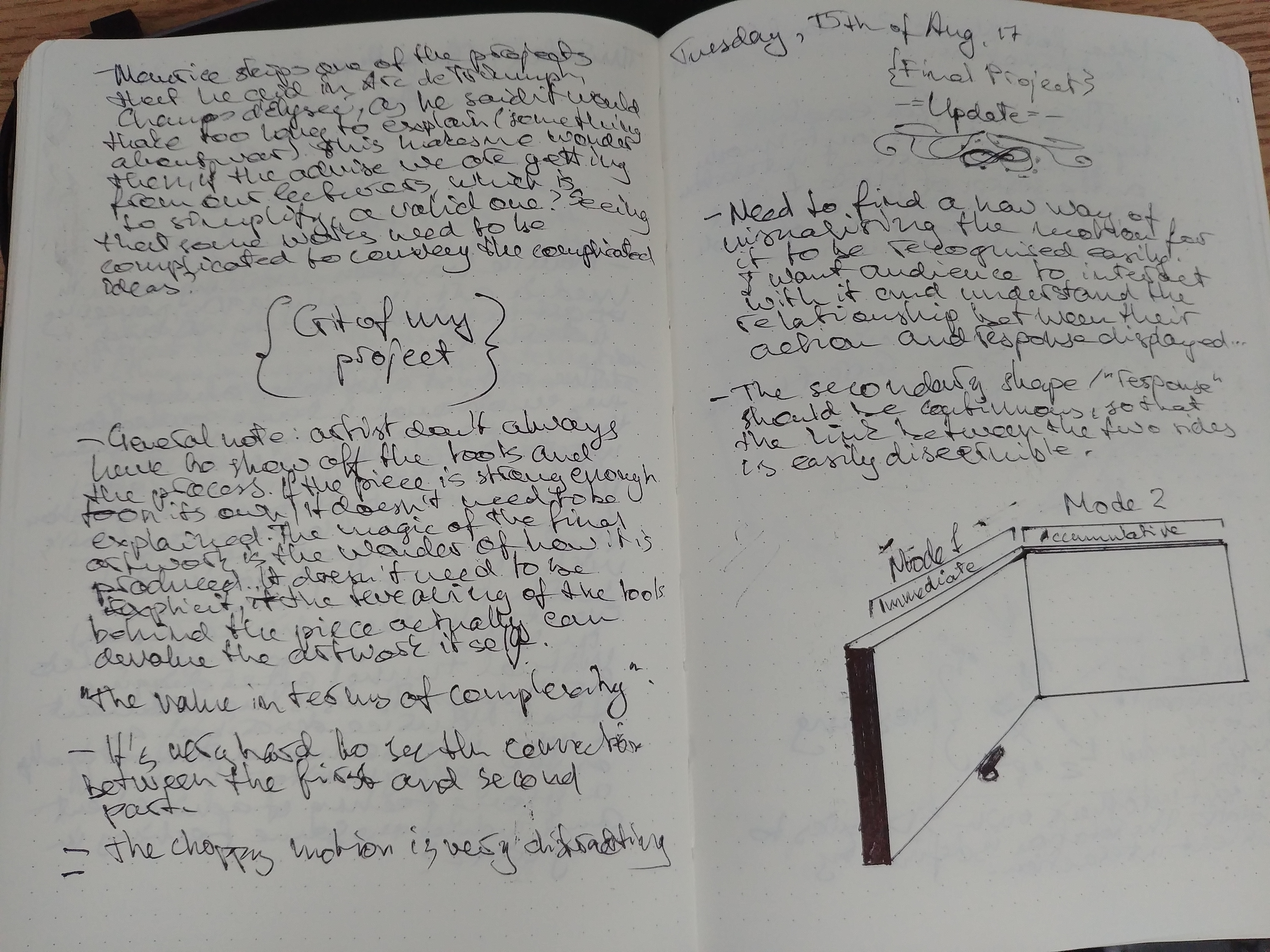

Genetic algorithm - experimentation, ideation and learning process

For a while I was trying to figure out what the best fitness function would be for generating new flow fields that could potentially be interesting. One of the ideas I had was to use a ragdoll-like stick figure whose objective would be to maintain balance and not fall. Then, when the balance motion was good enough, I would start introducing the captured flow fields, attempting to make the ragdoll move with the flow fields while trying to maintain its balance. However, with the time I have left, I am not sure if I am going to go down that path. Instead, I came up with new fitness criteria: generate new flow fields that try to hit the activated cells (taking the captured flow fields and looking at the cells that have motion present in them). As a proof of concept, I will only focus on one frame of the flow field. Once that works, I will start introducing time series of flow fields.

I have come up with a genetic algorithm that does what I have discussed previously – attempting to hit activated cells (the current pose of the user). For a while I had a problem where the algorithm ‘cheated’ in a way: it would generate flow fields that formed circles, constantly hitting activated cells in a loop and so matching the fitness criteria. The problem was that the result still looked like random noise. After speaking to a classmate (Howard), I realised that I needed to introduce a criterion so that, instead of generating fresh new flow fields, the algorithm would over time converge the cells that didn’t hit anything towards the activated cells (the average of them, for example). Also, to visualise the process better, the particles that did not hit the activated cells would be killed, revealing only the particles that had reached the activated cells. In the end, I think I managed to achieve what I wanted, with slightly unexpected results. What I ended up with is a flow field that forms interesting patterns inside the activated cells. By ‘interesting’ I mean that they are fairly abstract, which in itself is not a bad thing. The result is visually appealing, but slightly too abstract to communicate human motion. I therefore decided to keep the algorithm and to do another study, building a new GA or classifying class (Markov-chain inspired) that would generate new patterns (or sequences) of motion that would hopefully be reminiscent of the input motion (over time).
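The fitness criterion and the convergence step can be illustrated with a small Python sketch. It is not the genetic algorithm from the project: selection, crossover and mutation are left out, headings are simplified to integer steps between cells, and every name (`run_particle`, `fitness`, `converge`, `max_steps`) is hypothetical.

```python
def sign(x):
    """Return -1, 0 or 1 depending on the sign of x."""
    return (x > 0) - (x < 0)

def run_particle(field, start, activated, max_steps=20):
    """Follow the headings from a start cell; True if an activated cell is reached.

    The step limit stops particles that circle forever without ever
    reaching an activated cell.
    """
    pos = start
    for _ in range(max_steps):
        if pos in activated:
            return True
        heading = field.get(pos)
        if heading is None:  # the particle left the defined field
            return False
        pos = tuple(p + h for p, h in zip(pos, heading))
    return pos in activated

def fitness(field, starts, activated):
    """Fraction of particles that end up in an activated cell."""
    hits = sum(run_particle(field, s, activated) for s in starts)
    return hits / len(starts)

def converge(field, starts, activated):
    """Re-aim the start cells of missed particles at the average activated cell."""
    n = len(activated)
    centre = tuple(sum(c[i] for c in activated) / n for i in range(3))
    steered = dict(field)
    for s in starts:
        if not run_particle(field, s, activated):
            steered[s] = tuple(sign(centre[i] - s[i]) for i in range(3))
    return steered
```

Killing the particles that miss, as described above, would then amount to drawing only those start cells for which `run_particle` returns `True`.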

Markov-chain inspired classification system

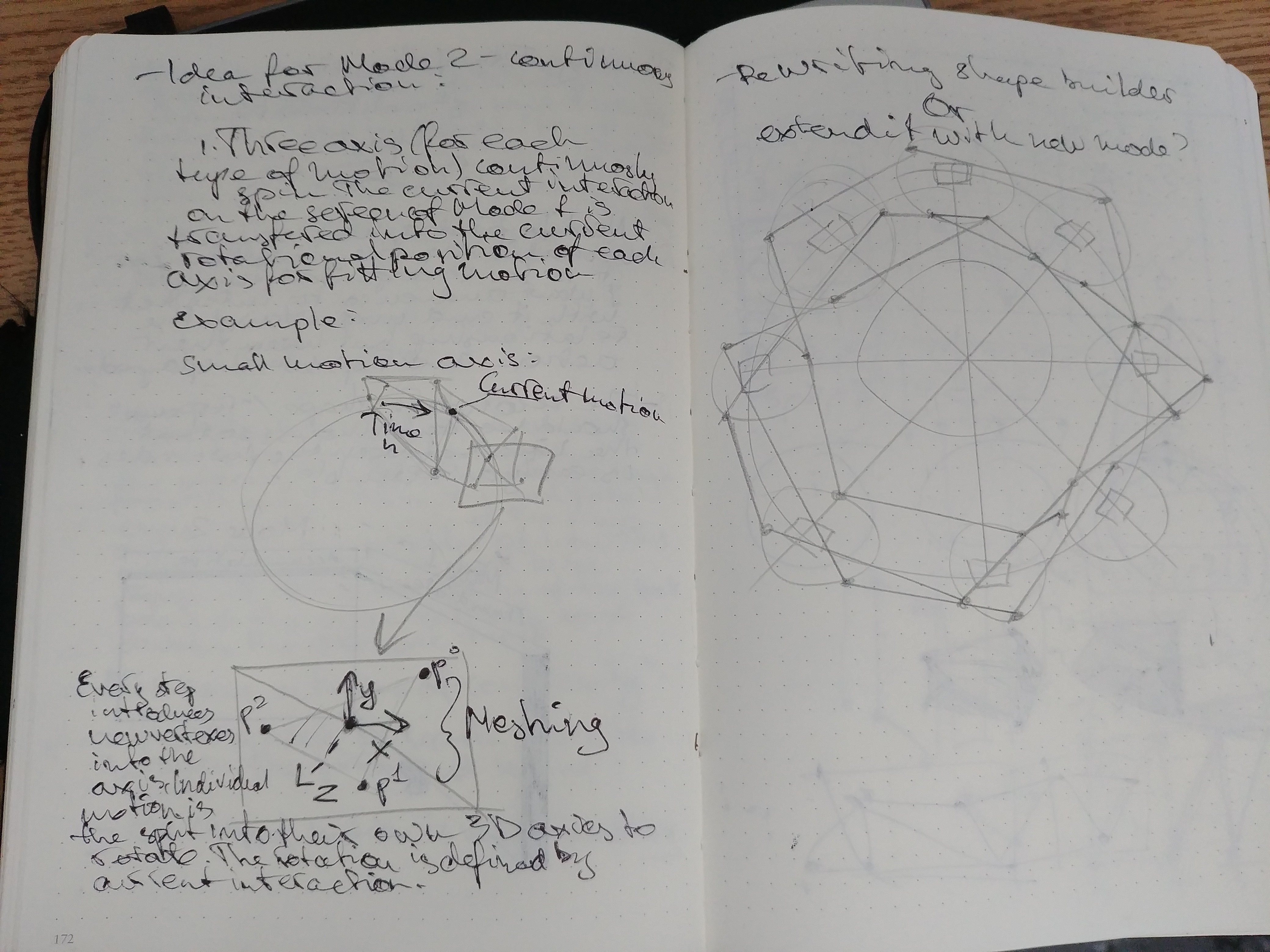

At the start of this project, as a proof-of-concept, I wanted to make a system that would analyse dance movements in real time and predict the next movement based on Markov-chain estimations. The basic concept was to look at every movement, create a type of classifier for each motion and compare them, giving each movement a score (probability). I was hypothesising that, given enough time and a dance being repetitive in its nature, I would be able to predict the next dance movement fairly accurately and generate a new sequence of movement based on that. However, given my lack of knowledge in dance and machine learning, I abandoned my original plan. After seeing the results of the GA, I decided to re-implement a Markov-chain inspired classification system that could analyse any type of motion and assign a score to it if it repeated itself over time.

Technicalities of the system

The Markov-chain classification system that I have built parses the currently captured flow field and goes through each cell in it, to get the number of activated cells that it contains. The way it assesses whether the flow field contains any meaningful motion is simple: the count of activated cells must be greater than 5 (usually a hand gesture) and the heading of those cells must exceed a threshold (in this case a vector length/magnitude bigger than 2). Once those two criteria are met, the cells that qualify are passed into a temporary array of vectors. Then the array size is compared to the sizes of all the classifiers, and another array of possible matches is created. If there are no matches and the classifier array is smaller than a set maximum, the current array of motion vectors is saved as a new classifier. If the list of possible matches contains at least one entry, it is passed on to a comparison function. The comparison function parses each matching classifier, identified as described above, and compares each vector of the motion with the corresponding vector of the classifier. If the current motion vector is aligned with the classifier vector (within a set number of degrees), the current classifier vector gets a score. After iterating through all the motion vectors the classifier increases its score by one, but only if more than three-quarters of the total vectors in the classifier got a match. Also, after increasing its score, the current classifier interpolates itself by 20 percent towards the matching motion vectors, to account for variability of the motion. This process repeats itself for a defined number of iterations.
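A compact Python sketch of one classification pass, under stated assumptions, might look as follows. It is not the class from the project: the cap on the number of classifiers and the angular tolerance are left open in the description, so `MAX_CLASSIFIERS` and `MAX_ANGLE_DEG` are placeholder values, and a motion is taken to qualify when more than 5 cells have a heading longer than 2.

```python
import math

MIN_CELLS = 5          # more than 5 qualifying cells, as in the text
MIN_MAGNITUDE = 2.0    # heading length must exceed 2, as in the text
MAX_CLASSIFIERS = 10   # assumed cap; the text leaves this value open
MAX_ANGLE_DEG = 30.0   # assumed tolerance; the text leaves this value open
MATCH_FRACTION = 0.75  # more than three-quarters of the vectors must align
BLEND = 0.2            # classifier moves 20 percent towards the motion

def magnitude(v):
    return math.sqrt(sum(c * c for c in v))

def angle_deg(a, b):
    """Angle between two vectors in degrees (180 if either has zero length)."""
    denom = magnitude(a) * magnitude(b)
    if denom == 0:
        return 180.0
    cos = max(-1.0, min(1.0, sum(x * y for x, y in zip(a, b)) / denom))
    return math.degrees(math.acos(cos))

def extract_motion(headings):
    """Keep headings longer than the threshold; None if too few cells qualify."""
    moving = [h for h in headings if magnitude(h) > MIN_MAGNITUDE]
    return moving if len(moving) > MIN_CELLS else None

def classify(headings, classifiers):
    """Score the first classifier that matches, or store the motion as a new one."""
    motion = extract_motion(headings)
    if motion is None:
        return None
    candidates = [c for c in classifiers if len(c["vectors"]) == len(motion)]
    if not candidates:
        if len(classifiers) < MAX_CLASSIFIERS:
            classifiers.append({"vectors": list(motion), "score": 0})
        return None
    for c in candidates:
        aligned = sum(
            1 for m, v in zip(motion, c["vectors"]) if angle_deg(m, v) <= MAX_ANGLE_DEG
        )
        if aligned > MATCH_FRACTION * len(c["vectors"]):
            c["score"] += 1
            # Drift 20 percent towards the observed motion to absorb variation.
            c["vectors"] = [
                tuple(vc + BLEND * (mc - vc) for vc, mc in zip(v, m))
                for v, m in zip(c["vectors"], motion)
            ]
            return c
    return None
```

Calling `classify` repeatedly on successive frames plays the role of the defined number of iterations: the first sighting of a motion stores it as a classifier, and later sightings raise its score.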

While the classification process is taking place, another system builds a sequence of the movements that are most likely to happen next, based on the scores assigned to every classifier. At the moment there is only one score per classifier, meaning that the system does not account for the flow of motion (for example, that a hand does not suddenly jump to the opposite corner of the room, detached from the body). This can be fixed later, for more sophisticated predictions, by adding a second array that describes the probability of one movement following another. At the beginning, the system outputs the same number, as it is based on a pseudo-random number generator, but it diversifies its output as more classifiers get their scores, generating a steady stream of numbers representing classifiers. These numbers are stored in an array as index numbers pointing to the classifiers they represent, and are then used to create a new flow field vector with the classifier movements from the generated sequence.
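One way to picture the score-based generation is weighted random sampling over the classifier indexes. The sketch below is an assumption about how such a generator could work, not the project's implementation; `next_index` and `generate_sequence` are hypothetical names, and the scores are plain integers.

```python
import random

def next_index(scores, rng):
    """Pick a classifier index with probability proportional to its score.

    While no classifier has scored yet, the same index (0) is returned
    every time, mirroring the repeated output described in the text.
    """
    if sum(scores) == 0:
        return 0
    return rng.choices(range(len(scores)), weights=scores, k=1)[0]

def generate_sequence(scores, length, rng):
    """Build a sequence of classifier indexes of the requested length."""
    return [next_index(scores, rng) for _ in range(length)]
```

For example, `generate_sequence([4, 1, 0], 8, random.Random(1))` would favour index 0, occasionally produce index 1 and never produce index 2. Accounting for the flow of motion would mean replacing the single list of scores with a table of scores conditioned on the previous index.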

To visualise the sequence of generated classifier indexes, the ‘draw’ function of the class interpolates the output flow field over time and draws these changes on the screen. While the global interpolation value is smaller than 1 (100 percent), the ‘draw’ function slowly interpolates the current output flow field towards the next classifier indicated in the sequence array. Once the interpolation value is 1, it moves on to the next classifier in the sequence and the process repeats. When the function reaches the end of the sequence, another function generates a new index, which is then pushed to the front of the sequence array, and the last index in the sequence is removed, to maintain the sequence size set in the global variable of the class.
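The interpolation loop can be sketched in Python as below. This is an illustrative reading of the description rather than the actual ‘draw’ function: the class name, the fixed `speed` increment and the assumption that all classifiers hold the same number of vectors are mine, and the drawing itself is replaced by returning the interpolated vectors.

```python
from collections import deque

def lerp(a, b, t):
    """Linear interpolation between two vectors for t in [0, 1]."""
    return tuple(x + (y - x) * t for x, y in zip(a, b))

class SequencePlayer:
    """Blend the output field towards each classifier in the sequence in turn."""

    def __init__(self, classifiers, sequence, next_index, speed=0.25):
        self.classifiers = classifiers     # index -> list of heading vectors
        self.sequence = deque(sequence)    # fixed-size queue of classifier indexes
        self.next_index = next_index       # callable that returns a new index
        self.speed = speed                 # interpolation increment per update
        self.cursor = 0                    # position within the sequence
        self.t = 0.0                       # global interpolation value
        self.start = classifiers[self.sequence[0]]

    def update(self):
        """Advance one frame and return the interpolated vectors to draw."""
        target = self.classifiers[self.sequence[self.cursor]]
        self.t = min(1.0, self.t + self.speed)
        frame = [lerp(a, b, self.t) for a, b in zip(self.start, target)]
        if self.t >= 1.0:
            # Target reached: continue from it towards the next entry.
            self.start = target
            self.t = 0.0
            self.cursor += 1
            if self.cursor == len(self.sequence):
                # End of sequence: push a new index to the front and drop the last.
                self.sequence.appendleft(self.next_index())
                self.sequence.pop()
                self.cursor = 0
        return frame
```

Using a `deque` keeps the sequence length constant, since every new index pushed to the front is paired with one removal from the back.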

The main purpose of this system is to analyse, compare and output the movements that are most likely to happen. The system itself is flexible in terms of how many classes (of motion) it can have, the precision with which it tries to match the motion, and the mode of output (a single value, a sequence or a continuous stream of motion). It can be applied to multiple uses, for example a particle system or other visualising systems.

In its first implementation, the classification system compared the entire flow field for possible matches. However, that introduced a problem, known as ‘overfitting’ in machine learning, whereby the classifier created for a particular motion would only match a very specific motion at exactly the same spot in space where the classifier had been created. That meant that even the same motion happening somewhere else was entirely ignored, as it did not occur in the specific area that the classifier was trying to match. After reading ‘Machine Learning of Personal Gesture Variation in Music Conducting’ (Sarasua, et al., 2016), I realised I could recognise gestures independently of their spatial position by creating a profile of the motion, i.e. creating classifiers that match the number of activated cells and the headings of the motion, excluding the vector positions (but still keeping them in a separate array for drawing and debugging).
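The difference between comparing whole flow fields and comparing motion profiles can be shown with a short Python sketch. The functions `motion_profile` and `translate` are hypothetical helpers written for this example, and the flow field is assumed to be a dictionary from cell coordinates to heading vectors.

```python
import math

def magnitude(v):
    return math.sqrt(sum(c * c for c in v))

def motion_profile(field, min_magnitude=2.0):
    """Split a flow field into headings (used for matching) and cell positions.

    The positions are returned separately so they can still be used for
    drawing and debugging, but they play no part in the comparison.
    """
    cells = sorted(c for c, h in field.items() if magnitude(h) > min_magnitude)
    headings = [field[c] for c in cells]
    return headings, cells

def translate(field, offset):
    """Move every cell of a flow field by the same offset (same gesture, new place)."""
    return {
        tuple(c + o for c, o in zip(cell, offset)): heading
        for cell, heading in field.items()
    }
```

With these helpers, a gesture and a copy of it shifted elsewhere in the volume are different flow fields but yield identical heading lists, which is what allows one classifier to recognise both.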

___________________________________________________________________________________________

FUTURE DEVELOPMENT

During and after completing this project I have been actively documenting ideas for expanding the project and potential applications for it. I am currently working at a company that specialises in motion graphics and visuals for live performances (such as concerts) and I have showcased my project to them. The feedback I got led me to believe that it could be applied to concerts, where the visuals for the stage are generated live in response to the performer. The underlying structure of my project allows it to be adapted to different scenarios right now and, given enough computing power, it could drive more detailed visuals and even accommodate multiple inputs from the Kinect camera. The aesthetics of my project left a good impression on the people I am working with, and they are keen on applying my knowledge to future projects creating live visuals for live performances. I see this as the avenue I will be pursuing in the near future, expanding my knowledge and creating interactive installations for real-world clients and briefs.

My interests do not end in the practical implications of this work. Although artificial intelligence is beyond the scope of the present study, I hope my related work in future will spur further research into this field, and stimulate discussion on the limitations of mind/brain identity theory, and in general, the physicalist account of consciousness. Some researchers of artificial intelligence who try to explicate consciousness in purely material terms fail to take into account a wide range of the data of human experience, and make highly speculative and dogmatic claims about the future success of their profession. Their faith that someday art, morality and culture will be explained in terms of physical processes alone demonstrates an unassailable certainty in materialism that ventures beyond the available evidence.

This does not necessarily mean that we may never construct a conscious, thinking artificial intelligence. The idea is far-fetched both in practice and in principle, but if that day ever comes, we will simply have found a new way of bringing conscious minds into existence. This would add weight to the hypothesis that causal interaction between the mind and brain is that of both physical and non-physical realities. We will not have reduced minds to computers; we will have transformed computers into real minds. The distinction is deceptively subtle, but there is in fact a world of difference between these two alternative perspectives.

____________________________________________________________________________________________________

SELF EVALUATION

The initial objectives of this work set out to demonstrate that a computer's ability to simulate human behaviour in an indistinguishable way does not mean it is closer to being conscious, and that creative work in the arts presupposes a significant degree of physical freedom (contrary to determinism). This work later ventured in a direction to show that human movement can be conveyed in an abstract way, which is representative of physical neural activity transmuting into intentionality and conscious experience, which have radically different – indeed, non-physical – attributes.

Current research into artificial intelligence is very impressive. But the problem arises when scientists talk of human minds as merely information-processing systems. As previously mentioned, the more successfully artificial intelligence demonstrates that unconscious computers can emulate human intelligence, the more positively it shows that the brain as a computer cannot explain the existence of consciousness. Thus, my primary hypothesis is based on the Turing test to show that a machine's ability to exhibit intelligent behaviour in the form of choreography can be indistinguishable from that of a human.

Some people may be fooled into thinking that a computer-generated dance routine is the product of a human, but that does not mean the computer is conscious. The computer will not be experiencing the rich mental realm of personal experience, full of meaning, purpose, feeling and intentionality.

Through an unanticipated outcome of my genetic algorithm, I decided to change my initial study design. I took advantage of the abstract interpretation of movement and wanted to develop it to convey the mind/brain identity theory.

However obvious it may seem to many people, the material account of consciousness faces formidable difficulties. The basic problem is that not even the most complete account of brain function reaches the actual conscious experience with which it is associated. This point is often expressed in terms of the law of identity; namely, that if X is identical to Y then they have the same attributes. However, mental states are not located at some point in space, whereas brain states are.

For instance, the conscious experience of pain can be experienced as sharp or dull or throbbing. More simply, if I scrape my elbow, the attributes of my consciousness of pain certainly do not seem to be the same as attributes of the firing of a series of neurons in my brain.

Thus, my final project idea set out to emphasise the somewhat paradoxical notion that mere electrochemical activity in the brain can be transmuted into thoughts, intention and movement, by showing the inverse. That is, meaningful locomotor activity can be represented in abstract correlates, as represented by Movement Flow Dynamics.

The metaphysical conclusion of this work can be stated with an aptly suited metaphor, namely that the relationship between physical brain states and non-physical conscious experiences is like that between two dancers who always move together, but sometimes with one or the other taking the lead.

___________________________________________________________________________________________

REFERENCES

Caramiaux, B., Bevilacqua, F. and Tanaka, A. (2013). Beyond Recognition: Using Gesture Variation for Continuous Interaction. Proceedings of CHI '13 Extended Abstracts on Human Factors in Computing Systems, pp.2109-2118.

Crnkovic-Friis, L. and Crnkovic-Friis, L. (2017). Generative Choreography using Deep Learning. In: International Conference on Computational Creativity. [online] ICCC 2016. Available at: https://arxiv.org/abs/1605.06921v1 [Accessed 12 Jul. 2017].

Hick, J. (2010). The new frontier of religion and science. New York, NY: Palgrave Macmillan.

Jadhav, S. and Mukundan, S. (2010). A Computational Model for Bharata Natyam Choreography. International Journal of Computer Science and Information Security, 8(7), pp.231-233.

Leach, J. and deLahunta, S. (2015). Dance ‘Becoming’ Knowledge. Leonardo.

Müller, A. and Guido, S. (2017). Introduction to machine learning with Python. Sebastopol (CA): O'Reilly.

Peng, H., Hu, H., Chao, F., Zhou, C. and Li, J. (2016). Autonomous Robotic Choreography Creation via Semi-interactive Evolutionary Computation. International Journal of Social Robotics, 8(5), pp.649-661.

Sarasua, A., Caramiaux, B. and Tanaka, A. (2016). Machine Learning of Personal Gesture Variation in Music Conducting. CHI '16 Proceedings of the 2016 CHI Conference on Human Factors in Computing Systems, 7 May, pp.3428-3432.

Virčíková, M. and Sinčák, P. (2010). Dance Choreography Design of Humanoid Robots using Interactive Evolutionary Computation. [online] Available at: https://www.semanticscholar.org/paper/Dance-Choreography-Design-of-Humanoid-Robots-using-VIRC-%C3%8DKOV%C3%81-SINC-%C3%81K/99a6580e7f510d81d213d7e37e6a9dede173f160 [Accessed 15 Jul. 2017].

Ward, K. (2009). Why There Almost Certainly is a God. Chicago: Lion Hudson UK.

Videos

Harvard University (2016). Advanced Algorithms (COMPSCI 224), Lecture 1. Available at: https://www.youtube.com/watch?v=0JUN9aDxVmI&t=3574s&list=PLaaDOizf38zs4xk-McwWLO5ay3In46ib7&index=5 [Accessed 17 Jul. 2017].

Radke, R. (2014). CVFX Lecture 13: Optical flow. [image] Available at: https://www.youtube.com/watch?v=KoMTYnlNNnc&list=PLaaDOizf38zs4xk-McwWLO5ay3In46ib7&index=13 [Accessed 9 Jul. 2017].

UCF CRCV (2012). Lecture 02 - Filtering. Available at: https://www.youtube.com/watch?v=1THuCOKNn6U&t=4s&list=PLaaDOizf38zs4xk-McwWLO5ay3In46ib7&index=15 [Accessed 9 Jul. 2017].