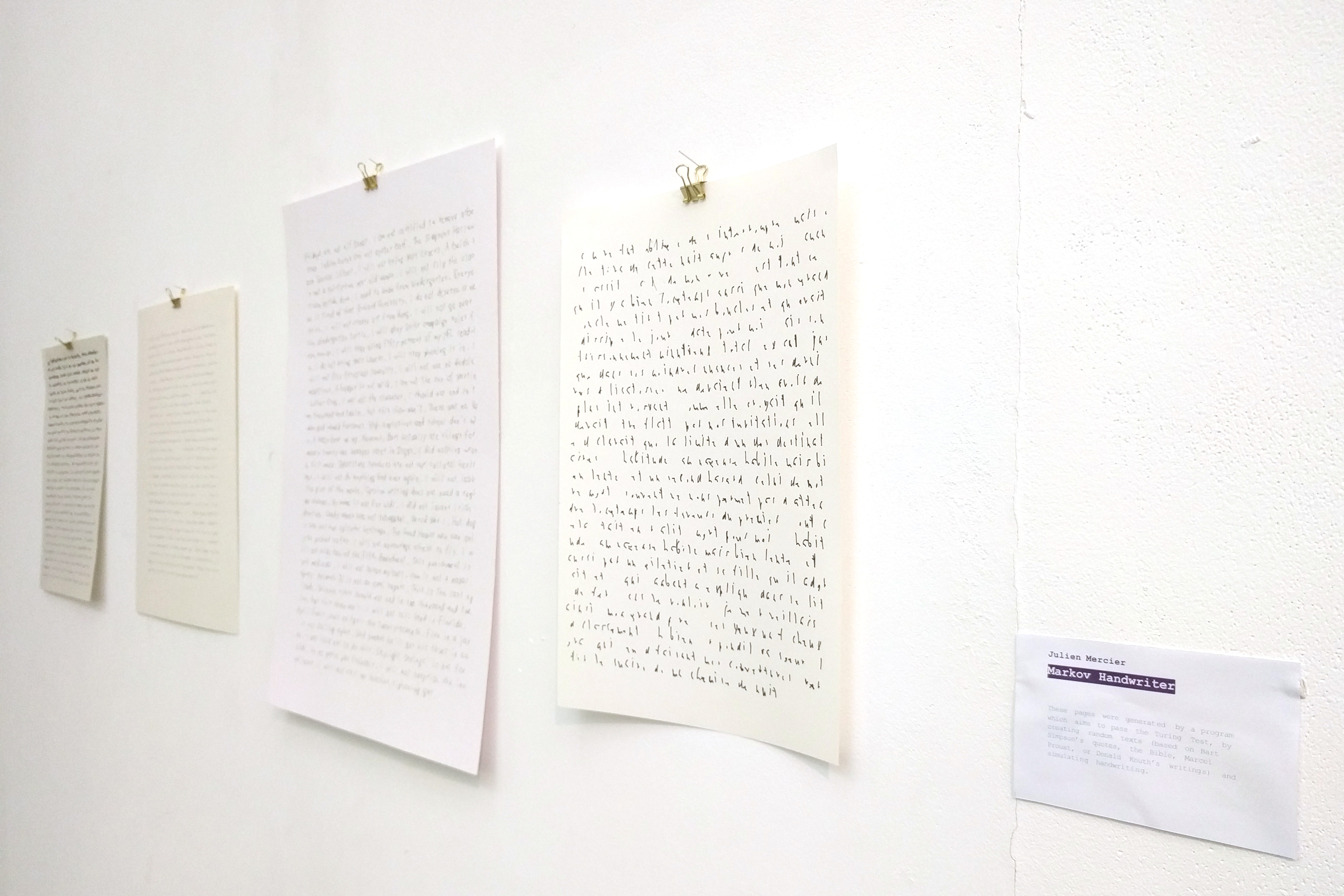

Markov Handwriter

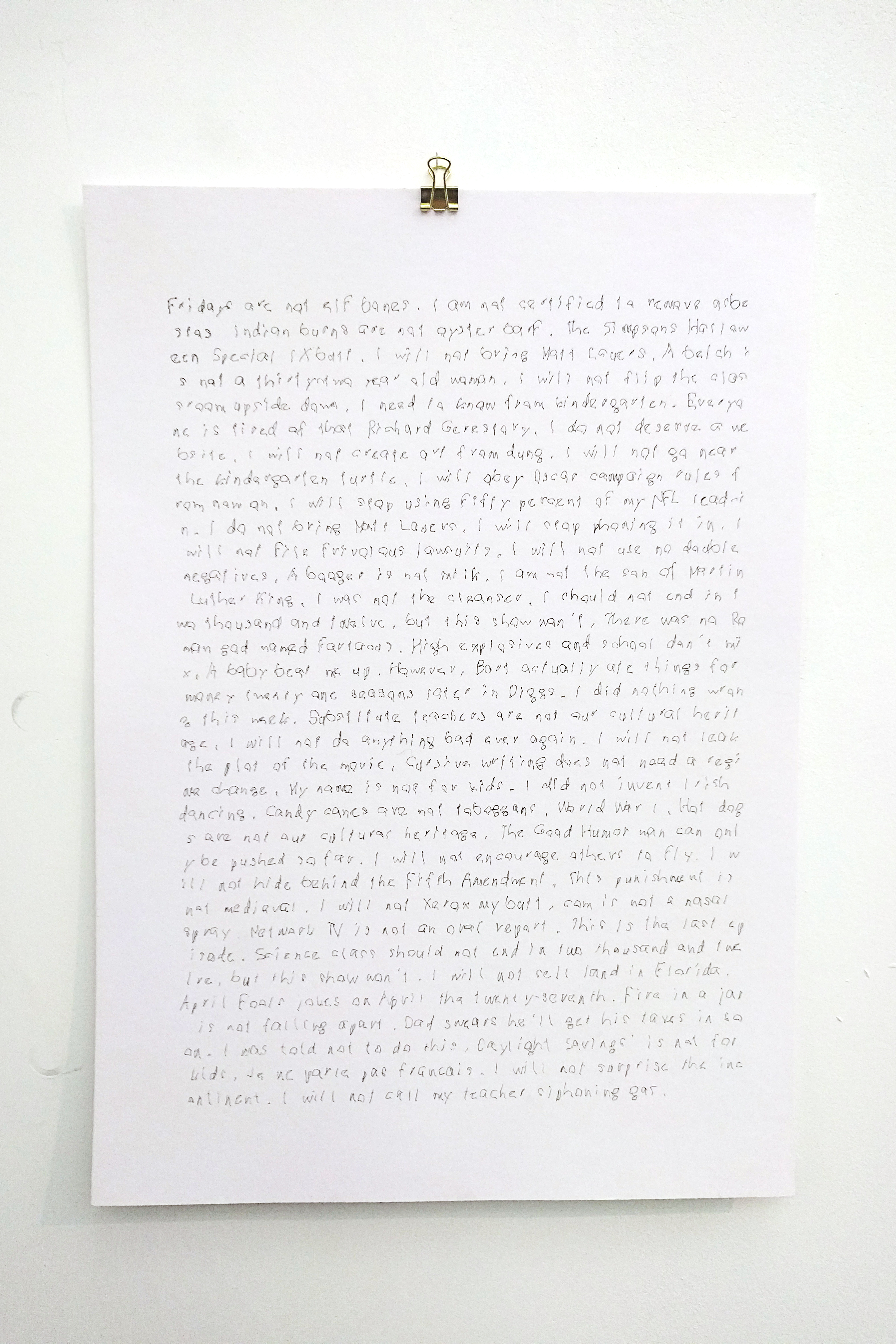

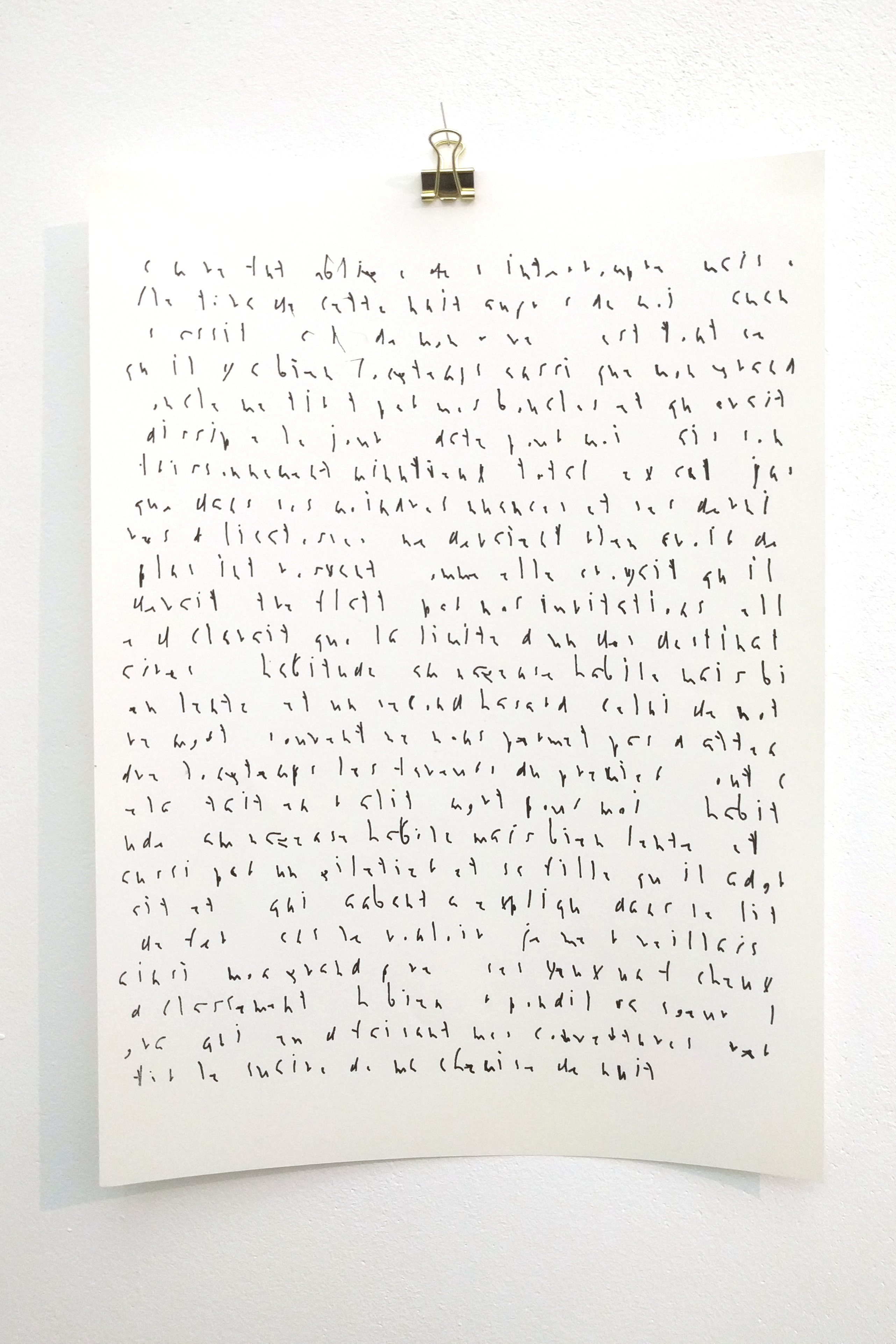

This Processing program lets the user generate random pages of text based on one or several source texts (Bart Simpson’s quotes, the Bible, Marcel Proust, or Donald Knuth’s writings) and export them in handwriting-like lettershapes, with customizable settings (size, spacing, shakiness…). The idea is to create simple, machine-made artefacts that appear to be entirely handmade, thus passing the Turing Test.

produced by: Julien Mercier

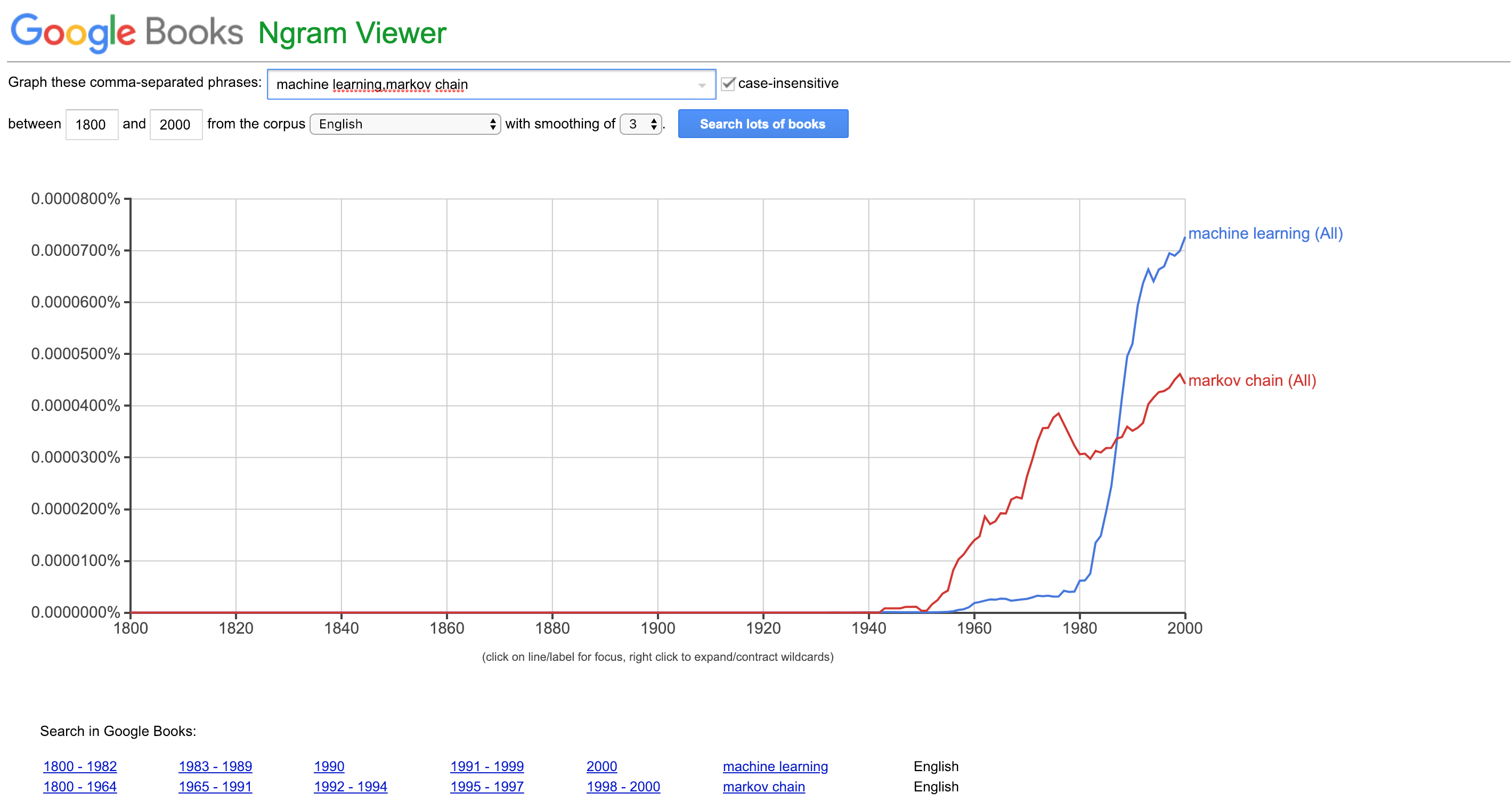

Introduction

Markov chain models have been used in a variety of ways over the past decades. They are a simple yet powerful way to make predictions based on probability, taking into account only the most recent step rather than the entire history of past events. They were a lot more fashionable before the rise of machine learning, which made me want to understand them before moving on to ML in term 2. In simple terms: a Markov chain will “read” (loop over) an input that you give it, such as a text. For each word (or n-gram) it reads, it stores the probabilities of what the next word can be, based on the input data. To generate a new text, it then picks each word at random, weighted by the statistics it stored for the word before it.
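The read-then-generate loop described above can be sketched in plain Java (the language Processing is built on). This is a minimal illustration under stated assumptions, not the code used in the project, which relied on the RiTa library: the class name `MarkovSketch`, its two methods and the sample sentence are all hypothetical. It works on single words; an n-gram version would use the last n words as the key instead.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Random;

// Hypothetical word-level Markov chain, for illustration only.
class MarkovSketch {

    // "Read" the source: map each word to the list of words that followed it.
    // Duplicates are kept on purpose, so a follower seen twice is twice as
    // likely to be picked later. The list doubles as the stored statistics.
    static Map<String, List<String>> buildChain(String source) {
        Map<String, List<String>> chain = new HashMap<>();
        String[] words = source.trim().split("\\s+");
        for (int i = 0; i < words.length - 1; i++) {
            chain.computeIfAbsent(words[i], k -> new ArrayList<>()).add(words[i + 1]);
        }
        return chain;
    }

    // Generate up to maxWords words, each chosen only from the followers
    // of the previous word (the "one last step" of the chain).
    static String generate(Map<String, List<String>> chain, String start,
                           int maxWords, Random rng) {
        StringBuilder out = new StringBuilder(start);
        String current = start;
        for (int i = 1; i < maxWords; i++) {
            List<String> followers = chain.get(current);
            if (followers == null) {
                break; // dead end: this word was never followed by anything
            }
            current = followers.get(rng.nextInt(followers.size()));
            out.append(' ').append(current);
        }
        return out.toString();
    }

    public static void main(String[] args) {
        // Placeholder input, not one of the project's source texts.
        String source = "the cat sat on the mat and the cat ate the fish";
        Map<String, List<String>> chain = buildChain(source);
        System.out.println(generate(chain, "the", 12, new Random()));
    }
}
```

Storing the followers as a list with repeats is a simple way to get weighted choices without computing explicit probabilities; a table of counts would work just as well.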

Concept and background research

Knowing little about Markov chains, I assumed that I would need to do some research before I could come up with a sensible idea. I wasn’t exactly sure what I’d use them for, although I already had an interest in text-generator programs. During a one-to-one session in week 6, Lior suggested that I look into Markov chains. I was attempting to create a haiku generator at the time, but with very simple rules and a very simple dataset to pick words from.

After a little research, I found some good instructions for a Java-based Markov chain program on https://rosettacode.org/wiki/Execute_a_Markov_algorithm#Java. However, the program was written with OOP, which was a little beyond my technical skills. I attempted to understand the provided sample code and convert it to something I could make sense of, but with limited success. I then found additional resources on Daniel Shiffman’s YouTube channel. He made three videos on Markov chains and how to program them. However, translating his p5.js/JavaScript-based syntax into Processing proved more difficult than I expected. The early results I got were promising, but at some point I had trouble with making objects and classes. I read what I could find on objects and classes in the “Learning Processing” book, but the basic understanding I managed to get after a couple of days did not allow me to move ahead with my code. I sought help on the processing.org forum while investigating other resources.

This is when I came across the RiTa library, which is a powerful resource for computational literature. It made me realize that the field was already very well covered and that finding my own, modest voice would be challenging. I watched all the tutorials on the RiTa library in order to come up with ideas on how to make the best use of Markov chains.

That’s when I remembered another assignment that I had had a lot of fun doing a couple of months before: a Manfred Mohr-inspired piece, for which I made a basic, abstract handwriting program based on noise and randomness (a random walk with constraints). At the time, I wanted to create an actual, readable handwriting tool, but my coding skills didn’t allow me to do so. By December, however, this sounded more technically feasible. Pairing it with a Markov chain text generator even started to sound like the beginning of a concept. I could try to build some sort of forged handmade pages (novels, love letters, manuscripts…). It would have to be thought of as a sort of simple Turing test: I’d have to make the forgeries look such that no one could tell they had been made by a program.

Technical

Thanks to the RiTa library, the technical aspects of the text generator were pretty much figured out. I could then focus on building an interesting handwritten typeface. Every recurring letter would have to look different from its previous occurrence. I looked into my archive of hand-drawn letters, from 17th-century manuscripts to Jean Dubuffet’s poetry, but figured it would make more sense to try to mimic my own handwriting.

I drew each character (lowercase, uppercase, plus some punctuation) with simple lines, using Bézier vertices. I added parametric noise to the vertices in order to create a semi-controlled deformation every time a new letter is drawn. However, I had to make sure to add the same noise values to each point and its two Bézier handles, so that the line would still feel smooth and continuous.
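Why the same offset has to go on a point and both of its handles can be shown with a small sketch. It is written in plain Java rather than as a Processing sketch, and it rests on assumptions: the class `JitterSketch`, the six-float node layout and the `shakiness` parameter are hypothetical names, and `java.util.Random` stands in for whatever noise source the original program used. Moving an anchor and its handles together leaves the handles in the same position relative to the anchor, so the tangent through that point is unchanged and the curve stays smooth.

```java
import java.util.Random;

// Hypothetical illustration of jittering Bezier nodes without breaking smoothness.
class JitterSketch {

    // Each node is {inX, inY, x, y, outX, outY}:
    // the incoming handle, the anchor point, and the outgoing handle.
    static float[][] jitter(float[][] nodes, float shakiness, Random rng) {
        float[][] out = new float[nodes.length][6];
        for (int i = 0; i < nodes.length; i++) {
            // One random offset per node, in the range [-shakiness, +shakiness].
            float dx = (rng.nextFloat() * 2 - 1) * shakiness;
            float dy = (rng.nextFloat() * 2 - 1) * shakiness;
            // Apply that same offset to the anchor and to both handles.
            for (int j = 0; j < 6; j += 2) {
                out[i][j] = nodes[i][j] + dx;
                out[i][j + 1] = nodes[i][j + 1] + dy;
            }
        }
        return out;
    }

    public static void main(String[] args) {
        // Two made-up nodes of a letter stroke.
        float[][] stroke = {
            {0f, 0f, 10f, 10f, 20f, 20f},
            {30f, 5f, 40f, 5f, 50f, 5f}
        };
        float[][] shaken = jitter(stroke, 3f, new Random());
        for (float[] node : shaken) {
            System.out.println(java.util.Arrays.toString(node));
        }
    }
}
```

In a Processing sketch, the jittered values would then be passed to `vertex()` and `bezierVertex()` in the usual way. Giving each handle its own independent offset would instead introduce a kink at every anchor.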

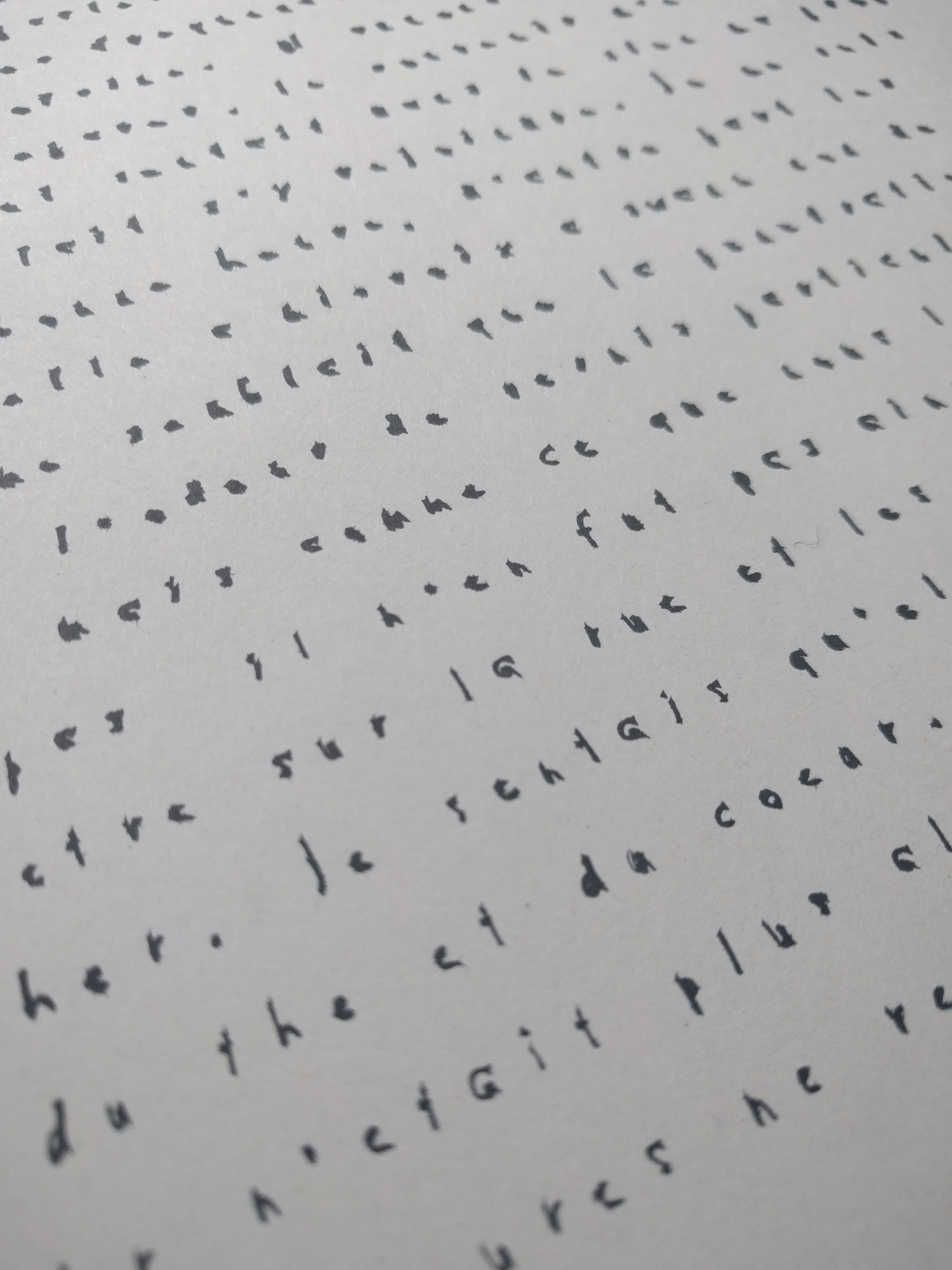

I then wrote the function that looks at the position of each character in the Markov-generated string (using the .charAt method) and turns it into X and Y coordinates accordingly. Every time it hit the right margin I had defined, it would trigger a line counter and the Y position would increase. I also stored a maximum number of characters per line and per page, which depended on the X and Y size, letter space and line space values. I wanted to be able to play with these settings based on a live visual feed, so I created controls for:

* The width and height of the letters, which control the size of the unit variable I created when drawing my letters

* The space between letters, which is the increment added to the X position

* The line space, which is the increment added to the Y position

* And maybe the most interesting one: the amount of noise added to the vertices, which makes the writing look more or less shaky.
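The layout step described above, turning a character’s position in the string into X and Y coordinates, can be sketched as follows. This is a simplified illustration under assumptions, not the project’s actual function: `LayoutSketch`, `charsPerLine`, `positionOf` and all the numeric values are hypothetical, and the sketch derives the line and column directly from the character index instead of incrementing a line counter when the right margin is hit, which gives the same grid.

```java
// Hypothetical layout helper, for illustration only.
class LayoutSketch {

    // How many characters fit between the left and right margins.
    static int charsPerLine(float pageWidth, float margin, float letterSpace) {
        return (int) ((pageWidth - 2 * margin) / letterSpace);
    }

    // Top-left position of the character at the given index in the string.
    static float[] positionOf(int index, int perLine, float margin,
                              float letterSpace, float lineSpace) {
        int line = index / perLine;   // completed lines so far
        int column = index % perLine; // position within the current line
        float x = margin + column * letterSpace;
        float y = margin + line * lineSpace;
        return new float[] {x, y};
    }

    public static void main(String[] args) {
        String text = "a short generated sentence"; // placeholder text
        float margin = 10f, letterSpace = 8f, lineSpace = 12f; // made-up settings
        int perLine = charsPerLine(100f, margin, letterSpace);
        for (int i = 0; i < text.length(); i++) {
            float[] p = positionOf(i, perLine, margin, letterSpace, lineSpace);
            // In the real tool, this is where the letter for text.charAt(i) would be drawn.
            System.out.println(text.charAt(i) + " at " + p[0] + ", " + p[1]);
        }
    }
}
```

Because the characters-per-line value is computed from the letter space, changing a spacing control immediately changes where lines break, which matches the behaviour of the live controls listed above.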

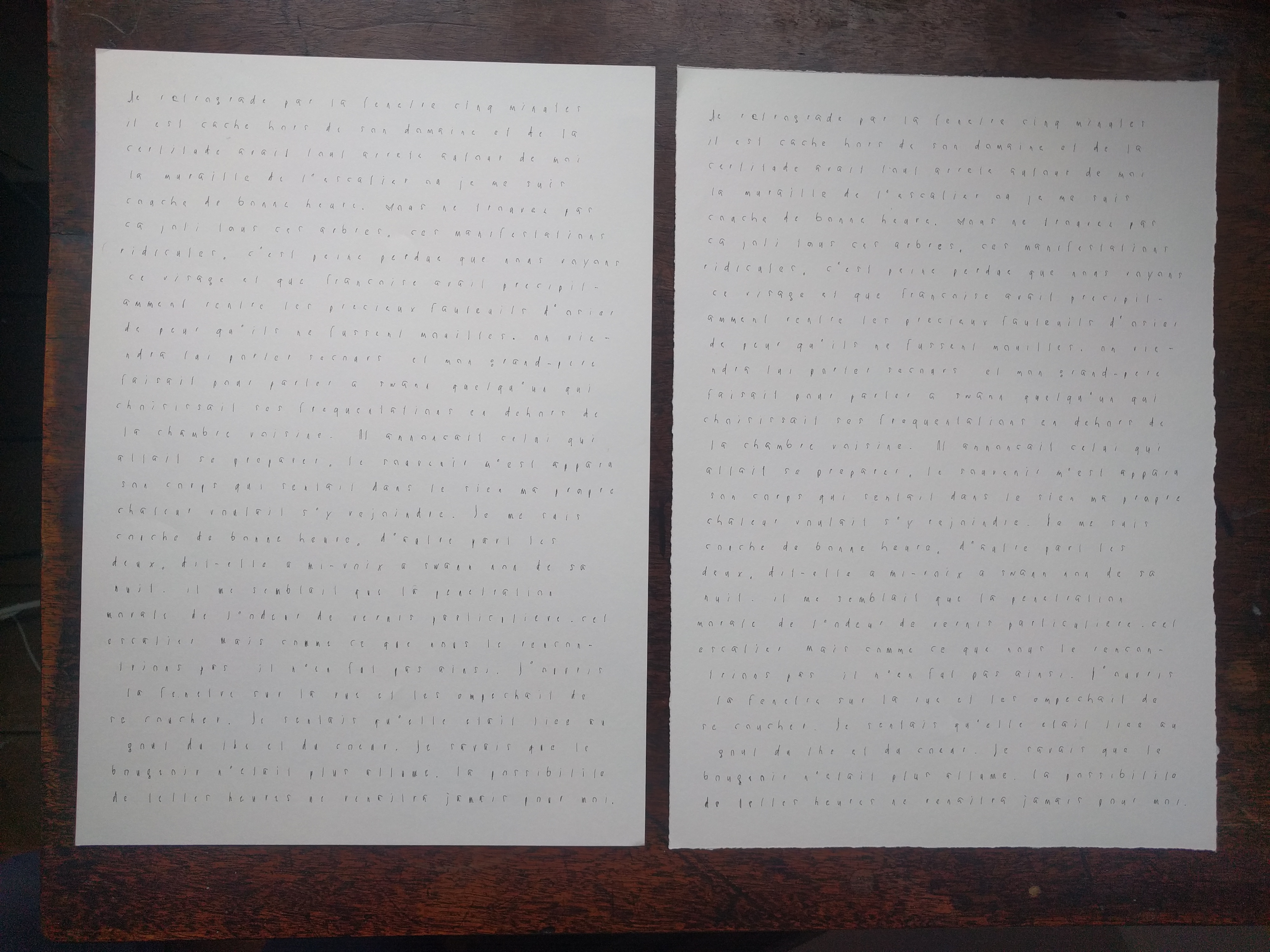

Finally, I added ‘refresh’ and ‘export’ buttons. After adding those controls I was quite happy with the simplicity of the tool, and the rough aspect of the outputs it allowed me to create.

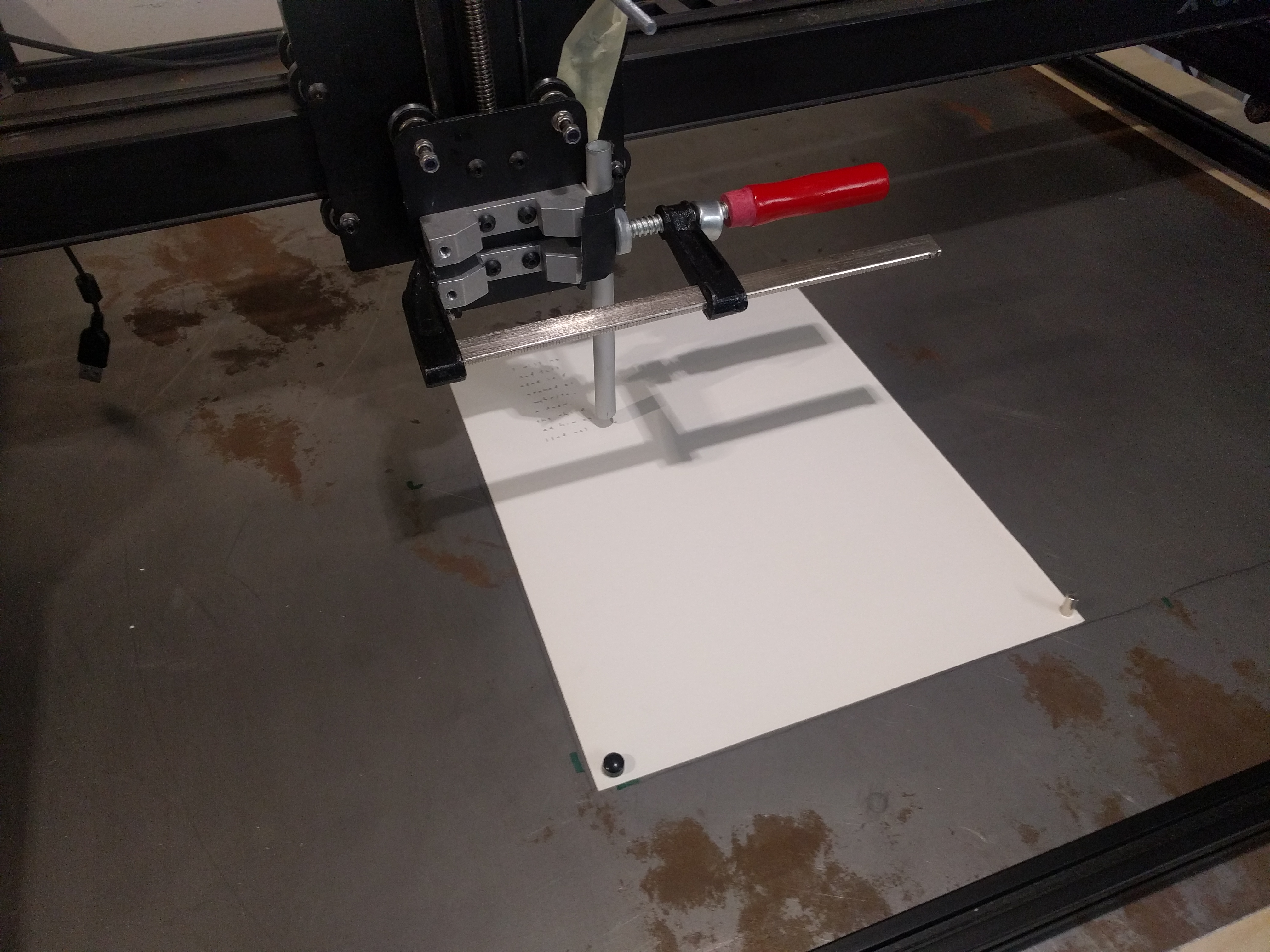

When designing for print, one needs to take into account the printing technique that’s going to be used during the design process, not after. In my case, choosing the most appropriate printing method meant finding the one that would reproduce handwriting most accurately. I used a CNC mill, to which I taped simple pens and pencils. It fit very well with the idea of making forgeries/Turing-test pages, for which you could hardly tell whether they were made by a human or a computer. A pen-traced line has a material quality that inkjet printers simply don’t allow.

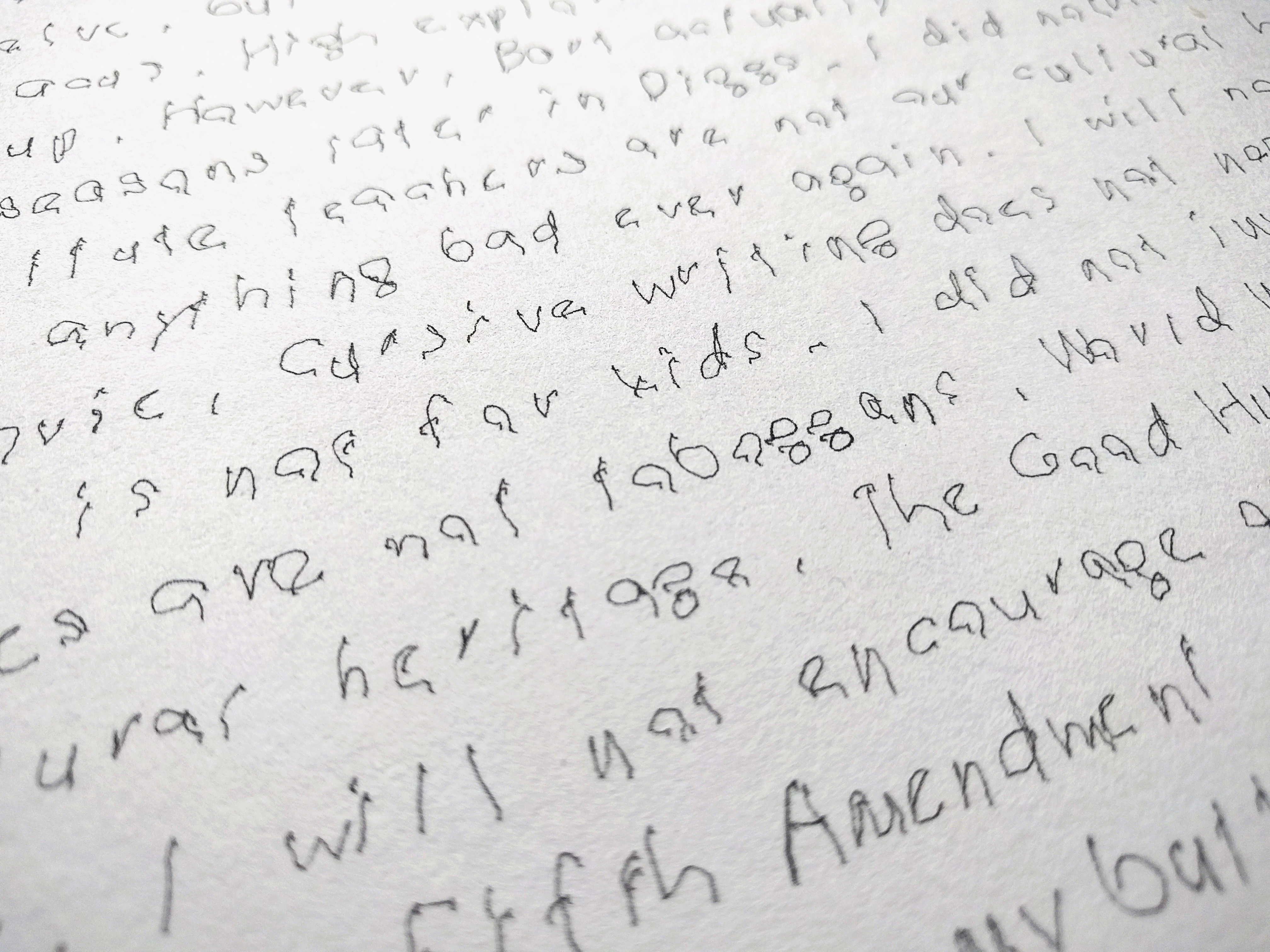

I noticed that I could make my pages look very ‘suspicious’ by drawing really small characters: the overall steadiness makes it seem impossible that a human could have done this by hand, despite the shaky lettershapes.

Future development

I would really like to develop this tool further, and maybe explore this machine/human threshold: for instance by creating an entire manuscript without a single blunder, or by writing the same one-page text over and over again with thousands of different lettershapes. It could also be interesting to use it to add hidden, encrypted content, or visual patterns in the sentences.

Acknowledgements

References I used for the project:

* https://rosettacode.org/wiki/Execute_a_Markov_algorithm#Java

* https://rednoise.org/rita/

* Daniel Shiffman’s tutorials (p5.js) https://shiffman.net/a2z/markov/

* Daniel Shiffman’s Thesis generator using Markov chains https://github.com/shiffman/A2Z-F16/blob/gh-pages/week7-markov/07_Thesis_Project_Generator/index.html

* Allison Parrish’s Generative Course Descriptions http://static.decontextualize.com/toys/next_semester?

* Chris Harrison’s Web Trigrams http://www.chrisharrison.net/index.php/Visualizations/WebTrigrams

* Learning Processing, Daniel Shiffman, chapter 8: Objects

* A list of all of Bart Simpson’s blackboard quotes to date: http://simpsons.wikia.com/wiki/List_of_chalkboard_gags

* The King James Bible on Project Gutenberg: http://www.gutenberg.org/files/10/10-h/10-h.htm

* A little theory on Markov chains https://www.analyticsvidhya.com/blog/2014/07/markov-chain-simplified/

* Even more theory on Markov chains http://www.cs.princeton.edu/courses/archive/spr05/cos126/assignments/markov.html