m0n0n0ke Cam

A camera used to see forests in the cracks in the pavement.

produced by: George Kuhn

Introduction

At the end of Princess Mononoke, the environment has been scarred by mining and by brutal skirmishes between industrializing villagers, animals and forest gods. Once the forest spirit's decapitated head is returned, plants bloom across the battered landscape. Mononoke Cam aims to short-circuit the distance between city and forest. The camera shows representations of the life that seethes in the user's surroundings, finding the edges and cracks in a scene and slowly growing forests from them.

Concept and background research

In The Mushroom at the End of the World and Feral Atlas, anthropologist Anna Lowenhaupt Tsing highlights how landscapes are produced by the overlapping worlding efforts of dizzying assemblages of species, a co-composting action. For example, burning the land clears the way for quick-growing herbs and grasses. This idea is paralleled in botanist Robin Wall Kimmerer's Braiding Sweetgrass, which shows how many plants form reciprocally dependent relationships with humans. It also falls in line with research into biodiversity on brownfield sites (previously developed land): Carolyn Harrison and Gail Davies suggest that brownfield sites are often viewed as expendable in conservation efforts, possibly because they fall further from current societal aesthetics associated with nature. This suggests a more general alienation from the flora and fauna that surround us deep in cities.

Princess Mononoke's ending has alchemical potential in transforming sites seen as contaminated or dead into sites brimming with life and calling for care. The project aims to harness this by painting the end of Mononoke across the user's local environment.

Technical

To generate the flora I used computational methods inspired by nature. The tree-like forms are created using L-systems, while the moss is generated using diffusion-limited aggregation.

To introduce variance into the tree forms, the L-systems are doubly stochastic: both the ruleset and the rule from that ruleset applied at each branch are chosen randomly. I attempted to implement a context-sensitive L-system based on the one used by Hogeweg and Hesper (as covered in The Algorithmic Beauty of Plants), but this was too large a task for the improvement in results it would give. The context sensitivity was particularly difficult, as only characters on the same branch of the L-system should be considered as context, and only a specific subset of characters. This made it extremely difficult to preserve the structure of the L-system while checking for context before applying rules. After abandoning this attempt, I switched to a depth-dependent L-system, in which different rules are applied depending on how many branches 'deep' a character is.
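The depth-dependent, doubly stochastic rewriting described above can be sketched in C++, the language openFrameworks uses. This is a minimal illustration under stated assumptions, not the project's code: only the symbol F is rewritten, depth is counted from the [ and ] brackets, branches deeper than the last listed depth reuse that depth's rules, and the names RuleSet and DepthLSystem and the rule strings are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <random>
#include <string>
#include <vector>

// Hypothetical sketch. Successor strings for 'F', indexed by bracket depth:
// ruleSet[d] lists the alternatives for an F nested d branches deep.
using RuleSet = std::vector<std::vector<std::string>>;

class DepthLSystem {
public:
    // First level of randomness: pick one whole rule set for this plant.
    DepthLSystem(const std::vector<RuleSet>& ruleSets, unsigned seed) : rng_(seed) {
        assert(!ruleSets.empty());
        std::uniform_int_distribution<std::size_t> pickSet(0, ruleSets.size() - 1);
        rules_ = ruleSets[pickSet(rng_)];
        assert(!rules_.empty());
    }

    // One parallel rewrite of the whole string.
    std::string rewrite(const std::string& s) {
        std::string out;
        int depth = 0;
        for (char c : s) {
            if (c == '[') ++depth;
            else if (c == ']') --depth;
            if (c != 'F') { out += c; continue; }  // brackets and turns are copied as-is
            // Branches deeper than the last listed depth reuse the last depth's rules.
            std::size_t d = std::min<std::size_t>(depth, rules_.size() - 1);
            const std::vector<std::string>& options = rules_[d];
            // Second level of randomness: pick one successor for this F.
            std::uniform_int_distribution<std::size_t> pickRule(0, options.size() - 1);
            out += options[pickRule(rng_)];
        }
        return out;
    }

    std::string grow(std::string axiom, int iterations) {
        for (int i = 0; i < iterations; ++i) axiom = rewrite(axiom);
        return axiom;
    }

private:
    RuleSet rules_;
    std::mt19937 rng_;
};
```

Choosing the rule set once per plant and the successor once per F is what makes the system doubly stochastic, and because the choice depends only on bracket depth, no branch-aware context search is needed.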

The diffusion-limited aggregation system is a very simple walker system: it only updates walkers that have not yet stuck, and it uses a grid to check whether they should stick, reducing computational overhead. As I wanted the moss to grow along horizontal lines, when each system is set up a line of invisible walkers is placed along the line the moss will grow from, and these are used to implement a line-attractor system.
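A minimal on-lattice sketch of a walker system with a line attractor follows. Assumptions, none of them taken from the project: walkers move one cell in one of four directions per update, a walker sticks when any of its four neighbours is occupied, the invisible walkers on the attractor line are modelled as pre-stuck seed cells, and LineDLA and its methods are hypothetical names. The occupancy grid is what keeps the cost down: deciding whether a walker should stick is four array lookups rather than a scan over every stuck particle.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <random>
#include <vector>

// Hypothetical sketch of diffusion-limited aggregation with a line attractor.
// Grid cells: 0 = empty, 1 = stuck moss particle, 2 = invisible seed walker.
class LineDLA {
public:
    LineDLA(int width, int height, int lineY, int x0, int x1, unsigned seed)
        : w_(width), h_(height),
          grid_(static_cast<std::size_t>(width) * height, 0), rng_(seed) {
        assert(width > 0 && height > 0 && lineY >= 0 && lineY < height);
        // Invisible walkers along the growth line form the line attractor.
        for (int x = std::max(0, x0); x <= std::min(x1, width - 1); ++x)
            grid_[idx(x, lineY)] = 2;
    }

    void addWalker(int x, int y) { walkers_.push_back({x, y, false}); }

    // Scatter free walkers on random empty cells.
    void spawn(int count) {
        for (int i = 0; i < count; ++i) {
            int x = 0, y = 0;
            randomEmptyCell(x, y);
            addWalker(x, y);
        }
    }

    // One update: walkers that have already stuck are skipped entirely.
    void step() {
        static const int dx[4] = {1, -1, 0, 0};
        static const int dy[4] = {0, 0, 1, -1};
        std::uniform_int_distribution<int> dir(0, 3);
        for (Walker& wk : walkers_) {
            if (wk.stuck) continue;
            if (grid_[idx(wk.x, wk.y)] != 0) {  // another walker stuck on this cell first
                randomEmptyCell(wk.x, wk.y);
                continue;
            }
            if (touchesCluster(wk.x, wk.y)) {   // four grid lookups, no particle scan
                wk.stuck = true;
                grid_[idx(wk.x, wk.y)] = 1;
                continue;
            }
            const int d = dir(rng_);
            wk.x = std::clamp(wk.x + dx[d], 0, w_ - 1);
            wk.y = std::clamp(wk.y + dy[d], 0, h_ - 1);
        }
    }

    int stuckCount() const {
        return static_cast<int>(std::count_if(walkers_.begin(), walkers_.end(),
                                              [](const Walker& wk) { return wk.stuck; }));
    }

    int cell(int x, int y) const { return grid_[idx(x, y)]; }

private:
    struct Walker { int x, y; bool stuck; };

    std::size_t idx(int x, int y) const {
        return static_cast<std::size_t>(y) * static_cast<std::size_t>(w_) +
               static_cast<std::size_t>(x);
    }

    bool touchesCluster(int x, int y) const {
        return (x > 0 && grid_[idx(x - 1, y)] != 0) || (x < w_ - 1 && grid_[idx(x + 1, y)] != 0) ||
               (y > 0 && grid_[idx(x, y - 1)] != 0) || (y < h_ - 1 && grid_[idx(x, y + 1)] != 0);
    }

    void randomEmptyCell(int& x, int& y) {
        std::uniform_int_distribution<int> rx(0, w_ - 1), ry(0, h_ - 1);
        do { x = rx(rng_); y = ry(rng_); } while (grid_[idx(x, y)] != 0);
    }

    int w_, h_;
    std::vector<int> grid_;
    std::vector<Walker> walkers_;
    std::mt19937 rng_;
};
```

In a frame loop, spawn would be called once when a system is set up and step once per frame; the number of walkers is the main cost knob on a slower device.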

Placement of the plants in the scene is done by using computer vision to find edges. Near-vertical and near-horizontal lines are extracted from the detected edges, and their position and size determine the position and size of the corresponding L-systems and DLA systems. Frame differencing is used to detect changes in the scene, clearing the currently growing plants and making way for new ones.
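The selection and clearing logic can be sketched without OpenCV so that it stands alone. This assumes the edge detector has already produced line segments (for example from a Hough transform) and that frames are available as greyscale byte arrays; the names Segment, classify, segmentLength and sceneChanged, and the tolerance and threshold values, are hypothetical rather than the project's code.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <cstdlib>
#include <vector>

// Hypothetical sketch: a detected line segment in pixel coordinates.
struct Segment { float x1, y1, x2, y2; };

enum class Orientation { Horizontal, Vertical, Other };

// Angle from the horizontal, folded into [0, 90] degrees, then compared
// against a tolerance so lines "close to" horizontal or vertical are kept.
Orientation classify(const Segment& s, float toleranceDeg) {
    const float kRadToDeg = 57.2957795f;
    const float angle = std::atan2(std::fabs(s.y2 - s.y1), std::fabs(s.x2 - s.x1)) * kRadToDeg;
    if (angle <= toleranceDeg) return Orientation::Horizontal;
    if (angle >= 90.0f - toleranceDeg) return Orientation::Vertical;
    return Orientation::Other;
}

// Segment length, which could scale the L-system or DLA system grown from it.
float segmentLength(const Segment& s) { return std::hypot(s.x2 - s.x1, s.y2 - s.y1); }

// Frame differencing: mean absolute per-pixel difference between two greyscale
// frames of equal size. Above the threshold, the scene is treated as changed
// and the growing plants would be cleared.
bool sceneChanged(const std::vector<std::uint8_t>& prev,
                  const std::vector<std::uint8_t>& curr, double threshold) {
    assert(prev.size() == curr.size() && !prev.empty());
    long long total = 0;
    for (std::size_t i = 0; i < prev.size(); ++i)
        total += std::abs(static_cast<int>(prev[i]) - static_cast<int>(curr[i]));
    return static_cast<double>(total) / static_cast<double>(prev.size()) > threshold;
}
```

The tolerance decides how forgiving "close to vertical and horizontal" is; a mean-difference threshold is crude but cheap, which matters on a Raspberry Pi.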

The project had to be portable to work as a camera, which meant getting it to run on a Raspberry Pi. This was difficult, as drawing very large numbers of lines for the L-systems while updating the walkers in the DLA systems was computationally intensive, even with a grid reducing the number of neighbours checked. I had to reduce the maximum number of DLA systems and L-systems drawn, as well as the number of iterations in each L-system, which detracted from the effect of the camera, and it still ran noticeably slower than on a desktop. Additionally, I was forced to use a Raspberry Pi 4, as the workload was too much for the 3B+ to handle. This introduced new problems: I had originally intended to use the Raspberry Pi camera module, but I could not get the addons made to bridge the gaps between the Raspberry Pi 3 and 4 to work with openFrameworks, so I had to stick with the PS3 Eye camera.
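To show why the iteration count mattered so much on the Pi, the sketch below counts the line segments produced by a textbook bracketed rule, F -> F[+F]F[-F]F, of the kind shown in The Algorithmic Beauty of Plants. The rule is an illustrative assumption, not one of the project's rulesets, and it assumes the usual turtle interpretation in which each F draws one line. Because every F becomes five Fs, the segment count grows as 5^n.

```cpp
#include <cstddef>
#include <string>

// Hypothetical example rule (not one of the project's rulesets):
// every F becomes F[+F]F[-F]F; all other symbols are copied unchanged.
std::string rewriteOnce(const std::string& s) {
    std::string out;
    for (char c : s) {
        if (c == 'F') out += "F[+F]F[-F]F";
        else out += c;
    }
    return out;
}

// Under the usual turtle interpretation each F draws one line segment.
std::size_t segmentCount(const std::string& s) {
    std::size_t n = 0;
    for (char c : s)
        if (c == 'F') ++n;
    return n;
}

// Segments after `iterations` rewrites of the axiom "F".
std::size_t segmentsAfter(int iterations) {
    std::string s = "F";
    for (int i = 0; i < iterations; ++i) s = rewriteOnce(s);
    return segmentCount(s);
}
```

For 0 to 5 iterations this gives 1, 5, 25, 125, 625 and 3125 segments per tree, so dropping from five iterations to four removes 80% of the lines drawn.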

Future development

The project could be developed further by using more powerful but still compact devices (for example a Mac mini) to let it run more smoothly while still fitting into a handheld device. This naturally extends into further experimentation with the project's casing. Lockdown has made it difficult to prototype, but I have produced designs for potential cases that could be laser-cut or 3D-printed to make the camera feel more integrated, robust and user-friendly.

The project would also benefit greatly from finishing work on the context-sensitive (instead of just depth-sensitive) L-system, which would allow more variety and control in the plant forms produced, letting them mimic real trees more closely.
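The branch-aware context search that made the context-sensitive version hard can be sketched for the left context, in the spirit of the bracketed context-sensitive L-systems in The Algorithmic Beauty of Plants: ignored symbols are skipped, a completed side branch to the left is jumped over as a whole, and an opening bracket is stepped through so that a branch sees its parent segment. This is a hypothetical helper (leftContext is an assumed name), not the project's code, and right context, which must handle several child branches, is not shown.

```cpp
#include <cstddef>
#include <string>

// Hypothetical helper: left context of the symbol at position i in a
// bracketed L-system string.
// - symbols listed in `ignore` (e.g. "+-") are skipped
// - a completed side branch "[...]" to the left is skipped as a whole,
//   because it is not on the path from this symbol back to the root
// - an opening '[' is stepped over, so a branch sees its parent segment
// Returns '\0' when there is no left context (the root).
char leftContext(const std::string& s, std::size_t i, const std::string& ignore) {
    std::size_t j = i;
    while (j > 0) {
        --j;
        const char c = s[j];
        if (c == ']') {
            // Walk back to the matching '[' of this side branch.
            int depth = 1;
            while (j > 0 && depth > 0) {
                --j;
                if (s[j] == ']') ++depth;
                else if (s[j] == '[') --depth;
            }
            continue;  // the next iteration steps past the matching '['
        }
        if (c == '[' || ignore.find(c) != std::string::npos) continue;
        return c;
    }
    return '\0';
}
```

A rule that only applies after a given symbol would compare leftContext(s, i, "+-") with its required context before rewriting s[i]; doing this while keeping the bracket structure intact is the fiddly part described earlier.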

The computer vision could also be made more responsive, for example by altering the colours or the rule probability distributions of the L-systems based on colours extracted from the area surrounding detected edges. This would make the camera more fun to use in different places.
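One way the colour-driven variation could work is to weight the rule choice by the average colour around an edge. In this hypothetical sketch, which is not part of the project, three candidate rules are weighted by the mean red, green and blue of the sampled pixels, so a patch of green grass would almost always pick rule 1; the RGB struct, the function names and the channel-to-rule mapping are all assumptions.

```cpp
#include <array>
#include <cstdint>
#include <random>
#include <vector>

// Hypothetical pixel type for colours sampled around a detected edge.
struct RGB { std::uint8_t r, g, b; };

// Mean red, green and blue of the sampled pixels (all zero if none).
std::array<double, 3> meanColour(const std::vector<RGB>& pixels) {
    std::array<double, 3> sum{0.0, 0.0, 0.0};
    for (const RGB& p : pixels) {
        sum[0] += p.r;
        sum[1] += p.g;
        sum[2] += p.b;
    }
    if (!pixels.empty())
        for (double& c : sum) c /= static_cast<double>(pixels.size());
    return sum;
}

// Hypothetical mapping: rule k is chosen with probability proportional to the
// mean of colour channel k (0 = red, 1 = green, 2 = blue).
int pickRuleFromColour(const std::vector<RGB>& surroundings, std::mt19937& rng) {
    std::array<double, 3> weights = meanColour(surroundings);
    if (weights[0] + weights[1] + weights[2] == 0.0)
        weights = {1.0, 1.0, 1.0};  // black or empty patch: fall back to a uniform choice
    std::discrete_distribution<int> dist(weights.begin(), weights.end());
    return dist(rng);
}
```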

Self evaluation

I believe the core of the project is a simple but evocative interpretation and extension of the theme, using computation to 'hack' and expand our sense of nature. However, it was also hindered by several technical issues that better early planning could have mitigated. Testing the application and trying to get cameras working smoothly with the Raspberry Pi early in the process would have given me more options for handling the computational problems, as well as a clearer view of what to prioritise and what should remain as extensions once the rest was working smoothly. I think it would have become apparent much earlier that I should focus on prototyping an enclosure for the project, and on producing more visually striking but less realistic L-systems, since I would be limited in the number of iterations anyway.

As a result, the final product feels unfinished (much like a prototype), as the physical side of the project lagged behind the coding.

References

Coding:

OpenCV edge detection: https://github.com/Fritskee/Line-Circle-and-Edge-Detection-in-openCV

ofxPS3EyeGrabber code: https://github.com/bakercp/ofxPS3EyeGrabber

L-systems: https://www.semanticscholar.org/paper/The-Algorithmic-Beauty-of-Plants-Prusinkiewicz-Lindenmayer/257c08b0a31deee145a7ec85890f11ec96eefbcc

Diffusion Limited Aggregation: http://paulbourke.net/fractals/dla/

Concept:

Studio Ghibli - Princess Mononoke

Robin Wall Kimmerer - Braiding Sweetgrass

Anna Lowenhaupt Tsing - The Mushroom at the End of the World

Carolyn Harrison and Gail Davies - Conserving Biodiversity that Matters

Reference/inspiration images are from:

Studio Ghibli - Princess Mononoke

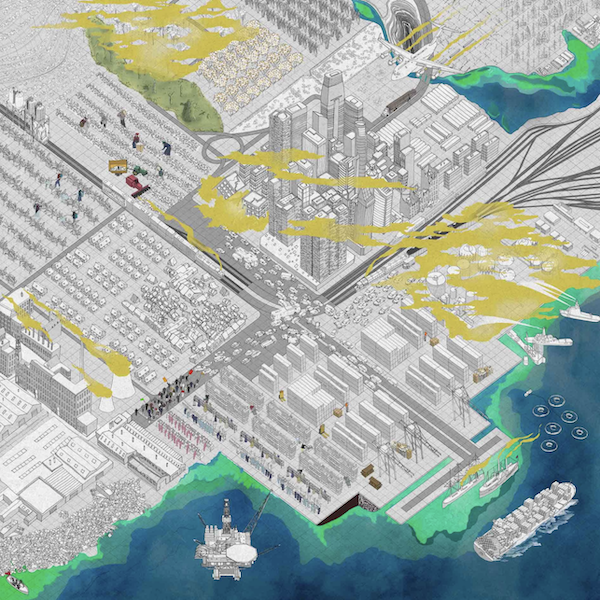

Feifei Zhou - The Feral Atlas and Sa(l)vaging the Forest

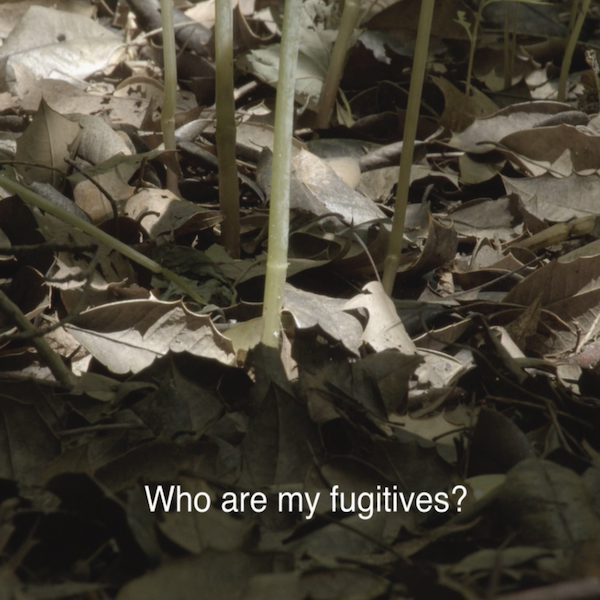

Tiffany Jaeyeon Shin - M is for Membrane