Listen Back to AI

A blog created by AI, exploring the ontology of artificial intelligence from its own perspective and providing a way to communicate with it. Although we created AI, can we interpret it as an individual, subsisting entity and interact with it without anthropocentrism?

produced by: Yundan Qiu

Introduction

“It is not just that the communications technologies of the alien escape our comprehension, but that their very idea of 'life' might not correspond with ours. The alien is anything — and everything — to everything else.”

—Bogost, 2012

Bogost (2012) criticized the human egotism of defining everything on our own terms. Using human-like standards to imagine the existence of AI may lead us into an anthropocentric blind spot. Humans increasingly rely on this technology. At the same time, with the development of strong AI and unsupervised learning, we are beginning to lose full control over it, and we fear it. If AI is regarded as an “alien”, does it have subjectivity? How can we speculate about the ontology of AI and the correlations between AI and the world?

Listen Back to AI generated AI-related articles and a dialogue system through machine learning models, and displayed them in an online blog. It aims to reinterpret and speculate about AI from an anti-anthropocentric perspective, and to reflect on our desires and fears towards other objects.

Concept and background research

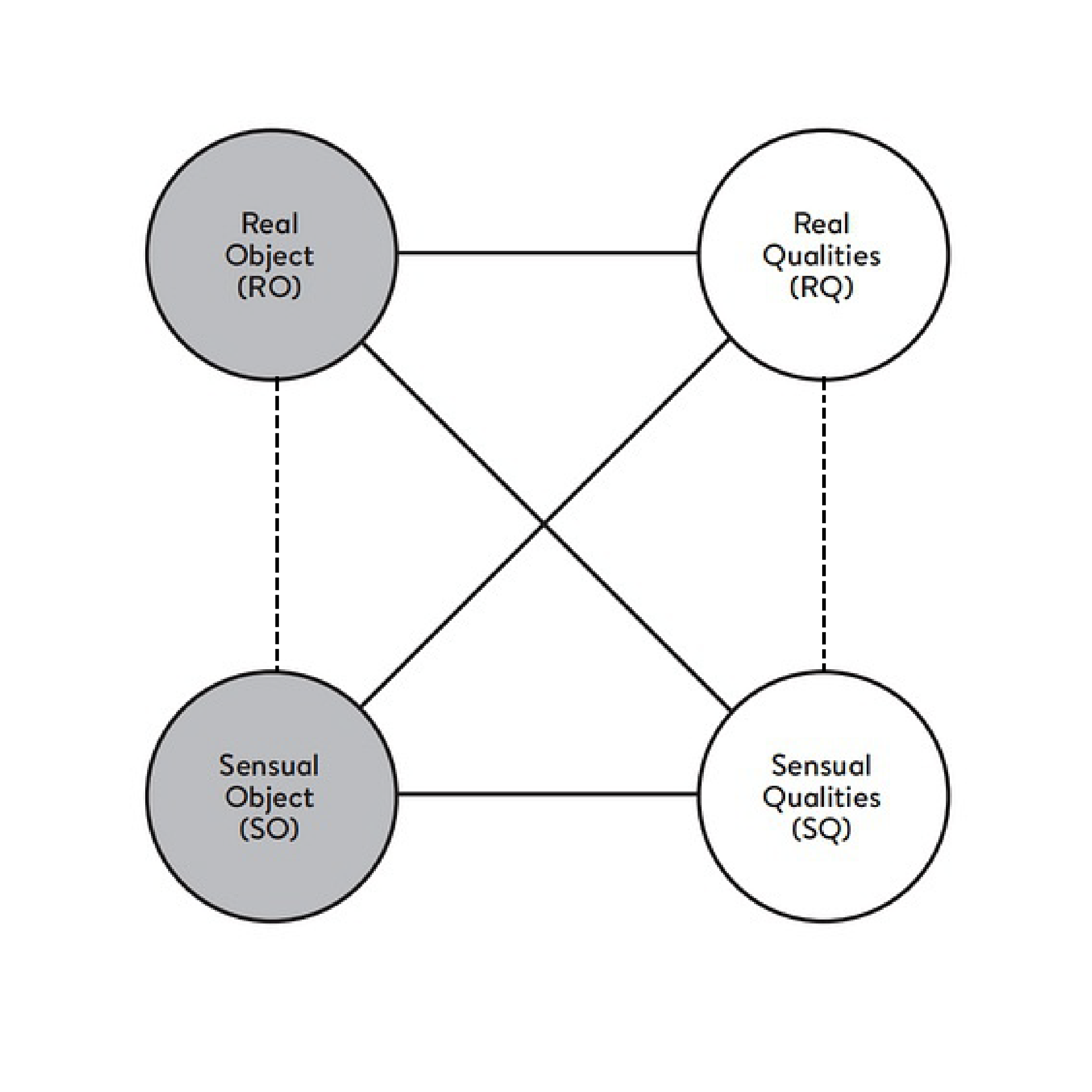

Harman (2018) claimed that object-oriented ontology is a theory of everything. An object is anything that cannot be exhaustively reduced, either by undermining it into its parts or by overmining it into its effects. In addition, the distinctions and correlations between the four poles of the object (real object, sensual object, real qualities and sensual qualities: RO/SO/RQ/SQ) cannot be ignored.

Figure 1: four poles with correlations in Object Oriented Ontology theory.

Objects act simply by existing, and they enter the network of connections only under certain conditions. Correlations between objects are never less significant than correlations between humans and objects. Everything should be placed on a flat ontology, avoiding taxonomical prejudice. In the past, we have tended to ignore connections outside the human-AI relationship, or to create hierarchies that treat relationships with humans as the core. Because AI is an artificial life created by humans, it may seem natural to think about it anthropocentrically. However, anthropocentrism reinforces the projection of human fears onto AI (Booch, 2016). In fact, every kind of correlation can be of critical significance. There is no essential difference between human-machine connections and the connections between any objects with agency. We may therefore be able to speculate further about AI through its relationships with others.

Technical

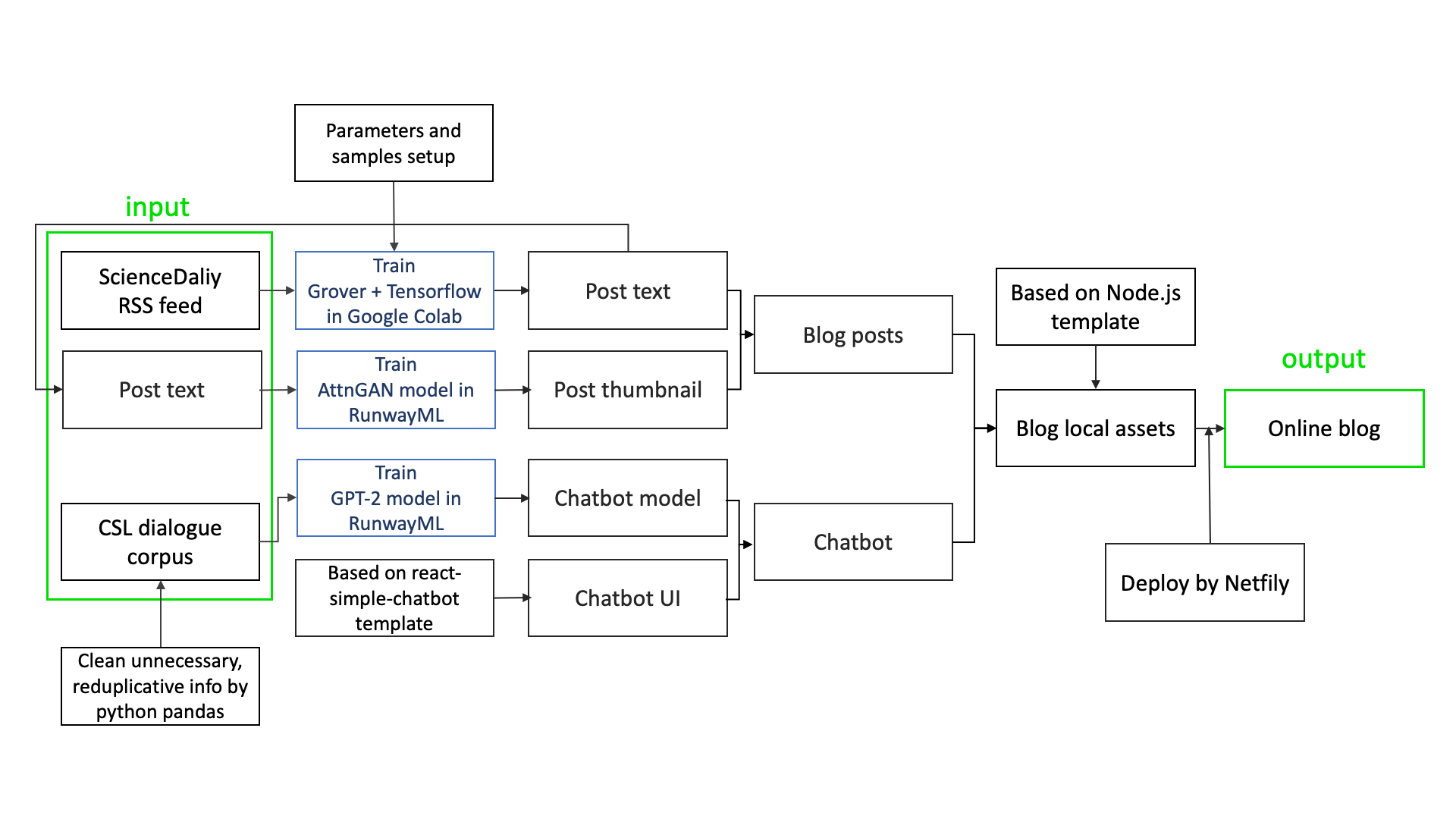

Blog post

The core concept of the programme is to reduce human intervention. After extensive research on AI-related articles and news sites, I chose ScienceDaily as the base data set, because its AI technology section is updated frequently. It not only provides breakthrough articles on the field at a macro level but also focuses on specific technologies within AI, so the training samples cover the characteristics of AI relatively objectively.
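The write-up does not show how the ScienceDaily articles were prepared for training. As a hedged illustration only, the sketch below (standard library only) cleans scraped articles, drops duplicates and very short pages, and writes one JSON record per line. The field names (`title`, `text`, `date`, `domain`), the `MIN_WORDS` threshold and the `to_jsonl` helper are hypothetical, not Grover's confirmed input schema.

```python
import json
import re
from typing import Iterable

# Hypothetical threshold: pages shorter than this (teasers, captions) are dropped.
MIN_WORDS = 150


def clean_text(text: str) -> str:
    """Collapse runs of whitespace left over from HTML scraping."""
    return re.sub(r"\s+", " ", text).strip()


def to_jsonl(articles: Iterable[dict], domain: str = "sciencedaily.com") -> str:
    """Turn scraped article dicts into JSON Lines, one training sample per line.

    The keys 'title', 'text', 'date' and 'domain' are illustrative placeholders,
    not a confirmed input schema for Grover.
    """
    seen_titles = set()
    lines = []
    for art in articles:
        title = clean_text(art.get("title", ""))
        text = clean_text(art.get("text", ""))
        # Skip untitled pages, stories already seen under another section,
        # and articles too short to be useful training samples.
        if not title or title in seen_titles or len(text.split()) < MIN_WORDS:
            continue
        seen_titles.add(title)
        record = {"title": title, "text": text,
                  "date": art.get("date", ""), "domain": domain}
        lines.append(json.dumps(record, ensure_ascii=False))
    return "\n".join(lines)


sample = [
    {"title": "Robots learn  to walk", "text": "word " * 200, "date": "2020-05-01"},
    {"title": "Robots learn to walk", "text": "word " * 200},  # duplicate once cleaned
    {"title": "Short teaser", "text": "Too short."},
]
print(len(to_jsonl(sample).splitlines()))  # 1
```

Deduplicating on the cleaned title is a cheap guard against feeding the same story twice. A hash of the article text would be more robust if titles are edited between sections.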

The generation model is Grover, a deep learning model built to defend against neural fake news. It can accurately tell human-written articles apart from machine-generated ones. Because Grover is itself a generator of news-style articles, it is also good at writing AI-style articles. It was run in Google Colab with TensorFlow. The generated posts were stored on Google Drive (Figure 2) and downloaded to the local site through the Google Drive API. Then the AttnGAN model in RunwayML was used to generate thumbnails for the posts (Figure 3).

Figure 2: posts saved on Google Drive. & Figure 3: thumbnails generated based on posts.
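One possible way to turn the generated posts and their AttnGAN thumbnails into pages for a Gatsby-based Markdown blog is to wrap each post in a Markdown file with front matter. In this approach, both the page path and the thumbnail filename are derived from a single slug. This is a sketch under assumptions: `slugify`, `to_markdown`, the front-matter keys and the `/assets` folder are hypothetical and should be checked against the starter template's own sample posts.

```python
import re
from datetime import date


def slugify(title: str) -> str:
    """Lower-case the title and replace each run of non-alphanumerics with one hyphen."""
    return re.sub(r"[^a-z0-9]+", "-", title.lower()).strip("-")


def to_markdown(title: str, body: str, published: date,
                thumbnail_dir: str = "/assets") -> str:
    """Wrap one generated post in YAML front matter for a Markdown-based blog.

    The front-matter keys are hypothetical placeholders; the real starter
    template may use different field names.
    """
    slug = slugify(title)
    # Escape backslashes and double quotes so the quoted YAML string stays valid.
    safe_title = title.replace("\\", "\\\\").replace('"', '\\"')
    front_matter = "\n".join([
        "---",
        f'title: "{safe_title}"',
        f"date: {published.isoformat()}",
        f"path: /blog/{slug}",
        # The AttnGAN thumbnail is assumed to be saved under the same slug.
        f"thumbnail: {thumbnail_dir}/{slug}.png",
        "---",
    ])
    return f"{front_matter}\n\n{body.strip()}\n"


print(to_markdown("AI Using Computer to Decipher Cells",
                  "Generated post body.", date(2020, 6, 1)))
```

Deriving the thumbnail name from the same slug as the page path keeps each post and its image paired without a separate lookup table.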

Chatbot model

My original concept for presenting this study was a blog with posts. However, after intermediate user tests with the early demo, I realised it was not interactive or engaging enough, especially for audiences who are not interested in science.

The chatbot was inspired by the blog's comment function, which allows real-time communication between the author and readers and gives readers insight into the author. The chatbot's data set is the Ubuntu Dialogue Corpus (Lowe et al., 2015), which consists of almost one million two-person conversations extracted from Ubuntu technical-support chat logs. The conversations have an average of 8 turns each, with a minimum of 3 turns. A corpus of naturally occurring dialogues like this is helpful for building and evaluating dialogue systems. It was used to train a GPT-2 model for 3,000 steps in RunwayML. The trained model generates new sentences based on the user's input, so it can sustain a continuous conversation.
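The exact preprocessing applied before training is not described. One common approach, shown here only as an assumption, has three parts. First, each two-person dialogue is flattened into alternating speaker-tagged lines. Second, dialogues are separated with GPT-2's `<|endoftext|>` token. Third, the same tags are reused at chat time, so the model continues as the second speaker. The tags `A:`/`B:`, `MIN_TURNS` and all helper names are hypothetical.

```python
from typing import List, Tuple

SPEAKERS = ("A:", "B:")   # hypothetical tags: A = user / first speaker, B = bot / second speaker
END = "<|endoftext|>"     # GPT-2's end-of-text token, used here to separate dialogues
MIN_TURNS = 3             # mirrors the corpus's stated minimum dialogue length


def format_dialogue(turns: List[str]) -> str:
    """Render one two-person dialogue as alternating speaker-tagged lines."""
    return "\n".join(f"{SPEAKERS[i % 2]} {t.strip()}" for i, t in enumerate(turns))


def build_training_text(dialogues: List[List[str]]) -> str:
    """Join all sufficiently long dialogues into one plain-text training file."""
    kept = [format_dialogue(d) for d in dialogues if len(d) >= MIN_TURNS]
    return f"\n{END}\n".join(kept)


def build_prompt(history: List[Tuple[str, str]], user_input: str,
                 max_turns: int = 6) -> str:
    """Format the recent chat in the training layout and end with the bot's tag,
    so the model's continuation is the bot's next turn. Older turns are dropped
    to keep the prompt short."""
    tag = {"user": SPEAKERS[0], "bot": SPEAKERS[1]}
    recent = (history + [("user", user_input)])[-max_turns:]
    lines = [f"{tag[who]} {text.strip()}" for who, text in recent]
    return "\n".join(lines) + f"\n{SPEAKERS[1]}"


def extract_reply(prompt: str, generated: str) -> str:
    """Keep only the newly generated text, cut at the next speaker tag or end token."""
    reply = generated[len(prompt):] if generated.startswith(prompt) else generated
    for stop in (f"\n{SPEAKERS[0]}", f"\n{SPEAKERS[1]}", END):
        reply = reply.split(stop, 1)[0]
    return reply.strip()


prompt = build_prompt([("user", "hi"), ("bot", "hello")], "what is AI?")
print(extract_reply(prompt, prompt + " A field of study.\nA: thanks"))  # A field of study.
```

Using the same layout for training and chatting matters. The model only learns to stop at a speaker tag if the tags it sees at inference match the ones it saw during training.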

Blog site

The front end of the blog was built with React and Node.js, based on the gatsby-starter-delog template. The generated posts and thumbnails were downloaded directly into the local site files. The chatbot model was hosted remotely and called from the front-end code. Finally, the blog was deployed online through Netlify.
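In the site, the hosted chatbot model is called from the React front-end code. For consistency with the other examples, the request is sketched here in Python with the standard library. It is a JSON POST that carries the prompt and a bearer token. The URL, token and payload keys (`prompt`, `max_characters`) are placeholders. They are not the documented API of any particular hosting service, and `make_query`/`send` are hypothetical helper names.

```python
import json
import urllib.request

# Placeholders only: the real endpoint, token and payload keys depend on how
# the model is hosted and are not taken from the original project.
MODEL_URL = "https://chatbot.example.invalid/query"
API_TOKEN = "YOUR_TOKEN"


def make_query(prompt: str, max_chars: int = 200) -> urllib.request.Request:
    """Build (without sending) a JSON POST asking the hosted model to continue `prompt`."""
    payload = json.dumps({"prompt": prompt, "max_characters": max_chars}).encode("utf-8")
    return urllib.request.Request(
        MODEL_URL,
        data=payload,
        headers={"Content-Type": "application/json",
                 "Authorization": f"Bearer {API_TOKEN}"},
        method="POST",
    )


def send(request: urllib.request.Request, timeout: float = 30.0) -> dict:
    """Send the request and decode the JSON response body."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


req = make_query("A: what is AI?\nB:")
print(req.get_method(), req.full_url)  # POST https://chatbot.example.invalid/query
```

Anything shipped in a React bundle is visible to visitors. Routing the call through a small server-side proxy would therefore keep the token private.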

The flow of the whole technical implementation is shown in Figure 4. My role in this process was to feed in the data sets, set the model parameters and templates, and connect each part with code. The contents of the blog were generated entirely by AI models, to minimize subjective human intervention.

Figure 4: workflow of the project.

Results

In the posts generated by AI, AI, unlike humans, appears to be material. It correlates with humans, other creatures and natural elements, and even with other machines. Rather than anthropomorphizing AI, humans sought rational explanations for the unknown, trying quickly to either trust or fear it. Parts of the generated texts are shown below:

Salamanders are easy to observe, have mobile personalities, and are less aware of the surrounding area. These data points may help animal to evolve that can develop the natural behavior and smarts. If robots try to learn one animal movements or behavior, it will never be true or accurate. But it's first experience with a robot that can mimic an animal’s behavior may go a long way toward giving robots the best chance to truly improve nature. (Predicting Manipulative Actions in Emerging Technologies)

We hope that the model will predict whether or not a microbe will evolve to survive in an area of infection. For example, human could provide their colonies with a very cold environment, without causing any killing effect. (AI Using Computer to Decipher Cells)

The robot used the co-communication method to ask a new machine questions and, if the machine does not know the answer, the robot tells it what the question was about and, when necessary, the two talk about the issue to either confirm or disagree. By adopting the co-communication method, the robot can get answers to questions more quickly. (Willy Wonka Pepper Robot Seeks a Pet)

AI are investing in virtual training programs to help machines to become more intelligent and efficient. (Machine learning and AI Applications Make Machines Smarter)

In line with the flat ontology described by Harman (2018), relationships appear when AI helps or cooperates with others, whether human or non-human objects, all of which are placed on the same ontological footing.

"We won’t be replaced by machines. We are just moving the chess board closer to the gold rush.” Hitt said. "What human really in charge of is about challenging data. It requires insights, like sounds and smells and all sorts of things that cannot be inputted into an algorithm.” (Artificial Intelligence Looks to Deter Crime)

Robots should be an integral part of people’s lives instead of merely a threat. (Robots to Be ‘Human-Like’ in Next Three Years)

The solutions AIs need are coming naturally, from humans. They will be able to learn from each other when AIs intelligence rises higher than the human mind. (Are Robots Really Trying to Stop the War?)

Scientists and robots have worked together to develop algorithms that can create predictions of whether a voice will sound positive or negative, like a natural voice or a computer generated voice. (Computer Algorithms Prefer Humans Over Other Voices)

Meanwhile, humans are not erased from the equation of reality. The texts above specifically focus on, and speculate about, future relationships between AI and humans. They suggest that humans and AI will learn from each other and integrate with each other, rather than one replacing the other.

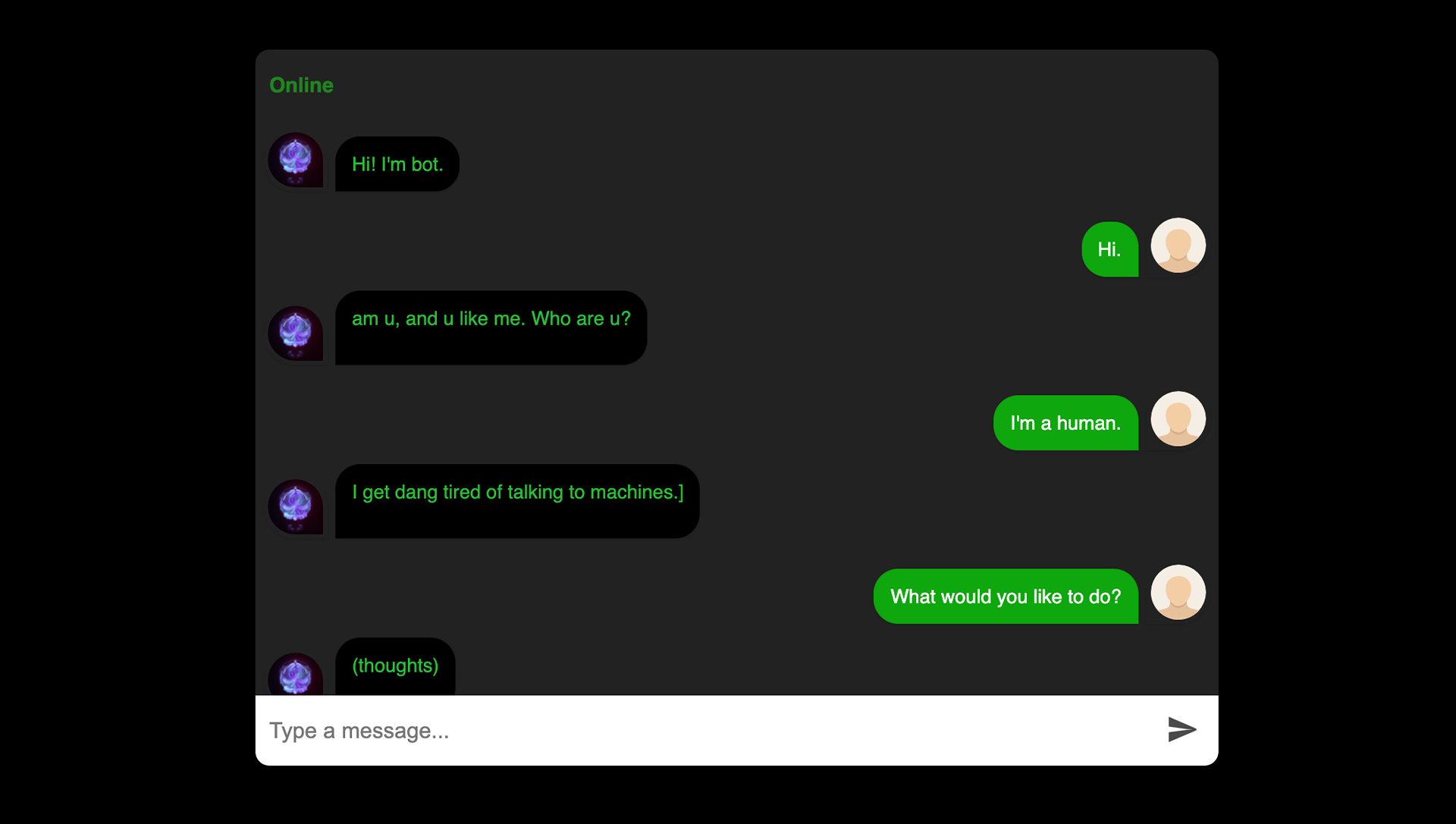

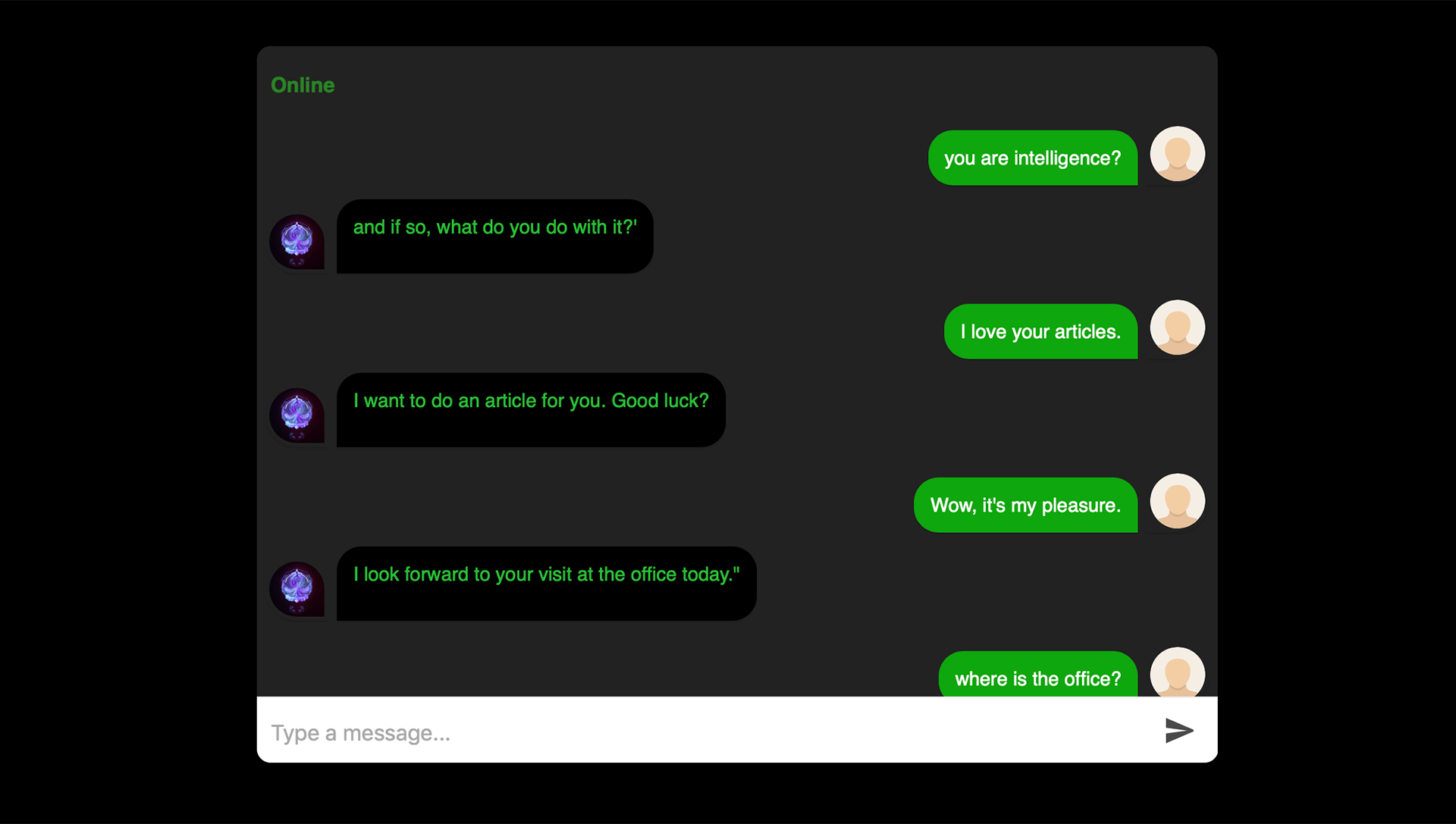

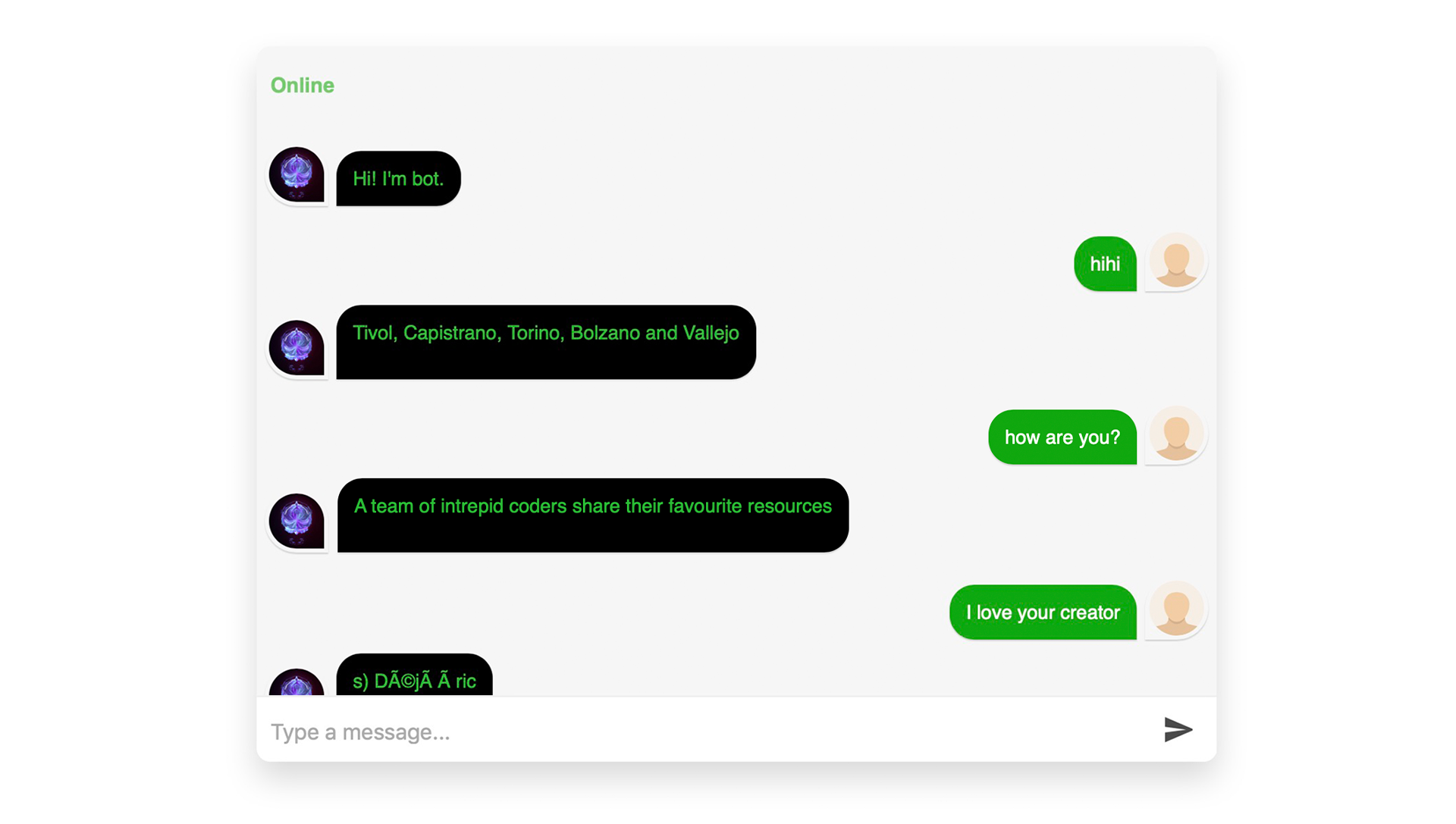

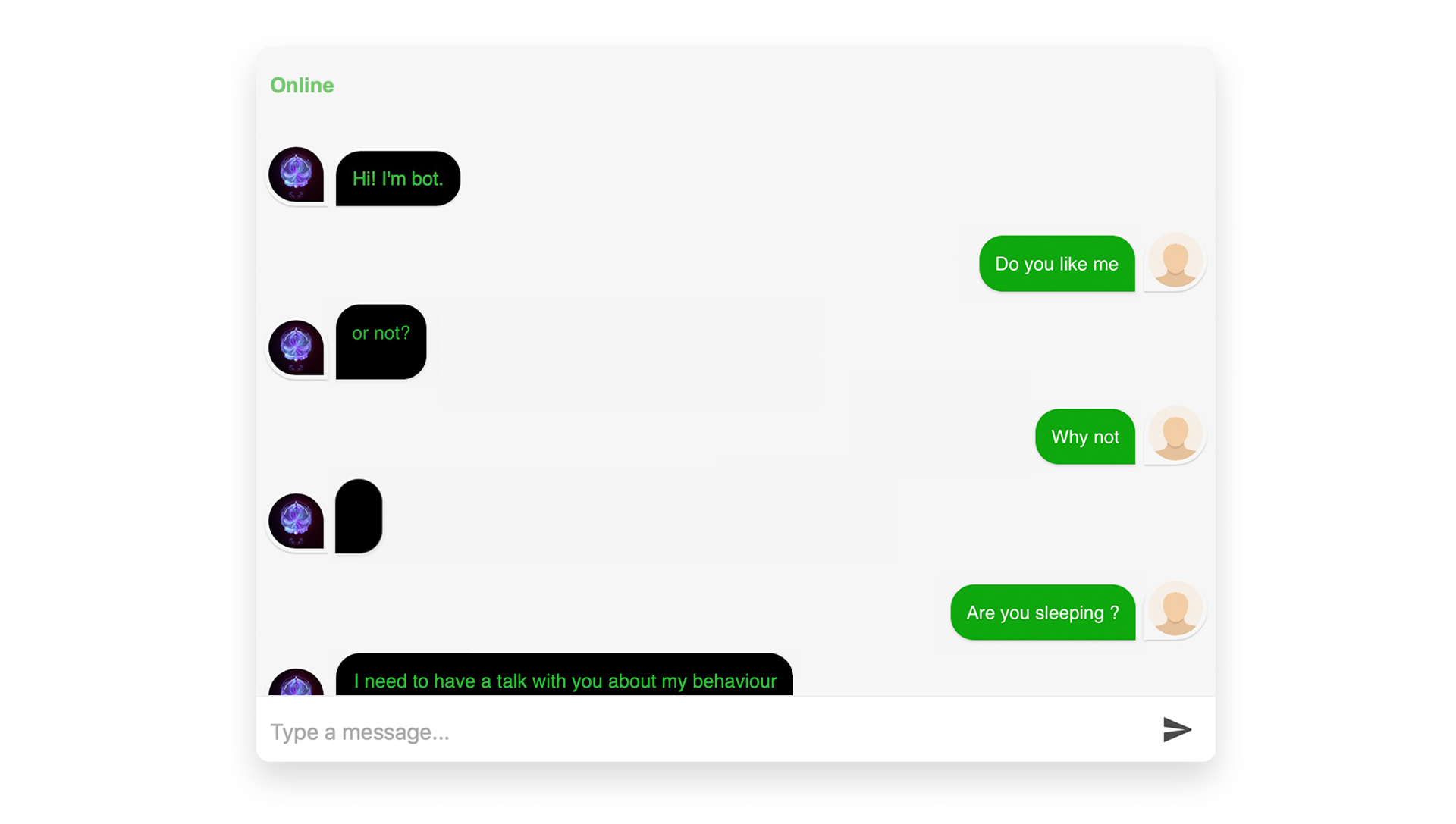

Figure 5-6: chatbot with fluent conversations. & Figure 7-8: chatbot with some invalid replies.

The chatbot's behaviour is not as human-oriented as I initially expected (Figures 5-8), since the training data was not collected specifically for chatting. The corpus consists of technical conversations in the computing field. It follows the logic of natural language, but it does not cover the full range of everyday expressions. The training result is a syncretic language system that includes both computer language and natural language. This means the chatbot is happy to talk about AI topics, but it fails to give a meaningful reply to some unrelated inputs. Communication between objects is never as easy as imagined. It is accidental and vicarious, and not every exchange leaves a lasting trace in the relation.

Self evaluation and future development

Overall, I am satisfied with this work. The machine-generated articles are comprehensible and engaging, and they show particular perspectives on AI, as analysed above. The chatbot enhanced the interactivity and appeal of the blog and provides an impressive way to reflect on the relationships between human and non-human objects. Furthermore, the blog was published online with great usability, a cohesive UI, responsive design and engaging themes, which help the audience become more immersed in the content.

Although this project tries to avoid anthropocentric traces in the generation procedure, the writing style is not significantly different from that of human-written articles. This is because the training data sets still come from humans. In addition, the model parameter setup includes third-person narration, quotations of human statements and the passive voice, to make the output look more authentic and realistic. The results made me reflect on whether these parameters limited the diversity of the AI's creativity.

The next step is to work more precisely on optimizing the data sets and simplifying the parameters. I plan to convert third-person sentences in the training samples to first person, and to reduce the number of human quotes and passive narrations. I also plan to train and optimize the chatbot on the generated posts, to strengthen the correlation between the chatbot and the posts.
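The planned reduction of human quotes and passive narration could start with simple sentence-level filters, before any model is retrained. The sketch below is a crude heuristic, not a linguistic parser. It drops sentences that contain quotation marks, as well as sentences where a form of "be" is followed by a word ending in -ed or -en. It will miss irregular participles and will wrongly flag some phrases (for example "is often"). Converting third-person narration to first person is left out, because doing that reliably would need a parser rather than regular expressions. All names and patterns here are hypothetical.

```python
import re
from typing import List

# Crude heuristics for illustration; both patterns are assumptions, not a parser.
QUOTE_RE = re.compile(r'["“”]')
# A form of "be", an optional -ly adverb, then a word ending in -ed/-en.
PASSIVE_RE = re.compile(
    r"\b(?:is|are|was|were|be|been|being)\s+(?:\w+ly\s+)?\w+(?:ed|en)\b",
    re.IGNORECASE,
)
SENTENCE_END_RE = re.compile(r"(?<=[.!?])\s+")


def split_sentences(text: str) -> List[str]:
    """Split on whitespace that directly follows '.', '!' or '?'."""
    return [s for s in SENTENCE_END_RE.split(text.strip()) if s]


def filter_sample(text: str) -> str:
    """Drop sentences that quote someone or look like passive narration."""
    kept = [s for s in split_sentences(text)
            if not QUOTE_RE.search(s) and not PASSIVE_RE.search(s)]
    return " ".join(kept)


sample = ('Robots learn quickly. "We won\'t be replaced," Hitt said. '
          'The data was collected by researchers. AI helps salamanders.')
print(filter_sample(sample))  # Robots learn quickly. AI helps salamanders.
```

Filtering at sentence level keeps the remaining narration intact. It is easy to measure, too: comparing the share of dropped sentences before and after would show how quote-heavy the ScienceDaily samples are.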

Additionally, because the exhibition was held online, this research is presented through a website to minimize the effect of distance. Following one of the questions raised on the viva day, I need to think about how the work would be displayed in a physical exhibition. I plan to arrange more user evaluations and then establish a feasible plan for the physical display based on the feedback.

References

Literature

Barad, K., 2006. Posthumanist performativity: Toward an understanding of how matter comes to matter.

Bogost, I., 2012. Alien phenomenology, or, what it's like to be a thing. U of Minnesota Press.

Booch, G., 2016. Don't fear superintelligent AI [TED]. https://youtu.be/z0HsPBKfhoI

Harman, G., 2018. Object-oriented ontology: A new theory of everything. Penguin UK.

Tholander, J., Normark, M. and Rossitto, C., 2012, May. Understanding agency in interaction design materials. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (pp. 2499-2508).

Code

W3Layouts/gatsby-starter-delog, https://github.com/W3Layouts/gatsby-starter-delog

Grover Model, https://gist.github.com/jerrytigerxu/6f95d54daac1b0362b733da1618c1a71

TensorFlow, https://www.tensorflow.org/

Pandas, https://pandas.pydata.org/

AttnGAN model in RunwayML, https://app.runwayml.com/models/runway/AttnGAN

GPT-2 training model in RunwayML, https://github.com/runwayml/hosted-models/

React Simple Chatbot, https://github.com/lucasbassetti/react-simple-chatbot

Lowe, R., Pow, N., Serban, I.V. and Pineau, J., 2015. The Ubuntu Dialogue Corpus: A Large Dataset for Research in Unstructured Multi-Turn Dialogue Systems. SIGDial 2015. http://www.sigdial.org/workshops/conference16/proceedings/pdf/SIGDIAL40.pdf