In Absentia

In Absentia is an immersive audio-visual performance.

produced by: Benjamin Sammon

Introduction

In Absentia is an exploration of both generative and improvised music/visuals using the idea of shared agency.

Concept and background research

In this project I wanted to create a process in which I would share agency with the computer. Using elements of human improvisation and generative programming, I went about curating a song which would become part of an audio-visual experience.

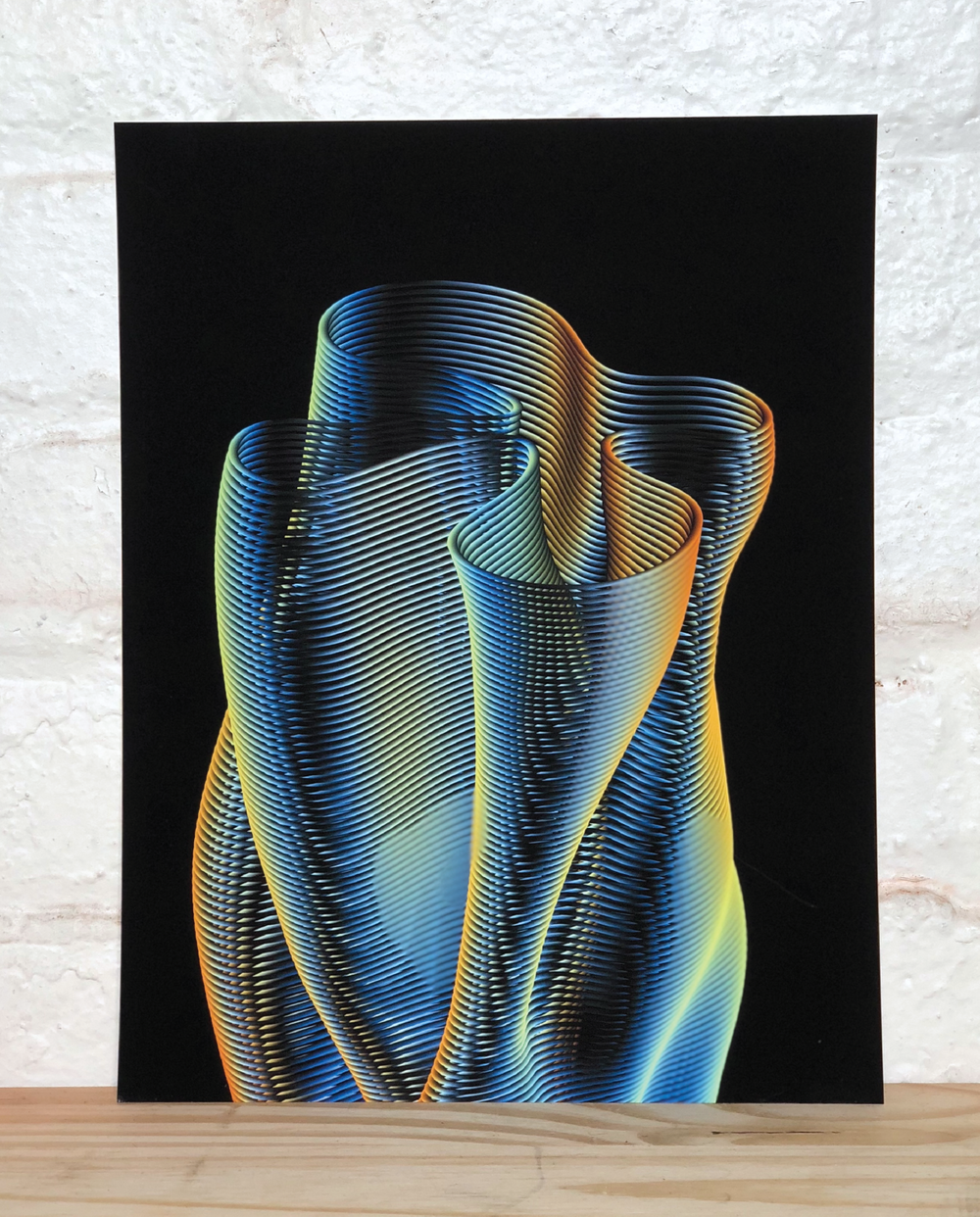

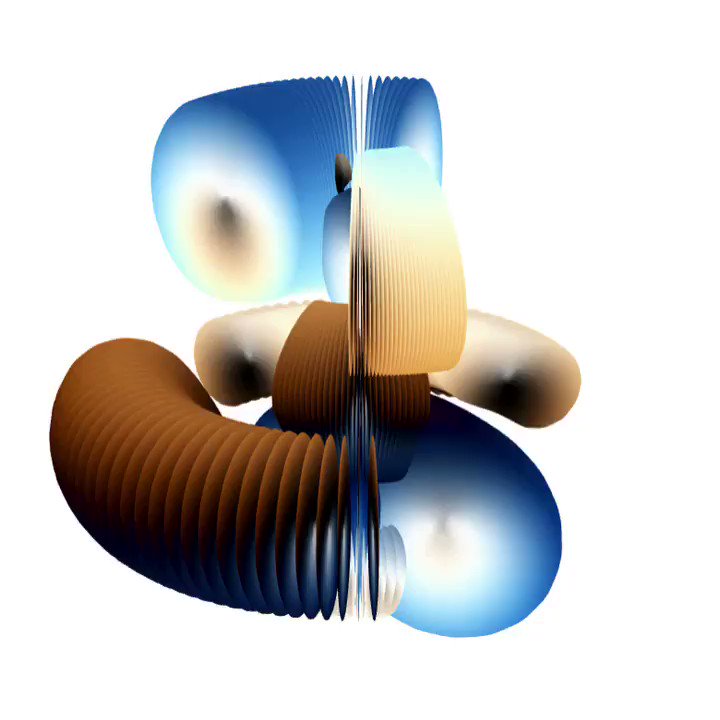

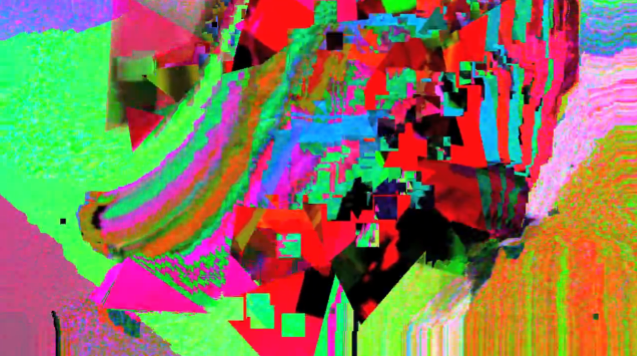

For the visuals I was heavily influenced by Zach Lieberman’s work with gradients, which creates textures reminiscent of iridescent patterns. I was also influenced by Andrew Benson’s work with Jitter. His maximalist glitch video paintings are visually and technically brilliant, and the process he explains in the Jitter Recipe Books was really helpful for learning Jitter.

For the music I was inspired by electronic music producers such as Autechre and Aphex Twin who use algorithms and generative elements to make their music. I am fascinated by music containing generative elements and how the artist works with the computer as almost a member of the band. It is also very interesting to me how the curation process works in making this style of music.

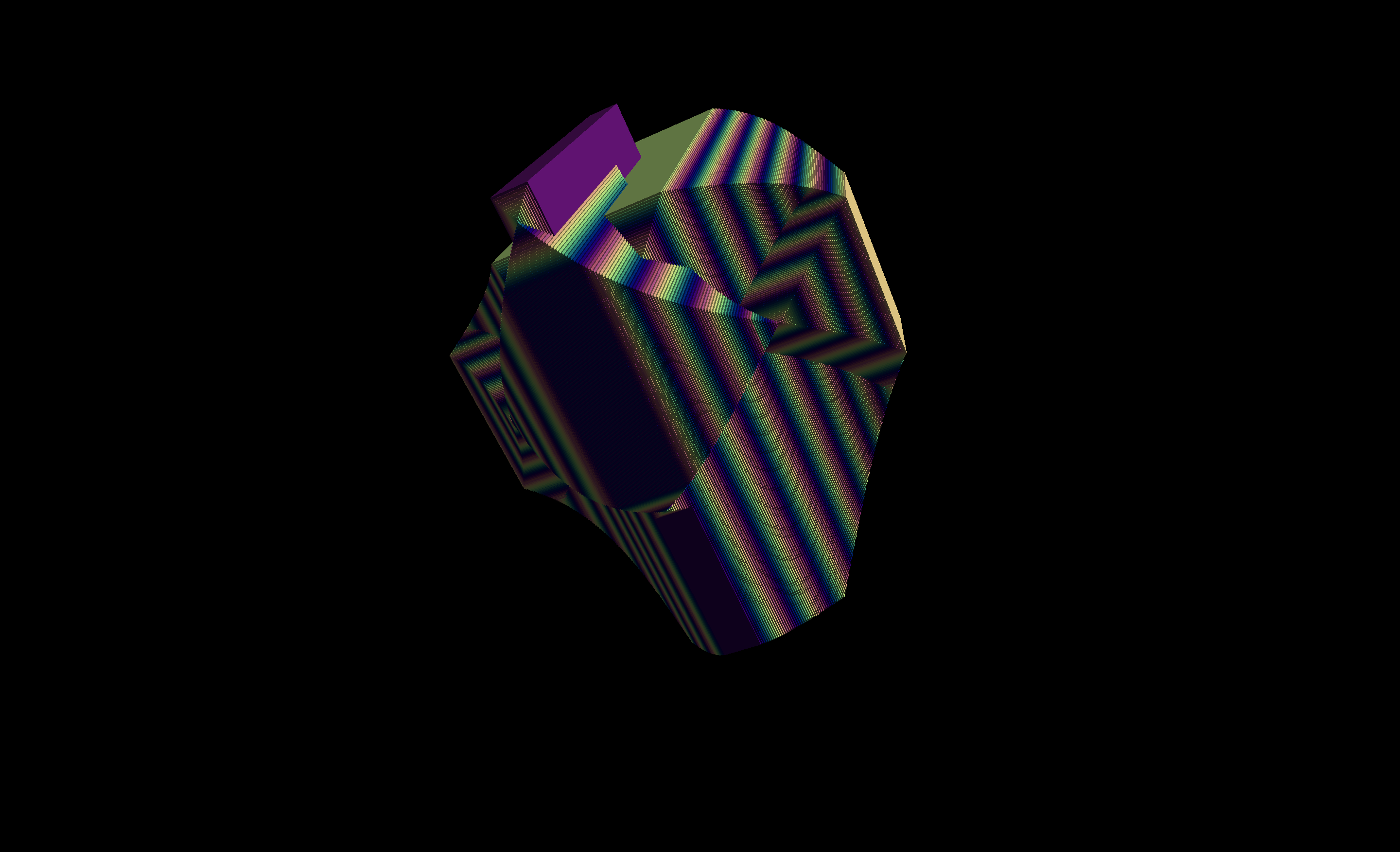

Below are some inspiration images from Zach Lieberman and Andrew Benson:

Technical

I used Max/MSP and Logic Pro for my audio. This involved programming drum patterns and synth lines as well as generative processing of various audio channels. I also used Jitter to create the visual elements, which I then mapped to a MIDI controller to play live in real time. I liked the juxtaposition of generative elements in the music and live improvisation of the visuals. It’s almost as if the computer was able to improvise a certain amount of the process along with my own improvisation. An example of how this works can be heard in the music: I created a hi-hat loop, fed it into an algorithm and then curated the outcome, only allowing hi-hat ‘glitches’ that I deemed interesting to be part of the song. This worked well because I had the inclusion of seemingly random elements and computational glitches, but I still had the final say on what made it into the song, so I could keep it musically pleasing.
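The generate-then-curate loop described above can be sketched in Python. This is not the Max/MSP patch used for the piece: the 16-step pattern, the `glitch` rule and the `sounds_interesting` filter are all hypothetical stand-ins, with the filter standing in for the listening and choosing that was done by ear.

```python
import random


def glitch(pattern, rng, prob=0.3):
    """Return a copy of a step pattern with some steps silenced or filled in."""
    out = []
    for step in pattern:
        r = rng.random()
        if r < prob / 2:
            out.append(0)      # drop the hit on this step
        elif r < prob:
            out.append(1)      # force a hit on this step
        else:
            out.append(step)   # leave the step unchanged
    return out


def generate_candidates(pattern, count, seed=0):
    """The 'computer' side: produce several glitched variations of one loop."""
    rng = random.Random(seed)  # seeded so the same candidates can be recalled
    return [glitch(pattern, rng) for _ in range(count)]


def curate(candidates, keep):
    """The 'human' side: keep only the variations that pass the given judgement."""
    return [c for c in candidates if keep(c)]


# Hypothetical 16-step hi-hat loop: a hit on every other step.
hat_loop = [1, 0, 1, 0] * 4
candidates = generate_candidates(hat_loop, count=8)


def sounds_interesting(candidate):
    # Placeholder for listening by ear: must differ from the original
    # loop but still contain enough hits to read as a hi-hat part.
    return candidate != hat_loop and sum(candidate) >= 6


chosen = curate(candidates, sounds_interesting)
```

The point of the split is that the random process only proposes material, while the `keep` function, whatever form it takes, has the final say.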

For the visuals I used Jitter. I was originally using a combination of Jitter, openFrameworks and Processing, but it became apparent that I had overcomplicated the process and there was little cohesion between the different elements. After some discussions with my peers, I concluded that the visuals I created in Jitter would work best for the performance on their own.

Using Jitter was really effective and lent itself well to creating a set of visuals that I could perform live. For this project I used a lot of functions from the jit.mo package, which creates 3D moving objects using jit.gl.gridshape. I used different waveforms to determine the position, scale, rotation and colour of the visual. The jit.mo.function works using ‘speed’ inputs, which determine how quickly the function fluctuates. Colour is a good example: if the speed is at 2000 the shape will slowly change between colours, whereas if the speed is at 3 it will travel through the colours extremely quickly, creating a stripy gradient effect.
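To illustrate how a ‘speed’ value can shape the colour movement, here is a minimal Python sketch. It assumes that speed behaves like a period (the number of frames taken to complete one cycle of the waveform), which matches the behaviour described above but is not taken from the jit.mo documentation. The names `ramp`, `sine`, `colour_at` and `hue_step` are hypothetical.

```python
import colorsys
import math


def ramp(t, speed):
    """Sawtooth in [0, 1): one full cycle every `speed` frames (assumed meaning)."""
    return (t / speed) % 1.0


def sine(t, speed):
    """Sine wave rescaled to [0, 1], with the same assumed period."""
    return 0.5 + 0.5 * math.sin(2 * math.pi * t / speed)


def colour_at(t, speed):
    """Map the ramp to a hue and return an (r, g, b) tuple of floats in [0, 1]."""
    hue = ramp(t, speed)
    return colorsys.hsv_to_rgb(hue, 1.0, 1.0)


def hue_step(speed):
    """How far the hue moves between frame 0 and frame 1."""
    return ramp(1, speed) - ramp(0, speed)


slow = hue_step(2000)  # 1/2000 of the colour wheel per frame: a gradual drift
fast = hue_step(3)     # a third of the colour wheel per frame: hard jumps
```

Under this assumption, a large value moves the hue almost imperceptibly from frame to frame, while a very small value jumps between distant hues on consecutive frames, which would read as stripes rather than a smooth fade.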

After I completed the project, I spent two weeks with fellow performers setting up the immersive projection. This involved mapping our visuals to a five-projector screen setup using MadMapper and mixing the sound correctly for the space. We also took it in turns to practise our sets on a daily basis. This was paramount as I wanted to make sure that my visuals would be effective on this large scale. I think that tailoring my visuals to this setup was the key to making the piece an engaging and immersive experience.

Future development

I would like to further develop this project into a 30-minute performance that I would be able to perform live at gigs. I would probably include more improvised musical elements to give the performance a more spontaneous feel, and vary the visuals throughout the set. I would also like to build my own instruments and MIDI controllers using Arduinos to make the music more performative. This would make the experience more engaging for the audience, as they could physically see me play the music live.

Self evaluation

Overall, I was happy with my final project. I think the visuals complemented the music and created an immersive environment for people to enjoy. I also enjoyed the production: setting up the projectors, mixing the music to fit the 12-speaker sound system and organising the event all went particularly well.

During this project I had various issues which caused me to tailor my project in a certain way. I was initially going to use a lot of audio-reactive elements, but memory allocation issues lowered the frame rate of my visuals to below acceptable standards. This was initially a negative, but I am glad I decided to perform the visuals live as it gave me a lot more to do in terms of a performance. I also wanted to use multiple platforms and had written code in both openFrameworks and Processing, but this was left out of the project as I thought the different parts wouldn’t fit together cohesively. I spent a lot of time trying to make these parts work when I should have just focused on what was working in the project. However, I plan on using these elements in future projects.

I think in future I will focus on a substantive idea and work more with themes. For this project I was more focused on aesthetics but in future I would like to work on more of a conceptual theme.

Also, in future I would like to work more on memory allocation and learn how to properly use the audio-reactive functionality of Max/MSP. I previously worked on a project using Max with OSC for audio-reactive visuals, so I may revisit that in future projects.

I initially set out to create an immersive audio-visual experience using curated computational and improvised elements. I think I succeeded in this goal and thoroughly enjoyed the process.

References

- https://cycling74.com/forums/andrew-benson-jitter-recipe-book-123

- https://www.youtube.com/user/dude837

- https://www.youtube.com/user/MrNedRush