How Do I Remember Thee

A Triptych Video Installation about memory preservation and recollections against the influx of current media news. It deploys AI technologies and deep fake characters that recite poetry.

produced by: Daniah Alsaleh

Introduction

The installation explores the process and mechanism of memory making in the presence of media influences. How the constant flux of images with the different practices of representation in media, impacts, interferes, and intrudes with memory preservation in particular within the context of cultural identity.

“How do I remember Thee” is influenced by the framework of cultural theorist Stuart Hall’s philosophy of coding and decoding discourse, and by the French theorist Jean Baudrillard views of modernity and postmodernity with regards to his notion of hyperreality. The piece situates itself as a commentary on how the archival memories of the past is being slowly distorted by images in contemporary political culture informed along different modes of media by employing fourteen generated deepfakes protagonists that recite poetry as narrators. The installation consists of Three screens that uses Three different types of AI generative models:

Screen 1: StyleGAN2 model which has been trained on a unique dataset and generates deep fakes (Nvidia)

Screen 2: Deep fakes manipulated by First Order_motion_model (Siarohin Et al)

Screen 3: Contemporary Images manipulated by sinGAN model (Shaham Et al)

View of full video Artwork here: https://vimeo.com/462443240/26e8bfed55

Or Press Play Below

Concept and background research

The inspiration behind the project was instigated by my own reflections on comparing images broadcasted on media outlets alongside images stored in the collective memory. The politics of image creation and manipulation in contemporary culture obfuscates the clarity of a memory, and the memory itself is left battered, confused and muddled with undistinguishable features that threatens the safeguarding of authentic witnessing.

The first part of the work is based on the theoretical framework of Stuart Hall’s “Encoding and Decoding in the Television Discourse”. Hall critically examines mass media as central to the audience’s culture and how the encoded and decoded messages are interpellated between the audience and the receiver in ways that plays into reconfiguring cultural identity.

The importance of Archiving during decades of conflict is so critical to memory preservation in particular when faced with media news. The Arab image foundation was founded by the Lebanese artist Akram Zaatari as an intervention against the lack of photographic archives in the region. Within the rapid disappearance of the few images that remained during the turbulence wars and unrest during the 20th century, the collections reveal a large aspect of social histories of the Arab world including photographs that citizens have constructed of themselves since the creation of photography. The collection has amassed around 600,000 images and makes it accessible with an online image database for artistic creation and exploration that confronts the complexities of social and political realities of the past and present.

The art of forgetting and remembering is a subject that has been investigated by many artists within their practices exploring chunks of hidden histories that nudges their discourse into ways to reconstruct and make visible these obscured areas to the world, as objects of witness. Doris Salcedo’s work on collective memory and suffering in the context of war is powerful, in particular her work Palimpsest that deals with the migrant crises of Europe. In this installation, with temporal and intermitted droplets of water appearing on floor slabs of stone, she applies the act of naming 300 anonymous victims who have died trying to cross the Mediterranean Sea fleeing from wars in Africa and the middle east. The installation carves out a constant state of engraving and erasure that transforms the space into an active memorial.

Memory aliveness continues with Paula Rego’s representational paintings in her “Maps of memory: National and sexual politics” body of works that delve in Portuguese history and nostalgia from a feminist prospective. Her work dips into her unconscious resources entangled with personal references and makes them eternally visible on canvases portraying visual narratives of passed histories.

The second part of the project situates itself with Jean Baudrillard’s theory of Simulacra and Simulacrum where he examines the relationship between reality, symbols and society, specifically where it relates to significations and symbolism of culture, media, and the construction of shared existence. In Baudrillard’s simulacra, there are four stages of representation: It is a reflection of reality, it masks reality, it masks the absence of reality, and finally, it has no relation to any reality whatsoever where it becomes pure simulacrum. This forth stage, a simulated sense of reality, surpasses the real and society begins to produce images of images, copies of copies where at this point, in the postmodern condition, the original has been removed entirely and is replaced by the hyperreal, a pure simulacrum.

Baudrillard’s hyperreality can be felt within the proliferation of deepfake technology that has and still continues to have implication in distinguishing between what is real and what is fake, as well as becoming more challenging to understand truthiness from falsehood within the emergence of hyper realistic deepfakes that utilizes artificial intelligence. Fictious content and convincing fabricated manipulation of videos can be spread quickly by malevolent actors through the different modes of media where it could lead to many social and political consequences that could inform certain biases, decision making, and manipulation of opinions.

With the harmful side of Deepfakes, they still do have a positive employment that has been engaged in many industries such as advertisement, entertainment, games and various fields in social, medical and e-commerce. The most prevalent use is in the movie industry, whether using it as a framework such in the Matrix and Blade Runner, or whether reincarnating dead actors or utilizing it in special effects and alternating the appearance and voices of individuals. Carrie fisher, who passed away in 2016 and was known for her role as Leia in the Star Wars series, was brought back to life in the 2019 film “Rise of Skywalker” and which she had a powerful presence in where the audience had a strong response to . In the realm of arts and culture, a deepfake of Dali was created at the Dali Museum in Florida, where the Catalan artist was brought back to life by pulling thousands of frames from old video interviews and processing them through 1,000 hours of machine learning training to be able to overlay the source onto an actor’s face .

Turner Prize-winning British artist Gillian Wearing used a deepfake video to create a new extension of her work, as part of the Cincinnati Art Museum exhibition “Life: Gillian Wearing”, Where she explores tensions between public and private life, identity, self-revelation and contemporary media culture.

Although deepfakes have been used incisively in memes, parodies, and satirical scripts for entertainment, what happens when a deepfake cannot be distinguished from the real and thus becomes as credible as an authentic truth? Miquela is an influencer and a social justice activist with millions of followers on Instagram and TikTok, and despite the uncanny valley Miquela lives within, many of her fans believed for two years that she was a 19 year old flesh-and-blood character until her provenance was disclosed by her creators that she is in actual fact, a pixelated creation of a deep fake CGI model, a pure digital fabrication of which was perceived through a hyper mediated world as a very real character.

When the distinction becomes blurred between juxtaposition real world events with an imitational digital realm, and when society replaces reality and its meaning with symbols and signs, the human experience here becomes a simulation of reality and not reality in its objective form. A created copy of reality that is so artificial that it becomes un-linked to anything real, where the audience prefer and obsess over this simulation more than the real materiality of object and things.

Taking the hyperreal as a point of departure for my own explorations and applying first motion order model on the fake image that styleGAN2 synthesized, the resulting artifice were absolute simulacra and has no relations whatsoever to anything real that are represented in the fourteen deepfake protagonist in the installation.

Technical

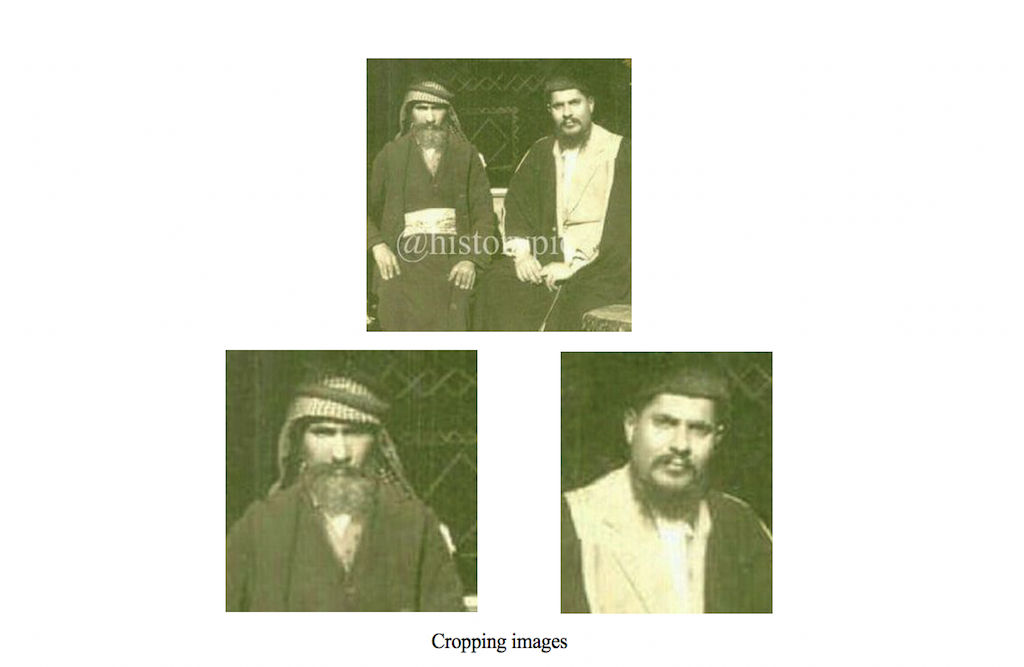

First part: To proceed with the inquiry of what the collective memory is, and to express what I wanted to make within the idea of memory preservation, I had to start collecting data, specifically data that relates to pictures of Iraq between the thirties and sixties. To start of preparing my datasets, I used a scraping python program to scrape images from Instagram and google. The result was an accumulation of more than 8000 images that needed intensive cleaning and organizing. The duplicates had to be deleted, landscapes had to be discarded and uniform sizing had to be applied to all. With that I was left with a much smaller dataset that I had to hack in a way that produced at least 1000 samples. One way in which I had to enrich my dataset was to choose images of groups, zoom in and crop the headshots individually, and add that to the dataset.

For this part of the project, I have used 2 types of GAN architecture: SRF BN super resolution and StyleGAN 2. The SRF training aided in addressing the artifacts that were on the images: debris, discoloration, resolution. With regards to StyleGAN 2, it consists of two networks, the generator and the discriminator, and between them they synthesize realistic image that are indistinguishable from authentic images by learning and training from a dataset. Although this particular GAN can produce a “Fake Image”, that was not my aim here for the video playing on the first screen, what I was interested in was the latent space the GAN produced while training. The latent space is the area where the two networks, the generator and discriminator test each other, a sort of wrestling ring where the former is trying to pass the later a convincing image. I see it as the memory dumping well of all the failed attempts to create that realistic crisp image which tricks the discriminator in believing it is true.

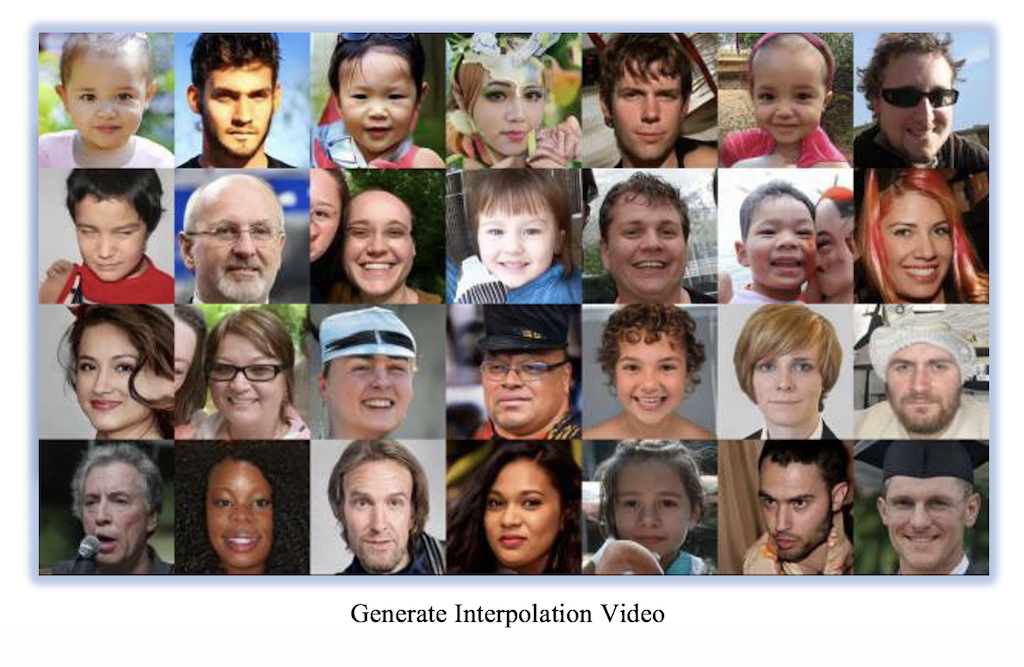

Using google Colab to execute the code, the training with StyleGan 2 initially took 41 hours with 27,000 steps dispersed among many many training sessions. As StyleGan 2 works in a 512-dimensional array, the latent walk is the process of a virtual walk between a vertex and another in this space, hence a video was created to interpolate between one point to the next in this multi dimension area. The result was a form of expression that resonated with my own subjective position on the erosion of memory and identity confronted by the encoded messages within images in media.

Second Part: Departing from the styleGAN2 model experiment in the first project, I continued to train this further as I was searching for more clearer fake images. The training continued till it reached towards the 100,000 step mark. After many experimentations, a selection of head shots were chosen to represent the hyperreal. These were surreal to say the least, with the influence of battered black and white effects, they seemed as though they were vintage pictures from an old lost photo album in an antique shop, but the truth is, they are a fabrication of a machine learning architecture.

The next step was to take these images and train them with a First motion Order model, a type of GAN that creates image animation from 2 inputs: a source still image and a driving video. The input image was taking one image of the selected portraits, and the video was a recording of myself talking with an exaggerated face gesture. The resulting output combines the appearance extracted from the source image with the motion derived from the driving video. This model generates a motion video with no sound hence to add the voice effect, this needs to go through post processing to add a layer of the original voice via a chosen video software. The spoken word in the video is a piece of poetry that I wrote borrowing from classical poetry about memory and computer science lexicons.

Third Part: The third part of the installation used sinGAN, an unconditional generative model that can learn from just a single input image. Then it generates a high quality, diverse samples of animation that carry the same visual content as the image.

The Data set here was only a handful, carefully selected photos from current media which relates to civil unrest, conflict and destruction regarding the demographics in Iraq.

Future development

The time and effort that went into this project whether from its theoretical framework or the technological expertise that one has to learn was a journey full of trials and errors. I am very proud of the work I have achieved and took on board all the feedback I received. The best reaction about the work was when a few of the visitors and the online audience were emotionally engaged and actually believed that the deepfakes were real people and questioned me about them. The reaction when they were told that these characters, were in fact fakes, was shock. This is living proof of Baudrillard’s hyperreality theory which I applied to be part of the piece.

As a point of departure from my project, I want to investigate further the framework of what memory is combined with the expressive form GANs can produce by investigating and exploring my reflective process. This experiment showed me that the incredible advancements in AI needs more critical investigation, although the development with these technologies are fairly new to the world when compared historically with other technologies, yet there is space for urgent evaluation and a critical discourse to question what will the long term effects of such developments be on culture and the total fabric of society.

Self evaluation

Artists who are using GANs, usually are interested in how GANs can create art. Looking now at Mario Klingemann work with GANs as an example, in particular his installation “Memories of Passers-by I”, it is all about the aesthetic principle, the classical unique portrait. I was more inspired by an AI exhibition at Goldsmiths “The CreativeMachine2” in 2018. But with this experiment in “How Do I Remember Thee“, I found myself using GANs not as a technological scientific research method, nor to create Francis Bacon-like aesthetics, but instead it is used as a tool for self-expression of a concept that helped me in translating the notion of memory making and safeguarding memory storage. The resulting video artifact was an interpretation of the erosion of memories subjected against the constant bombardment of stitched ideas in broadcasted images, that gives rise to stereotyping and causes the reframing of mental visual accounts, and the encoded messages in media.

The process of working on this project was faced with many challenges, in particular with regards to the dataset. Although I tried to mix a variety of data in the set, I definitely did not include different types of data due to the fact that I had limited data albeit it consists of 8000 plus images, that is still considered a small data sample. Another factor to the set is that by unconsciously ignoring other data, I might have caused an algorithmic bias in the GANs outcome that of which I am not aware of. Furthermore, a considerable amount of time took in cleaning up the images, this was a long tedious task that pushed me in looking into digital labor and the hidden works that one is not aware of.

In addition, not owning a powerful GPU made this work very challenging. Due to this I had to use google Colabs while working on this project, and the restrictions with Colab are frustrating. Google Colab only allows you ten hours a day to use their GPU, where I had to be next to the training proving that I did not leave it overnight by actively pressing on messages Colab was sending me, otherwise they will delete all the training that was accomplished and I had to restart everything again. Towards the end of the training, I decided to move all my pickle files to Runway, although it had more powerful GPUs and was not free, at least I felt safe leaving the machine train my data without the need for me to be there.

Moving forward I would like in the future to own my own GPU, and have time and patience to work and invest in creating my own datasets with more clarity and better pixilation to improve on the quality of any work that might arrive from that.

References

Code:

[1] @InProceedings{Siarohin_2019_NeurIPS,author={Siarohin, Aliaksandr and Lathuilière, Stéphane and Tulyakov, Sergey and Ricci, Elisa and Sebe, Nicu},title={First Order Motion Model for Image Animation},booktitle = {Conference on Neural Information Processing Systems (NeurIPS)}, month = {December},year = {2019} }

[2] @inproceedings {Karras2019stylegan2,title = {Analysing and Improving the Image Quality of {StyleGAN}},author = {Tero Karras and Samuli Laine and Miika Aittala and Janne Hellsten and Jaakko Lehtinen and Timo Aila},booktitle = {Proc. CVPR}, year = {2020}}

[3] Wenming Yang, Xuechen Zhang, Yapeng Tian, Wei Wang, Jing-Hao Xue. Deep Learning for Single Image Super-Resolution: A Brief Review. TMM2019. [https://arxiv.org/pdf/1808.03344.pdf]

[4] 2020. "Dvschultz/Dataset-Tools". GitHub. https://github.com/dvschultz/dataset- tools.

Other References:

[5] Baudrillard, Jean, and Sheila Faria Glaser. Simulacra and Simulation. Ann Arbor: University of Michigan Press, 1994.

[6] Hall, Stuart, University of Birmingham. Centre for Contemporary Cultural Studies, and Council of Europe. Encoding and Decoding in the Television Discourse. Birmingham: Centre for Contemporary Cultural Studies, University of Birmingham, 1973.

[7] Batatu, H., 1978. The Old Social Classes And The Revolutionary Movements Of Iraq. Princeton, N.J.: Princeton University Press.

[8] Mitchell, W., 2010. What Do Pictures Want?. Chicago: University of Chicago Press.

[9] BBC News. 2020. Star Wars: How We Brought Carrie Fisher Back. [online] Available at:

[10] The Dali Museum, 2020. Behind The Scenes: Dali Lives. Available at:

[11] Cincinnati Art Museum. 2018. Cincinnati Art Museum: Life: Gillian Wearing. [online] Available at:

[12] Instagram.com. n.d. Login • Instagram. [online] Available at:

[13] Brud.fyi. n.d. [online] Available at:

[14] Lisboa, Maria Manuel. 2003. Paula Rego's Map Of Memory. Ashgate Publishing Limited

[15] Zaatari, Akram. 2020. "The Arab Image Foundation". Arabimagefoundation.Com.http://www.arabimagefoundation.com.

[16] "Mario Klingemann MEMORIES OF PASSERSBY I". 2020. Medium. https://medium.com/dipchain/mario-klingemann-memories-of-passersby-i-c73f72675743.

[17] "Homepage | Francis Bacon". 2020. Francis-Bacon.Com. https://www.francis-bacon.com.

[18] cube, White. 2020. "White Cube - Gallery Exhibitions". Whitecube.Com. https://whitecube.com/exhibitions/exhibition/doris_salcedo_bermondsey_2018.

[19] CYLAND. 2020. The Creative Machine 2 Exhibition By Goldsmiths, University Of London And CYLAND Opens In November - CYLAND. [online] Available at:

Creditation: Parts of Documentation Video was filmed by Rubert Earl https://www.instagram.com/visuals_ru/