Gloow

produced by: George Linfield

Introduction

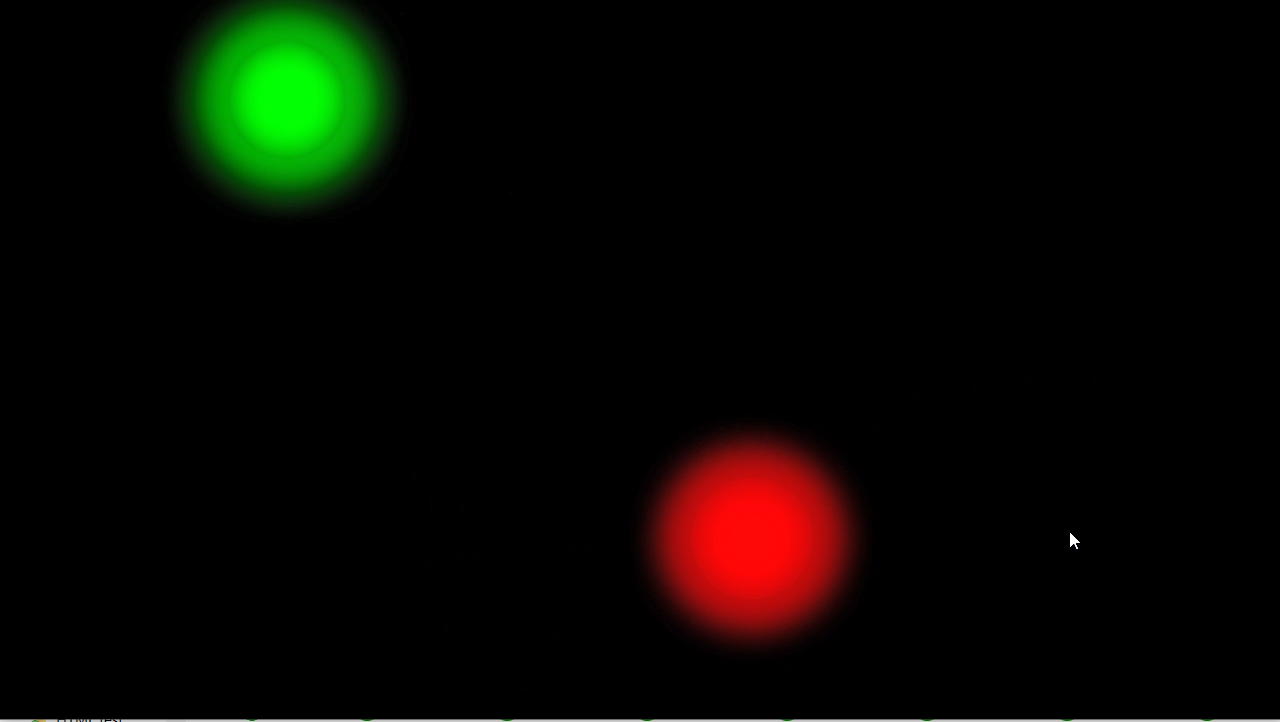

Gloow (pronounced “glu”) is an arcade game controlled through physical movement. The game uses computer vision to detect two coloured paddles, which the player must move to steer a set of orbs and an interconnecting wire between obstacles. The game is a modern re-imagining of the classic ‘wire loop’ puzzles, a tricky test of players’ hand-eye coordination, and is as much fun to watch as it is to play!

Initial Concept

When I saw that the brief required only a single input, I knew that computer vision was what I wanted to work with. I quickly settled on using different coloured paddles as physical controllers, and decided to design a game within the constraints of this control scheme.

My initial game idea was a two-player competitive shooter similar to Geometry Wars. One player controls an orb, and the other a turret trying to shoot the orb. Each is controlled by an individual paddle. Player One must dodge their orb around the screen for as long as possible without being hit. Player Two’s turret is locked to the edges of the screen, but they can move it all the way around the window’s perimeter, meaning they can tactically lay down a stream of bullets for Player One to dodge.

However, after setting up the controllers and playtesting a basic iteration of this game, I quickly realised there was a problem: it just wasn’t fun to play. Due to the CPU limitations of running computer vision (maybe my laptop just wasn’t up to the task) I was unable to spawn bullets fast enough to really challenge Player One. It was also very easy to dodge around the screen as the orb’s position was mapped to the position of the paddle – and it was possible for Player One to move this very quickly. Player Two could only move around the edges of the screen, and their shooting speed was fixed. It was incredibly unfair.

I felt I had taken my initial idea as far as I could, so I went back to the drawing board.

Iterated Concept

The one satisfying thing about my first prototype was the physical control scheme. The way users interacted with the game reminded me of the old wire loop games, in which players had to guide a small metal loop around a complicated route of metal wire without the two metal objects touching.

I thought about whether it was possible to bring my gameplay experience closer to the level of balance and control required in the wire loop games. I wanted to lean into the physical aspect of the control scheme. I wanted my game to cause players to move with their controllers, as if they were balancing objects on their hands.

I took the most satisfying part of the first prototype – Player One’s movement – and applied this to both players. I now had two orbs moving around the screen, mapped to two different paddles. I then spawned a continuous stream of objects from the right of the screen, which both players would have to guide their orbs around. However, this was still far too easy for players to navigate, and also didn’t produce the kind of physicality I was looking for.

I then struck upon the idea of linking the two orbs by a wire, with the goal being to move the wire through the oncoming barrage of obstacles. The wire would stretch and slacken between the orbs, so it was possible to bring the orbs close together to shield the wire, and then stretch them apart to collect bonus objects. It was very similar to playing an on-screen accordion, and immediately made the game both more challenging and more fun. Crucially, it also increased the embodiment of the control scheme: suddenly players had something to balance between the paddles. It was as if they were connected to the screen.

Technical – Computer Vision

I used the OpenCV addon to run computer vision in this project. This ran fine on my built-in webcam, as well as other USB webcams.

When first building the tracking system, I used the colour red (as red is one of the sides of the ping pong paddle).

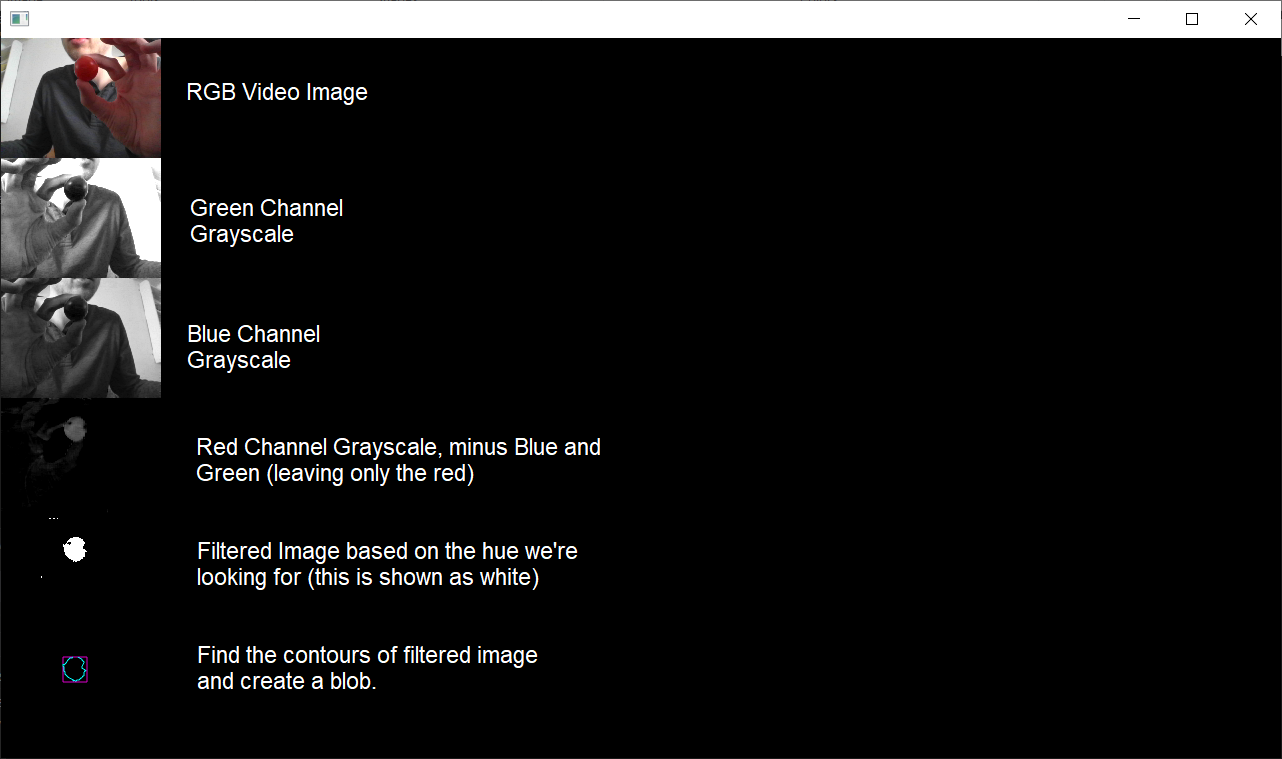

After initialising the webcam, I copied each frame into an RGB image and mirrored it, before converting it into separate grayscale planar images, one for each colour channel (r, g and b). I then added the green and blue channels together and subtracted the result from the red. This meant that my final grayscale image showed only red: everything else was black.
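A minimal sketch of this step in plain C++, independent of openFrameworks and ofxOpenCv, follows. The `Gray` struct and the `splitMirrored` / `isolateChannel` functions are hypothetical stand-ins for the addon's image classes. The subtraction is assumed to saturate at 0, as 8-bit image arithmetic typically does, rather than wrap around.

```cpp
#include <algorithm>
#include <cstddef>
#include <cstdint>
#include <vector>

// A single-channel 8-bit image stored row-major, like a grayscale planar image.
struct Gray {
    int width;
    int height;
    std::vector<std::uint8_t> px;
};

// Saturating 8-bit add/subtract: results clamp to 0..255 instead of wrapping.
std::uint8_t satAdd(std::uint8_t a, std::uint8_t b) {
    return static_cast<std::uint8_t>(std::min(255, int(a) + int(b)));
}
std::uint8_t satSub(std::uint8_t a, std::uint8_t b) {
    return static_cast<std::uint8_t>(std::max(0, int(a) - int(b)));
}

// Split an interleaved RGB buffer into three planes, mirroring horizontally so
// on-screen movement matches the player's movement in front of the camera.
void splitMirrored(const std::vector<std::uint8_t>& rgb, int w, int h,
                   Gray& r, Gray& g, Gray& b) {
    r = g = b = Gray{w, h, std::vector<std::uint8_t>(std::size_t(w) * h)};
    for (int y = 0; y < h; ++y) {
        for (int x = 0; x < w; ++x) {
            std::size_t src = (std::size_t(y) * w + x) * 3;
            std::size_t dst = std::size_t(y) * w + (w - 1 - x);  // mirrored column
            r.px[dst] = rgb[src];
            g.px[dst] = rgb[src + 1];
            b.px[dst] = rgb[src + 2];
        }
    }
}

// Isolate one colour: target - (other1 + other2). Pixels where the target
// channel does not dominate end up at (or near) 0, i.e. black.
Gray isolateChannel(const Gray& target, const Gray& other1, const Gray& other2) {
    Gray out{target.width, target.height, std::vector<std::uint8_t>(target.px.size())};
    for (std::size_t i = 0; i < target.px.size(); ++i) {
        out.px[i] = satSub(target.px[i], satAdd(other1.px[i], other2.px[i]));
    }
    return out;
}
```

For red, call `isolateChannel(r, g, b)`. The same function handles the green paddle with `isolateChannel(g, r, b)`. Note that a white pixel (255, 255, 255) also comes out black, because the other two channels together cancel the target.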

With my colour channels set up, I now just needed to create a tracking blob around any red in the image. I first filtered the image so that any pixels within a certain range of values (0-55 proved to be the magic number, picking up most of the red but missing out any artefacts closer to black) were coloured white, and any that weren’t were coloured black. I used a conditional operator to check this.
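The per-pixel filter can be sketched as follows. This is a plain C++ illustration rather than the project's code. `thresholdBand` and its `lo`/`hi` bounds are hypothetical. The write-up doesn't say exactly which side of the range was treated as red, so the function simply accepts any inclusive band.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Turn a grayscale buffer into a binary mask: pixels whose value lies in
// [lo, hi] become white (255), all others black (0).
std::vector<std::uint8_t> thresholdBand(const std::vector<std::uint8_t>& gray,
                                        std::uint8_t lo, std::uint8_t hi) {
    std::vector<std::uint8_t> mask(gray.size());
    for (std::size_t i = 0; i < gray.size(); ++i) {
        // The conditional (ternary) operator does the per-pixel test.
        mask[i] = static_cast<std::uint8_t>((gray[i] >= lo && gray[i] <= hi) ? 255 : 0);
    }
    return mask;
}
```

The bounds would be tuned to the lighting in the room. The white pixels that survive are what the contour finder then outlines as a blob.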

I then simply had to use the findContours function on the newly filtered image to create a blob. Looping over the resulting blobs let me set the relevant coloured orb’s x and y values to match the centre of the blob (using its centroid).
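The centroid idea can be shown without OpenCV using image moments, where the centroid is (m10 / m00, m01 / m00). This is a simplification: unlike a contour finder, it treats every white pixel as part of one blob. `Point` and `maskCentroid` are hypothetical names.

```cpp
#include <cstddef>
#include <cstdint>
#include <optional>
#include <vector>

struct Point { float x, y; };

// Centroid of all white pixels in a binary mask: m00 counts the pixels,
// m10 and m01 sum their x and y coordinates. Returns nothing if the mask is
// empty (e.g. the paddle has left the camera's view).
std::optional<Point> maskCentroid(const std::vector<std::uint8_t>& mask,
                                  int width, int height) {
    double m00 = 0, m10 = 0, m01 = 0;
    for (int y = 0; y < height; ++y) {
        for (int x = 0; x < width; ++x) {
            if (mask[std::size_t(y) * width + x] != 0) {
                m00 += 1;
                m10 += x;
                m01 += y;
            }
        }
    }
    if (m00 == 0) return std::nullopt;
    return Point{float(m10 / m00), float(m01 / m00)};
}
```

The result is in camera pixels. If the game window is larger than the camera frame, it would need scaling by windowWidth / cameraWidth (and likewise for height) before being assigned to an orb.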

After doing this for the red channel, I realised that it would be easy to replicate for either the blue or green channel, rather than tracking the black side of the ping pong paddle (nightmare). I ended up opting for green, with the plan being to vinyl wrap my second ping pong paddle to make sure it was the right colour.

Technical – Game Engine

There’s not much to say about the game itself, as it was quite easy to put together once I’d got the computer vision out of the way. All objects in the game, aside from the main player orbs, were handled using classes. The orbs’ positions were taken using the centroid of the colour-tracked blobs. I then used a class to create the wire.
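As an illustration of the class-based approach, here is a minimal plain C++ obstacle class that spawns just past the right edge and scrolls left. The write-up doesn't describe the real classes' contents, so `Obstacle`, its members, the circular shape and the fixed per-frame speed are all hypothetical.

```cpp
#include <algorithm>
#include <vector>

// Spawns just past the right edge of the window and scrolls left
// at a constant speed each frame.
class Obstacle {
public:
    Obstacle(float windowWidth, float y, float radius, float speed)
        : x_(windowWidth + radius), y_(y), radius_(radius), speed_(speed) {}

    void update() { x_ -= speed_; }                      // move left once per frame
    bool offScreen() const { return x_ + radius_ < 0; }  // fully past the left edge

    float x() const { return x_; }
    float y() const { return y_; }
    float radius() const { return radius_; }

private:
    float x_, y_, radius_, speed_;
};

// Advance every obstacle, then drop the ones that have scrolled off screen.
void updateObstacles(std::vector<Obstacle>& obstacles) {
    for (Obstacle& o : obstacles) o.update();
    obstacles.erase(std::remove_if(obstacles.begin(), obstacles.end(),
                                   [](const Obstacle& o) { return o.offScreen(); }),
                    obstacles.end());
}
```

Removing off-screen obstacles keeps the list from growing for the whole session. Moving a fixed distance per frame means obstacles slow down whenever the frame rate drops. Scaling the speed by the time since the last frame would be one way to keep them steady.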

To handle collision for the wire, I placed twenty nodes at even intervals along a line between the two orbs (using getInterpolated). I then detected collisions between these nodes and the obstacles, which constantly spawn on the right and move to the left of the screen.
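The node-based collision check might look something like this in plain C++. `Vec2`, `lerp`, `wireNodes` and `wireHits` are hypothetical names, and `lerp` stands in for getInterpolated. The sketch also assumes the nodes include both endpoints and that obstacles are circles; the write-up doesn't describe the original shapes or collision maths.

```cpp
#include <vector>

struct Vec2 { float x, y; };

// Linear interpolation between a and b: t = 0 gives a, t = 1 gives b.
Vec2 lerp(Vec2 a, Vec2 b, float t) {
    return {a.x + (b.x - a.x) * t, a.y + (b.y - a.y) * t};
}

// Place `count` evenly spaced nodes along the wire, including both orbs.
std::vector<Vec2> wireNodes(Vec2 orbA, Vec2 orbB, int count) {
    std::vector<Vec2> nodes;
    for (int i = 0; i < count; ++i) {
        float t = (count > 1) ? float(i) / float(count - 1) : 0.0f;
        nodes.push_back(lerp(orbA, orbB, t));
    }
    return nodes;
}

// A node hits a circular obstacle if it lies within the radius. Comparing
// squared distances avoids a square root per node.
bool wireHits(const std::vector<Vec2>& nodes, Vec2 centre, float radius) {
    for (const Vec2& n : nodes) {
        float dx = n.x - centre.x;
        float dy = n.y - centre.y;
        if (dx * dx + dy * dy <= radius * radius) return true;
    }
    return false;
}
```

The gap between nodes grows as the orbs move apart. An obstacle narrower than that gap can pass between two nodes without registering. A segment-to-circle distance test avoids this, at the cost of slightly more maths.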

Self-evaluation

I am really happy with the finished product. I was admittedly a bit worried at the halfway stage, when I had a working control scheme but an incredibly shallow play experience. My iterated design solved this worry, as it was both more enjoyable to play and made the control scheme part of that experience, rather than having it tacked on as a requirement of the project. I was also particularly pleased that I managed to get the computer vision tracking working really smoothly, as this was a big concern going into this project.

The only problem with the project is that it can be quite laggy at times. I’m not sure whether this is a result of my laptop, or something to do with the number of on-screen objects combined with the quite complex computer vision tasks being run at the same time. In the end I couldn’t record the screen and play the game at the same time without the footage stuttering, so this is an issue I’d like to look into fixing. There are also a couple of collision-related bugs that I ran out of time to iron out.

References

The ‘Experimental Game Development’ chapter of the ofBook was really useful for seeing what was and wasn’t possible with openFrameworks, and it also helped me with collision detection: https://openframeworks.cc/ofBook/chapters/game_design.html

For more information about wire loop games (and also the picture credit): https://en.wikipedia.org/wiki/Wire_loop_game