Face Casino

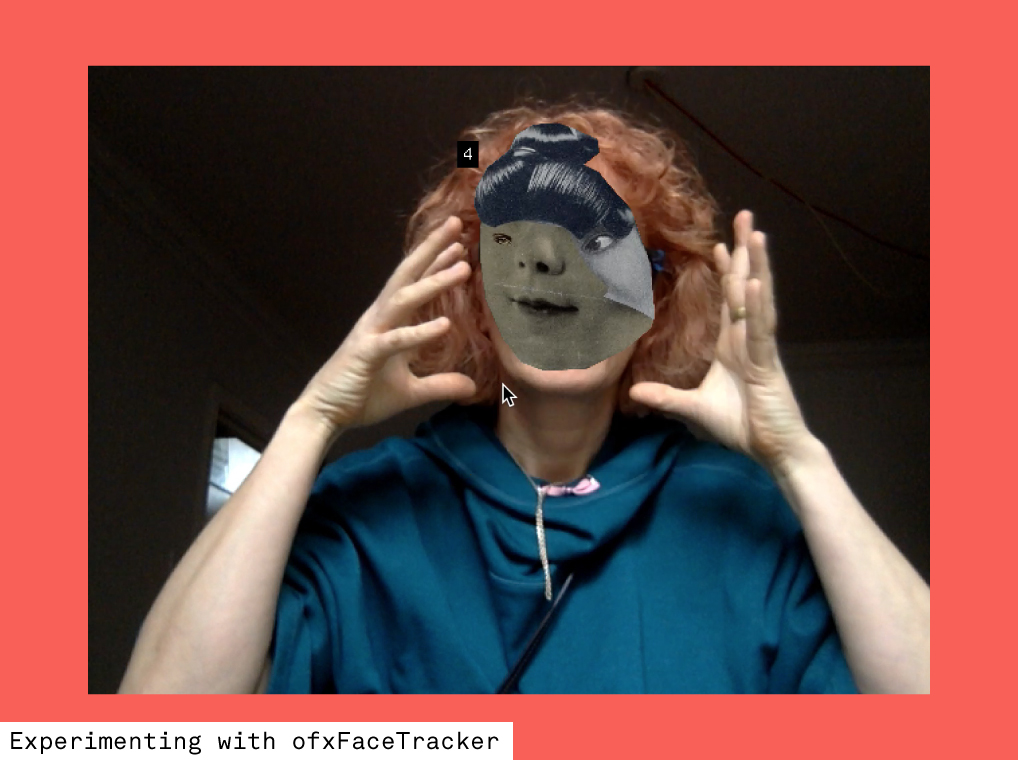

A playful, automated facial casino experience that augments the viewer with crude new personalities, positioned over their actual face and moving with it.

produced by: Panja Göbel

Introduction

Face Casino is an antidote to the facial recognition systems that now pervade our daily lives. The application pretends to partially know the viewer, offering an interpretation of likes, stats and surreal poetry attached to the side of each new face.

Every time the viewer looks to the side, a new facial combination is loaded. The app encourages a Tinder-esque swiping through the facial mash-ups in pursuit of a match, i.e. getting comfortable with the “interpretation of the machine”.

Every configuration is automatically collected and added to an ever-evolving piece.

Concept and background research

I have a big interest in computer vision and am particularly curious about how machines interpret us with all the other data they hold on us. What do they know about us? How do we appear in their eyes?

Over the past 5 years we have seen facial augmentation mushroom, with anything from cartoonesque overlays to facial enhancement or distortion apps. I wanted to create a distinct appearance that set itself apart from these common visual reference points and instead resembled an expression by an "unsophisticated" artificial intelligence.

One big inspiration here was the German Dadaist collage artist Hannah Höch. Her provocative facial photomontages, often a critique of patriarchal society, feel very relevant to most of the political and societal issues we are facing at the moment. Another big inspiration was the work of the American multi-media artist Tony Oursler. In his series of works themed around privacy, surveillance and identity, absurd head installations invite the viewer to see themselves through the lens of a machine.

Technical

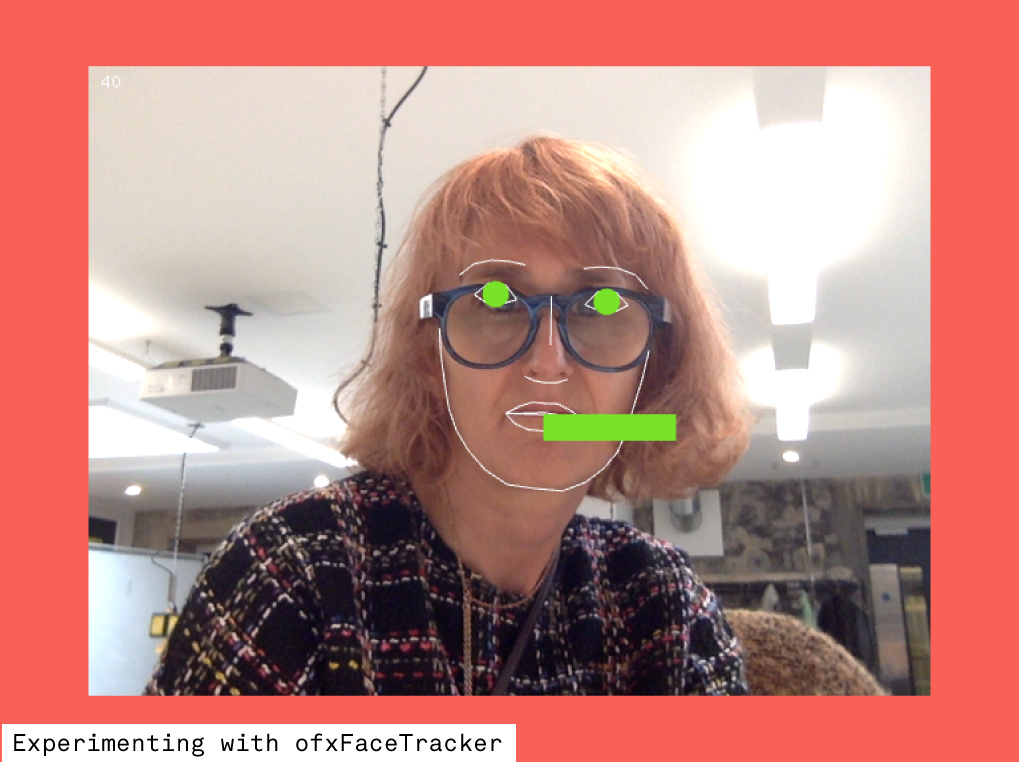

I was immediately drawn to the face controller homework we had as part of the OSC week. I wanted to create a gestural experience, and Kyle McDonald's FaceOSC seemed a perfect fit to start with. After a short time experimenting with it, though, I realised that ofxFaceTracker was a better fit for what I was trying to achieve. I needed the camera feed and just wanted the overlaid components to move with the user's actual face, so there was no need for OSC messaging from one app to another.

I started experimenting with the ofxFaceTracker add-on examples to evaluate what I needed for my experience to work. For a while I hoped to create a two-user interaction where the app takes a screenshot of the first user and then comps it onto the second user, but I found it hard to extract the raw data pairs for each face. This should be technically possible, but it was way beyond my technical skillset.

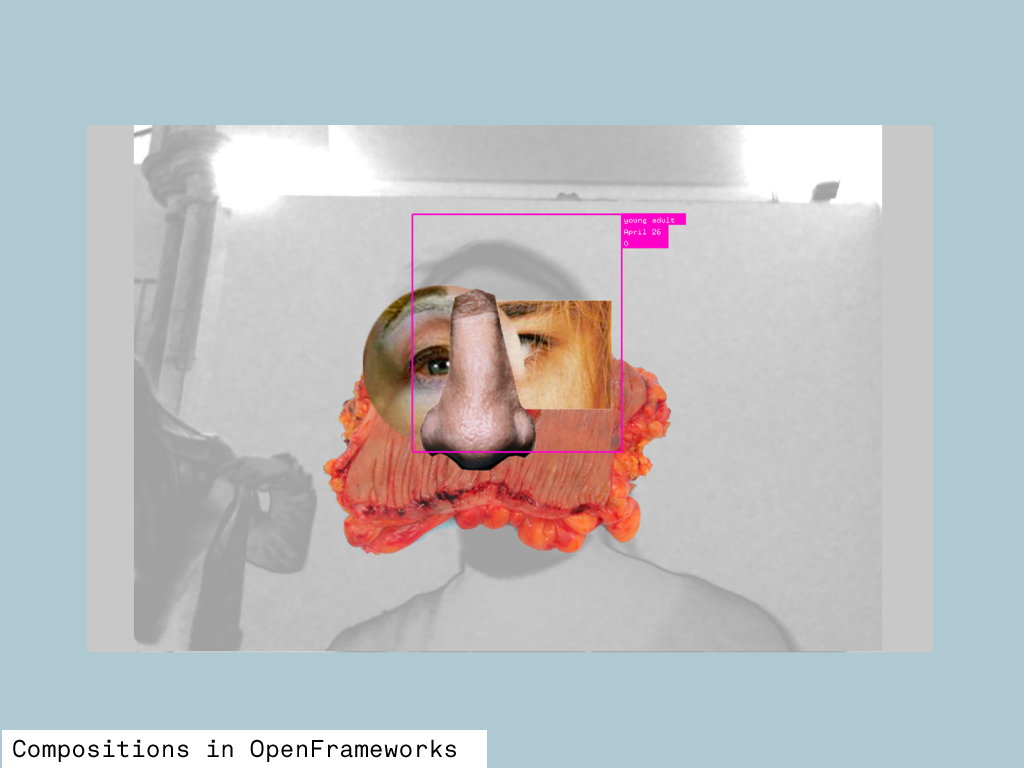

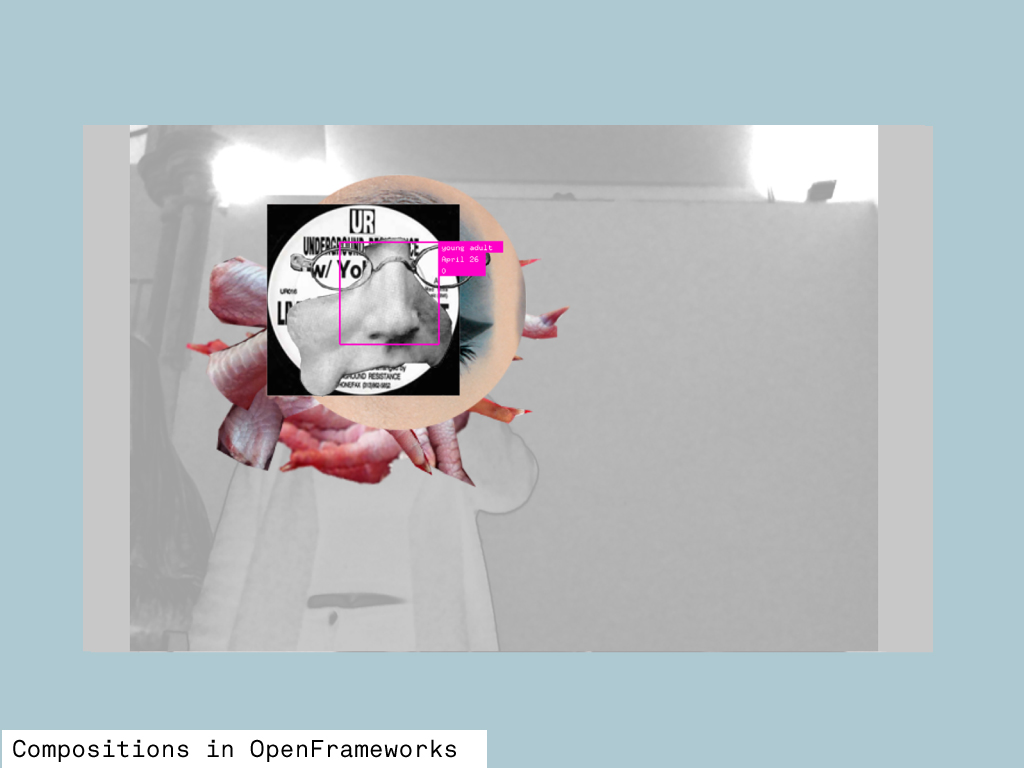

Within the ofxFaceTracker add-on's folder I found "example-cutout", which uses the polyline that moves with the user's actual face to distort another image. I stripped out all the Delaunay code, which I didn't need, and just used the polyline from the face tracker to anchor my mapped facial components. Then I integrated the camera feed and gave it a solarised black and white effect to knock it back from the facial overlays.
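As a rough illustration of the anchoring idea, the sketch below takes the points of a tracked outline, computes their bounding box, and centres a scaled overlay image on it. It is plain C++ rather than openFrameworks code, and the `Point`, `Rect`, `boundingBox` and `placeOverlay` names are hypothetical; in the app itself the outline would come from the face tracker's polyline, and the project's actual placement logic may differ.

```cpp
#include <algorithm>
#include <vector>

struct Point { float x, y; };
struct Rect  { float x, y, w, h; };

// Axis-aligned bounding box of a tracked feature outline.
// Returns a zero-sized Rect when the outline is empty.
Rect boundingBox(const std::vector<Point>& outline) {
    if (outline.empty()) return {0.0f, 0.0f, 0.0f, 0.0f};
    float minX = outline[0].x, maxX = outline[0].x;
    float minY = outline[0].y, maxY = outline[0].y;
    for (const Point& p : outline) {
        minX = std::min(minX, p.x);
        maxX = std::max(maxX, p.x);
        minY = std::min(minY, p.y);
        maxY = std::max(maxY, p.y);
    }
    return {minX, minY, maxX - minX, maxY - minY};
}

// Centre an overlay image on the feature. The overlay width is the
// feature width times `scale`; the height keeps the image's aspect ratio.
Rect placeOverlay(const Rect& feature, float imgW, float imgH, float scale) {
    float w  = feature.w * scale;
    float h  = (imgW > 0.0f) ? w * imgH / imgW : 0.0f;
    float cx = feature.x + feature.w / 2.0f;
    float cy = feature.y + feature.h / 2.0f;
    return {cx - w / 2.0f, cy - h / 2.0f, w, h};
}
```

Recomputing the rectangle every frame from the latest outline is what makes the overlay follow the face; a `scale` above 1 lets a cut-out extend beyond the feature it sits on.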

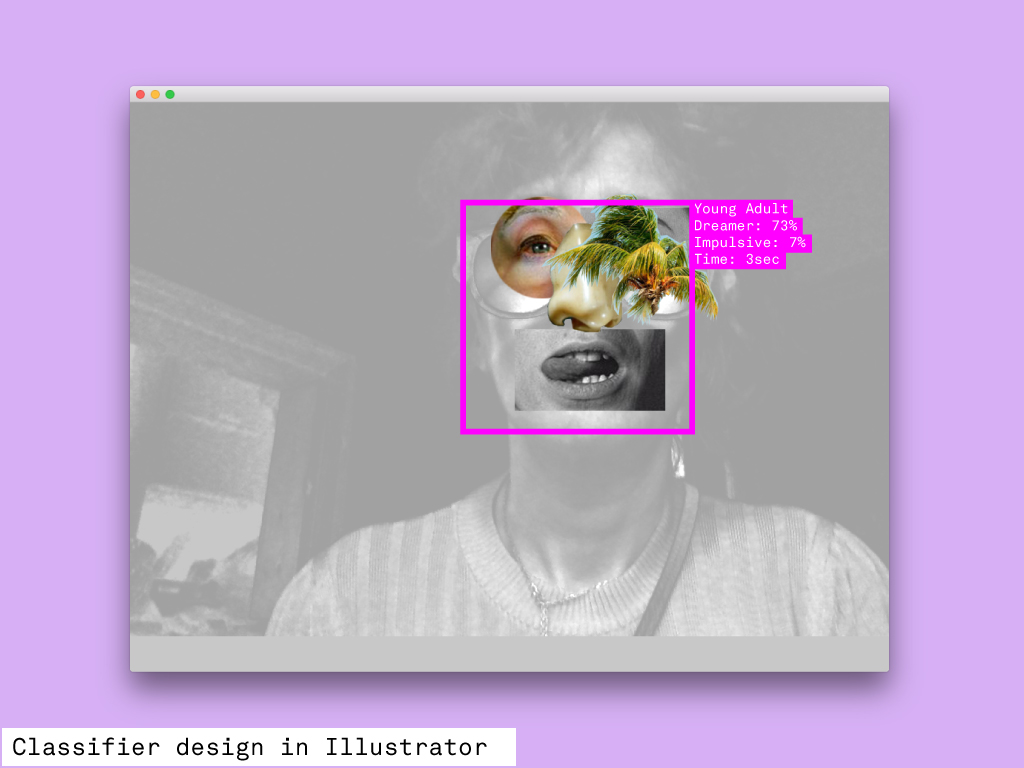

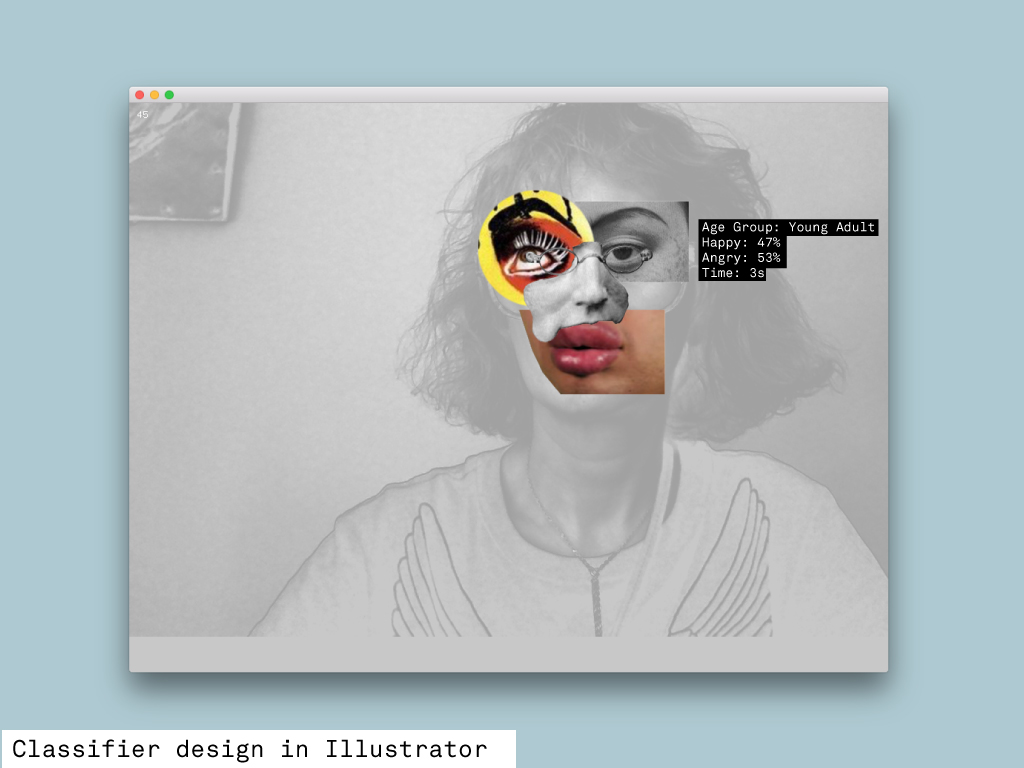

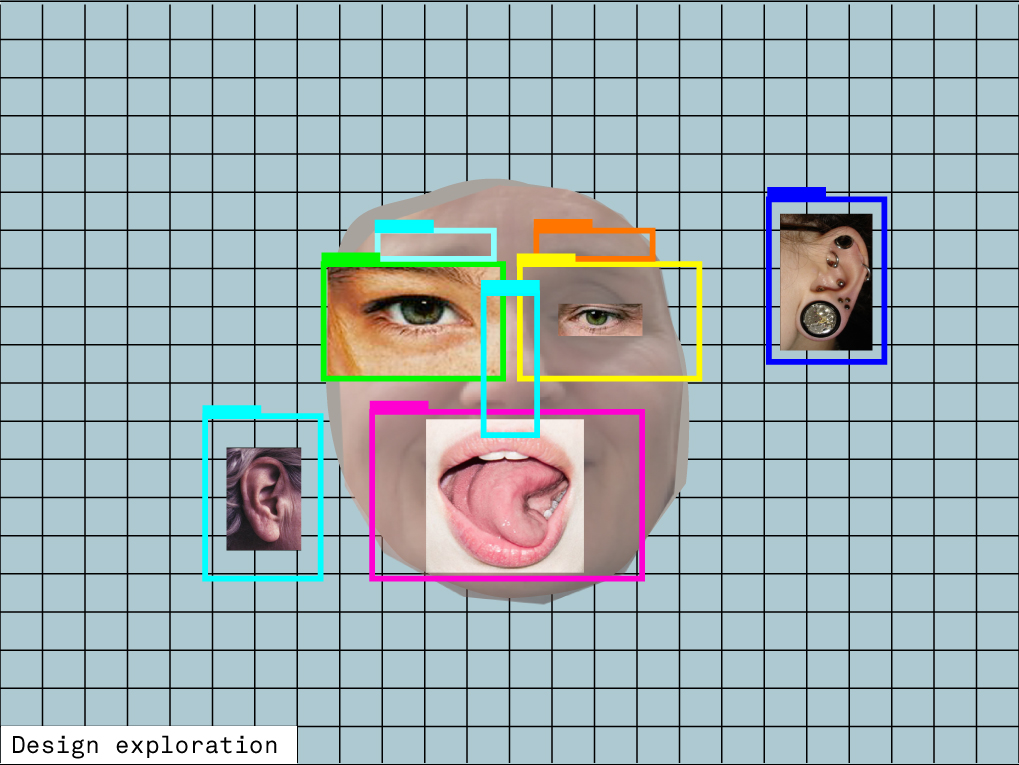

In parallel with my tech experimentation I had the big task of sourcing images, cutting them out in Photoshop and exporting them as PNGs with transparent areas that allow for overlapping. I started with normal facial components but then moved on to exploring objects as well. I wanted the experience to be about the digital representations of our data as well as about our human-like appearance. During this process I found myself hopping between software: Illustrator to create quick compositions and work out the sizing of components, Photoshop to cut out the components, and openFrameworks to feed my efforts into the machine. I enjoyed the surprising and absurd combinations I got back.

I created image vectors for every facial component, each loading around 10-15 photographic images. Then I randomised the way these images were displayed, with a current object holding one random component of the vector at a time. Every time the facial tracking loses the user's facial data, a new configuration is loaded for all the facial component vectors.

To hint at a future idea, I created image classifier rectangles, currently "fake", that suggest the system is analysing what it sees. I'm hoping to develop this further for our Popup show so that the system cycles through words in the same way that it currently runs through the image vectors.

Finally I integrated a screenshot facility so the system takes a picture of every creation that it compiles. I'm planning to create an ever-evolving piece with that.
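The reshuffle-on-loss behaviour described above could be sketched roughly as follows. This is standard C++ only, not the project's code: `ComponentPool`, `reshuffle` and `LossDetector` are hypothetical names, the file names are placeholders, and the per-frame "face found" flag is assumed to come from the tracker (ofxFaceTracker exposes a `getFound()` query that could plausibly supply it).

```cpp
#include <cstddef>
#include <random>
#include <string>
#include <vector>

// One facial component (eyes, nose, mouth...) with its pool of cut-outs.
struct ComponentPool {
    std::vector<std::string> images; // placeholder file names
    std::size_t current = 0;         // index of the image shown right now
};

// Pick a new random image for every component pool.
void reshuffle(std::vector<ComponentPool>& pools, std::mt19937& rng) {
    for (ComponentPool& pool : pools) {
        if (pool.images.empty()) continue; // nothing to choose from
        std::uniform_int_distribution<std::size_t> pick(0, pool.images.size() - 1);
        pool.current = pick(rng);
    }
}

// Reports the single frame on which tracking goes from "found" to "lost".
struct LossDetector {
    bool wasFound = false;
    bool update(bool foundNow) {
        bool justLost = wasFound && !foundNow;
        wasFound = foundNow;
        return justLost;
    }
};
```

In an update loop one would call `detector.update(found)` once per frame and call `reshuffle` only when it returns true, so that a face staying out of view does not trigger a new configuration on every frame.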
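One possible shape for the planned word cycling is sketched below in plain C++. Everything here is an assumption made for illustration: the `LabelCycler` name, the idea of holding each word for a fixed number of frames, and the word list supplied by the caller are not taken from the project.

```cpp
#include <cstddef>
#include <string>
#include <utility>
#include <vector>

// Steps through a fixed word list, showing each word for `framesPerWord` frames.
struct LabelCycler {
    std::vector<std::string> words;
    int framesPerWord;
    int frame = 0;          // frames spent on the current word
    std::size_t index = 0;  // position in the word list

    LabelCycler(std::vector<std::string> w, int hold)
        : words(std::move(w)), framesPerWord(hold > 0 ? hold : 1) {}

    // Call once per frame; returns the word to draw next to the rectangle.
    std::string tick() {
        if (words.empty()) return "";
        std::string word = words[index];
        if (++frame >= framesPerWord) { // time to move on, wrapping at the end
            frame = 0;
            index = (index + 1) % words.size();
        }
        return word;
    }
};
```

Because the cycler only depends on a frame count, the same object could later be fed labels from a real classifier without changing the drawing code.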
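To keep every capture rather than overwrite the previous one, each screenshot needs its own file name. The sketch below shows one way to generate them in standard C++; `shotName`, `ShotCounter` and the `face-0000.png` pattern are hypothetical. In openFrameworks the resulting name could then be handed to a screen-grab call such as `ofSaveScreen`, but that wiring is not shown and is an assumption about how the capture might be done.

```cpp
#include <iomanip>
#include <sstream>
#include <string>

// Builds a zero-padded file name such as "face-0007.png".
std::string shotName(int index) {
    std::ostringstream name;
    name << "face-" << std::setw(4) << std::setfill('0') << index << ".png";
    return name.str();
}

// Hands out a fresh file name each time a configuration is captured.
struct ShotCounter {
    int next = 0;
    std::string take() { return shotName(next++); }
};
```

Zero-padding keeps the files in capture order when sorted by name, which helps if the collected images are later assembled into a sequence.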

Future development

I think this piece would be really interesting if it performed sentiment analysis on the user's face and then supplied a live response by searching for images on the internet. I'm also keen to implement machine learning to train the system on its own monster mash-ups. Maybe it could analyse these based on the emotions that it interprets in their faces? I like the absurdity and the creative promise this application holds. Over the summer I'm hoping to experiment with silicone prosthetics that the user could attach to their real face. These artificial components could then compute with the app. This would juxtapose the physical with digital augmentation and add another layer to the blurring between human and machine.

Self evaluation

I'm really happy with how phase one of this project turned out. Of course, I could spend a lot more time on sourcing images and experimenting with what works and what doesn't, but I think it's at a perfect place now to get it user tested. From a code point of view, I will turn the facial component vectors into classes to simplify the ofApp.cpp file, something I didn't have time to do. I also want to spend some time on the classifiers to deliver a more personalised experience.

From the little user testing that I have undertaken, the interaction feels intuitive and playful. At this stage I feel confident about taking it to the next level.

References

Dadaist collage artist Hannah Höch

American multi-media artist Tony Oursler

Uncomfortable Proximity by Yoha

Computer Vision: Algorithms and Applications by Richard Szeliski

Learning OpenCV, O'Reilly

Kyle McDonald ofxFaceTracker blogpost on polylines

OpenGL Texture map for camera feed

openFrameworks website