Diffusion

"Diffusion" is a performance and interactive installation that uses an electroencephalography (EEG) brainwave sensor to convert intangible, abstract brain activity, such as thinking or feeling, into tangible things such as sounds, objects and sculptures.

By connecting the work to human brain activity, the project sets out to reveal the inherent relationship between thought and matter, concept and object, and human and machine.

Produced by: Shuai Xu

Performers: Dahong Wang, Yuhao Yin, Meijun Xu

Introduction

This research investigates the intertwined and interactive relationship between human consciousness and computational practice through an interactive installation and an analysis of human brain data recorded by electroencephalogram (EEG) via a brain-computer interface (BCI). Through phenomenological research, the project treats human consciousness as a medium of expression and creation, heightening the embodiment and sensation of emotion through tangible and intangible feelings. The project is therefore an experiment in converting intangible, abstract brain activity (thinking or feeling) into tangible things, such as sounds, objects or sculptures.

By linking this interactive audio-visual project to human brain activity, the work aims to reveal the inherent relationship between thinking and objects, the real and the unreal, and human and machine. Furthermore, to deliver an interactive experience for participants, the installation assesses human motivation, which can take the form of abstract constructs such as creativity, emotion and insight.

Keywords: EEG, brain activity, visual stimuli, smart material, Max/MSP, physical computing, interactive installation, biosensor

Research Questions

This research project aims to analyse both sides of the interaction, especially the relationship between human brain activity, biofeedback and a brain worker's sense of presence. Two questions are proposed to guide this experiment:

1. How can we build a bridge between brain activity and an audio-visual installation during interactive experiences?

2. How do variations in audio-visual stimuli alter an individual's brain activity, and how do the two influence each other?

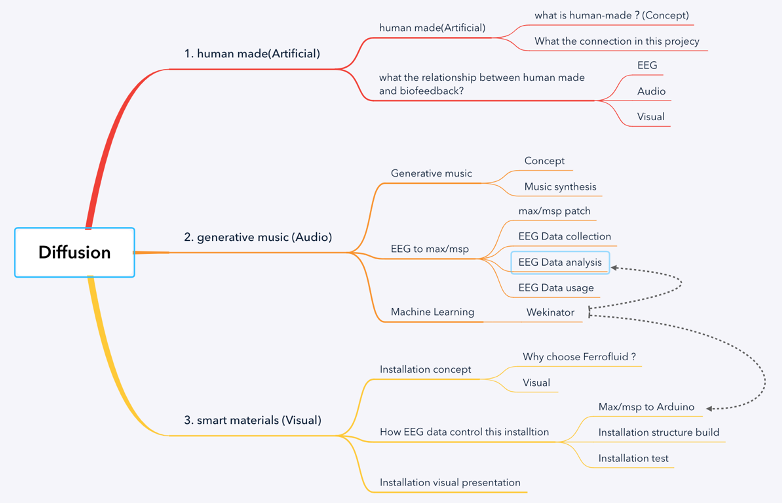

This project explores these questions through four aspects. Firstly, relevant theory and practice involving electroencephalography (EEG) in contemporary art will be presented, together with a discussion of the 'human-made' (the artificial) and a description of how a narrative can be created using a biosensor. Secondly, this essay will explain how the audio component of the installation generates music from the biofeedback produced by the EEG, and which technologies it draws on. Thirdly, this essay will examine the relationship between humans and machines. Finally, and most importantly, this essay will explain the audio-visual installation 'Diffusion' and demonstrate how it functions as an interactive performance and as a process of 'human-made'.

Concept and background research

As Benayoun states in ‘Artificial Intelligence, All Too Human’ (2017), the term ‘human-made’ refers to human development. It can be traced through both Western and Eastern epic records: humans have been creating new ideas throughout history. The story of artificial intelligence (AI) began with humans wanting to invent a new form of manufacturing. Today, AI subtly helps us improve our quality of life and to control or trigger evolution. AI gives us a chance to know nearly everything. We can learn or control almost anything; we can create a cycle, or let one machine produce another. Many daily tasks, such as designing or moving objects, can now be handled by machines, and much work is completed automatically. As a result, the human-made has become so natural that we no longer notice it.

This project, ‘Diffusion’, aims to help us understand, or feel, what the human-made (artificial) is: the things we do not pay attention to or notice. Exhibition participants control real things, such as sound, the visual system and ferrofluid, through their brain activity, whether that takes the form of thinking, feeling or meditation. Even so, I cannot fully explain what the real human-made, the truly artificial, is: human consciousness is becoming more and more impenetrable, and perhaps only intelligence can understand the human-made (artificial).

The installation

Generative Music

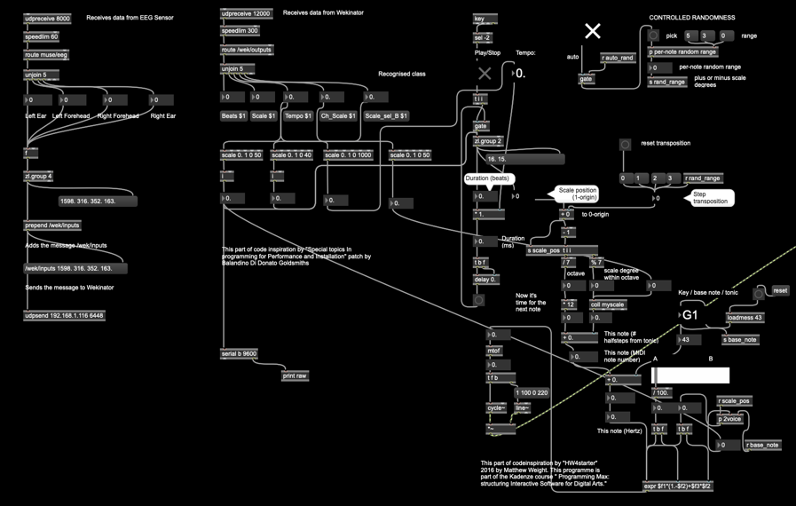

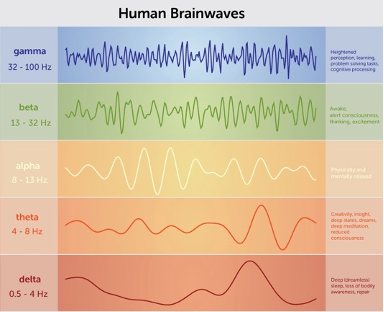

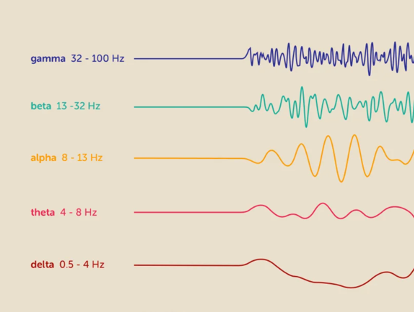

To obtain brainwave data from participants, the ‘Muse2’ EEG headset was selected. It is well suited to preliminary research on brainwaves. The sensor provides four channels of EEG data, corresponding to four electrode sites on the head (left ear, left forehead, right ear, right forehead). Max/MSP was used to build the music system in order to realise an artistic musical intention. The Max/MSP patch is built from four main elements: the data input from the EEG sensor, the main music generator, sound effects, and music filters. For the filter and effect stages, the patch uses Max/MSP's low-pass, band-pass and high-pass filters, along with distortion and transformation of the audio output.
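The filter and distortion stages live inside the Max/MSP patch, which is not reproduced here. As a rough illustration of what such stages do to a signal, the Python sketch below implements a one-pole low-pass filter and a tanh waveshaping distortion, plus a hypothetical mapping from a normalised EEG value (assumed to lie between 0 and 1) to a filter cutoff. The function names, the 200 to 5000 Hz cutoff range and the exponential mapping are illustrative assumptions, not the project's actual patch settings.

```python
import math


def eeg_to_cutoff(value, min_hz=200.0, max_hz=5000.0):
    """Hypothetical mapping: a normalised EEG value in [0, 1] sets a cutoff in Hz.

    The mapping is exponential so that equal changes in the EEG value give
    roughly equal perceived changes in brightness.
    """
    t = min(max(value, 0.0), 1.0)  # clamp out-of-range readings
    return min_hz * (max_hz / min_hz) ** t


def one_pole_lowpass(samples, cutoff_hz, sample_rate=44100.0):
    """One-pole (RC-style) low-pass filter applied to a list of samples."""
    # Smoothing coefficient derived from the cutoff frequency
    alpha = 1.0 - math.exp(-2.0 * math.pi * cutoff_hz / sample_rate)
    out, y = [], 0.0
    for x in samples:
        y += alpha * (x - y)  # move the output a fraction of the way toward the input
        out.append(y)
    return out


def soft_clip(samples, drive=4.0):
    """tanh waveshaping distortion, scaled so an input of +/-1 maps to +/-1."""
    norm = math.tanh(drive)
    return [math.tanh(drive * x) / norm for x in samples]


# Example: a 440 Hz sine, low-passed with a cutoff set by an EEG value, then distorted
sr = 44100.0
tone = [math.sin(2 * math.pi * 440 * n / sr) for n in range(512)]
processed = soft_clip(one_pole_lowpass(tone, eeg_to_cutoff(0.3), sr))
```

An exponential rather than linear cutoff mapping is a common choice because pitch and brightness are perceived roughly logarithmically.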

Machine learning

This project uses machine learning as a tool for analysing EEG data and musical elements. I used Max/MSP as a bridge: it received the EEG data and sent it to Wekinator (machine learning software) via Open Sound Control (OSC), and it was used again to collect the training data for making the music.

The 'Muse2' sensor provided four channels of raw EEG signal. Experiments showed that these raw signals follow no obvious pattern; they only reflect the readings of the electrodes on the performer's head. To obtain data that could be controlled more easily, I therefore sent all the raw data to Wekinator for pre-processing, where each of the four channels was processed separately by linear regression.
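Wekinator trains its regression models internally, so the code below is not its implementation. It is a minimal sketch of the same idea under a simplifying assumption: one ordinary least-squares line per channel, mapping a raw reading to a target control value recorded during training. The training pairs and function names are hypothetical.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("inputs must not all be identical")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def fit_per_channel(samples, targets):
    """Fit one independent line per channel.

    samples: list of tuples, one raw reading per channel (four for the Muse headset)
    targets: list of tuples of the same shape, the desired control value per channel
    """
    n_channels = len(samples[0])
    return [
        fit_line([s[ch] for s in samples], [t[ch] for t in targets])
        for ch in range(n_channels)
    ]


def predict(models, sample):
    """Apply each channel's line to the matching channel of a new reading."""
    return [slope * x + intercept for (slope, intercept), x in zip(models, sample)]
```

Fitting each channel on its own keeps every output tied to a single electrode, which matches the description above; a multivariate model could instead let all four channels contribute to each output.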

Physical Interactive installation

To mimic brain behaviour, this experiment attempts to forge a relationship between brain activity and ferrofluid. The four channels of EEG data serve as the primary input controlling the installation. The installation was developed in three stages: (1) exploration of materials, (2) interaction within the project and (3) final presentation.

The first stage explored the dynamic movement of the magnetic fluid through simple experiments. I tried different methods of controlling the ferrofluid and different types of magnetic fluid. The most satisfactory results came from ferrofluid suspended in an aqueous or oily medium.

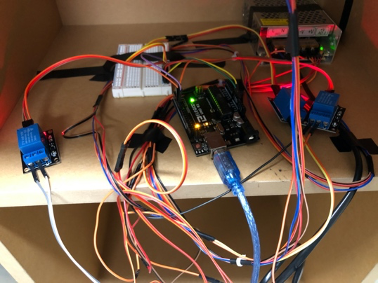

In the second stage, I built a simple demonstration structure consisting of a frame, two motors, some wires and an Arduino Uno board. I then sent the EEG data to the Arduino and mapped it to the movement and angle of the motors, so that the range of motor movement was controlled by the EEG data.
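On the Arduino, the built-in `map()` function performs this kind of linear rescaling. The Python sketch below shows the same arithmetic on the computer side before values are sent to the board. It assumes the EEG values have already been normalised to the range 0 to 1 (for example by the regression stage), that the two motors are hobby servos accepting 0 to 180 degrees, that motor one follows the left-hand channels and motor two the right-hand channels, and that angles travel over serial as a comma-separated line. All of these are illustrative assumptions rather than the project's actual wiring or protocol.

```python
def map_range(value, in_min, in_max, out_min, out_max):
    """Linear rescaling in the style of Arduino's map(), but in floating point
    and clamped, so out-of-range EEG readings cannot overdrive a motor."""
    value = min(max(value, in_min), in_max)
    return out_min + (value - in_min) * (out_max - out_min) / (in_max - in_min)


def eeg_to_servo_angles(channels, in_min=0.0, in_max=1.0):
    """Hypothetical layout: channels ordered (left ear, left forehead,
    right ear, right forehead); motor 1 follows the left pair, motor 2 the right."""
    left = (channels[0] + channels[1]) / 2
    right = (channels[2] + channels[3]) / 2
    return (
        round(map_range(left, in_min, in_max, 0, 180)),
        round(map_range(right, in_min, in_max, 0, 180)),
    )


def serial_line(angles):
    """Format angles as a comma-separated, newline-terminated line for the board to parse."""
    return ",".join(str(a) for a in angles) + "\n"


# Usage: both left channels low, both right channels high
print(serial_line(eeg_to_servo_angles([0.0, 0.0, 1.0, 1.0])), end="")
```

Unlike Arduino's `map()`, which uses integer arithmetic and does not clamp, this version clamps its input so a noisy spike cannot push a servo beyond its range.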

In the final stage, I connected human brain activity and the ferrofluid installation more directly by using electromagnets to control the ferrofluid. I tapped the brainwave channels to capture the electrical pulses the brain produces; one can picture them as a wave rippling through the crowd at a sports arena. As a result, the brainwaves affect the ferrofluid directly. This is a straightforward way to visualise intangible brain activity, and the movement of the ferrofluid serves as a direct expression of the realisation of ‘human-made’.
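One plausible way to turn a raw EEG channel into electromagnet drive is to follow the signal's short-term amplitude. The sketch below keeps a rolling window of samples, computes its RMS after removing the mean, and scales the result to an 8-bit duty cycle of the kind Arduino's `analogWrite()` accepts (0 to 255), which could then switch the magnet through a transistor or driver board. The window length (256 samples, about one second if the headset streams at 256 Hz), the full-scale amplitude of 100 and the class name are assumptions for illustration, not values taken from the installation.

```python
import math
from collections import deque


class EnvelopeToPWM:
    """Hypothetical helper: rolling RMS envelope of one EEG channel,
    mapped to an 8-bit PWM duty cycle (0 to 255)."""

    def __init__(self, window=256, full_scale=100.0):
        self.buf = deque(maxlen=window)  # oldest samples drop out automatically
        self.full_scale = full_scale     # assumed amplitude that gives full power

    def update(self, sample):
        self.buf.append(sample)
        mean = sum(self.buf) / len(self.buf)
        # RMS of the mean-removed window, so a steady DC offset does not energise the magnet
        rms = math.sqrt(sum((x - mean) ** 2 for x in self.buf) / len(self.buf))
        level = min(rms / self.full_scale, 1.0)  # clamp to full power
        return int(round(level * 255))
```

Removing the mean matters here because raw EEG readings often sit on a large offset; without it, the magnet would be driven by the offset rather than by the brain activity riding on top of it.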

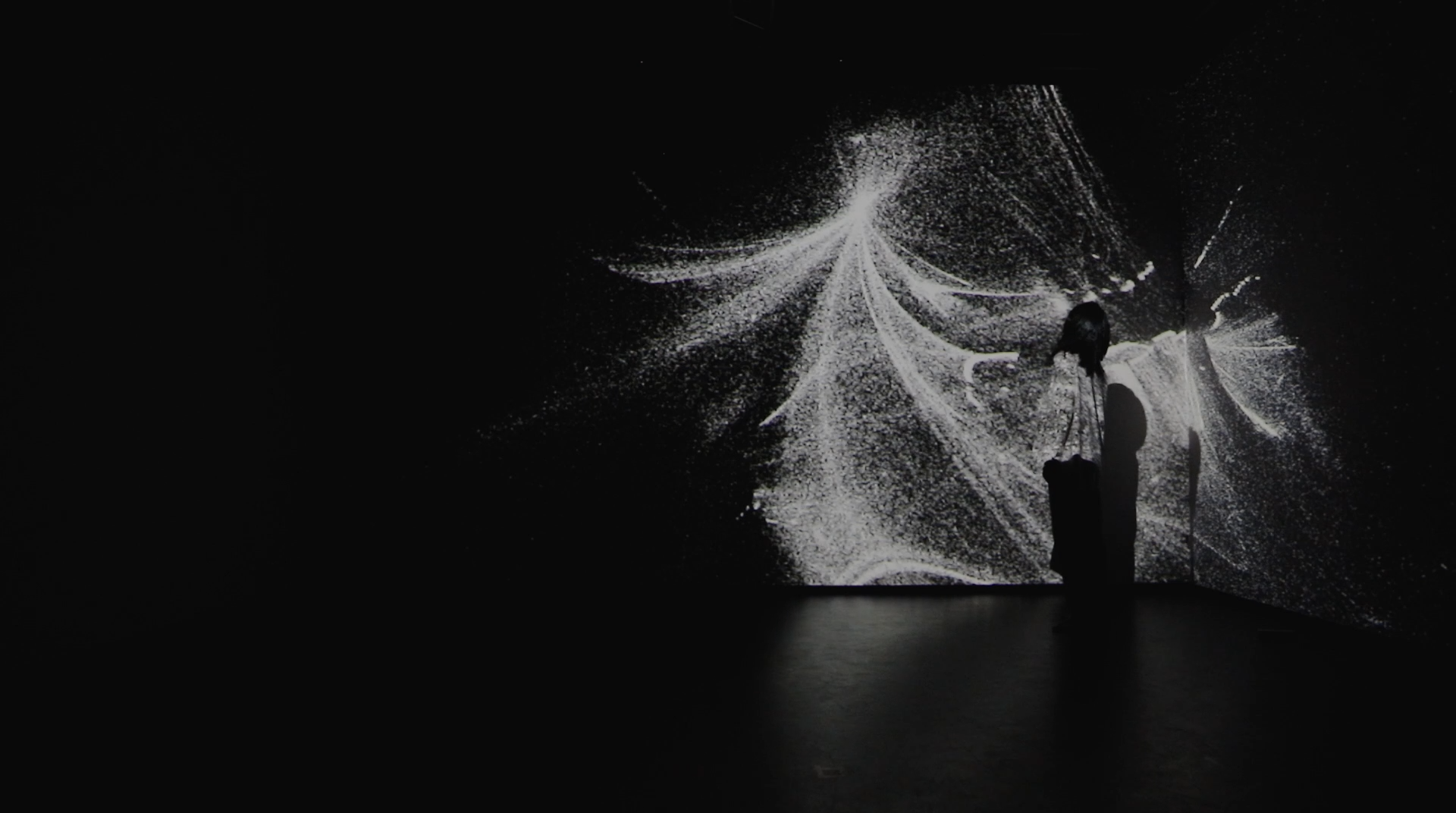

Visual system

Because this project was to be performed in an immersive space with multiple projectors and 10 audio channels, I built a dedicated visual system for it. The visual system was made in Max/MSP, using the GL3 engine and shaders.

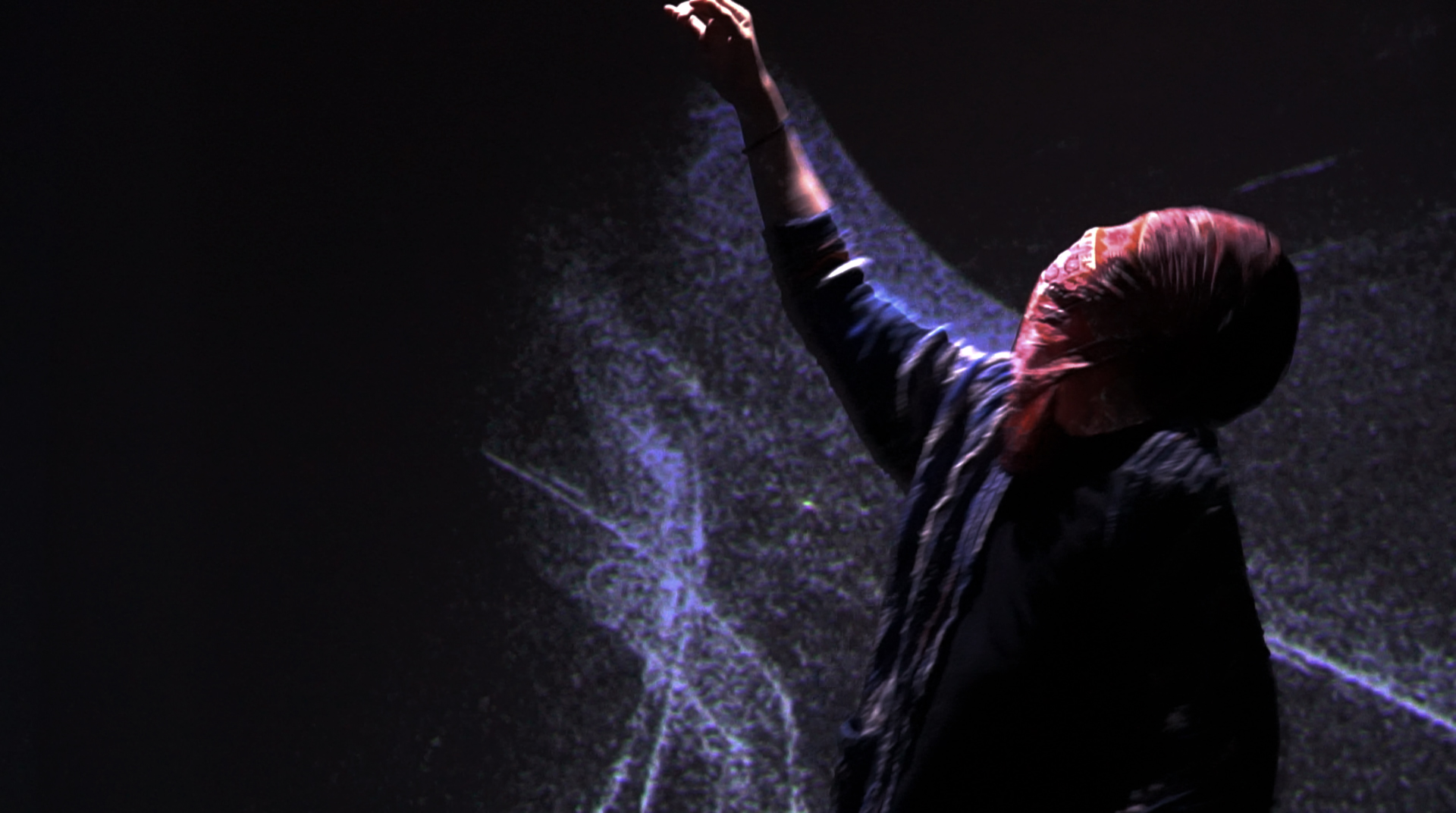

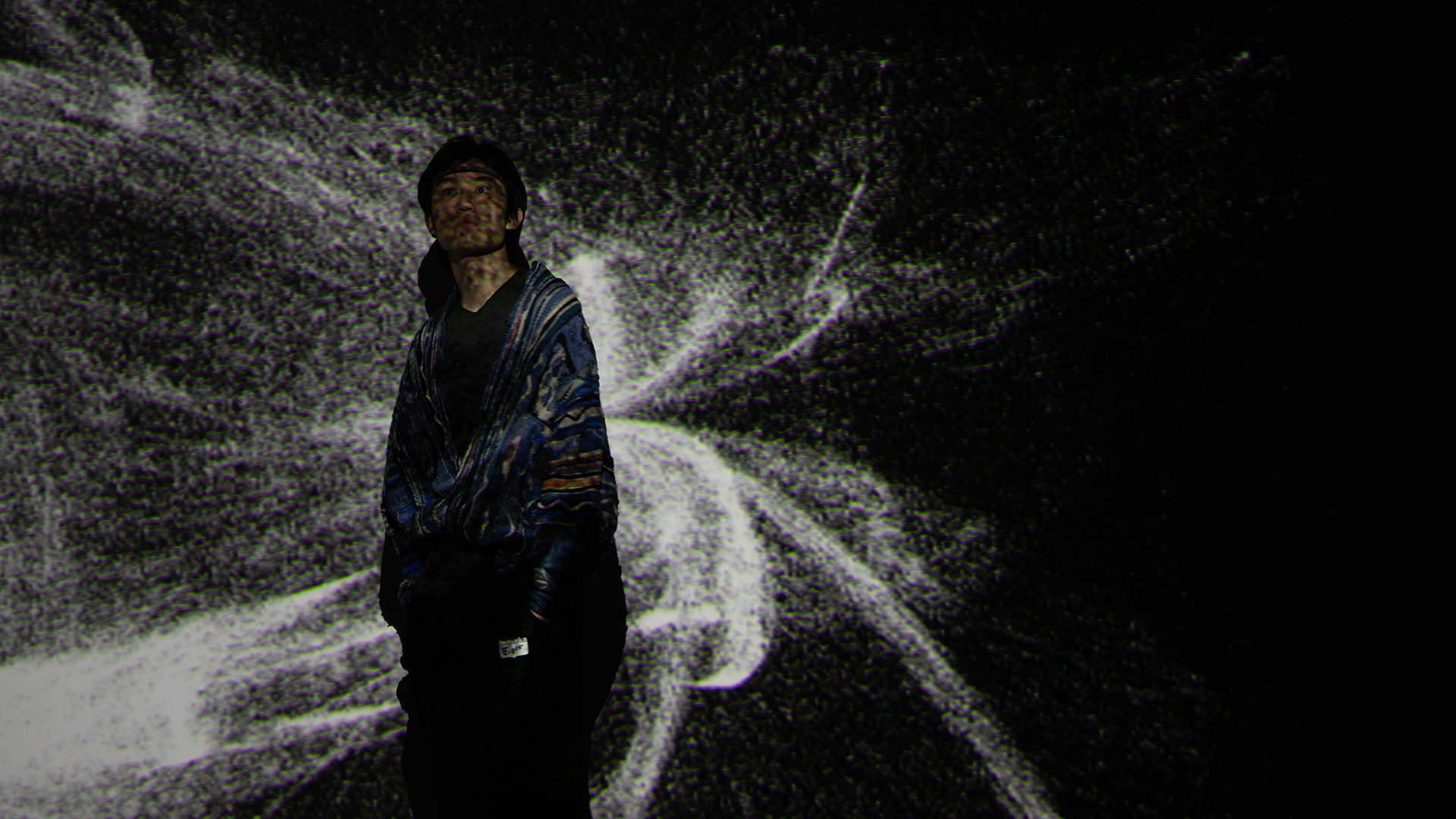

Final Performance

Throughout this project, I have brought human creativity and emotion design into an interactive system comprising sound, visuals and sculpture. I achieved this by using EEG sensors to track each performer's brain activity and let it interact with the sound, visuals and ferrofluid sculpture. The performance featured three performers with different behaviours and bodily movements. The results offered answers to both research questions. Each performer served as an effective bridge to the audio-visual installation. For example, when a performer was calm or meditating, the sound, visuals and ferrofluid remained soft and gentle. Conversely, when a performer exercised strenuously or thought about many different things, the brainwaves fluctuated sharply, and the sound, visual system and ferrofluid also changed drastically.

The project was driven by human brain activity, but during the performance the performer was also affected by the environment, the sound and the visual system. These factors could move the performer into a state of relaxation, tension, thought, or even anger and reflection. The performer therefore becomes part of creating the meaning of the performance itself, building a relationship between the audio-visual system and human brain activity. Neither is autonomous; each supplements the other.

Self-evaluation

This project was my first time building a generative music system and my first time staging a performance. My music system is not yet powerful enough to generate a wide variety of sounds. The visual system often fell short of my own standards, and the physical part was unsuccessful. The work is still a long way from my expectations, so I need to keep studying and researching in these areas.

Future Research Areas

I plan to continue exploring 'human-made' in future work. I will keep researching EEG sensors and try other EEG headsets, such as NeuroSky and OpenBCI. I will also expand my EEG dataset by collecting brainwave data from people of different ages and races, which I expect will make the brain feedback more accurate. Once this is done, I will move to the next stage and combine a virtual reality (VR) headset with an EEG sensor to create a storytelling game. I intend to show the results of 'human-made' in an immersive environment. Furthermore, I will try other biosensors to understand 'human-made' (artificial).

References

Mitchell, T., Hyde, J., Tew, P., Glowacki, D.R., 2014. danceroom Spectroscopy: At the Frontiers of Physics, Performance, Interactive Art and Technology. Leonardo 49, 138–147. https://doi.org/10.1162/LEON_a_00924

Bennett, A., 2002. Interactive Aesthetics. Design Issues 18, 62–69. https://doi.org/10.1162/074793602320223307

de Bérigny, C., Gough, P., Faleh, M., Woolsey, E., 2014. Tangible User Interface Design for Climate Change Education in Interactive Installation Art. Leonardo 47, 451–456. https://doi.org/10.1162/LEON_a_00710

Wong, C.-O., Jung, K., Yoon, J., 2009. Interactive Art: The Art That Communicates. Leonardo 42, 180–181. https://doi.org/10.1162/leon.2009.42.2.180

Stern, N., 2011. The Implicit Body as Performance: Analyzing Interactive Art. Leonardo 44, 233–238. https://doi.org/10.1162/LEON_a_00168

EEG (Electroencephalogram): Purpose, Procedure, and Risks [WWW Document], n.d. Healthline. URL https://www.healthline.com/health/eeg

Brain Factory, 2016. MOBEN. URL https://benayoun.com/moben/2016/03/05/brain-factory/ (accessed 7.15.20).

Human Specificity and Recent Science: Communication, Language, Culture [WWW Document], n.d. ResearchGate. URL https://www.researchgate.net/publication/279416161_Human_Specificity_and_Recent_Science_Communication_Language_Culture (accessed 9.13.20).

Eunoia [WWW Document], n.d. Lisa Park. URL https://www.thelisapark.com/work/eunoia (accessed 7.15.20).

solaris - ::vtol:: [WWW Document], n.d. URL https://vtol.cc/filter/works/solaris (accessed 7.15.20).

Artificial Intelligence, All Too Human, 2017. MOBEN. URL https://benayoun.com/moben/2017/08/29/artificial-intelligence-all-too-human/ (accessed 7.15.20).

McLuhan, M., 2003. Understanding Media: The Extensions of Man. McGraw-Hill.

Kotchoubey, B., 2018. Human Consciousness: Where Is It From and What Is It for. Front Psychol 9. https://doi.org/10.3389/fpsyg.2018.00567

Harvard May Have Pinpointed the Source of Human Consciousness [WWW Document], n.d. Futurism. URL https://futurism.com/harvard-may-have-pinpointed-the-source-of-human-consciousness (accessed 11.3.20).

Bainter, A., 2019. Introduction to Generative Music [WWW Document]. Medium. URL https://medium.com/@metalex9/introduction-to-generative-music-91e00e4dba11 (accessed 7.26.20).

Brouse, A., 2001. The Interharmonium: An Investigation into Networked Musical Applications and Brainwaves (M.A. dissertation). McGill University.

Kramer, G. (Ed.), 1994. Auditory Display: Sonification, Audification, and Auditory Interfaces. Addison-Wesley, Reading, MA.

Marz, M.B., n.d. Interharmonics: What They Are, Where They Come From and What They Do.

Cycling ’74 [WWW Document], n.d. URL https://cycling74.com/ (accessed 7.31.20).

Dynamic Tutorial 3: Distortion

Wekinator | Software for real-time, interactive machine learning, n.d. URL http://www.wekinator.org/ (accessed 9.24.20).

Introduction to OSC | opensoundcontrol.org [WWW Document], n.d. URL http://opensoundcontrol.org/introduction-osc (accessed 8.2.20).

Papell, S.S., 1965. Low viscosity magnetic fluid obtained by the colloidal suspension of magnetic particles. US3215572A.