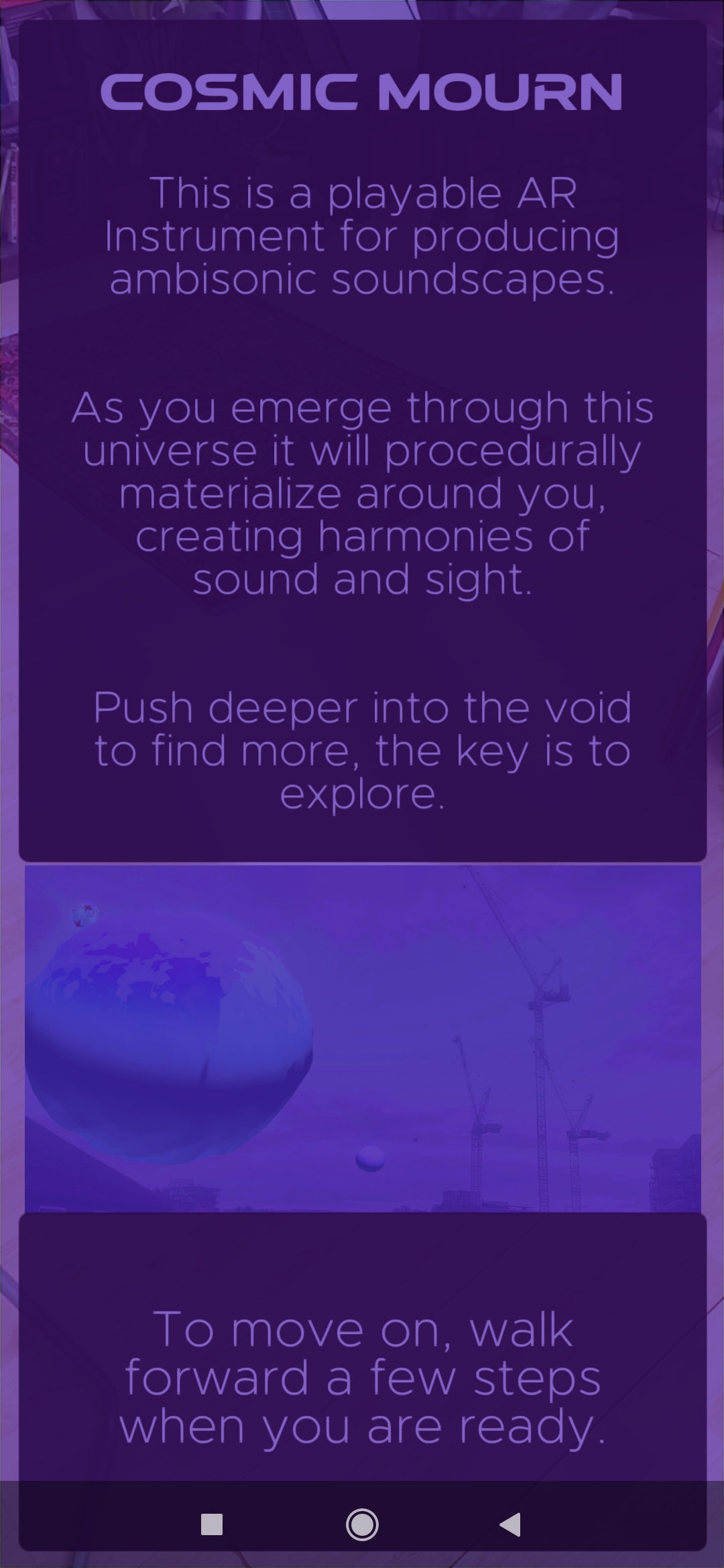

Cosmic Mourn

Cosmic Mourn is a playable AR soundscape that allows you to create your own procedurally produced galactic AV experience. It is an exploration into how new technologies such as AR can allow us to reimagine the traditions, hierarchies, and experiences of making and exploring music.

produced by: George Simms

Concept

The thought behind this project originated from the idea of using play spaces as places to explore and test out new dynamics of interaction. I was thinking about the liberties provided by these virtual spaces, and how play in itself is an exchange that abstracts you from your surroundings and roles. It allows you to freely imagine and play out interactions that may be impossible or otherwise unimaginable.

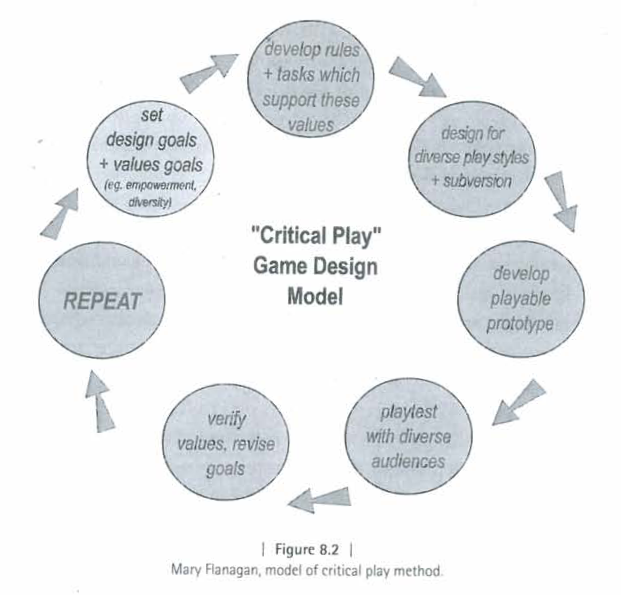

Critical Play

I focused on Mary Flanagan's ‘Critical Play’, thinking about how to interweave dense concepts into the values and structure of play. The aim is to make complex topics understandable through the medium of play: building experiential dynamics of interaction to explain things that are lived and experienced, rather than abstracting them and weighing them down with words.

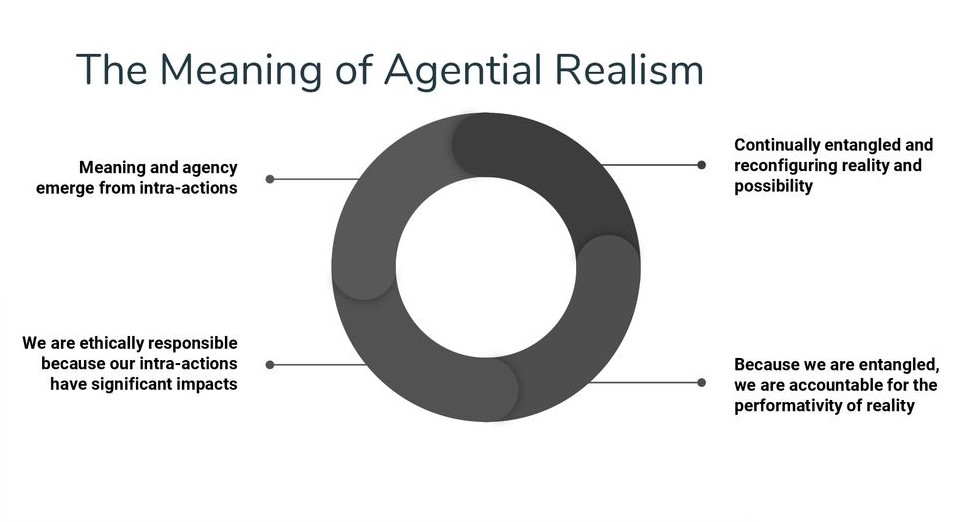

Karen Barad

One of the dense concepts I explore through the values and play of my apparatus is Karen Barad's thinking on “Agential Realism”. An element that I bring to the forefront of this experience is the idea of “Intra-action”: the person is an inevitable part of any viewed ecosystem and narrative. I use AR to bring the person into this parallel reality, embodying them in the space and dynamics that produce that universe. Their presence and interaction are a foundational element of the phenomena that form during the piece. I also focused on her recalibration of the boundaries of materiality: how she imagines points hazing, matter blending into fields, and the filling of the structured voids that had once left the universe lifeless and static. The piece takes quite a void-like, intangible visual space and makes it into a deep and affective experience, using ambisonic sound to blend and merge the isolated points. It shows the empty, divided universe we see, whilst letting you feel the deeply interwoven universe we hear.

Squeezing these heavy concepts into a game's dynamics may seem a tight fit, or may seem to abstract them too much, but I aimed to build a space that allowed you to explore how working through these structures might feel, letting you simulate a new way of interacting with the world around you through a temporal reality layered on top.

The Experience

I was thinking about Roger Caillois and the duality of Ludus (structure) and Paidia (playfulness). Roughly speaking, it forms a spectrum between Ludus, a dynamic formed from rules that encompass us, and Paidia, a dynamic with few rules but lots of unstructured imagination. Think of it as chess to tig, or bridge to hide and seek. I decided to side with Paidia, tying back into Karen Barad's loosely structured approach to forming phenomena, to produce a space that's not heavily entrenched in narratives and roles. It's a space where people can surprise me with their interactions and outcomes, forming emergent experiences through a build-up of simple but effective dynamics.

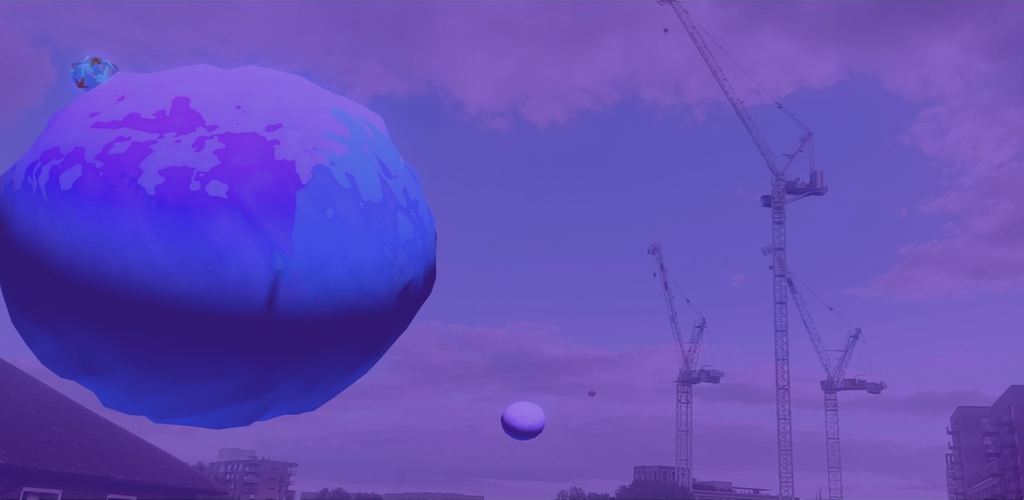

To build this space and fulfill many of the aforementioned desires, I decided to contextualize it in a dubious yet familiar setting, using its uncertainty to allow a deeper reading into the piece and to leave room for you to build your own individual narratives. I decided on a cosmic landscape of planets in the void, slightly abstracted, allowing potential imaginaries of atomic and cellular biota. The vacuous space is a reminder of the void that has been filled, a sparse landscape that can be freely explored through AR. It allowed me to build a diversity of modular and experimental phenomena to experience in space. This allows their simple dynamics to build into a complex milieu intertwined through the user's past and present, and into their future.

Technical

Audio Dynamic

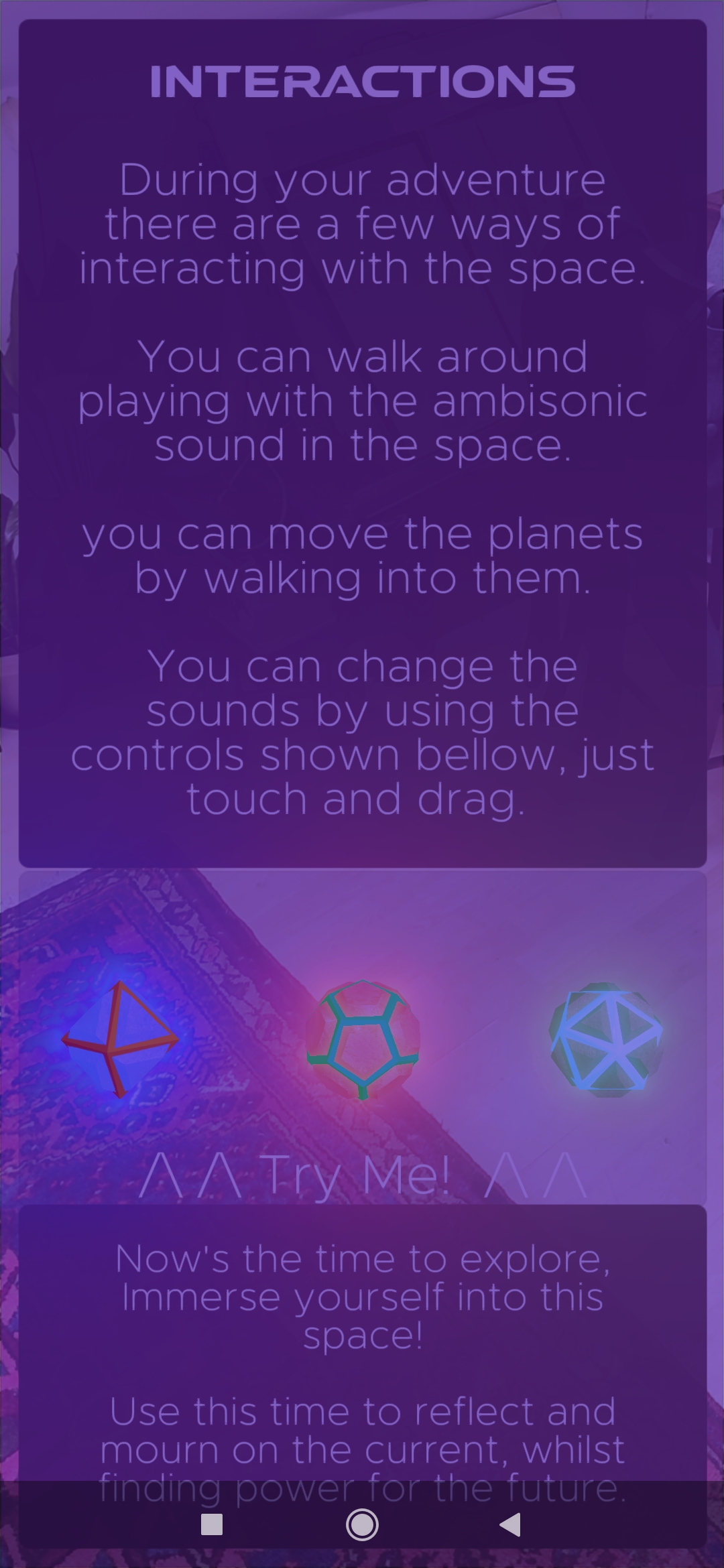

With the audio dynamic, I wanted to break down the traditions and hierarchies of music-making. Music can be an impregnable encryption of knowledge and understanding, whether that means deciphering sheet music or editing music in software. I wanted to break it out of these structures and decipher it through gamified narratives, using the known explorative tendencies of these spaces and their understood modes of interaction (e.g. touch and drag, search and explore). I looked to Don Norman, thinking through the affordances of AR spaces and my chosen void context.

Ambisonics

A quintessential element of the space and experience is the combination of AR and ambisonic sound. Using the Resonance plugin for Unity, this transformed basic movement in space, and a desire to explore, into a complex instrument in and of itself. It transports you through simulated fields of noise and their natural reverberations and reflections, blending and layering the noise sources around you, and creating a physically impossible, temporal and infinite space for combining sound in three dimensions.

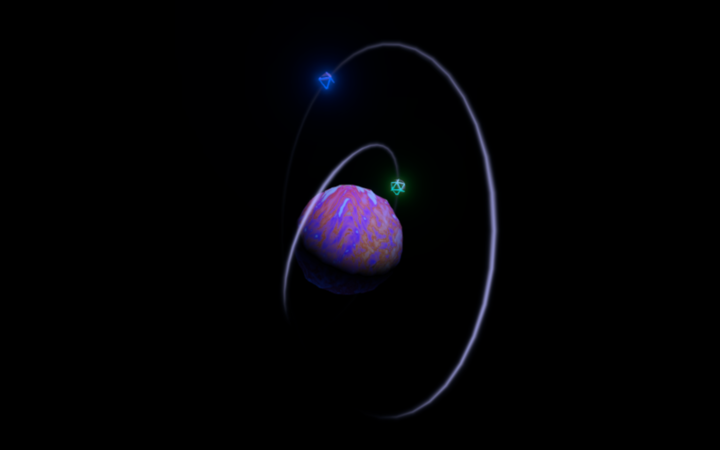

The Planets

The main visual instruments I designed are the planets, a procedural base that forms audio sources from worlds and orbiting moons. The moons are audio controllers used to modulate and play with the sound, with their orbiting distance as the modulating value. This ensemble is proceduralized by first randomizing the selection of sounds, playing one of a multitude of samples. It then activates or deactivates different moons and effects to produce an individual corpus of sound through modulation.
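The procedural steps described above can be sketched roughly as follows. This is a minimal, language-neutral illustration in Python rather than the Unity scripts used in the piece; the sample names, effect names, orbit radii, and the 50% moon activation chance are all assumptions made for the example.

```python
import random
from dataclasses import dataclass

# Hypothetical sample and effect names, for illustration only.
SAMPLES = ["voice_01", "voice_02", "organ_01", "bells_01"]
EFFECTS = ["low_pass", "pitch", "echo", "distortion"]


@dataclass
class Moon:
    effect: str        # the audio effect this moon controls
    min_radius: float  # closest allowed orbit distance
    max_radius: float  # furthest allowed orbit distance
    radius: float      # current orbit distance, changed by dragging

    def modulation(self) -> float:
        """Map the orbit distance to a 0..1 modulation value."""
        t = (self.radius - self.min_radius) / (self.max_radius - self.min_radius)
        return max(0.0, min(1.0, t))


@dataclass
class Planet:
    sample: str  # the audio sample this planet plays
    moons: list  # the moons (effects) that were activated


def make_planet(rng: random.Random, moon_chance: float = 0.5) -> Planet:
    """Pick a random sample, then switch each moon/effect on or off."""
    sample = rng.choice(SAMPLES)
    moons = []
    for effect in EFFECTS:
        if rng.random() < moon_chance:
            moons.append(Moon(effect, 1.0, 3.0, rng.uniform(1.0, 3.0)))
    return Planet(sample, moons)


if __name__ == "__main__":
    planet = make_planet(random.Random())
    print(planet.sample, [(m.effect, round(m.modulation(), 2)) for m in planet.moons])
```

Passing in a seeded `random.Random` makes a given planet reproducible, which is one way to keep a generated world stable between sessions.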

Another proceduralization of these is through the shader. Using a shader graph, I created a shader that takes a noise texture and creates a terrain effect, transforming these spheres into detailed forms. I used the noise to displace the vertices along their normals, as well as to lerp between colors, some of the colors selected and some derived from its position. This took quite a simple, light mesh and transformed it into a thousand worlds, a valuable optimization when it comes to running on handheld devices and reducing the app size.
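As a rough illustration of the displacement and color blending described above, here is the per-vertex logic written out in plain Python. It is only a sketch: the actual piece uses a shader graph, and `pseudo_noise` is a hypothetical stand-in for the noise texture lookup, as are the colors and the displacement strength.

```python
import math


def pseudo_noise(x, y, z):
    """Smooth stand-in for a noise lookup; returns a value in [0, 1]."""
    v = math.sin(x * 3.1 + 1.7) * math.sin(y * 2.3 + 0.4) * math.sin(z * 4.7 + 2.9)
    return 0.5 + 0.5 * v


def lerp(a, b, t):
    """Linear interpolation between two colors (or any equal-length tuples)."""
    return tuple(ai + (bi - ai) * t for ai, bi in zip(a, b))


def shade(vertex, normal, low_color, high_color, strength=0.2):
    """Push a vertex out along its normal by the noise value and pick a
    color by blending between a 'low' and a 'high' color with the same value."""
    n = pseudo_noise(*vertex)
    position = tuple(v + nrm * n * strength for v, nrm in zip(vertex, normal))
    return position, lerp(low_color, high_color, n)


if __name__ == "__main__":
    # On a unit sphere centered at the origin, the normal equals the vertex position.
    vertex = (0.0, 1.0, 0.0)
    position, color = shade(vertex, vertex, (0.1, 0.2, 0.6), (0.9, 0.8, 0.3))
    print(position, color)
```

Because the same noise value drives both height and color, raised areas and their coloring stay aligned without storing any extra texture data per planet.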

Sound Reactive Particles

I created a range of sound-reactive particle systems to help build a conducive environment. Each is a standard Unity particle system modified and controlled by scripts that emit particles when it's quiet in some systems and when it's loud in others, as well as changing the attraction position and strength according to volume. Each builds different dynamics in the space, making it feel interwoven and breathing with the sound. They take on the forms of galactic clouds, shooting asteroids, and solar winds, each pulling the space into a slightly different dimension and allowing me to transform the context subtly over time.
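The volume-driven behaviour described above could be sketched along these lines. This is an assumption-heavy illustration in Python, not the Unity scripts themselves: the function names, the RMS volume measure, the maximum rate, and the attraction constants are all hypothetical.

```python
import math


def rms(samples):
    """Root-mean-square volume of a block of audio samples in the range -1..1."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def emission_rate(volume, max_rate=50.0, emit_when="loud"):
    """Particles per second for a system that emits when it is loud or when it is quiet."""
    level = max(0.0, min(1.0, volume))
    if emit_when == "quiet":
        level = 1.0 - level  # invert: silence produces the most particles
    return max_rate * level


def attraction_strength(volume, base=0.5, gain=2.0):
    """Pull particles harder towards the attraction point as the volume rises."""
    return base + gain * max(0.0, min(1.0, volume))


if __name__ == "__main__":
    block = [0.5, -0.5, 0.5, -0.5]  # a toy audio block
    volume = rms(block)
    print(emission_rate(volume), emission_rate(volume, emit_when="quiet"))
    print(attraction_strength(volume))
```

Giving each system its own `emit_when` mode and constants is one simple way to get clouds, asteroids, and winds that respond differently to the same sound.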

Music / Singing

To record the audio samples for the piece I collaborated with my friend Izzy Nahkla, an amazing sound artist I have worked with for a few years. We recorded a session of improvised music in a church using AKG 414B stereo microphones, which gave the sound a beautiful natural reverb and a really high quality. The recordings from the session were chopped up into smaller segments to be spread across time and space; the AR piece is a way to keep this improvisation alive and allow people to re-enter this temporal and fragile space. After playtesting the piece with the full audio, the quality really showed, building quite deep and intricate spaces, so much so that I removed all of the original digital music elements (e.g. synths, clicks, etc.).

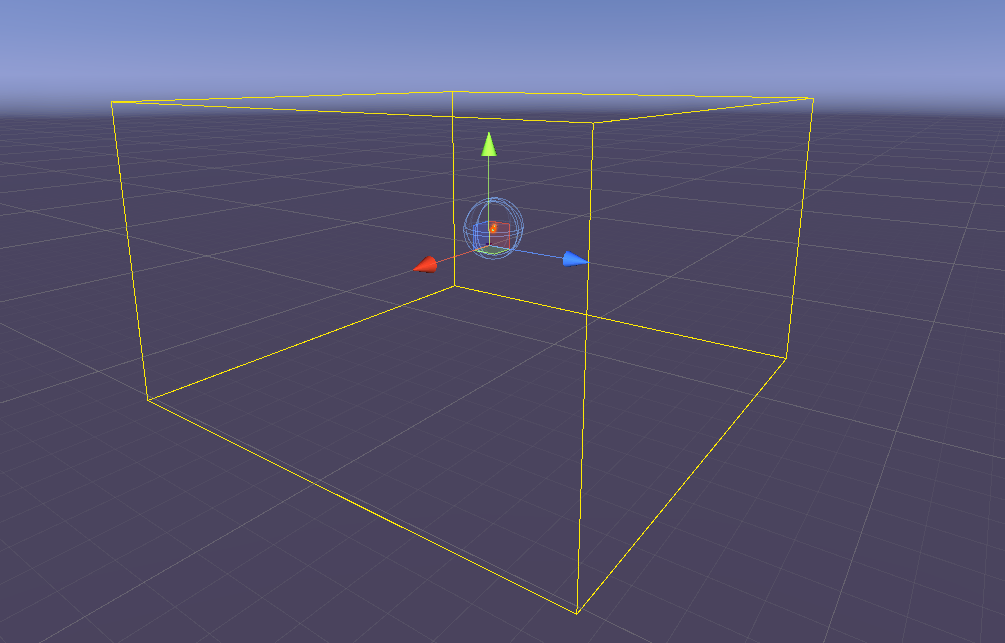

During our time recording this piece we were talking about spaces for sound, prompted by the church with its natural reverberations. Izzy talked about a marble mausoleum that she had visited in Italy, and this became an underlying influence for the reverberation space. I used the Resonance plugin to create a gigantic virtual marble mausoleum, 25 m cubed, to reflect the sound around.

Play Testing

During the development of the experience there has been a huge amount of playtesting and modification, but here I am going to address a few major changes that were made in reaction to these sessions.

Orbits

Originally the moons orbited the planets by themselves, just floating through space without any line to guide them. During playtesting I found that people were confused about where these moons were and how they related to other objects. It just wasn't a clean interaction.

In response, I started to figure out ways of resolving this issue, deciding that subtle visual cues could bring out the system in place. I used a combination of line renderers to form abstract guides. One appears when an object is touched and shows the path from the planet to the moon, displaying the direction the object will move. The other is a constant line displaying the orbit the planet follows, giving definition to the space and guiding you to where the controls are, leading you to points of interaction. Both use a minimal sprite to describe enough structure to guide the interaction without over-prescribing it, as well as creating an extra aesthetic layer.
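To give a sense of what the two guides involve, the sketch below generates the point lists that a line renderer would draw. It is a hypothetical Python illustration, assuming a flat circular orbit in the horizontal plane and a straight planet-to-moon guide; the function names and segment count are not taken from the project.

```python
import math


def orbit_points(center, radius, segments=64):
    """Points around a flat circular orbit, for a closed (looping) line."""
    cx, cy, cz = center
    points = []
    for i in range(segments):
        angle = 2.0 * math.pi * i / segments
        points.append((cx + radius * math.cos(angle), cy, cz + radius * math.sin(angle)))
    return points


def guide_line(planet_pos, moon_pos):
    """Two-point line shown while a moon is touched, from planet to moon."""
    return [planet_pos, moon_pos]


if __name__ == "__main__":
    ring = orbit_points((0.0, 0.0, 0.0), 2.0)
    print(len(ring), ring[0])
    print(guide_line((0.0, 0.0, 0.0), ring[0]))
```

More segments give a smoother ring at the cost of more vertices, which matters on handheld devices.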

Introduction

A lot of people found it hard to understand the dynamics of the space and interactions when they first used it. To solve this I created an introduction explaining the space, evolving it through natural interactions with the environment. I used your movement to move you through the intro panels, finding that using this existing interaction deepens the immersion in the space. I also emphasized the touchable objects by placing interactive versions of them in the intro, and explained the more subtle interactions briefly in the text. This helped a lot, but I still find that a lot of people have issues with blocks of text. I was thinking of helping with this by adding pictograms to describe the interactions through images, but I haven't had time so far.

Cohesion Of Realities

Earlier versions of the game felt very layered and non-cohesive, making the space less immersive and real. To help with this I added a purple color filter to blend the image layers into the same color space and reality, passing a post-processing layer over both with bloom and a few extras to really tie the scene together. Bloom's blending of lights also creates trails for the users to follow to the objects and interactions.

The Resonance Audio plugin by Google really added an extra level of cohesion, allowing you to navigate and understand an unfamiliar space with familiar senses.

Future Development

I feel that this has been a really rewarding and promising project. During my time spent on it, I have managed to stick my pinky toe into what music-making could be through playful dynamics and immersive tech. Opening this huge chasm is really exciting and so far I have had many future ideas on how we can change and augment this relationship into new forms.

One thing I am interested in for the future development of this project, and others in its wake, is to tie in more of a narrative. It would bring clearer progress to the experience, and a desire for the user to explore and delve further. It would help to clarify the deeper-running concepts of the piece, bringing them out of the structure and framework and into the limelight.

I am also really excited at the prospect of multiplayer AR, allowing multiple users to share and experience one space, their interactions affecting other people's. This would take what can be quite an isolated and lonely space and open it up to communal creation and experience.

References

Conceptual

Critical Play: Radical Game Design, by Mary Flanagan

Meeting the Universe Halfway, by Karen Barad

On Touching: The Alterity Within, by Karen Barad

Speculative Realism and Science Fiction, by Brian Willems

Man, Play and Games, by Roger Caillois

The Design of Everyday Things, by Don A. Norman

The Gameful World: Approaches, Issues, Applications, by Sebastian Deterding

Emotional Design: Why We Love (or Hate) Everyday Things, by Donald A. Norman

Tentacular Thinking: Anthropocene, Capitalocene, Chthulucene, by Donna Haraway

Bodies in Technology, by Don Ihde

Technical

Unity3d AR Foundation Tutorials For Beginners by Dilmer Valecillos

Unity Documentation

Other Influence

Panoramical by Fernando Ramallo and David Kanaga

The Gardens Between, by The Voxel Agents

Shared AR cross-platform multiplayer online with physics and collisions - https://www.youtube.com/watch?v=pWssw8Rw7fQ