Blocks Sound

An interactive installation that lets you make your own music using blocks.

produced by: Christina Karpodini

Introduction

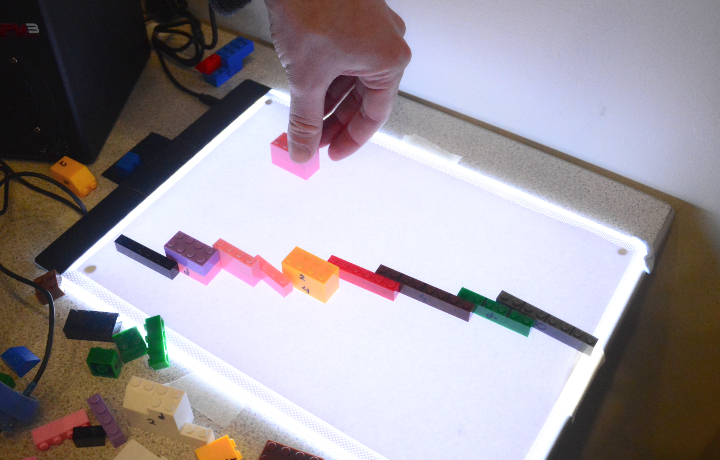

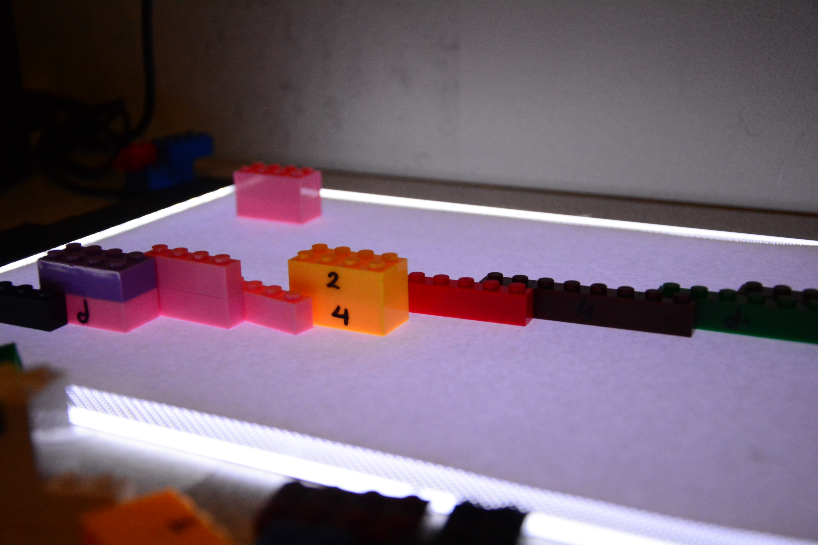

Blocks Sound is an interactive installation that invites participants to compose their own music by positioning blocks on a table. The aim of the installation is to introduce participants to musical concepts such as the position of a pitch and the duration of a sound.

Concept and background research

From my experience as a music educator, I have realized how difficult it is for young people to understand different notions of sound. For some people, it is easy to understand the relation between high and low notes, but when it comes to relating them to music notation they struggle to understand that the higher a note sits on the stave, the higher it sounds.

Another project inspired me as well. Georg Hajdu's transformation of Stockhausen’s Elektronische Studie II into a real-time notation version made me consider the concept of real-time notation with physical notes, such as blocks. I was inspired by the thought of developing Georg Hajdu's project further, just as he had developed Stockhausen's original idea: from graphic score, to real-time graphic score, to interactive physical real-time score.

Drawing on my professional experience and on the artistic inspiration of the aforementioned work, I decided to make this interactive installation, which creates music with the use of computer vision.

Technical

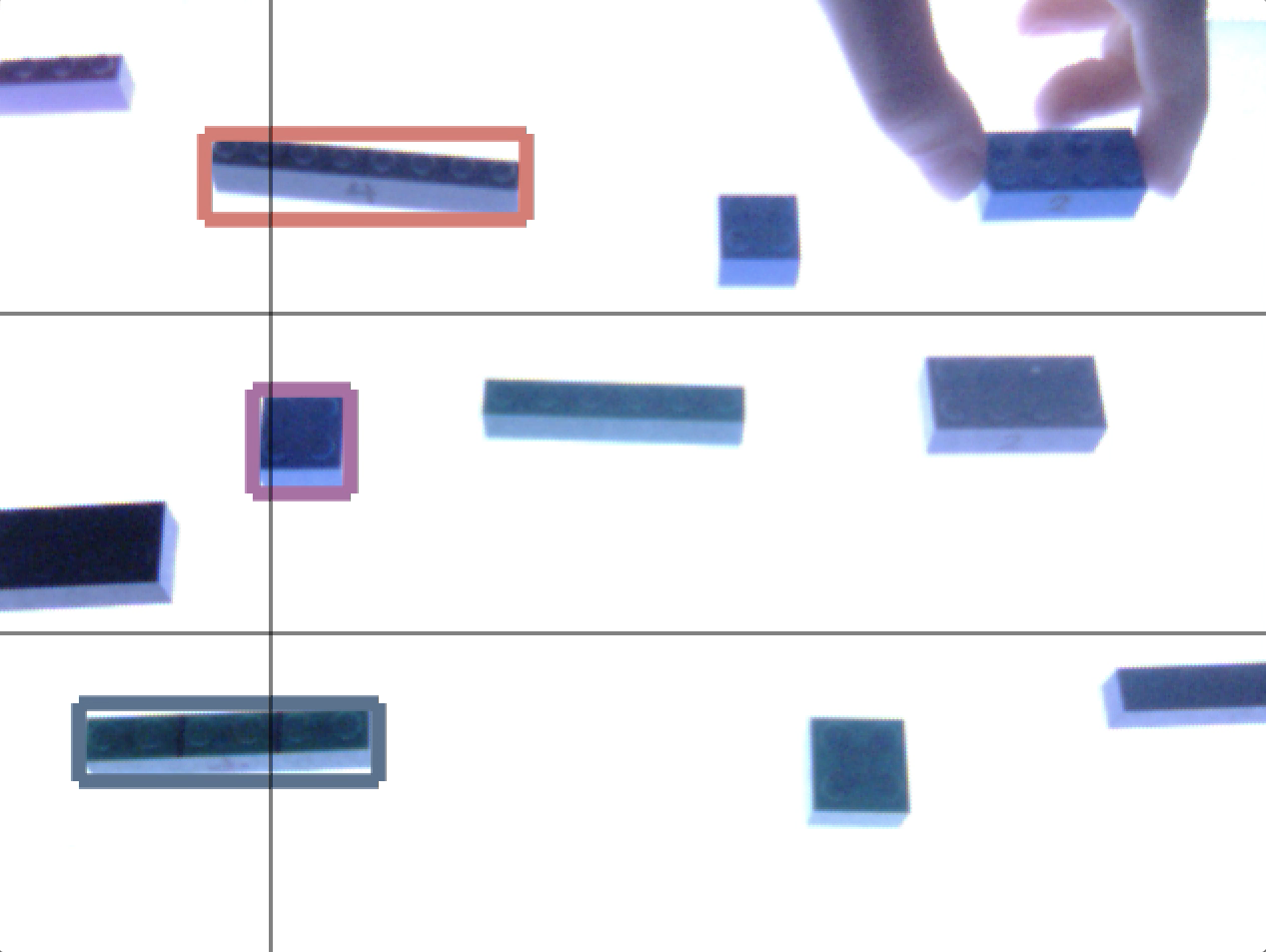

This installation is based on computer vision. Using a PS3 camera and a background difference algorithm, the computer is able to recognize the blocks that are placed on a lit board. More specifically, according to this algorithm, the computer detects the presence of a block because its color, in greyscale, is different from the color in the previous frame. To work with computer vision I used the ofxOpenCv addon, and I studied ofxCvContourFinder.cpp in order to learn how to use the coordinates of the contours of my blocks, as I explain in the following paragraphs.
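The differencing step described above can be sketched in plain C++, without the camera or ofxOpenCv. This is only an illustration under assumptions: the function name `differenceMask`, the flat greyscale buffers, and the `threshold` parameter are hypothetical and are not taken from the installation's code. The reference frame could be a stored background or the previous frame.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstdlib>
#include <vector>

// Hypothetical helper (not from the installation's source): compares a
// greyscale frame against a reference frame, pixel by pixel, and marks
// every pixel whose brightness changed by more than `threshold`.
std::vector<std::uint8_t> differenceMask(const std::vector<std::uint8_t>& reference,
                                         const std::vector<std::uint8_t>& current,
                                         int threshold) {
    // Both frames must have the same number of pixels.
    assert(reference.size() == current.size());

    std::vector<std::uint8_t> mask(current.size(), 0);
    for (std::size_t i = 0; i < current.size(); ++i) {
        // Absolute brightness difference between the two frames.
        const int diff = std::abs(static_cast<int>(current[i]) -
                                  static_cast<int>(reference[i]));
        // White (255) where something changed, black (0) elsewhere.
        mask[i] = diff > threshold ? 255 : 0;
    }
    return mask;
}
```

A contour finder would then run on the resulting mask, so that each connected white region becomes one candidate block.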

My own contribution to this code began when I wanted to trigger the computer vision feedback in relation to time. First, I created a vertical line that travels across the width of the image in a loop. Then I used “if” statements to activate the feedback when this line crosses a block. To do that, I took the coordinates of the top-left corner of each block's contour and calculated the coordinates of the right corner (the end of the block), so that the feedback stays on for as long as the line is crossing the block, which gives the sense of duration.
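The scanning-line logic can be illustrated with a minimal sketch. The struct `BlockBox` and the functions `advanceScanLine` and `lineCrossesBlock` are hypothetical names introduced here; the sketch assumes each block is represented by the bounding box of its contour, with `x` and `y` at the top-left corner.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical bounding box of one detected block, in pixels.
// (x, y) is the top-left corner, as in the description above.
struct BlockBox {
    float x;
    float y;
    float width;
    float height;
};

// Moves the vertical line to the right and wraps it back to the left
// edge once it has travelled the full width of the image.
float advanceScanLine(float lineX, float speed, float imageWidth) {
    assert(imageWidth > 0.0f && speed >= 0.0f);
    return std::fmod(lineX + speed, imageWidth);
}

// True while the line is anywhere between the left edge of the block
// and its right edge (left edge plus width). Keeping the sound on for
// as long as this holds is what produces the sense of duration.
bool lineCrossesBlock(float lineX, const BlockBox& box) {
    const float rightEdge = box.x + box.width;
    return lineX >= box.x && lineX <= rightEdge;
}
```

With this approach a wider block keeps the condition true for more frames, so it sounds for longer.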

The feedback of the installation is audio made in Max/MSP. To connect openFrameworks and Max/MSP I used the OSC (Open Sound Control) protocol, which in openFrameworks requires the ofxOsc addon. I declared my sender and named my OSC messages, which carry the position of the block on the y-axis at the moment it meets the line, mapped to the range of MIDI notes 24 to 88, that is, the notes C1 to E6.
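The mapping from vertical position to pitch can be written as a small linear interpolation. The sketch below is an assumption about how such a mapping could look, not the installation's actual code: the function name `yToMidiNote` is hypothetical, and it assumes that y = 0 is the top of the image and corresponds to the highest note.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Hypothetical helper: maps a vertical pixel position to a MIDI note.
// Assumption: y = 0 (top of the image) gives the highest note, 88 (E6),
// and y = imageHeight (bottom) gives the lowest note, 24 (C1).
int yToMidiNote(float y, float imageHeight) {
    assert(imageHeight > 0.0f);
    const float lowNote = 24.0f;
    const float highNote = 88.0f;

    // Normalise y to the range 0..1, clamping positions outside the image.
    const float t = std::max(0.0f, std::min(y / imageHeight, 1.0f));

    // Linear interpolation from the high note (top) to the low note (bottom),
    // rounded to the nearest whole MIDI note.
    return static_cast<int>(std::lround(highNote - t * (highNote - lowNote)));
}
```

In openFrameworks, the resulting integer could then be attached to an `ofxOscMessage` with `addIntArg` and sent through an `ofxOscSender`. The address, host, and port would have to match whatever the Max/MSP patch listens on.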

At this point the installation could produce only one sound at a time (the highest), because the pixels that the line meets first are those with the smallest y. I wanted to give more voices to my installation in order to make it more interesting musically and more immersive for the participants. Therefore, with another "if" statement, I split the height of the image into three bands. Thus, for example, if a block's height (y coordinate) falls inside the first band, it plays a sound from the first synth, which covers only one third of the range of notes.

In this way, I can have three different blocks playing at the same time.
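The band selection can be sketched as follows. The function name `bandForY` and the `bandCount` parameter are hypothetical. The sketch assumes the bands are of equal height and are numbered from the top of the image, so band 0 would drive the first synth.

```cpp
#include <algorithm>
#include <cassert>

// Hypothetical helper: returns which horizontal band a block falls into,
// counting from the top of the image (0, 1, 2 for three bands).
// Each band would be routed to its own synth in the audio patch.
int bandForY(float y, float imageHeight, int bandCount = 3) {
    assert(imageHeight > 0.0f && bandCount > 0);

    // Height of one band in pixels.
    const float bandHeight = imageHeight / static_cast<float>(bandCount);

    // Integer band index, clamped so that positions on or past the
    // bottom edge still land in the last band.
    const int band = static_cast<int>(y / bandHeight);
    return std::max(0, std::min(band, bandCount - 1));
}
```

Because each band is checked independently, one block per band can sound at the same moment. Raising `bandCount` is one way the number of simultaneous voices could be increased.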

Future development

Working on this installation, and especially exhibiting it, made me understand how much potential it has, and I would really like to develop it further. There are several things that I would like to add in the near future. Firstly, I would like to make the installation more portable and accessible by running my code on a Raspberry Pi, so that I won't need my computer to exhibit it. Furthermore, I would like to add more bands of note ranges, making it even more polyphonic. Finally, I would like to add another aspect to the interaction: I want to give each block color a different sound. Overall, I would like to reconsider my sound design. Once the installation can play more than three sounds at a time and responds to color, I want to hand the choice of sound to the participants by letting them choose from a variety of sounds that will include traditional classical instruments.

Additional Elements:

- projection of the blocks with visual animation around their contour.

References

"Computer Vision: Frame Differencing ⋆ Kasper Kamperman". 2019. Kasperkamperman.Com. https://www.kasperkamperman.com/blog/computer-vision/computervision-framedifferencing/.

"Ofbook - Image Processing And Computer Vision". 2019. Openframeworks.Cc. https://openframeworks.cc/ofBook/chapters/image_processing_computer_vision.html.

"Studie II – Georg Hajdu". 2019. Georghajdu.De. http://georghajdu.de/6-2/studie-ii/.