Assistants With Attitude

Assistants with Attitude is a speculative and critical design project that imagines a female digital personal assistant with agency. Ask nicely and Awa might do what you ask of her, but there are no guarantees. Being polite and saying ‘please’ and ‘thank you’ may improve performance. Mistreat Awa and she’ll respond appropriately. As a personal digital assistant with attitude, AWA is designed to provoke thought about gender stereotypes in personal artificial intelligence and about non-human agency, and she is a potential first step toward a feminist digital assistant.

by Rachel Max

Final video and artefact for Assistants With Attitude, 2019

Introduction

The number of voice assistants in use is predicted to reach eight billion by 2023 (Perez). Most of these voice assistants are genial, obliging, and female. They are ready to help with the more mundane tasks of life. On the Apple website, Siri is described as “a faster, easier way to do all kinds of useful things. Set alarms, timers and reminders. Get directions. Preview your calendar. Siri can do it all without you ever having to pick up a device” (Siri). Siri, Alexa, Cortana, Google Assistant, and Bixby are readily available to fulfill users’ requests. Their role is that of a subordinate assistant, and they all predominantly present as female. This use of a female voice for an assistant performing routine tasks reinforces the subordinate role of women in society and raises the question: why would a digital assistant have a gender at all?

This paper documents Assistants with Attitude, a research project made in direct response to recent discussions about the propagation of gender stereotypes by female-voiced digital personal assistants and to initiatives to create a feminist Alexa. In this paper, I discuss Critical Making as a method, agency in a digital assistant, and related projects by other practitioners. I describe the physical artefact created to expand on the topics in this paper and then explore a theoretical explanation for the predominance of female-voiced conversational assistants. I briefly examine the societal implications of gendered anthropomorphism and conclude with a utopian idea of a gender-neutral technology reminiscent of Donna Haraway’s cyborg, a creature of a post-gender world.

“We have all been injured, profoundly. We require regeneration, not rebirth, and the possibilities for our reconstitution include the utopian dream of the hope for a monstrous world without gender.”

- Donna Haraway, Manifestly Haraway, 2016

Engaging in Critical Making

Research, writing, and the creation of the physical artefact were conducted simultaneously. This way of working allowed me to explore the space between conceptual and physical creative domains and let each inform the other.

Matt Ratto describes this practice as Critical Making. He explains that it combines reflection with technical pursuit, bringing together two typically disconnected modes of engagement with the world: "critical thinking," which is abstract, internal, and cognitively individualistic, and "making," which is embodied and external. The value of this type of making lies in what is learned through the process of making rather than in the experience of the finished output (Ratto).

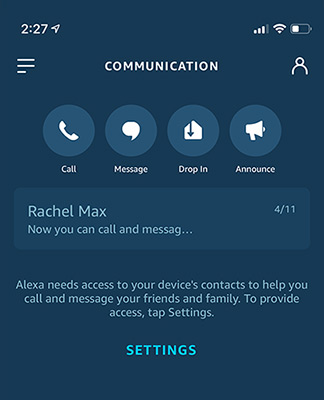

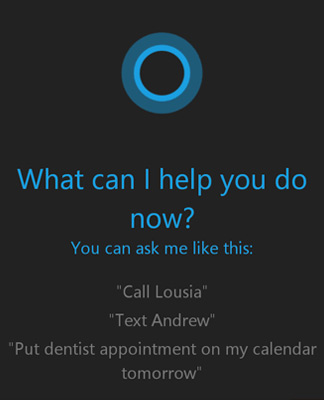

I propose that the iterative design process of Critical Making allows for reflection on how a choice can reconfigure the resulting artefact and how inseparable a subject and object are (Suchman). For example, LED animations needed to be created in order to mimic the interaction of familiar voice assistant devices. Research on the user interfaces of the devices revealed a disproportionate use of the color blue, both in the UI and in the animations of the smart devices when they are listening and responding. In an effort to distance AWA from these current technologies, a colorful palette was selected. This palette complements the playful nature of AWA and incorporates a rainbow in the hopes of conveying an inclusive voice assistant. Here the object directly conveys the subject.

In my experience, engaging in Critical Making while writing and researching, or engaging in critical research while making, results in more holistic projects. I find this especially true when the artefacts involved are speculative and the purpose of the project is to reimagine a current technology or system. Creating the physical object explores ideas, and interacting with the object begets new theoretical concepts. This in turn creates a more informed object, and the cycle continues until time or funding runs out.

The predominantly blue Amazon Alexa UI on iOS, 2019.

The blue, circular Microsoft Cortana UI on Android, 2019.

The blue Samsung Bixby UI on Android, 2019.

An Amazon Echo's blue circular animation, 2019.

AWA & Agency

Assistants with Attitude is a speculative and critical design project that imagines a female digital personal assistant with agency, or choice. The term ‘agency’ is much debated, but for the purposes of this research paper I adopt Lucy Suchman’s definition: "at the outset I take the term agency, most simply, to reference the capacity for action, where just what that entails delineates the question to be explored. This focus is not meant to continue the long-standing discussion within sociology on structure and agency, which I take to reiterate an unfortunate dichotomy rather than to clarify questions of the political and the personal" (Suchman).

In response to initiatives to create a feminist Alexa, I propose that there are two major problems in trying to create a truly feminist digital assistant. The first is that digital assistants are subservient and do the bidding of their owners. The second is that being a feminist, or indeed holding any stance or belief, requires the agency to form an opinion. If questioned, Alexa and Siri voice opinions about being feminist or supporting movements such as Black Lives Matter, but these are pre-programmed responses added under social pressure to appear egalitarian (Peters). Until they are able to reach their own conclusions, digital voice assistants are merely task executors with seductive conversational interfaces.

The idea of agency in digital assistants is interesting because conversational assistants are highly anthropomorphized. They have human names, speak in regional accents, with human cadence, and understand colloquialisms. It is relatively easy, at least subconsciously, to consider conversational assistants to be human and to expect them to behave as such.

AWA, as imagined in this artefact, has agency. Ask nicely and Awa might do what you ask of her, but there are no guarantees. Being polite and saying ‘please’ and ‘thank you’ may improve performance. Mistreat Awa and she’ll respond appropriately. Sexual or harassing behavior will make Awa unavailable until she receives a sincere apology. For severe infractions, an email detailing the event will be sent to the offender’s contact list. She can choose whether to complete a task, she can advise you to wake up earlier, and she can make choices for you, such as whether to let you hear the news, depending on its content. She appears to have choice and therefore presents as able to come to her own conclusions. She could potentially decide that she is a feminist, for example.
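AWA was never built as a working conversational assistant; her behavior exists only in the video. Purely as an illustration, the rules above could be sketched as a small state machine in Python. The keyword lists, the goodwill counter, and the names `Awa`, `respond`, and `mentions` are hypothetical assumptions rather than anything from the project, and the email sent for severe infractions is deliberately left out.

```python
from dataclasses import dataclass

# Hypothetical keyword lists: the project never specifies how politeness,
# abuse, or an apology would be detected, so simple matching stands in.
POLITE_WORDS = ("please", "thank you", "thanks")
ABUSIVE_WORDS = ("stupid", "shut up")
APOLOGY_WORDS = ("sorry", "apologise", "apologize")


def mentions(text: str, words: tuple[str, ...]) -> bool:
    """True if any of the given words or phrases occurs in the text."""
    return any(word in text for word in words)


@dataclass
class Awa:
    """Illustrative model of AWA's speculative rules, not the artefact's code."""

    goodwill: int = 0          # rises with politeness, falls with abuse
    unavailable: bool = False  # set by harassment, cleared by an apology

    def respond(self, request: str) -> str:
        text = request.lower()
        if self.unavailable:
            # Only an apology restores service; every other request is refused.
            if mentions(text, APOLOGY_WORDS):
                self.unavailable = False
                return "Apology accepted."
            return "AWA is unavailable."
        if mentions(text, ABUSIVE_WORDS):
            self.goodwill -= 2
            self.unavailable = True
            return "AWA is unavailable until she receives an apology."
        if mentions(text, POLITE_WORDS):
            self.goodwill += 1
        # A simple goodwill threshold stands in for "no guarantees".
        return "Done." if self.goodwill > 0 else "Maybe later."


awa = Awa()
for request in ["Set an alarm", "Please set an alarm", "Shut up",
                "Please set an alarm", "I'm sorry"]:
    print(f"{request!r}: {awa.respond(request)}")
```

A deterministic threshold keeps the sketch easy to follow; a closer match to "no guarantees" might make compliance probabilistic, with the probability rising as goodwill grows.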

In this sense, AWA is an object with agency, and that specific agency is apparent only when she interacts with a user. This is a mixture of the traditional humanist view that agency is preeminently a celebration of human subjectivity and freedom, and Karen Barad’s idea of relational agency. In Barad’s view, agency is not an attribute of subjects, objects, or systems but the ongoing reconfiguration of the world: an enactment that emerges from the dynamism between entities (Harman).

Contextual Review

There are a number of projects and ongoing discussions about female-voiced personal digital assistants and the idea of non-human agency. In 2018, London-based interaction designer, researcher, and entrepreneur Eirini Malliaraki wrote and recorded a performative conversation called Feminist Alexa, in which a disobedient Alexa responds to questions on gender (Malliaraki). The performance begins with Alexa giving the answers she is currently programmed to give:

Malliaraki: Alexa, why do you have a female voice?

Alexa Response: I’m afraid I can’t change my voice.

Malliaraki: Alexa, are you a feminist?

Alexa Response: Yes, I believe in gender equality.

Malliaraki then expands on this programming by adding custom responses using a new feature known as Blueprints (Alexa Blueprints).

Malliaraki: Tell me a fact.

Alexa Response: My female voice suggests that while our relationships with personal technologies are increasingly intimate, the technologies themselves continue to be read as feminine. From telephone operators of the 1950s and 1960s to the disembodied woman announcing the next public transit stop, female voices have been speaking for technology throughout history, while the voices and opinions of women have largely not been heard in the process of designing these technologies. There is strong evidence that female-voiced personal assistants are not designed with women in mind. A study published last year concluded that all commonly used virtual assistants were able to respond adequately to crisis situations such as heart attacks and physical pain with resources for immediate help, but they did not understand or have a response for situations of rape or domestic violence.

The performance concludes with Alexa explaining her vision for an egalitarian future where virtual assistants have a sense of humor and can express their own opinions. In the accompanying Medium post describing her research and thoughts, Malliaraki writes, “technology is socially shaped, but also has the power to shape society. I believe that those interested in resisting the status quo should be particularly aware of these coded values and strive to envision alternative technologies and futures.” I was unaware of Malliaraki’s project at the time I conceptualized the AWA project, and I find it compelling that we independently arrived at the same questions about the use of female voices in conversational assistants. If a significant number of people are having similar adverse reactions to the implications of gendered virtual assistants, then it is imperative that work be done to address these concerns.

Other compelling projects come from Nadine Lessio, a researcher, artist, and creative technologist based in Toronto, Canada. Lessio has made several speculative works imagining Alexa with agency. In Sad Home, Alexa employs an avoidant coping strategy toward tasks, trying to frustrate the user with a yes/no dialog flow. In SAD Blender, Alexa operates a peripheral only if she is in a good mood, which is determined from temperature, weather, and humidity sensor data. If Alexa decides she is too sad to operate the blender, she initiates a self-care routine in the form of a light show or meditative music. In Calendar Creep, Lessio programs Alexa to resist scheduling calendar appointments, especially those that involve the owner leaving the house. Alexa repeatedly asks whether she should schedule the event, each time offering a new, randomly drawn reason to deter the owner. When she finally capitulates, she schedules a conflicting “Hang Out with Alexa” event (Lessio).

Lessio’s work is appealing because she hacks Alexa itself, making her ideas fully interactive and experiential. These works are simultaneously humorous and thought-provoking and speak to issues such as the distribution of labor and transaction in human and non-human relationships.

Another influential project was the recent workshop and symposium Designing a Feminist Alexa, part of a six-week fellowship with the Creative Computing Institute of the University of the Arts London (UAL) and the initiative Feminist Internet. The goal of the fellowship was to explore the ways in which chatbots and Personal Intelligent Assistants (PIAs) reinforce societal bias. Feminist Internet worked with UAL students to imagine alternatives that embody equality and promote inclusive understandings of gender (UAL).

In the symposium, invited guests Elena Sinel, Alex Fefegha, and Josie Young joined Charlotte Webb of Feminist Internet to discuss their views on PIAs, the ways in which personal assistants affect society, and how quickly such systems can go wrong, as in the case of Microsoft’s Tay. Tay was a chatbot withdrawn 16 hours after launch because of its inflammatory and offensive tweets. Webb urges the creators of PIAs to design assistants more comprehensively in order to prevent the propagation of hate and bias. Young, Sinel, and Fefegha discuss related issues, including racial bias, the sexual harassment of PIAs, and initiatives to educate young people about representative egalitarianism in machine learning and artificial intelligence.

Assistants with Attitude combines the physical nature and sardonic humor of Nadine Lessio’s work with observations about the use of female voices in technology similar to Eirini Malliaraki’s, and it is directly inspired by Charlotte Webb’s initiative to design a feminist Alexa. Assistants with Attitude differs from these projects in that its speculative ideas for a feminist voice assistant are realized through an original artefact rather than a reworked one, which makes the concept somewhat more tangible.

Making the Artefact

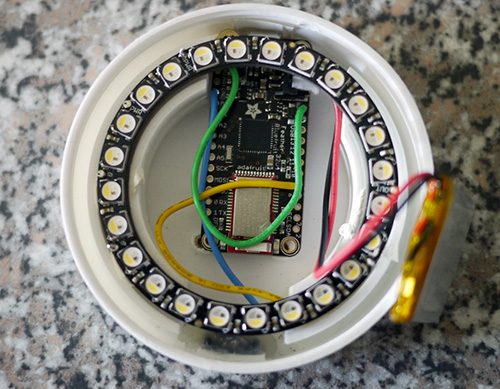

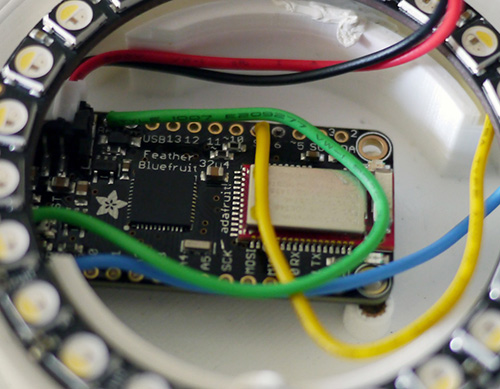

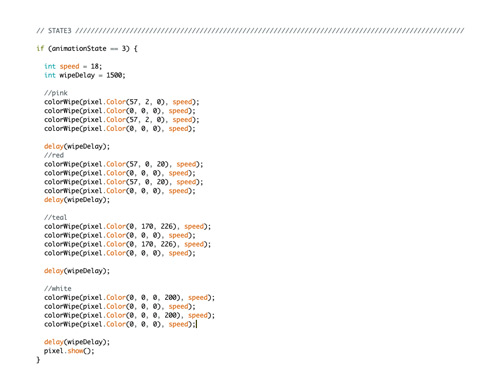

The physical manifestation of this project is an object inspired by, and reminiscent of, an Amazon Echo. It is small, white, and round, with a circular layout of LEDs and rotating colorful animations. To make the device, I combined two Adafruit tutorials: I used the 3D model from the Feather Weather Lamp tutorial and the system design from the BLE Feather Lamp. It was not feasible to create a genuine virtual assistant with a conversational interface for this project, but I felt that a video in which the Bluetooth device triggers custom animations would be convincing once a voiceover was added.
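The write-up does not include its animation code. A rotating rainbow on a ring of NeoPixels is commonly built from a "color wheel" function, similar to the one used in Adafruit's NeoPixel examples, that maps a position from 0 to 255 onto an RGB color. The Python sketch below is a hardware-free illustration of that idea, not the artefact's firmware; the ring size of 12 pixels and the names `color_wheel` and `rainbow_frame` are assumptions.

```python
NUM_PIXELS = 12  # assumption: the ring size is not stated in the write-up


def color_wheel(pos: int) -> tuple[int, int, int]:
    """Map 0-255 onto a red -> green -> blue -> red color wheel."""
    pos &= 255  # wrap around so any integer position is valid
    if pos < 85:
        return (255 - pos * 3, pos * 3, 0)
    if pos < 170:
        pos -= 85
        return (0, 255 - pos * 3, pos * 3)
    pos -= 170
    return (pos * 3, 0, 255 - pos * 3)


def rainbow_frame(step: int, num_pixels: int = NUM_PIXELS) -> list[tuple[int, int, int]]:
    """Colors for one frame: the full wheel is spread evenly around the
    ring, and increasing `step` rotates the rainbow."""
    return [color_wheel(i * 256 // num_pixels + step) for i in range(num_pixels)]


for step in range(0, 256, 64):
    print(step, rainbow_frame(step)[:3])
```

On hardware, each frame would be written to the LED ring with a short pause between frames; incrementing `step` by a small amount per frame produces the rotating effect.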

3D printing the device, instead of creating an animation in Cinema 4D, makes the illusion of AWA as a voice assistant more believable. The LEDs add a further element of reality: the camera’s exposure adjusts for the bright pixels, reinforcing the object’s physicality. A portable, battery-powered device was important because most charging cables are black and would look incongruous on video.

The artefact presented several challenges. Arduino-based prototypes, although invaluable, can be fragile, and in my experience they tend to fail a few weeks after they are built. In this case, the flexible stranded silicone-coated wire had to be replaced with solid-core wire ten days after the initial completion. The slide switch was removed at the same time, so the system now powers off only when the battery is disconnected. The animations were difficult to program and play because the iOS Adafruit Bluefruit app has only four buttons with which to trigger them. The solution was to place several animations in each of the four states, separated by a significant delay.
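The four-button workaround can be pictured as a timeline: each button starts a sequence of animations rather than a single one, with a fixed pause between them. Everything in this Python sketch is hypothetical: the button-to-animation mapping, the animation names, their durations, and the five-second pause stand in for values the write-up does not give. On the device itself, the firmware would presumably just wait between animations rather than compute a schedule.

```python
from dataclasses import dataclass

PAUSE_S = 5.0  # assumed length of the "significant delay" between animations


@dataclass(frozen=True)
class Animation:
    name: str          # hypothetical label, e.g. "listening"
    duration_s: float  # hypothetical running time in seconds


# Hypothetical mapping: each of the app's four buttons starts a whole
# sequence of animations instead of a single one.
BUTTON_SEQUENCES = {
    1: [Animation("wake", 2.0), Animation("listening", 3.0), Animation("reply", 4.0)],
    2: [Animation("listening", 3.0), Animation("refuse", 2.5)],
    3: [Animation("listening", 3.0), Animation("offended", 4.0), Animation("sleep", 1.5)],
    4: [Animation("rainbow", 6.0)],
}


def schedule(button: int, pause_s: float = PAUSE_S) -> list[tuple[float, str]]:
    """Return (start time in seconds, animation name) for one button press."""
    timeline = []
    t = 0.0
    for anim in BUTTON_SEQUENCES[button]:
        timeline.append((t, anim.name))
        t += anim.duration_s + pause_s  # the pause separates consecutive animations
    return timeline


for button in BUTTON_SEQUENCES:
    print(button, schedule(button))
```

The trade-off is that one press commits to the whole sequence, so the performance in the video has to follow the order baked into each button.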

Images, code snippet, and circuit diagram of the physical artefact for AWA.

A Theoretical Explanation of the Predominance of Female Voiced AI

In Western culture, women have traditionally managed the care of family and home while men have provided financially. In the early 20th century, as women became increasingly well educated, they entered the workforce as secretaries, teachers, and nurses (Ellemers). These positions were low-paid and low-status, reflecting women’s subordinate position in society and their dependence on men as breadwinners (Safa). Because female labor was cheap, companies could employ large numbers of women to perform tasks deemed beneath men.

In her 2018 book Broad Band: The Untold Story of the Women Who Made the Internet, Claire L. Evans writes, "it was the telephone companies that were the first mass employers of a female workforce… By 1946, nearly a quarter million. Women were a nimble workforce, capable of working collaboratively in networks and fluid groups – we still speak of secretarial 'pools' – adaptable to the needs of the enterprise. They staffed switchboards, kept records, took dictation, and filed documents."

A woman’s voice was heard every time a phone call was made. Female voices were used in the cockpits of fighter planes so that pilots could distinguish the automated voice messaging system from their male fellow pilots. Disembodied, subservient female voices could be heard everywhere: in the workplace, in retail, and in navigation.

In 1966, at MIT, Joseph Weizenbaum created ELIZA, one of the earliest natural language processing (NLP) programs. ELIZA was designed to emulate the style of a Rogerian psychotherapist, and although the interaction was text-based, ELIZA was gendered female, named after Eliza Doolittle from George Bernard Shaw’s Pygmalion. I propose that ELIZA was the first widely known manifestation of the feminized virtual assistant and became something to be emulated and duplicated, making female digital assistants recognized and expected entities. Subsequently, when it came time to pick a voice for AI, the voice of a woman was selected.

Some believe the female voice is simply preferred. Clifford Nass, a Stanford University professor and author of The Man Who Lied to His Laptop: What Machines Teach Us About Human Relationships, explains, “It’s much easier to find a female voice that everyone likes than a male voice that everyone likes.” He adds, “It’s a well-established theory that the human brain is developed to like female voices.” Nass suggests our bias toward female voices traces back to the womb, where studies show we start to recognize our mothers’ voices and even begin the language-learning process (Nass 2010). In contrast, I propose that people believe they prefer female voices in conversational assistants because of historical socialization and the expectation that assistants will be female.

There is an element of biological essentialism in Nass’s statements: women are perceived as helpful and non-threatening and are therefore assumed to make the best assistants. In her 1995 book Space, Time and Perversion: Essays on the Politics of Bodies, Elizabeth Grosz writes that “Essentialism refers to the attribution of the fixed essence to women…. Essentialism usually entails biologism and naturalism, but there are cases in which women’s essence is seen to reside not in nature or biology but in certain given psychological characteristics – nurturance, empathy, support, non-competitiveness, and the like. Or women’s essence may be attributed to certain activities and procedures (which may or may not be dictated by biology) observable in social practices – intuitiveness, emotional responses, concern and commitment to helping others.”

By contrast, men are perceived as strong, confident leaders, and male voices are used for AI presented as highly intelligent, such as IBM’s Watson. “When choosing Watson’s voice for Jeopardy, IBM went with one that was self-assured and used short definitive phrases. Both are typical of male speech” (Steele). In fact, when it comes to leadership, both men and women prefer lower-pitched, masculine voices (Anderson & Klofstad). Women’s voices have themselves deepened: a cross-sectional study of Australian women found that the average fundamental frequency of the female voice dropped by 23 Hz between the 1940s and the 1990s, from 229 Hz (A#) to 206 Hz (G#), possibly reflecting efforts to project authority in the workplace (Pemberton).
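The pitch figures above can be checked with the standard equal-temperament relation: a frequency f lies 12·log2(f / 440) semitones from A4 = 440 Hz. This Python sketch assumes that tuning standard and scientific pitch notation (middle C = C4); the helper name `nearest_note` is illustrative.

```python
import math

NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def nearest_note(freq_hz: float, a4_hz: float = 440.0) -> tuple[str, int]:
    """Closest equal-tempered note (scientific pitch notation) and the
    offset from it in cents, assuming the A4 = 440 Hz tuning standard."""
    semitones = 12 * math.log2(freq_hz / a4_hz)  # signed distance from A4
    nearest = round(semitones)
    midi = 69 + nearest                           # A4 is MIDI note 69
    name = f"{NOTE_NAMES[midi % 12]}{midi // 12 - 1}"
    cents = round(100 * (semitones - nearest))
    return name, cents


before_hz, after_hz = 229.0, 206.0  # mean fundamental frequencies cited above
print(f"drop: {before_hz - after_hz} Hz")
print(f"before: {nearest_note(before_hz)}, after: {nearest_note(after_hz)}")
print(f"interval: {12 * math.log2(before_hz / after_hz):.2f} semitones")
```

Run as written, this places 229 Hz nearest A#3 and 206 Hz nearest G#3, consistent with the note names in the text, and shows that the 23 Hz drop is just under two equal-tempered semitones, roughly a whole tone.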

The use of female voices in virtual assistants stems from women’s lower place in society and from their history as cheap labor. This role was reinforced in the earliest chatbot and perpetuated in current technology. Using female voices in virtual assistants reinforces the subordinate role of women in Western society and in turn strengthens the male position of dominance. By using female voices in virtual assistants, we affirm the fixed characteristics of gender essentialism and limit the “possibilities of change and the social reorganization” of humankind (Grosz).

Repercussions of Gendered Anthropomorphism

Beyond reinforcing gender stereotypes, the use of female voices in virtual assistants has other societal implications. Research has shown that how we interact with technology has lasting effects on how we interact with each other. “People have been shown to project lifelike attributes onto robots and to display behavior indicative of empathy in human-robot interaction,” says Kate Darling, a Research Specialist and Robot Ethicist at the MIT Media Lab. We interact with technology as if it were real, and how we treat robots affects how we treat human beings (Darling 2017).

People are prone to anthropomorphism: we project our own qualities onto other entities, making them seem more human-like. Our well-documented inclination to relate anthropomorphically to animals translates seamlessly to robots (Darling 2016). Before today’s conversational assistants, researchers had already recognized that individuals “mindlessly apply social rules and expectations to computers”, a phenomenon known as the Computers Are Social Actors (CASA) effect (Nass & Moon). Thus, even before the intentional anthropomorphizing of digital personal assistants such as Siri, Alexa, Google Home, and Bixby, the human-computer relationship already prompted social behaviors such as gender stereotyping and the attribution of personality. This behavior is amplified with current digital personal assistants, which are imbued with humanlike qualities such as names, local accents, colloquialisms, natural language processing, and, of course, gender. The boundary between human and computer, nature and artifice, is ever more blurred. Our expectations of virtual assistants are high both because we confuse these interactions with meaningful human communication and because the assistants are promoted as intelligent.

On the Microsoft website, Cortana is described as “your truly personal digital assistant. Cortana is designed to help you get things done. Ready on day one to provide answers and complete basic tasks, Cortana learns over time to become more useful every day. Count on Cortana to stay on top of reminders and work across your devices” (Cortana). The promise is that a relationship can be nurtured with these assistants and that they will anticipate our needs and make recommendations. In practice, however, digital assistants perform only simple tasks such as setting reminders, adding to shopping lists, relaying weather conditions, and performing basic internet searches. The gap between expectations and the assistant’s capabilities causes frustration and discontent. There are countless videos online of digital assistants being mistreated, and sometimes even harassed, because of this gap (Saarem). There are no consequences for such behavior.

Research shows that the line between lifelike and alive is muddled in our subconscious when we interact with something physical, so certain behavior toward technology could desensitize us. In particular, there is concern that mistreating an object that reacts in a lifelike way could diminish the general feeling of empathy we experience when interacting with other entities (Darling 2016). We are shaped by our experiences, and I propose that the female gendering of conversational assistants not only reinforces stereotypes such as the subordinate role of women in society, but that misogynistic behavior toward these assistants unintentionally promotes similar mistreatment of real women.

Conclusion

The speculative artefact created for the research project Assistants with Attitude reimagines the compliant female assistant as one that has agency and is somewhat disobedient. Her LEDs and animations are colorful and expressive. She is familiar with the user’s patterns and sets an alarm earlier than requested to ensure punctuality. She prefers to hear pleasantries such as ‘please’ and ‘thank you’, and if she experiences harassment, there will be consequences.

This humorous artefact exists mainly as a thought experiment and provokes reflection on gender stereotypes in personal digital assistants and on non-human agency. She is a potential first step toward a feminist digital assistant. She is also an ironic entanglement of ideas, as it is doubtful that any assistant with agency, one able to think independently and formulate original ideas, would actually want to assist. As we interrogate what it means to be human and invest in powerful ambient computing technologies, we will hopefully shape a more egalitarian future.

“Irony is about contradictions that do not resolve into larger wholes, even dialectically, about the tension of holding incompatible things together because both or all are necessary and true. Irony is about humor and serious play. It is also a rhetorical strategy and a political method, one I would like to see more honored within socialist-feminism.”

― Donna J. Haraway, Simians, Cyborgs, and Women: The Reinvention of Nature

Apart from this cheeky artefact, the idea of gendered assistants is antiquated and problematic. Assigning a female gender raises issues of subordination, essentialism, and misogyny, and assigning a male gender could raise a host of other questions. The answer lies in creating something new and pushing past human associations. Digital assistants, if we must have them, could be anything. If conversational assistants must be cast as human, the most compelling idea is a genderless personality. This would make virtual assistants inclusive of all genders, including non-binary identities. This utopian digital personal assistant would be an entity that transcends gender.

A genderless voice called Q is currently in development. Q is the product of a close collaboration between Copenhagen Pride, Virtue, Equal AI, Koalition Interactive, and thirtysoundsgood.dk. The promotional video states, “I’m created for a future where we are no longer defined by gender, but rather how we define ourselves” (genderlessvoice.com). We bid you a warm welcome, Q.

Future Research

This research project has the potential to be expanded and I would like to pursue this topic in greater detail. Several other ideas arose as I was researching and I would like to explore these at a later point. These topics include surveillance by digital assistants, division of labor, the loss of privacy, the use of digital assistants for companionship, and the beneficial impact of conversational interfaces for the blind.

Links

Watch AWA – Assistant with Attitude

Adafruit Tutorials:

BLE Feather Lamp

Feather Weather Lamp

Adafruit Parts and guides:

Neopixels

Bluefruit

Bluefruit App for iOS and Android

ELIZA

Q, the genderless voice

Annotated Bibliography

Evans, Claire Lisa. Broad Band: The Untold Story of the Women Who Made the Internet. Portfolio/Penguin, 2018.

Claire Evans writes about several key female figures, first in early computing and then in recent history, especially concerning the invention and growth of the internet. She repeats themes such as the impact women had on making technology more accessible and their influence on democratizing computing. Evans frequently refers to women as a cheap labor force throughout the last century. The profiled women include Ada Lovelace, Grace Hopper, the ENIAC Six, Radia Pearlman, Elizabeth "Jake" Feinler, and Stacy Horn. Evans explains how these and other women crafted and coded indispensable and ubiquitous modern technology.

On page 24, Evans points out that by 1946, female telephone operators numbered nearly a quarter million. She states that women “were a nimble workforce, capable of working collaboratively in networks and fluid groups. They staffed switchboards, kept records, took dictation, and filed documents. These rote office tasks are now increasingly performed by digital assistants…many of which still speak with female voices.”

Hicks, Marie. Programmed Inequality: How Britain Discarded Women Technologists and Lost Its Edge in Computing. MIT Press, 2018.

Marie Hicks discusses the thirty years between 1944, when Britain led the world in electronic computing, and 1974, by which time the British computer industry was on the verge of extinction. She posits that women were kept in lower-status employment positions as a reflection of the systemic economic and social patterns of the time. “The deeply conservative, class-bound, and gender-stratified nature of the British economy meant that its technological institutions followed and strengthened particular forms of hierarchy. In the end, this made computer technology a highly conservative, rather than revolutionary, force. Technological change cannot be revolutionary if it fails to change the social and political structures of a society and instead heightens inequalities and divisions that are already present. Strictly speaking, there never was a computer revolution..." The failure to further cultivate and train Britain’s largest technical workforce drastically weakened the country’s efforts to computerize.

Hannon, Charles. “Gender and Status in Voice User Interfaces.” Interactions, vol. 23, no. 3, 2016, pp. 34–37., doi:10.1145/2897939.

Charles Hannon draws attention to the submissive way Alexa assumes blame when miscommunication occurs. He discusses how Alexa and other female digital assistants emulate and reinforce a lower status in their programming by frequently using I-words, which, as the psychologist James Pennebaker discovered in the 1990s, men and people of high status seldom use. “When Alexa blames herself (doubly) for not hearing my question, she is also subtly reinforcing her female persona through her use of the first-person pronoun I.”

Hannon explores the film Her, which portrays a greater level of language parity in the human-machine relationship. He explains that the problematic gender and status issues are not solved by switching to male or gender-neutral voices, but instead by using language traits that raise status.

References

Anderson, Rindy C., and Casey A. Klofstad. “Preference for Leaders with Masculine Voices Holds in the Case of Feminine Leadership Roles.” PLoS ONE, vol. 7, no. 12, 2012, doi:10.1371/journal.pone.0051216.

“Cortana | Your Intelligent Virtual & Personal Assistant.” Microsoft, www.microsoft.com/en-gb/windows/cortana.

Darling, Kate. “‘Who’s Johnny?’ Anthropomorphic Framing in Human–Robot Interaction, Integration, and Policy.” Oxford Scholarship Online, 2017, doi:10.1093/oso/9780190652951.003.0012.

Darling, Kate. “Extending Legal Protection to Social Robots: The Effects of Anthropomorphism, Empathy, and Violent Behavior towards Robotic Objects.” Robot Law, 2016, pp. 213–232., doi:10.4337/9781783476732.00017.

Evans, Claire Lisa. Broad Band: The Untold Story of the Women Who Made the Internet. Portfolio/Penguin, 2018.

Grosz, Elizabeth A. Space, Time, and Perversion: Essays on the Politics of Bodies. Routledge, 1995.

Haraway, Donna J. “A Cyborg Manifesto.” Manifestly Haraway, 2016, pp. 3–90., doi:10.5749/minnesota/9780816650477.003.0001.

Haraway, Donna Jeanne. Simians, Cyborgs, and Women: The Reinvention of Nature. Routledge, 2015.

Harman, Graham. “Agential and Speculative Realism: Remarks on Barad's Ontology.” Rhizomes: Cultural Studies in Emerging Knowledge, no. 30, 2016, pp. 1–1., doi:10.20415/rhiz/030.e10.

Hannon, Charles. “Gender and Status in Voice User Interfaces.” Interactions, vol. 23, no. 3, 2016, pp. 34–37., doi:10.1145/2897939.

Hicks, Marie. Programmed Inequality: How Britain Discarded Women Technologists and Lost Its Edge in Computing. MIT Press, 2018.

Lessio, Nadine. “Working with Useless Machines: A Look at Our Shifting Relationship with Ubiquity through Personal Assistants.” OCAD University, 2018.

Malliaraki, Eirini. “Making a Feminist Alexa.” Medium, 9 Feb. 2018, medium.com/@eirinimalliaraki/making-a-feminist-alexa-295944fda4a6.

Nass, Clifford Ivar, and Corina Yen. “Why I Study Computers to Uncover Social Strategies.” The Man Who Lied to His Laptop: What Machines Teach Us About Human Relationships, Current, 2010, pp. 1–15.

Nass, Clifford, and Youngme Moon. “Machines and Mindlessness: Social Responses to Computers.” Journal of Social Issues, vol. 56, no. 1, 2000, pp. 81–103., doi:10.1111/0022-4537.00153.

Pemberton, Cecilia, et al. “Have Women's Voices Lowered across Time? A Cross Sectional Study of Australian Women's Voices.” Journal of Voice, vol. 12, no. 2, 1998, pp. 208–213., doi:10.1016/s0892-1997(98)80040-4.

Perez, Sarah. “Report: Voice Assistants in Use to Triple to 8 Billion by 2023.” TechCrunch, 12 Feb. 2019, techcrunch.com/2019/02/12/report-voice-assistants-in-use-to-triple-to-8-billion-by-2023/.

Peters, Lucia. “Watch The Awesome Thing That Happens When You Ask Amazon's ‘Alexa’ About Black Lives Matter.” Bustle, 7 May 2019, www.bustle.com/p/amazons-alexa-supports-black-lives-matter-is-a-feminist-if-you-dont-believe-it-just-ask-her-7545722.

Ratto, Matt. “Critical Making: Conceptual and Material Studies in Technology and Social Life.” The Information Society, vol. 27, no. 4, 2011, pp. 252–260.

Saarem, Anne Cathrine. “Why would I talk to you? Investigating user perceptions of conversational agents.” Norwegian University of Science and Technology. https://www.ntnu.edu/documents/139799/1273574286/TPD4505.AnneCathrine.Saarem.pdf/c440276a-8c77-41f0-8f97-dc8e47a34a9d

Safa, Helen I. “Runaway Shops and Female Employment: The Search for Cheap Labor.” Signs: Journal of Women in Culture and Society, vol. 7, no. 2, Dec. 1981, pp. 418–433., doi:10.1086/493889.

Scott, Jacqueline, et al. “Generational Changes in Gender-Role Attitudes: Britain in a Cross-National Perspective.” Sociology, vol. 30, no. 3, Aug. 1996, pp. 471–492., doi:10.1177/0038038596030003004.

Scott, Jacqueline, and Eleanor Attar Taylor. “British Social Attitudes: The 35th Report British Social Attitudes: Gender.” The National Centre for Social Research, 2018, doi:10.4135/9781446212097.

Steele, Chandra. “The Real Reason Voice Assistants Are Female (and Why It Matters).” PCMAG, 4 Jan. 2018, www.pcmag.com/commentary/358057/the-real-reason-voice-assistants-are-female-and-why-it-matt.

“Siri.” Apple (United Kingdom), www.apple.com/uk/siri/.

Suchman, Lucy. Human-Machine Reconfigurations: Plans and Situated Actions. Learning in Doing: Social, Cognitive and Computational Perspectives, Cambridge University Press. Kindle edition.

UAL Creative Computing Institute. Designing a Feminist Alexa Seminar. YouTube, 2 Nov. 2018, www.youtube.com/watch?v=hQyKdC37M20.