Triptych

The 3D projections were produced through generative coding with the openFrameworks toolkit. They run for 3 minutes, split into 3 scenes. The first scene references Plexus (see images below) and is about particles and collisions, the second is a generative version of Mathmos lava lamps, and the third reinvents Eadweard Muybridge's images for a contemporary audience.

produced by: Colin Higgs

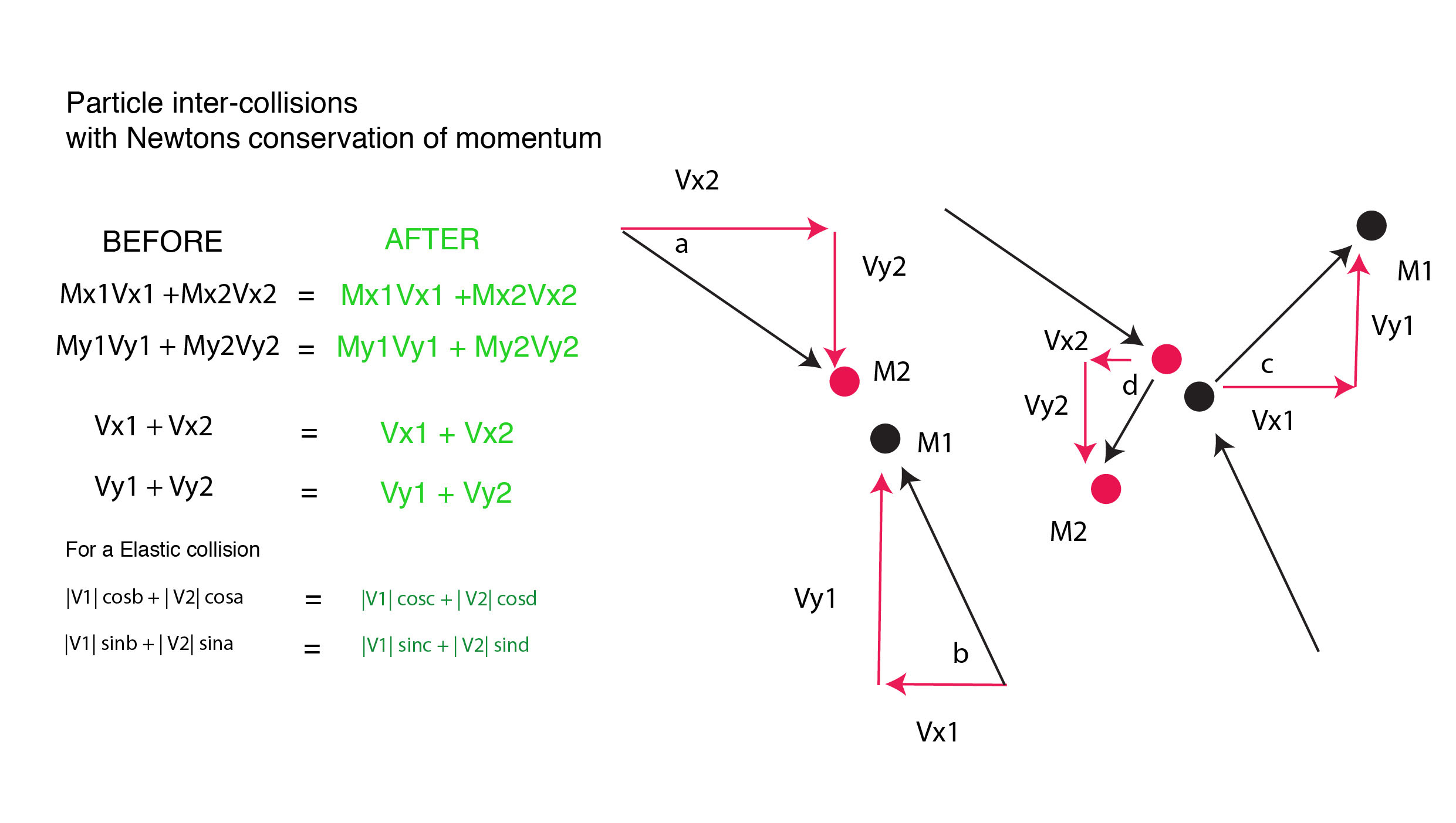

Scene 01: Particle Collisions

In my opinion, particle collisions are mesmerizing in the mixture of chaos and structure that their dynamics produce. Random speeds and directions add to the somewhat chaotic nature of something so familiar yet unknown. The sketch was inspired by Plexus, a particle plugin for After Effects that I used in previous work. Plexus creates a random network of particles in 3D space; lines can connect the points and polygon faces can be added (see images below). The inter-particle collisions are based on Newton's law of conservation of momentum (see algorithm below). When two particles are predicted to collide, they and their connecting lines change colour, and lines are drawn only between particles whose collision is impending.
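The collision step can be sketched in plain C++ as below. This is not the original sketch's code: it assumes spherical particles with a radius and a mass, a perfectly elastic collision (which conserves both momentum and kinetic energy), and a hypothetical `timeToCollision` helper that predicts when two particles will touch, which is one possible way to flag an impending collision. In openFrameworks the small `Vec3` type would normally be replaced by `glm::vec3` or `ofVec3f`.

```cpp
#include <cassert>
#include <cmath>

// Minimal 3D vector (glm::vec3 or ofVec3f would normally be used in openFrameworks).
struct Vec3 {
    float x, y, z;
};

Vec3 operator+(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
Vec3 operator-(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
Vec3 operator*(Vec3 a, float s) { return {a.x * s, a.y * s, a.z * s}; }
float dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

// Hypothetical particle state: spheres with a radius and a mass.
struct Particle {
    Vec3 pos;
    Vec3 vel;
    float radius;
    float mass;
};

// Perfectly elastic collision between two touching spheres.
// Only the velocity components along the line of centres change, so total
// momentum (m_a v_a + m_b v_b) and kinetic energy are both conserved.
// Returns true if the particles were touching and approaching each other.
bool resolveCollision(Particle& a, Particle& b) {
    assert(a.mass > 0.0f && b.mass > 0.0f);
    Vec3 delta = b.pos - a.pos;
    float dist = std::sqrt(dot(delta, delta));
    if (dist == 0.0f || dist > a.radius + b.radius) return false;  // not touching
    Vec3 n = delta * (1.0f / dist);          // unit normal pointing from a to b
    float closing = dot(a.vel - b.vel, n);   // closing speed along the normal
    if (closing <= 0.0f) return false;       // already moving apart
    float total = a.mass + b.mass;
    a.vel = a.vel - n * (2.0f * b.mass / total * closing);
    b.vel = b.vel + n * (2.0f * a.mass / total * closing);
    return true;
}

// Frames until the two spheres first touch, assuming constant velocities.
// Solves |d + r t| = R for the smallest t >= 0; returns -1 if they never touch.
float timeToCollision(const Particle& a, const Particle& b) {
    Vec3 d = b.pos - a.pos;                  // relative position
    Vec3 r = b.vel - a.vel;                  // relative velocity
    float R = a.radius + b.radius;
    float c = dot(d, d) - R * R;
    if (c <= 0.0f) return 0.0f;              // already overlapping
    float rr = dot(r, r);
    float dr = dot(d, r);
    if (rr == 0.0f || dr >= 0.0f) return -1.0f;  // no relative motion, or separating
    float disc = dr * dr - rr * c;
    if (disc < 0.0f) return -1.0f;           // closest approach is still a miss
    return (-dr - std::sqrt(disc)) / rr;
}
```

A draw loop could, for example, switch a pair's colour and draw a line between them whenever `timeToCollision` returns a value between 0 and some chosen look-ahead of a few frames, and call `resolveCollision` once they touch.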

Plexus Examples

Scene 02: A Generative Version of Mathmos Lava Lamps

Using generative techniques, I recreated the feeling of Mathmos lamps, which slowly change shape, form and colour over time (see images below). Randomly generated shapes rotate and move at different speeds, bounce off the edges of the screen and are drawn continuously. Their colour values change slowly over time, and at intervals a colour is held for a period before it starts changing again.

A random generator also varies the number of shapes on screen and which polygons are drawn: over time, shapes with 2 to 7 sides are chosen at random.
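The per-shape behaviour described above can be sketched in plain C++ rather than openFrameworks calls: shapes move and rotate, reflect off the screen edges, take a random side count from 2 to 7, and are coloured by a hue that drifts and then holds. The `Shape` and `HueCycler` types, their fields and the timing parameters are hypothetical, not taken from the original project.

```cpp
#include <cassert>
#include <cmath>
#include <random>
#include <vector>

struct Point { float x, y; };

// Hypothetical state for one drifting "lava" shape.
struct Shape {
    Point pos, vel;   // position and velocity in pixels (per frame)
    float angle;      // current rotation in radians
    float spin;       // rotation added each frame
    float radius;     // distance from centre to each vertex
    int sides;        // 2..7; a 2-sided "polygon" is drawn as a line
};

// Keep one coordinate inside [0, limit], reversing velocity at an edge.
void bounce(float& p, float& v, float limit) {
    if (p < 0.0f)       { p = 0.0f;  v = std::fabs(v); }
    else if (p > limit) { p = limit; v = -std::fabs(v); }
}

// Advance a shape by one frame: move, rotate, then bounce off the screen edges.
void update(Shape& s, float width, float height) {
    s.pos.x += s.vel.x;
    s.pos.y += s.vel.y;
    s.angle += s.spin;
    bounce(s.pos.x, s.vel.x, width);
    bounce(s.pos.y, s.vel.y, height);
}

// Vertices of a regular polygon centred on the shape, rotated by its angle.
std::vector<Point> vertices(const Shape& s) {
    assert(s.sides >= 2);
    const float kTwoPi = 6.2831853f;
    std::vector<Point> pts;
    for (int i = 0; i < s.sides; ++i) {
        float a = s.angle + kTwoPi * i / s.sides;
        pts.push_back({s.pos.x + s.radius * std::cos(a), s.pos.y + s.radius * std::sin(a)});
    }
    return pts;
}

// Pick a new side count uniformly from 2 to 7.
int randomSides(std::mt19937& rng) {
    std::uniform_int_distribution<int> dist(2, 7);
    return dist(rng);
}

// Hue in [0, 1) that drifts for driftFrames, then holds for holdFrames, repeatedly.
struct HueCycler {
    float hue;
    float rate;        // hue change per frame while drifting
    int driftFrames;
    int holdFrames;
    int frame;         // frames elapsed

    void step() {
        if (frame % (driftFrames + holdFrames) < driftFrames) {
            hue = std::fmod(hue + rate, 1.0f);
        }
        ++frame;
    }
};
```

When drawing, the hue could be scaled to 0–255 and passed to openFrameworks' `ofColor::fromHsb`, and `randomSides` called every so often to swap a shape's polygon brush.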

Mathmos Lava Lamps

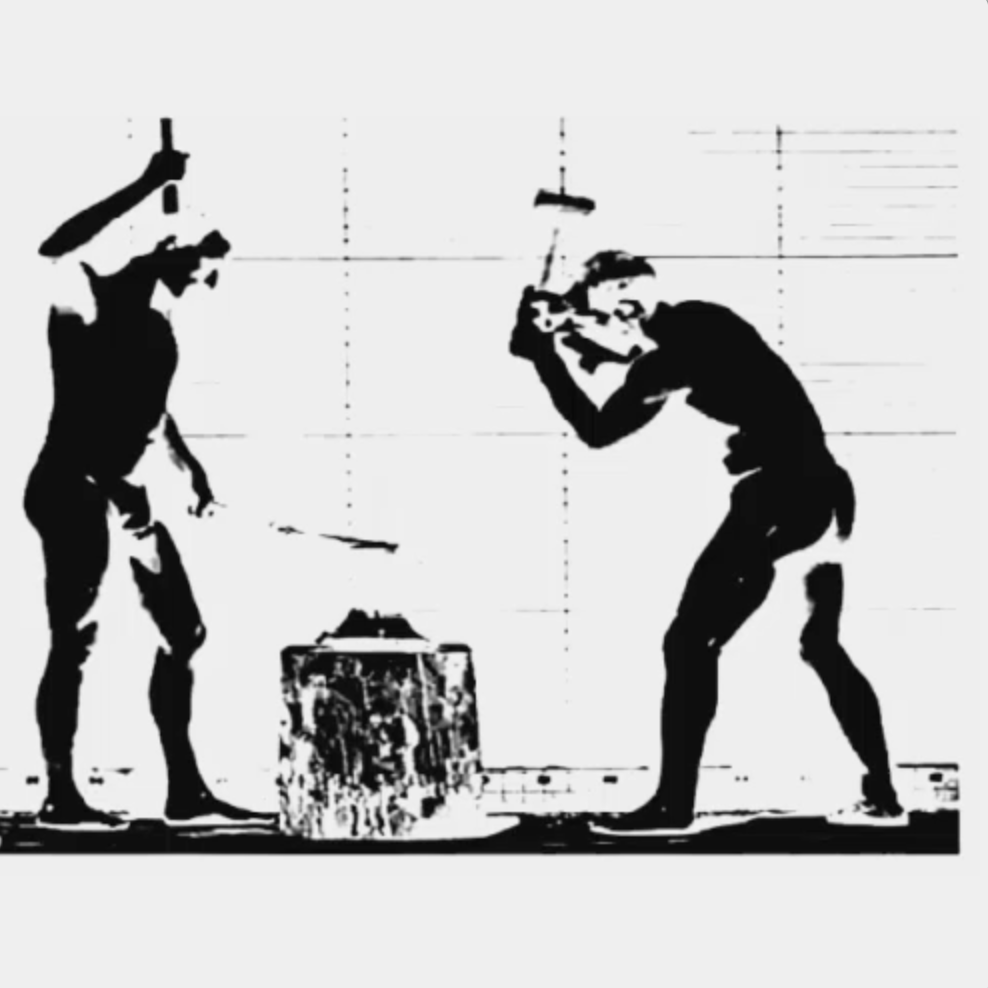

Scene 03: Reinventing Eadweard Muybridge

Animators who study the locomotion of humans and animals have always gone back to Muybridge for reference material, and that material is as valid today as it was when it was made. Reinventing Eadweard Muybridge pays homage to him as one of the founding fathers of moving-image making and readdresses his work in a modern generative format.

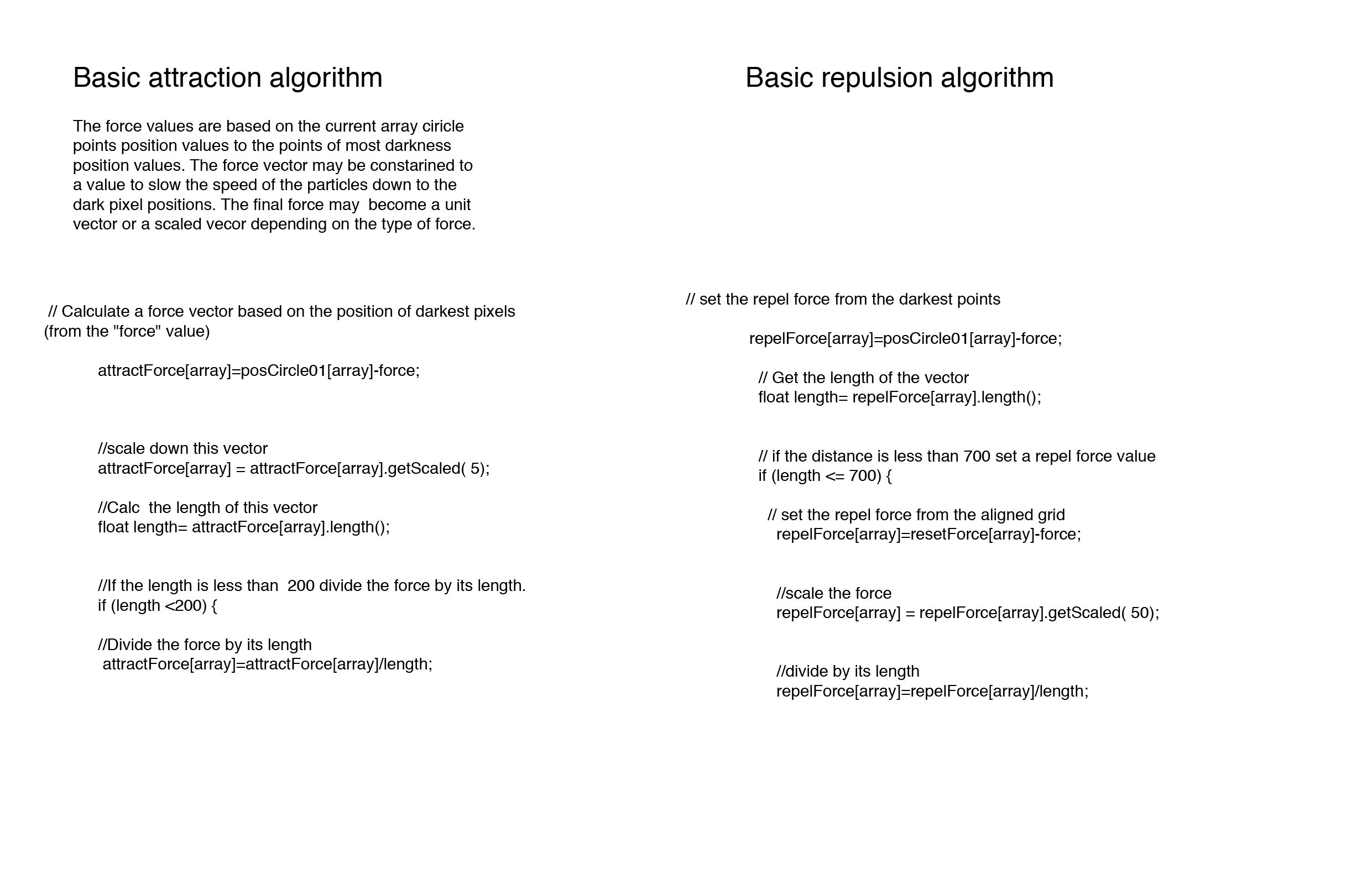

The source material is video made from Eadweard Muybridge's animation sequences (see below). The darkness of each pixel in the video frame controls the size of a corresponding circle in a grid of circles, so the circle grid closely reproduces Muybridge's original images. After a certain time the image is destroyed in one of two ways: either a repulsion or attraction force breaks it apart, or a random Perlin noise generator displaces the circles so that the image becomes distorted over time. Both the repulsion and the attraction algorithms search the video frame for its darkest point and apply their force from that point. The basis of the algorithm is shown below.
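The pipeline described above (pixel darkness to circle size, a search for the darkest pixel, and a force centred on it) might look roughly like this in plain C++. It is a sketch under stated assumptions, not the original code: frames are assumed to be 8-bit grayscale buffers, darker pixels give larger circles, and the force falls off as 1/distance. The names `Frame`, `Dot`, `circleRadius`, `darkestPixel`, `applyForce` and `step` are hypothetical, and the Perlin noise variant is not shown.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

struct Point { float x, y; };

// Hypothetical 8-bit grayscale video frame: 0 = black, 255 = white.
struct Frame {
    int width, height;
    std::vector<unsigned char> gray;  // row-major, width * height values
};

// One circle of the grid: its current position and velocity.
struct Dot {
    Point pos, vel;
};

// Darker pixels give larger circles (assumes dark circles on a light background).
float circleRadius(unsigned char gray, float maxRadius) {
    return maxRadius * (1.0f - gray / 255.0f);
}

// Linear scan for the darkest pixel; ties keep the first one found.
Point darkestPixel(const Frame& f) {
    assert(!f.gray.empty() && static_cast<int>(f.gray.size()) == f.width * f.height);
    int best = 0;
    for (int i = 1; i < static_cast<int>(f.gray.size()); ++i) {
        if (f.gray[i] < f.gray[best]) best = i;
    }
    return {static_cast<float>(best % f.width), static_cast<float>(best / f.width)};
}

// Repel (strength > 0) or attract (strength < 0) a dot relative to a source point.
// The force falls off as 1 / distance, an assumed choice.
void applyForce(Dot& d, Point source, float strength) {
    float dx = d.pos.x - source.x;
    float dy = d.pos.y - source.y;
    float dist = std::sqrt(dx * dx + dy * dy);
    if (dist < 1.0f) return;              // skip dots sitting on the source itself
    float f = strength / dist;
    d.vel.x += f * dx / dist;             // (dx, dy) / dist is the unit direction
    d.vel.y += f * dy / dist;
}

// Move a dot by its velocity for one frame.
void step(Dot& d) {
    d.pos.x += d.vel.x;
    d.pos.y += d.vel.y;
}
```

Calling `applyForce` on every dot each frame with the point from `darkestPixel`, followed by `step`, would gradually break the circle image apart from that point; the sign of `strength` chooses between repulsion and attraction.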

Eadweard Muybridge images

Future Development

The three scenes could be improved as follows:

Scene 01: the locomotion algorithm could gain an attraction and repulsion element, and the particles could change shape after a predetermined number of collisions.

Scene 02: the Mathmos locomotion algorithm could also add non-linear motion to the shapes.

Scene 03: the brightness-search algorithm used by both the attraction and the repulsion algorithms could be improved by finding and selecting multiple dark pixel points. The image would then be broken up from several points rather than from a single source point.
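The suggested multi-point search could be sketched as a greedy selection: order the pixels from darkest to lightest and keep each one only if it lies at least a minimum distance from the points already chosen, so the force centres do not all cluster in one dark patch. The function name and the `k` and `minDist` parameters are hypothetical. Sorting every pixel is simple but slow for full-resolution frames; running it on a downsampled frame would be cheaper.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <numeric>
#include <vector>

struct Point { float x, y; };

// Greedily pick up to k dark pixels that are at least minDist apart.
// gray is a row-major 8-bit grayscale frame (0 = black) of the given width.
std::vector<Point> darkestPoints(const std::vector<unsigned char>& gray, int width,
                                 int k, float minDist) {
    assert(width > 0 && gray.size() % static_cast<std::size_t>(width) == 0);
    // Pixel indices ordered from darkest to lightest (stable, so ties keep scan order).
    std::vector<int> order(gray.size());
    std::iota(order.begin(), order.end(), 0);
    std::stable_sort(order.begin(), order.end(),
                     [&gray](int a, int b) { return gray[a] < gray[b]; });
    std::vector<Point> picked;
    for (int idx : order) {
        if (static_cast<int>(picked.size()) >= k) break;
        Point p{static_cast<float>(idx % width), static_cast<float>(idx / width)};
        bool farEnough = true;
        for (const Point& q : picked) {
            float dx = p.x - q.x, dy = p.y - q.y;
            if (dx * dx + dy * dy < minDist * minDist) { farEnough = false; break; }
        }
        if (farEnough) picked.push_back(p);
    }
    return picked;
}
```

Each returned point could then act as its own centre of attraction or repulsion, so the image breaks up from several places at once.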

Self Evaluation

The work draws on a variety of techniques. Although it partly builds on earlier work, I tried to take it in new and fresh directions. The particle collision piece grew out of previous Processing work; what was new was the inter-particle collision handling, the colour changes when a collision is predicted, and the lines drawn between particles whose collision is impending. The Mathmos piece was based on a line brush algorithm we developed in class; what was new was the slow, continuous change of colour values, the stopping and restarting of that process, and the random building of different polygon brushes over time. For the Muybridge piece, using video luminance values to regulate the size of a graphic array was also covered in class, but the repulsion and attraction algorithms that destroy the image are a new approach. These algorithms also rely on a new search algorithm that finds the darkest pixel position in the source video image and uses it as the centre of their forces.