Introduction

In The River of Consciousness, where Oliver Sacks skillfully navigates the history of neuroscience, there is a compelling concept: the perception of time. He gives the example of people affected by postencephalitic parkinsonism, a rare syndrome caused by severe brain damage that impairs the flow of movement. An anecdote from Sacks's practice illustrates this. While observing his patients' behaviour, he noticed that one of them, Miron, often seemed to raise his arm and keep it still.

“When I questioned him about these frozen poses, he asked indignantly, "What do you mean, 'frozen poses'? I was just wiping my nose."

I wondered if he was putting me on. One morning, over a period of hours, I took a series of twenty or so photos and stapled them together to make a flick-book, like the ones I used to make to show the unfurling of fiddleheads. With this, I could see that Miron actually was wiping his nose but was doing so a thousand times more slowly than normal.”

This raises the question: how do we perceive the present moment? Put simply, it all depends on the speed at which the neurons are firing. Sacks provides the metaphor of the frame rate: if your neurons are firing at 60 frames per second, your perception is standard. If you jump to, say, 120 frames per second, you can process a lot more information and act more quickly. On the other hand, at 1 frame per second you will notice some serious gaps. The case of Hester shows what it looks like when a person is sped up:

“I once asked my students to play ball with her, and they found it impossible to catch her lightning-quick throws... "You see how quick she is," I said. "Don't underestimate her-you'd better be ready." But they could not be ready, since their best reaction times approached a seventh of a second, whereas Hester's was scarcely more than a tenth of a second.”

Oliver Sacks, The River of Consciousness, p. 47

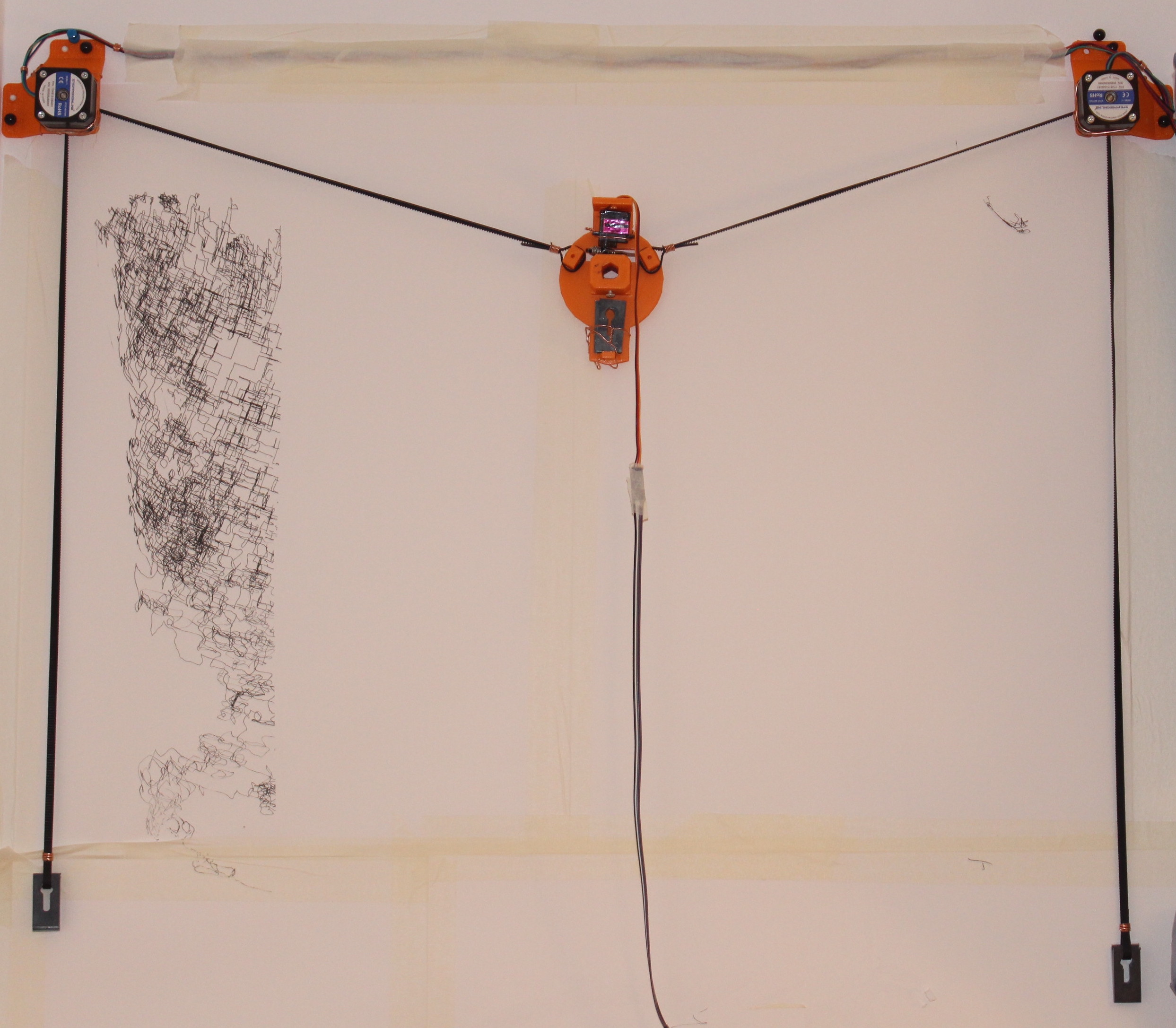

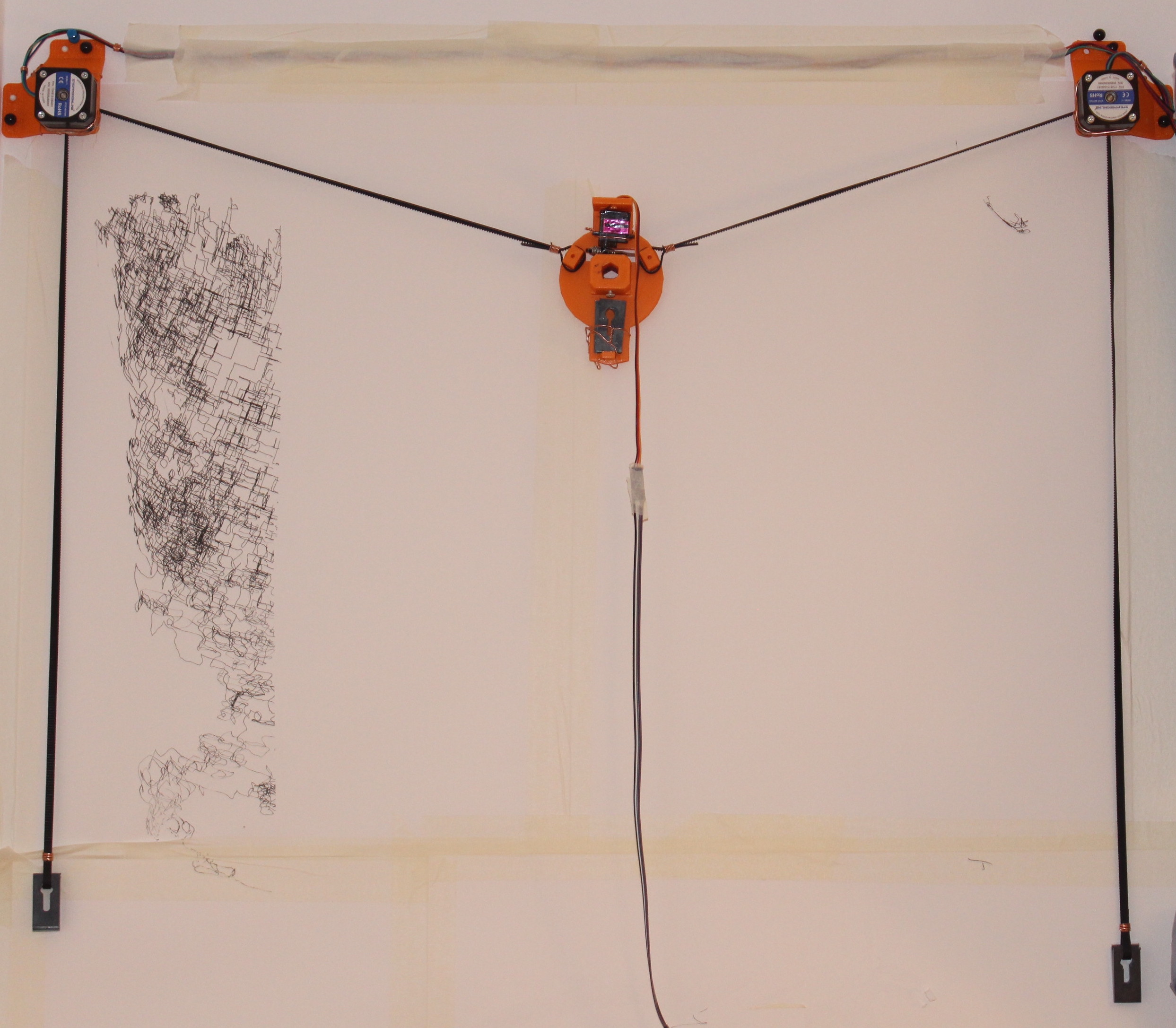

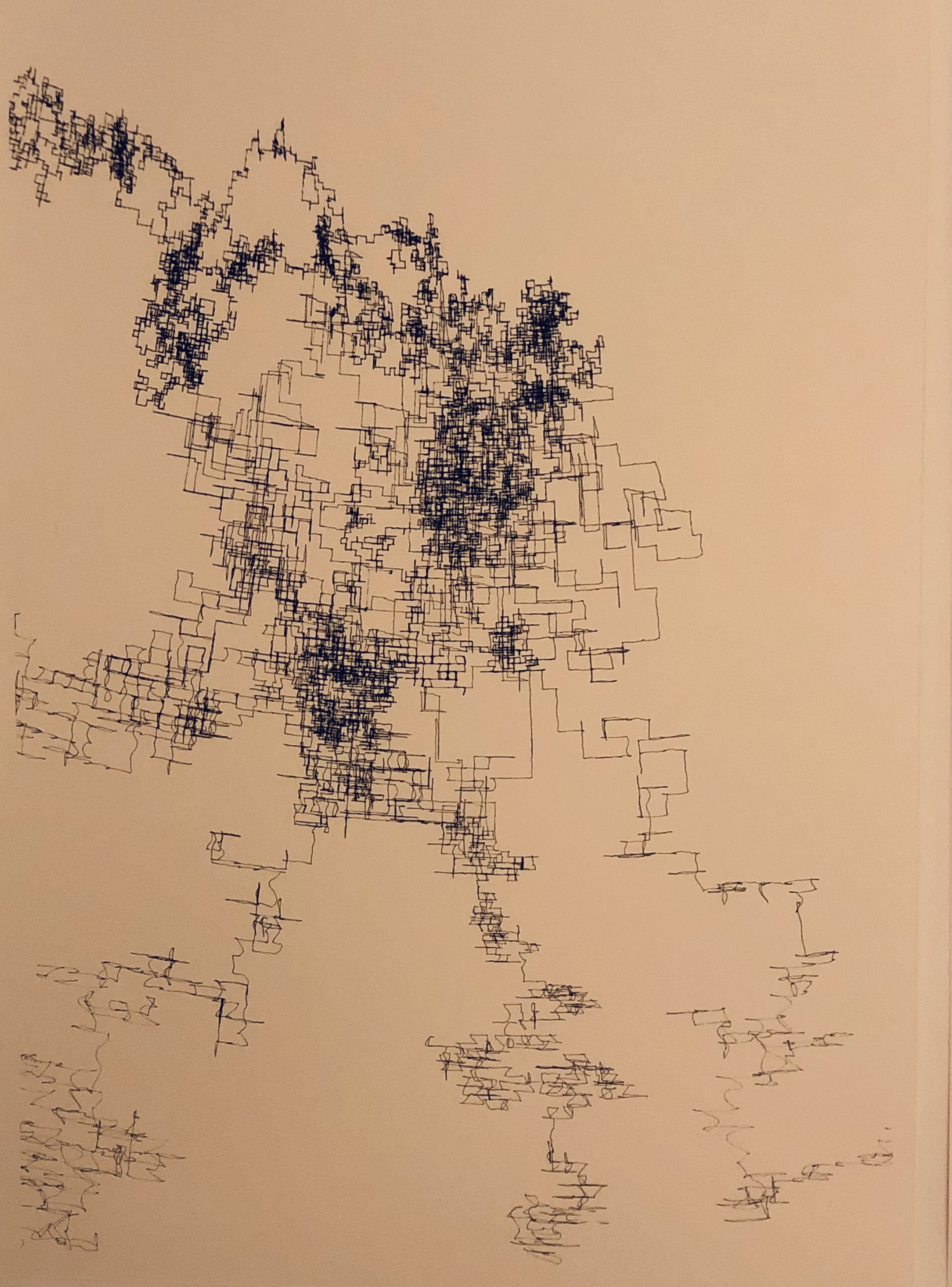

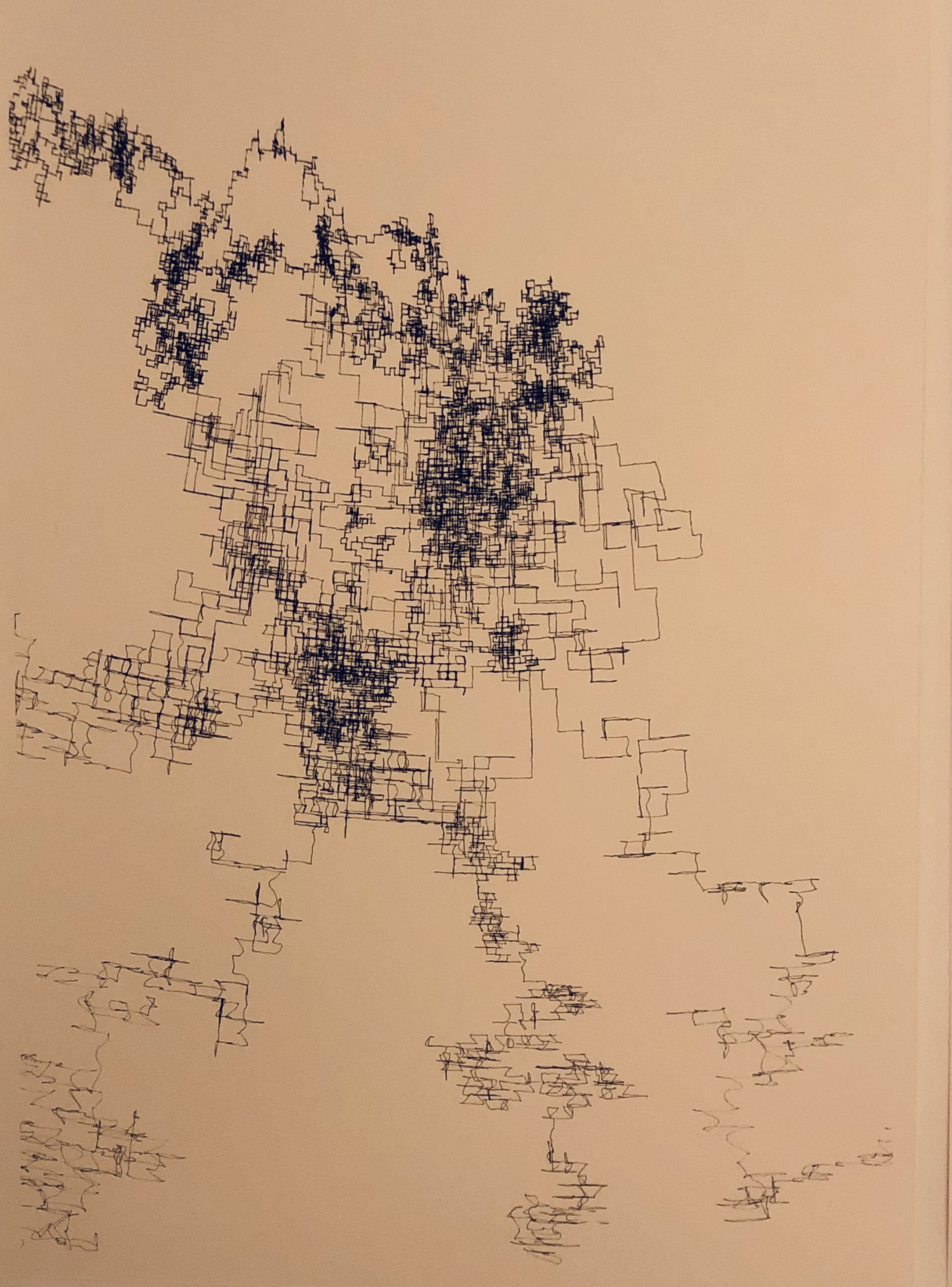

It is a fascinating concept that echoes the quote attributed to Albert Einstein: “Time is relative; its only worth depends upon what we do as it is passing.” Thus, how can we measure our perception of time, and what is “now”? This is obviously an extensive question, so this project is only an introduction to the brain-computer interface field. It is a concept that reimagines the work of Oliver Sacks: an interactive installation in which data from an electroencephalogram (EEG), which monitors brain activity, controls the stroke distance of an X-Y plotter while the direction is randomized. The result is a live generative drawing based on brain activity.

Why:

Lately, we all feel physically stuck, confined to our rooms or our homes, as Covid restrictions are still in place. Every day feels the same. Without the sun rising each morning we would have no way of feeling time pass, and without a self-imposed schedule the passing hours could not be more meaningless. In a way we are like Miron and his project of eventually wiping his nose: stuck in our own projects, experiencing time through their progress and deadlines. But the question remains: what happened during those hours and weeks of working from home, and how do we perceive time now?

How:

Through weekly conversations with Charlotte Maschke, a Master’s student in the Integrated Program in Neuroscience at McGill University, aimed at visualizing her research on consciousness, the project gained insights into the field, crash courses in neuroscience, and relevant readings.

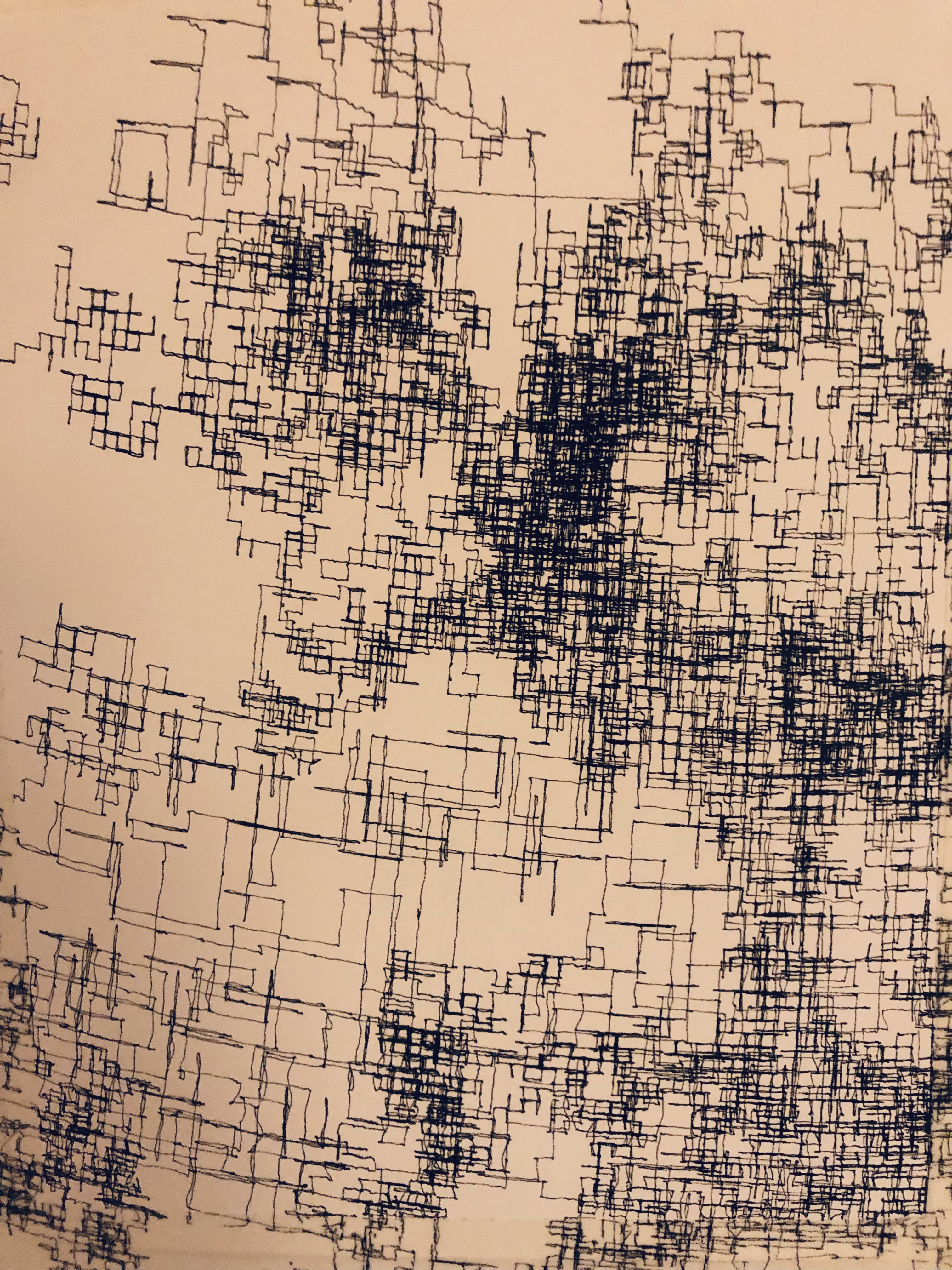

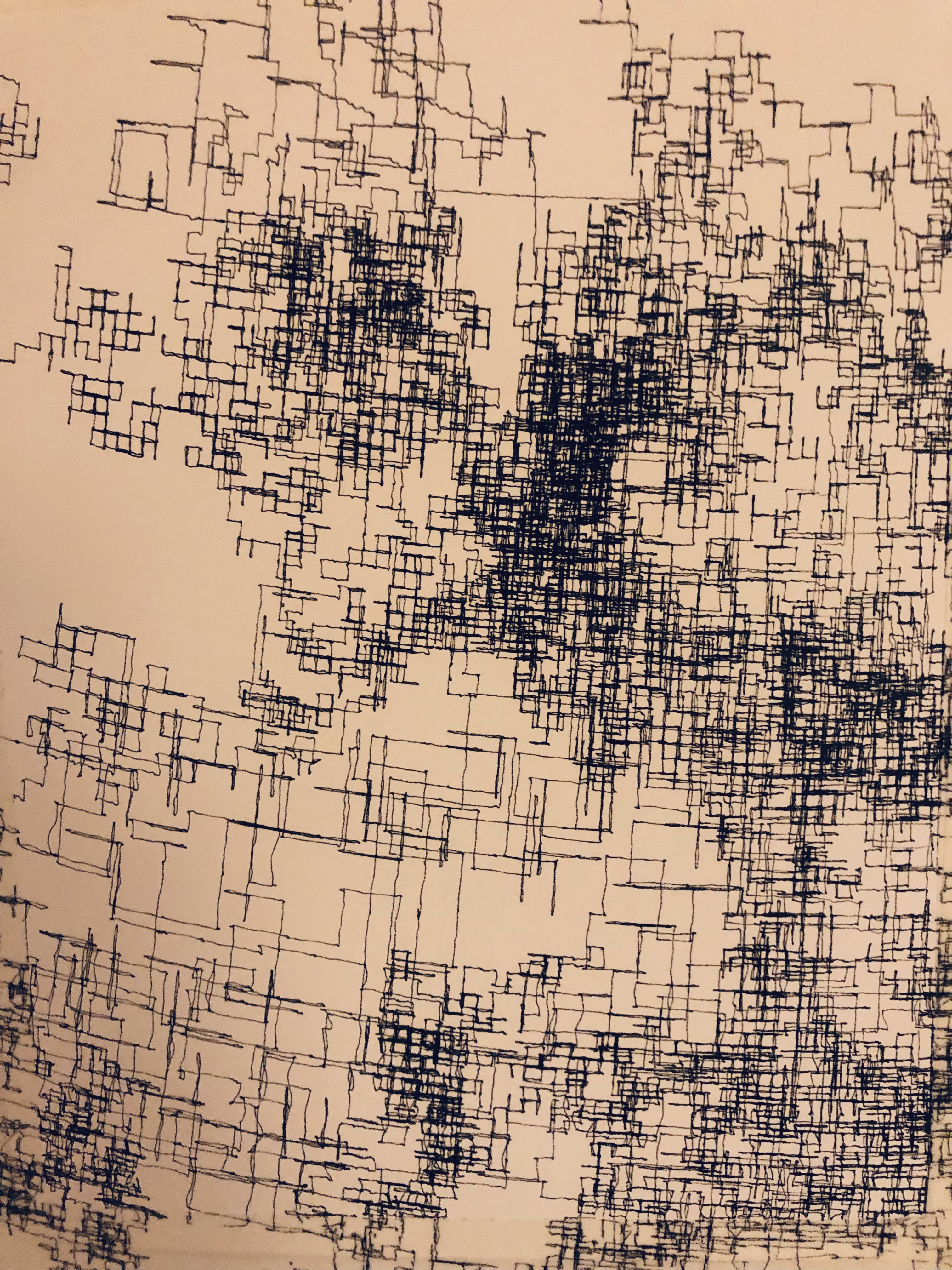

With the plotter operating live, the EEG monitors brain activity during working sessions and creates a variety of artifacts that accumulate over the weeks, giving evidence of time passing and of how it affected the brain.

Spike Recorder (Backyard Brains software) was used to explore the incoming data. As seen here, there is a lot of noise, and the spikes are due to blinking.

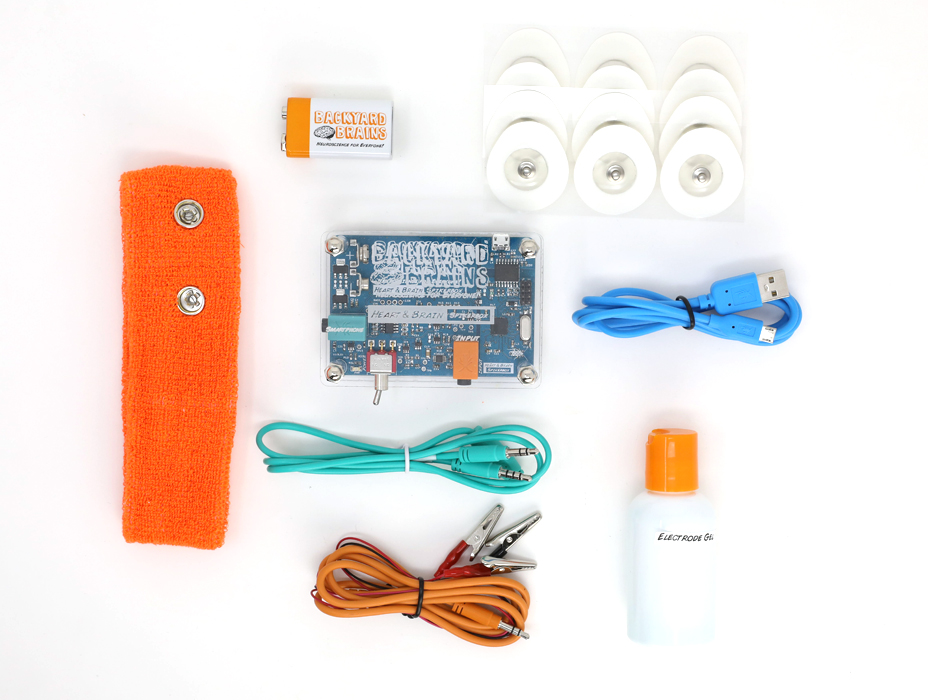

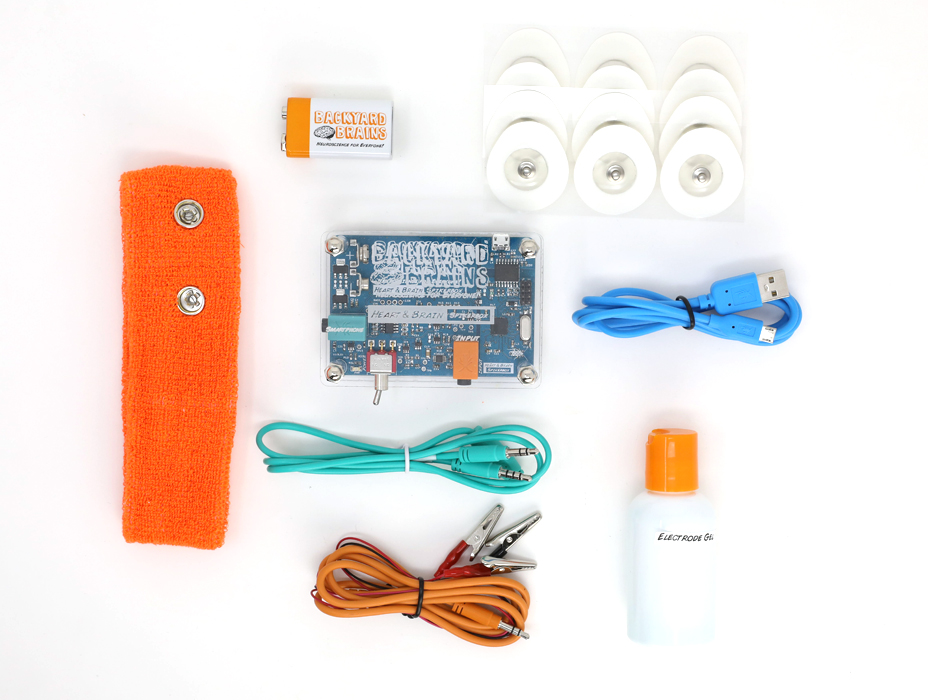

The project relies on the Backyard Brains Heart and Brain SpikerBox, a two-electrode kit mounted on a bandana. The kit's crucial selling point is that it is open source: the code is accessible, from the EEG to the software, so you can make it your own. The EEG runs on an Arduino Uno programmed in C++, while the software, which is more complex, is written in Python.

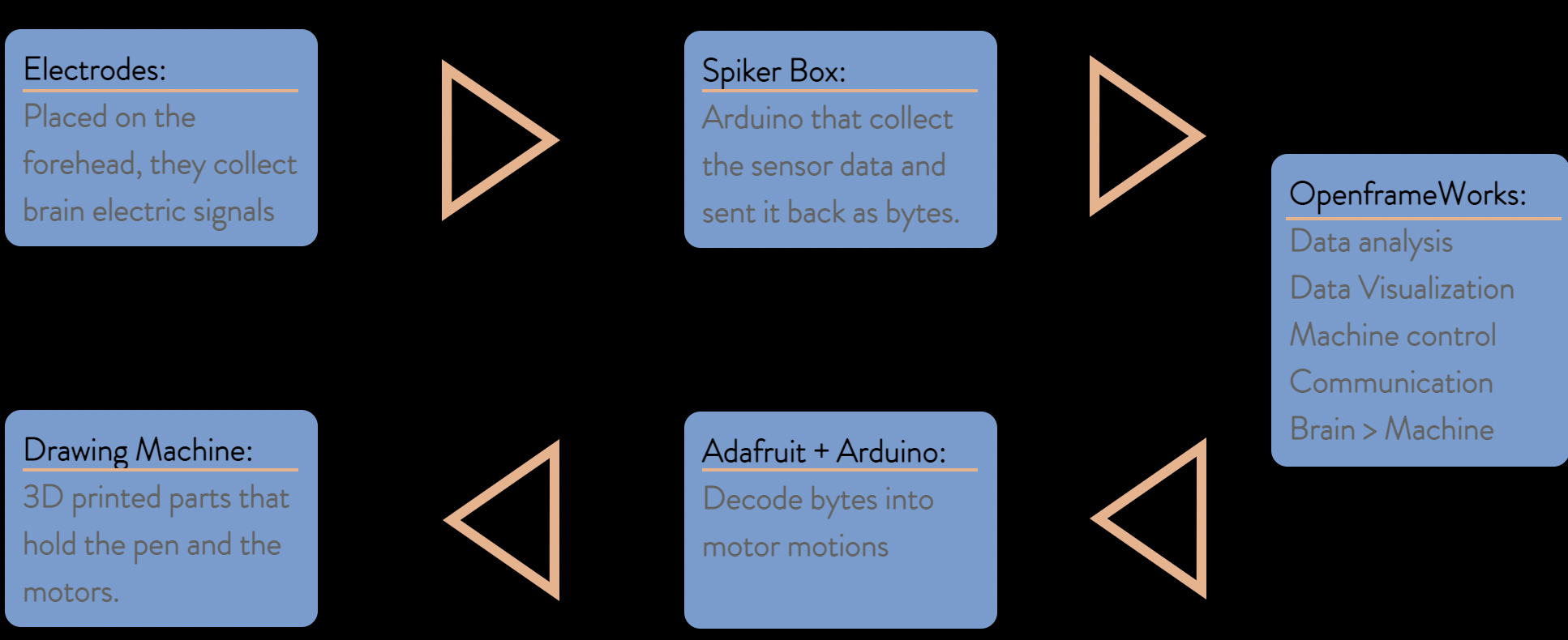

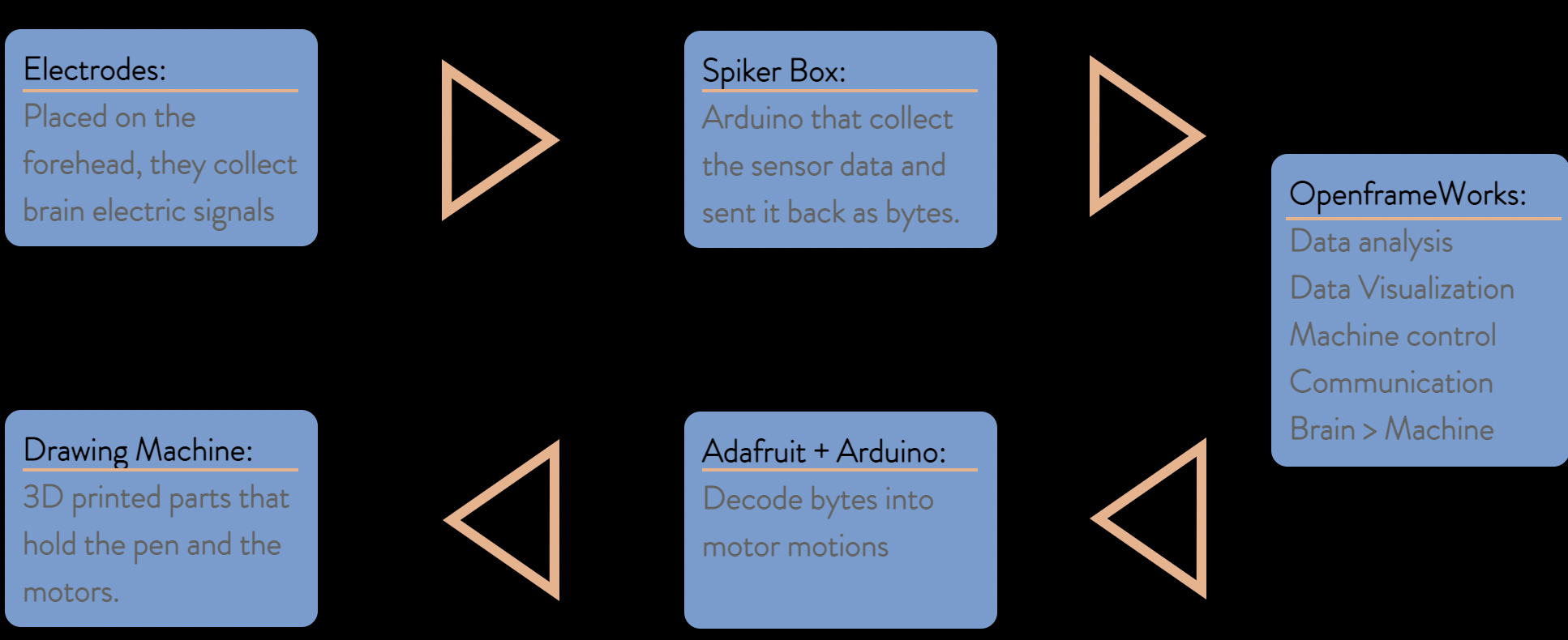

The electrodes are placed on the forehead and so give readings from the frontal lobe, which is associated with concentration. For now, the machine interprets the maximum value of the raw brain reading as the stroke length. The direction is set randomly.
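The mapping from reading to stroke can be sketched roughly as below. This is a minimal illustration, not the project's actual code: the function name `next_stroke`, the 0 to 1023 reading range (the span of a 10-bit Arduino Uno analog read) and the 50 mm maximum stroke are all assumptions made for the example.

```python
import math
import random


def next_stroke(samples, max_reading=1023, max_length_mm=50.0, rng=random):
    """Return (dx, dy) in mm for one stroke from a batch of raw readings.

    max_reading and max_length_mm are hypothetical values for illustration.
    """
    peak = max(samples)
    # Clamp the peak to the assumed sensor range, then scale it linearly.
    fraction = min(max(peak, 0), max_reading) / max_reading
    length = fraction * max_length_mm
    # The direction is uniformly random and independent of the reading.
    angle = rng.uniform(0.0, 2.0 * math.pi)
    return length * math.cos(angle), length * math.sin(angle)


if __name__ == "__main__":
    dx, dy = next_stroke([312, 540, 498, 1001, 620])
    print(f"stroke: dx={dx:.1f} mm, dy={dy:.1f} mm")
```

Only the length of the stroke carries information from the brain. The angle is noise by design, which is why two sessions with similar readings still produce different drawings.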

The programming pipeline goes as follows:

A considerable amount of time was spent establishing fast communication and understanding the challenging byte encoding, which left little time for extended data analysis. Thus, only the average, minimum and maximum values over a 10-second window are used.
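As a rough sketch of this reduction step, the snippet below cuts a stream of already-decoded samples into 10-second windows and keeps only the three statistics. The function name `window_stats`, the sample rate passed in, and the choice of non-overlapping windows are assumptions for illustration. The project's actual windowing may differ.

```python
from statistics import mean


def window_stats(samples, sample_rate_hz, window_s=10.0):
    """Yield (average, minimum, maximum) for each full window of samples.

    sample_rate_hz is whatever rate the device delivers (assumed known).
    """
    size = int(sample_rate_hz * window_s)
    if size <= 0:
        raise ValueError("window must contain at least one sample")
    # Walk the stream in non-overlapping windows; a trailing partial
    # window is dropped rather than summarized.
    for start in range(0, len(samples) - size + 1, size):
        window = samples[start:start + size]
        yield mean(window), min(window), max(window)


if __name__ == "__main__":
    fake_readings = [i % 7 for i in range(40)]  # stand-in data, not real EEG
    for avg, low, high in window_stats(fake_readings, sample_rate_hz=2):
        print(avg, low, high)
```

Reducing each window to three numbers discards almost all of the signal's shape, but it keeps the data rate low enough for a slow mechanical plotter to follow.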

The machine is a simple X-Y plotter controlled by two stepper motors for the position and one servo for the pen. Unlike a conventional plotter, this one uses gravity to guide the pen: a gondola holds the pen and is connected to the stepper motors by rubber belts.
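For a hanging plotter of this kind, the pen position is set by the lengths of the two belts rather than by separate X and Y axes. The sketch below shows the usual geometry under stated assumptions: the two motors sit at the same height, the origin is at the left motor, and y grows downward. The names `belt_lengths`, `steps_for_move`, `motor_spacing` and `mm_per_step` are hypothetical and not taken from the project's code.

```python
import math


def belt_lengths(x, y, motor_spacing):
    """Return (left, right) belt lengths for a pen at (x, y).

    Origin is the left motor; x grows to the right, y grows downward.
    """
    left = math.hypot(x, y)
    right = math.hypot(motor_spacing - x, y)
    return left, right


def steps_for_move(start, end, motor_spacing, mm_per_step):
    """Return signed step counts (left, right) to go from start to end."""
    l0, r0 = belt_lengths(*start, motor_spacing)
    l1, r1 = belt_lengths(*end, motor_spacing)
    # Positive means the motor pays out belt, negative means it reels in.
    return round((l1 - l0) / mm_per_step), round((r1 - r0) / mm_per_step)


if __name__ == "__main__":
    print(belt_lengths(300, 400, motor_spacing=600))
    print(steps_for_move((300, 400), (0, 400), motor_spacing=600, mm_per_step=1.0))
```

Because belt length is not linear in x and y, a long stroke would need to be split into short segments to come out straight on paper. That interpolation is left out of this sketch.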

From my own brain to the screen. The slime mould (blue) changes its speed based on the largest value at each reading and uses the average as the distance travelled. The linework (orange) maps the data used in the visualization.

Using openFrameworks, a visualization can be offered to further express the data to viewers while their brain is scanned. This early visualization is a mesmerizing combination of the organic and the inorganic, mixing straight lines for the raw data with stigmergy driven by the average and maximum values, all extracted from the brain.

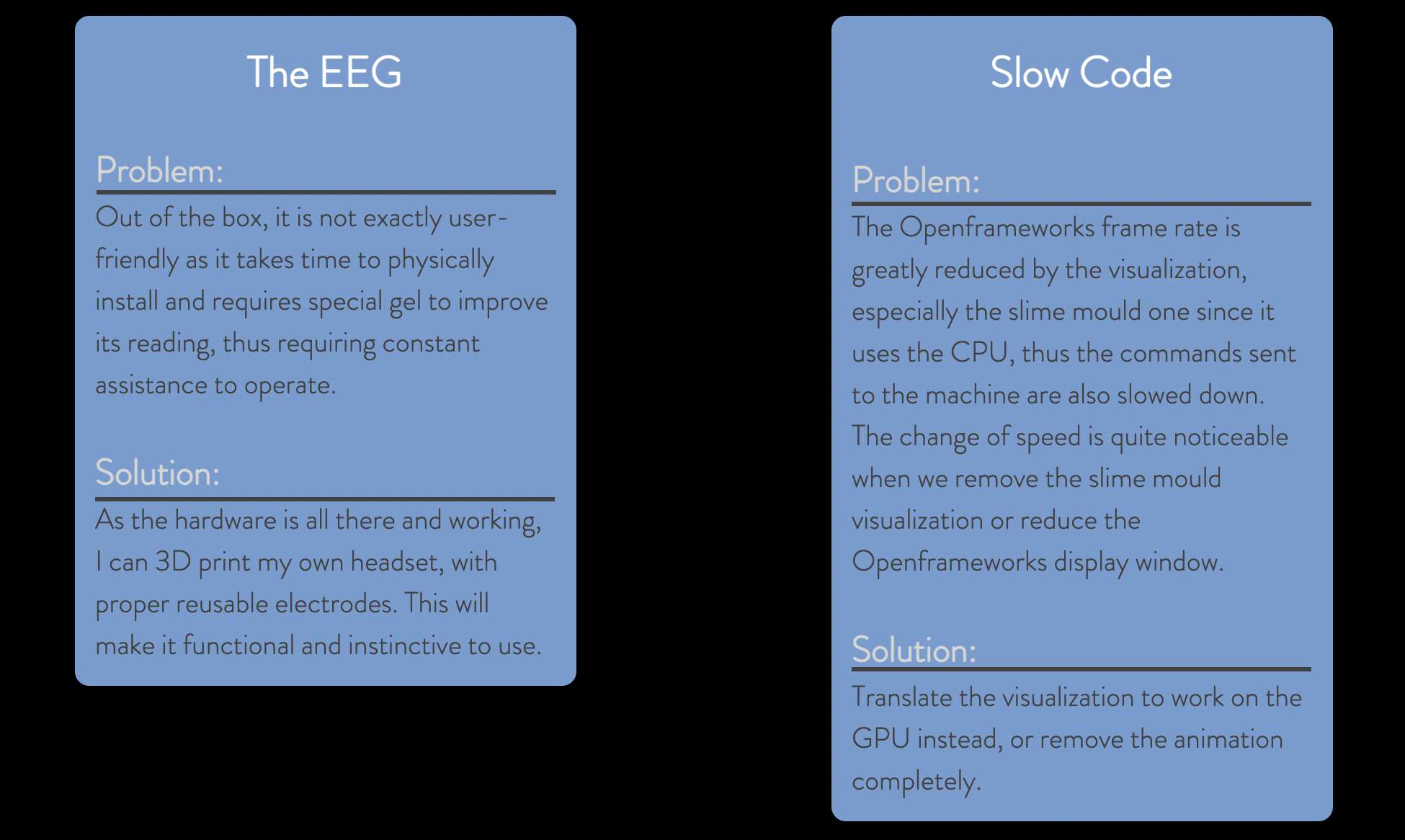

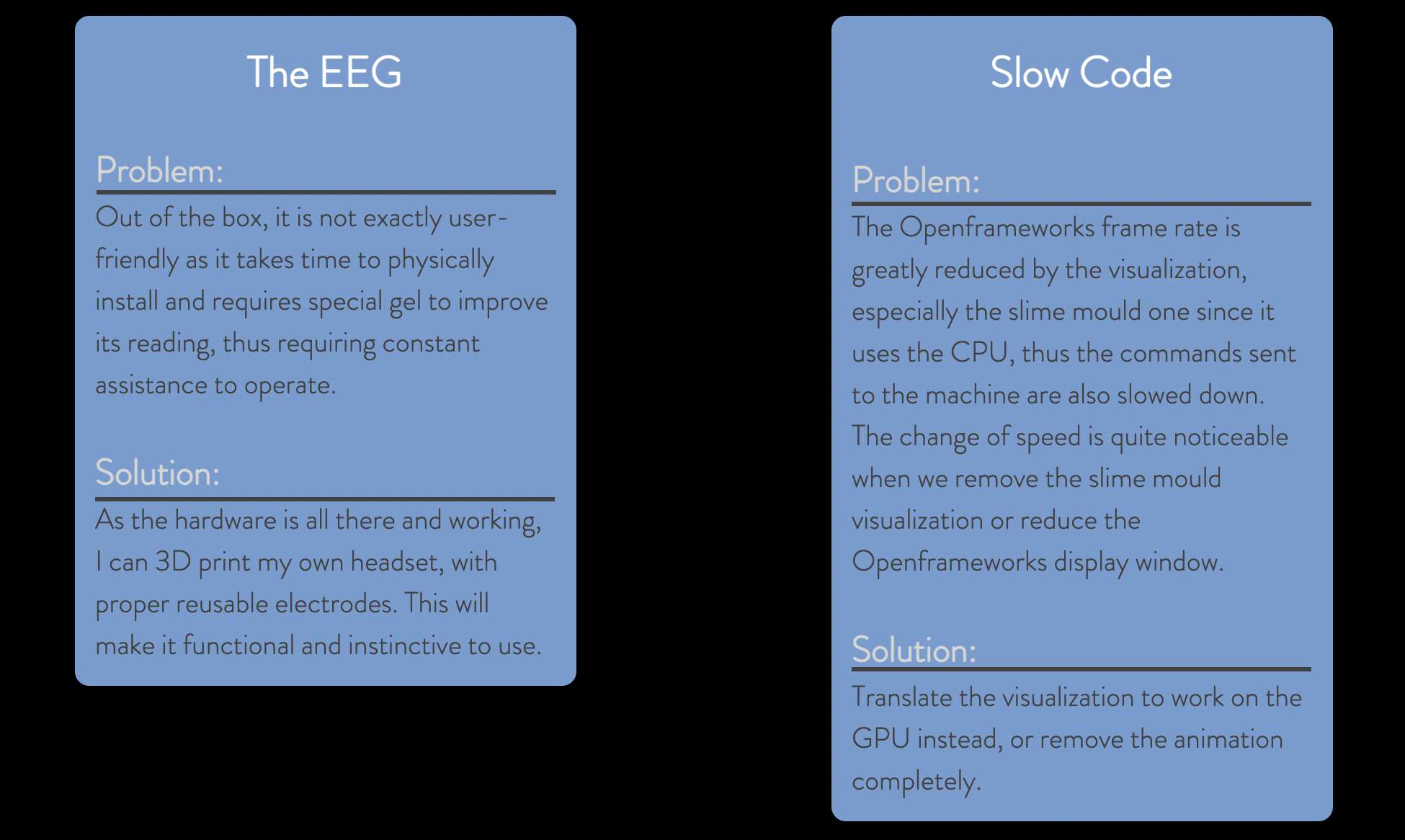

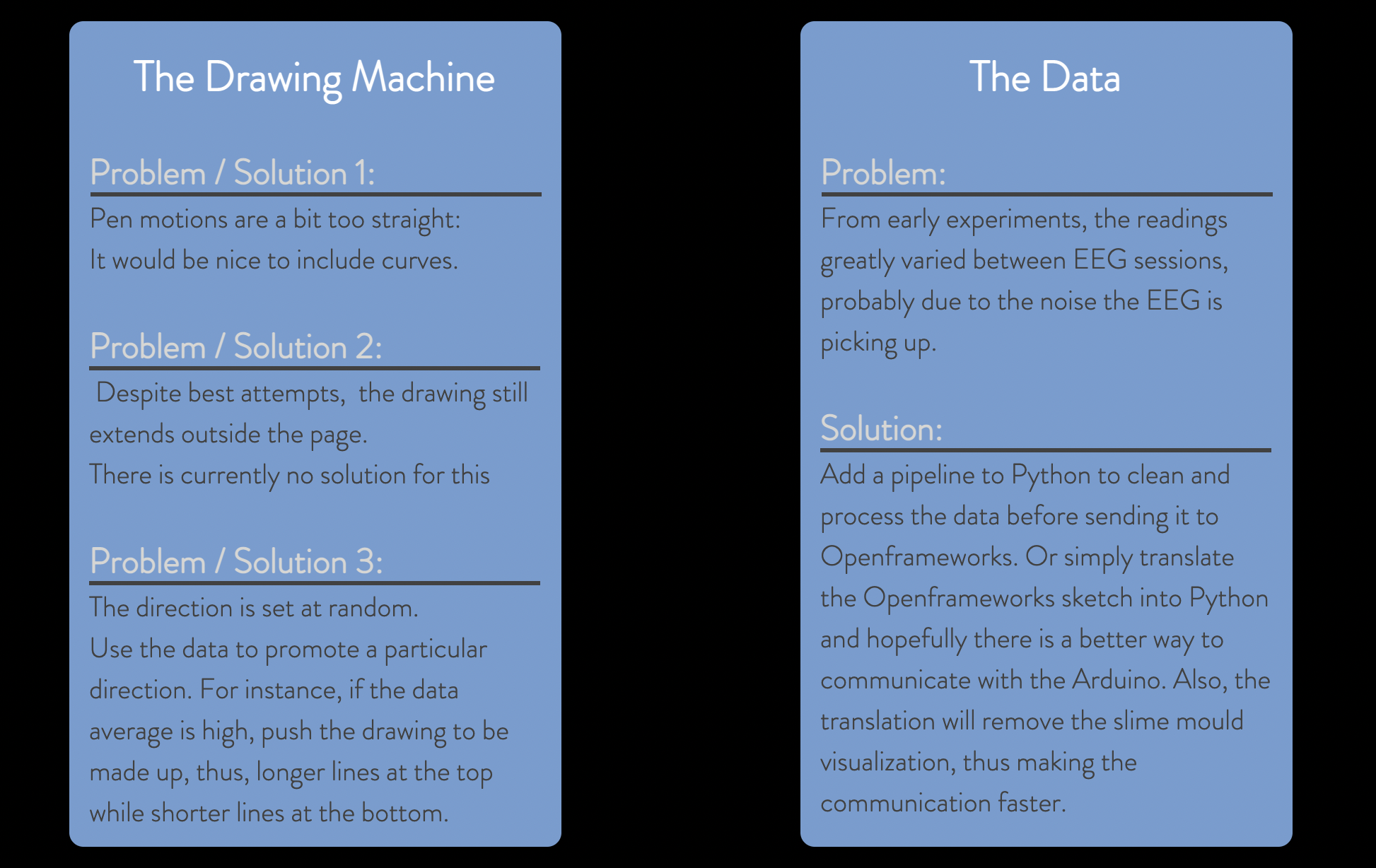

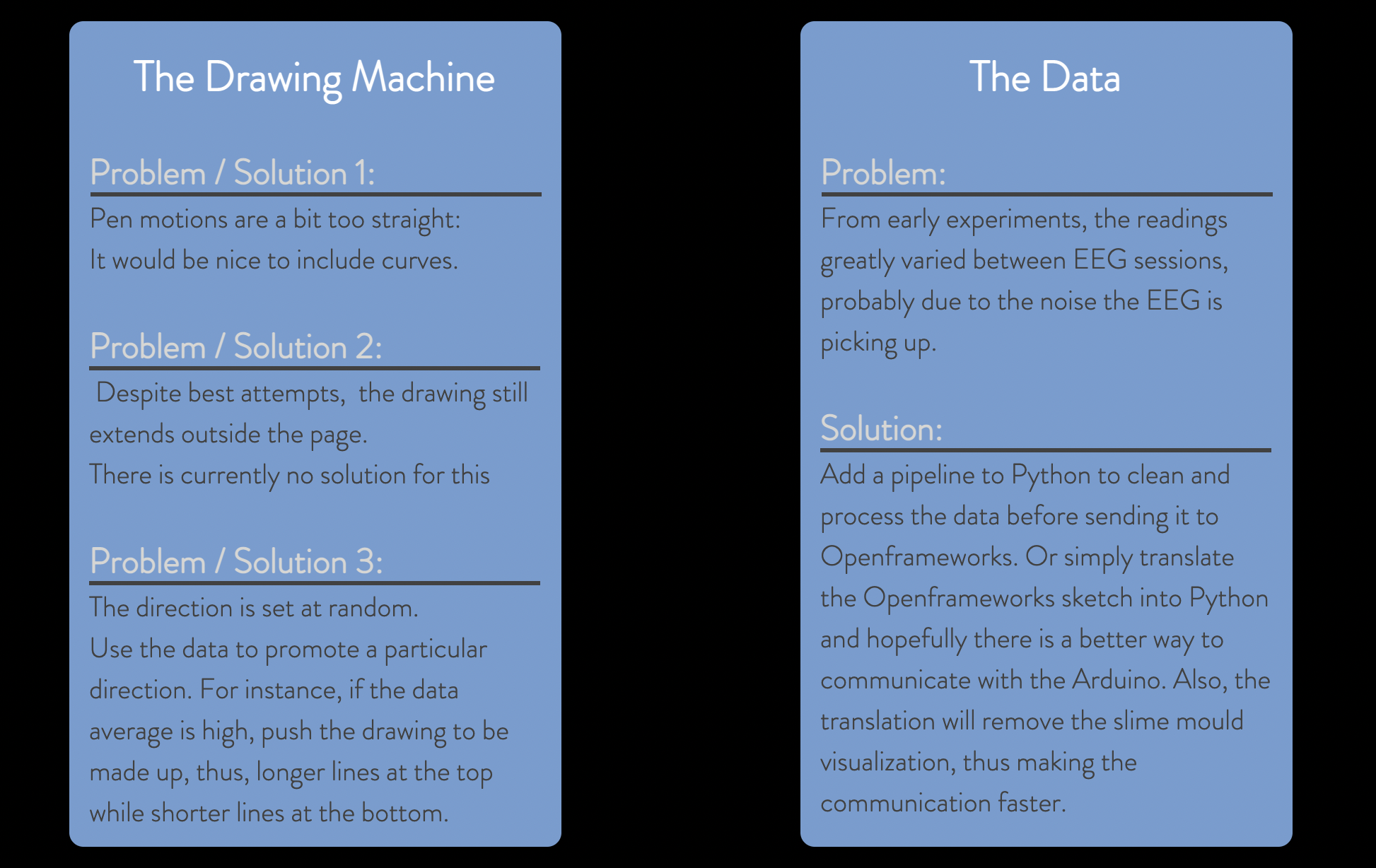

Improvement:

Conclusion:

This project was a great opportunity to learn about neuroscience research and to conceptualize brain activity while studying it. It was also an introduction to bytes: how to encode data in them and communicate with them. It is a first, crude brain-computer interface, a hands-on experiment that physically interprets the early stage of data processing in neuroscience. As the project evolves and grows, it could help show brain interaction in a different light and make people aware of its possibilities, as brain-computer interface technology moves into the near future. Indeed, it would be good to start considering how our brains work before someone else, like Facebook or Neuralink, does it for us.

If you want to see more:

Portfolio:

https://mirkofebbo.wixsite.com/mirkofebboportfolio

Webpage:

https://www.mirkofebbo.com/

Bibliography:

Backyard Brains. “The Heart and Brain SpikerBox.” Backyard Brains, https://backyardbrains.com/products/heartAndBrainSpikerBox.

CNET. “Neuralink: Elon Musk's entire brain chip presentation in 14 minutes (supercut).” YouTube, https://www.youtube.com/watch?v=CLUWDLKAF1M&ab_channel=CNET.

Constine, Josh. “Facebook is building brain-computer interfaces for typing and skin-hearing.” Tech Crunch, 01 04 2017, https://techcrunch.com/2017/04/19/facebook-brain-interface/?guccounter=1&guce_referrer=aHR0cHM6Ly93d3cuZ29vZ2xlLmNvbS8&guce_referrer_sig=AQAAALnnoqeTAc1hHZrNzWDk1QAU2e3Bu1pmY-5A4RnVVSog18YaKLQ5YdtczXMxcNFPbBlvdUdRzu4uTbkI7xn_asdXHD4QPEALiSe8EySqaF_WWO6i8OG.

CNET Highlights. “Republican Senator GRILLS Zuckerberg on Facebook, Google, and Twitter collaboration.” YouTube, https://www.youtube.com/watch?v=pOdrPruSnrw&ab_channel=CNETHighlights.

“Facebook–Cambridge Analytica data scandal.” Wikipedia, https://en.wikipedia.org/wiki/Facebook%E2%80%93Cambridge_Analytica_data_scandal.

Newcomb, Alyssa. “Cleveland news station introduces comical new segment: 'What Day Is It?'” Today, 2020, https://www.today.com/news/what-day-it-cleveland-news-station-introduces-new-segment-t178366.

“Elon Musk startup shows monkey with brain chip implants playing video game.” The Guardian, 09 04 2021, https://www.theguardian.com/technology/2021/apr/09/elon-musk-neuralink-monkey-video-game.

University of Oxford. “Brain area unique to humans linked to cognitive powers.” News, 28 01 2014, https://www.ox.ac.uk/news/2014-01-28-brain-area-unique-humans-linked-cognitive-powers.