Portraits!

PORTRAITS is an interactive installation focused on the exploration of people’s relationship with the work of art. It invites visitors to sit, relax and wait while a robotic painter shoots balls of paint on a canvas in order to create their portrait.

produced by: Valerio Viperino

Introduction

The core idea behind this installation was to investigate how technology can interfere with the relationship that the audience has with the work of art. It's also an attempt to translate an algorithmic procedure into a physical artistic process performed by a robotic entity, playing with the traditional boundaries of artistic authorship.

After my Physical Computing study #1, in which I tried to create a machine that paints over a stencil, I was keen to move on to a more complex machine that could show, or at least fake, some higher degree of agency. In my Computational Art research #1 I was also reflecting on the role of the public in the work of art by envisioning how, with Augmented Reality, people could interact with an ever-evolving piece. All of these influences came together and blended into this final project, which started with the ambitious idea of creating a machine capable of making portraits: because that's how real robot artists roll.

Concept and background research

One of the main influences behind this whole project was the work done by Patrick Tresset with Paul The Robot and all of his robotic successors. I particularly liked the way he played with the audience in order to make people develop some sort of relationship with a non-human entity: he programmed the robots so that they appear to have agency, looking at people and pretending to make artistic choices all of the time. He also recreated a traditional portrait setting in which people willingly suspend their disbelief.

A still of Paul the Robot drawing a portrait

Looking back at how things evolved during the exhibition, I can say that something that helped me achieve a similar effect was the loud burst of the paintball gun when it fires: people cannot ignore it because it abruptly breaks into their comfort zone. They expect a silent and obedient machine that makes a pretty portrait, but they quickly have to reconsider their opinion of this weird robotic servant that erratically throws balls of paint at a wall. The machine appears to have a wicked mind: it's not just a technological slave.

As soon as I began to work on the frame of the machine, I also realised that it was starting to have its own unexpected agency, because once you give a body to what used to be just software procedures and thoughts, every single physical component plays a role in a delicate ecosystem of interplays, resulting in a very fragile and not always predictable thing. While this adds a lot of complexity to the debugging process - there are countless variables that influence a single action - it also makes the machine a more organic system.

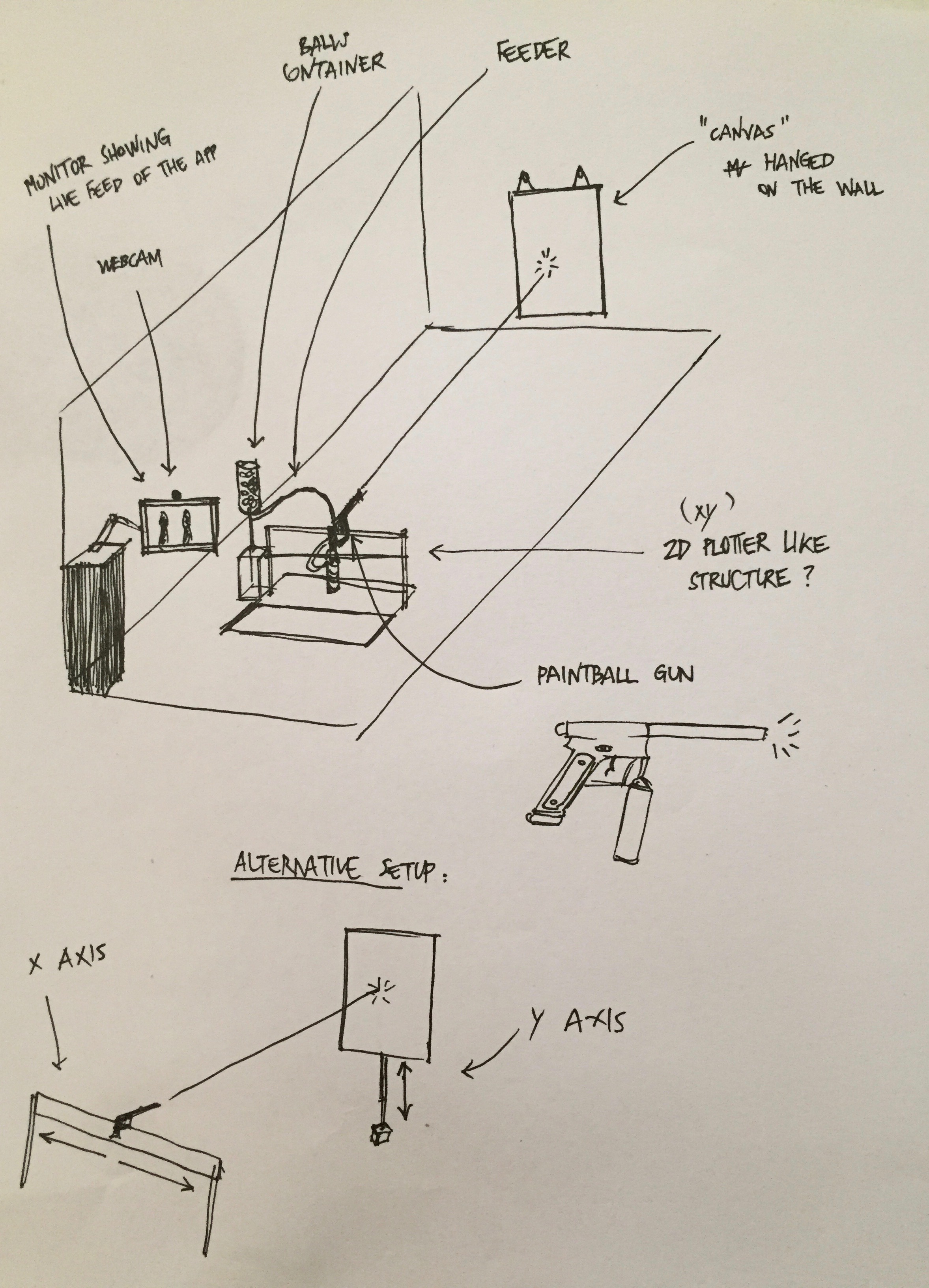

Initial sketch of the installation

One thing that I also constantly kept in mind while building the installation was the contrast between the metallic neatness of the machine, with its black techy elegance, and the setting where it was shooting, which was inevitably chaotic, messy and coloured, as if the machine needed an inspiring workplace, an artist's studio, to do its job.

Other influences, more on the technical side than the conceptual one, were the CPU vs GPU machines realised by Adam Savage and the Mythbusters and the Facade Printer by Sonice Development. I had never built something so complex, so I had to continuously go back and study their projects to understand how they designed their machines and obtained their awesome results. Studying their solutions was very helpful, but in the end I decided to explore a different approach: instead of a pan and tilt system, I went for a full CNC-style design with the freedom to move the gun along the X and Y axes.

The painting process

Technical

Software

Everything on the computer side was programmed using openFrameworks and many, many addons. Building a project like this one without all of the libraries that the open source community provides would have meant spending a whole year or more reinventing the wheel: implementing the SLIP protocol via serial, the OSC bundling, the OpenCV algorithms, and so on. I wanted to stay high level because I was already low level enough with all the electronics and hardware related to the construction of the machine.

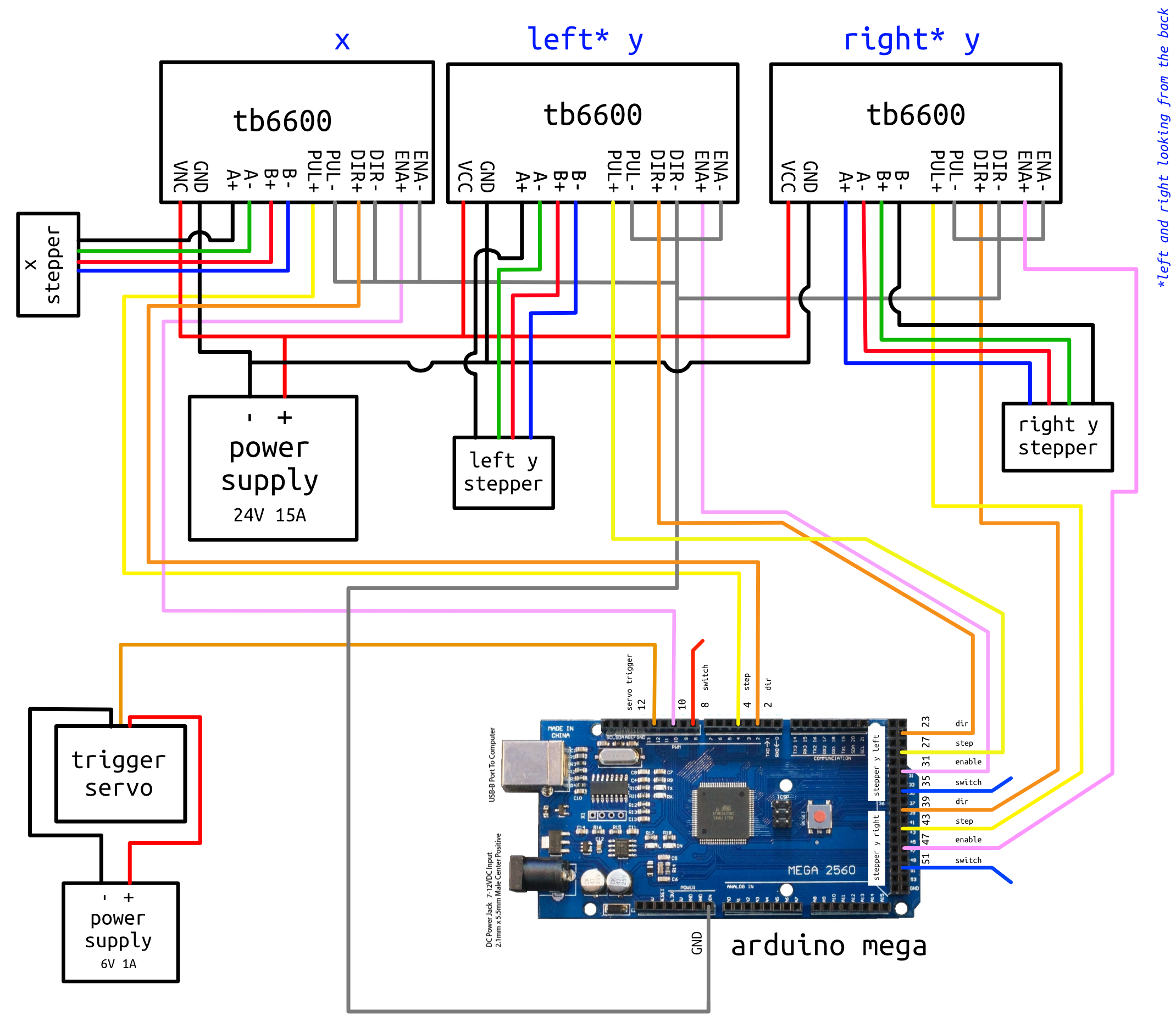

On the physical computing side, I used an Arduino Mega to control the motors and the servos required to move and shoot the marker.

I initially started controlling the steppers with the AccelStepper library, but I quickly found out that I needed full control over the logic of the movements, so I just wrote my own functions to do everything. It was not overly complex, but it gave me a deeper sense of how CNC machines work (keeping track of the current position, the motor calibration, full step and half stepping modes, etc.).
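As a rough illustration of what "keeping track of the current position" means, here is a minimal Python sketch. It is not the Arduino code from the project: the `Axis` class, its method names and the default of 200 full steps per revolution (typical for 1.8 degree motors) are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class Axis:
    """Hypothetical model of one stepper-driven axis, counted in motor steps."""
    position: int = 0            # current position, in steps from the home point
    half_stepping: bool = False  # half stepping doubles the steps per revolution

    def steps_per_revolution(self, full_steps: int = 200) -> int:
        # 200 full steps per revolution is an assumed, typical value.
        return full_steps * 2 if self.half_stepping else full_steps

    def plan_move(self, target: int) -> Tuple[int, int]:
        """Return (direction, step_count) needed to reach the target position."""
        delta = target - self.position
        direction = 1 if delta >= 0 else -1
        return direction, abs(delta)

    def move_to(self, target: int) -> int:
        """Step towards the target one step at a time, updating the position."""
        direction, count = self.plan_move(target)
        for _ in range(count):
            # A real driver would pulse the STEP pin here.
            self.position += direction
        return count
```

Because the motors run open loop, the stored position is only as good as the calibration: a single missed step makes the software and the physical axis disagree until the machine is homed again.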

The most challenging software part of the project was correctly streaming the x,y coordinates to the Arduino. I did many tests using ofSerial and sending raw bytes, then testing the SLIP protocol to have a more structured message, and finally SLIP within an OSC bundle, which is provided by bakercp's ofxSerial library. It's a high level solution and provides easy to read code, although it creates some overhead because OSC also has to send the address. But premature optimization is the root of all evil, and I didn't need to send a lot of data every frame.
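To give a sense of what SLIP adds over raw bytes, here is a minimal Python sketch of SLIP framing as described in RFC 1055. It only shows the framing idea and is not the ofxSerial API or the project's code. In particular, packing a point as two little-endian 16-bit integers is a hypothetical wire format chosen for the example, and the OSC layer is left out entirely.

```python
import struct

# Special byte values defined by SLIP (RFC 1055).
END, ESC, ESC_END, ESC_ESC = 0xC0, 0xDB, 0xDC, 0xDD


def slip_encode(payload: bytes) -> bytes:
    """Escape reserved bytes and terminate the frame with END."""
    out = bytearray()
    for byte in payload:
        if byte == END:
            out += bytes([ESC, ESC_END])
        elif byte == ESC:
            out += bytes([ESC, ESC_ESC])
        else:
            out.append(byte)
    out.append(END)
    return bytes(out)


def slip_decode(frame: bytes) -> bytes:
    """Recover the payload from a single END-terminated frame."""
    out = bytearray()
    escaped = False
    for byte in frame:
        if escaped:
            if byte == ESC_END:
                out.append(END)
            elif byte == ESC_ESC:
                out.append(ESC)
            else:
                raise ValueError("invalid SLIP escape sequence")
            escaped = False
        elif byte == ESC:
            escaped = True
        elif byte == END:
            break  # end of this frame
        else:
            out.append(byte)
    return bytes(out)


def pack_point(x: int, y: int) -> bytes:
    """Hypothetical wire format: x and y as little-endian signed 16-bit ints."""
    return slip_encode(struct.pack("<hh", x, y))


def unpack_point(frame: bytes) -> tuple:
    """Inverse of pack_point."""
    return struct.unpack("<hh", slip_decode(frame))
```

The point of the escaping is that the END byte can never appear inside a payload, so the receiver can always find frame boundaries even when a coordinate happens to contain the value 0xC0.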

The way the serial communication works is simple: the PC app sends the coordinates to the machine, the machine moves and shoots, and then it sends back the coordinates. If they match what was originally sent, the app sends the coordinates for the next point and so on; otherwise it logs the error. In this way I could keep testing the communication until I was sure that no transmission errors were occurring.
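The send, echo and compare loop described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `stream_points`, `perfect_machine` and `faulty_machine` are hypothetical names, the serial link is replaced by a plain function call, and the sketch assumes the app keeps going after logging a mismatch, which the text above does not specify.

```python
from typing import Callable, Iterable, List, Tuple

Point = Tuple[int, int]


def stream_points(
    points: Iterable[Point],
    send_and_wait: Callable[[Point], Point],
) -> List[Tuple[int, Point, Point]]:
    """Send points one at a time and collect every echo that does not match.

    send_and_wait stands in for the serial round trip: it should block until
    the machine has moved, fired and sent its coordinates back.
    """
    errors = []
    for index, sent in enumerate(points):
        echoed = send_and_wait(sent)
        if echoed != sent:
            # Assumption: log the mismatch and carry on with the next point.
            errors.append((index, sent, echoed))
    return errors


def perfect_machine(point: Point) -> Point:
    """Stand-in for the real machine: echoes exactly what it received."""
    return point


def faulty_machine(point: Point) -> Point:
    """Stand-in that corrupts the y value of one specific point."""
    x, y = point
    return (x, y + 1) if point == (3, 4) else point
```

Running `stream_points` against `faulty_machine` returns one entry describing the index, the point sent and the point echoed back, which is the kind of record that makes a flaky link easy to spot.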

The optimization of the path is an old computer science issue, the so-called Traveling Salesman Problem. You can find more information on how I got around it in the documentation video.
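The approach actually used in the project is covered in the documentation video and is not reproduced here. For readers unfamiliar with the problem, one common and simple way to get a reasonable (not optimal) ordering is the nearest-neighbour heuristic: always move to the closest point not yet visited. The Python sketch below shows that heuristic only; the function names and the starting position of (0, 0) are assumptions.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def distance(a: Point, b: Point) -> float:
    """Euclidean distance between two points."""
    return math.hypot(a[0] - b[0], a[1] - b[1])


def nearest_neighbour_order(points: Sequence[Point], start: Point = (0, 0)) -> List[Point]:
    """Greedy ordering: repeatedly visit the closest point not yet visited."""
    remaining = list(points)
    ordered = []
    current = start
    while remaining:
        closest = min(remaining, key=lambda p: distance(current, p))
        remaining.remove(closest)
        ordered.append(closest)
        current = closest
    return ordered


def path_length(points: Sequence[Point], start: Point = (0, 0)) -> float:
    """Total travel distance when visiting the points in the given order."""
    total = 0.0
    current = start
    for point in points:
        total += distance(current, point)
        current = point
    return total
```

For a machine that physically drags a gun between shots, even this greedy ordering can cut travel time noticeably compared with firing the points in the order they were generated, although it gives no guarantee of finding the shortest path.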

Hardware

Schematics of the 3 stepper motors and servo (nema23 x 2, nema17 x 1, LD-20MG x 1)

On the hardware side, things were more complex. I had to find a way of calculating how much torque I needed on the y motors to lift the x axis, made of an aluminium bar, against gravity; I had to be sure that the cables were correctly laid out without getting in the way of the gun; and finally I had to find a reliable way to pull the trigger. It was a lot of fun.

I made some scripts to help me with the calculations (high school physics, honestly), called compute_torque_for_mass.py and linear_distance_from_steps.py.
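The contents of those two scripts are not shown here, so the following is only a sketch of the kind of high school physics involved, not a copy of them. It assumes a belt and pulley drive (the drive type is not stated above), and the function names, the safety factor and the example numbers are all hypothetical.

```python
import math

G = 9.81  # gravitational acceleration, in m/s^2


def holding_torque_nm(mass_kg: float, pulley_radius_m: float,
                      safety_factor: float = 2.0) -> float:
    """Torque needed to hold a hanging mass via a pulley of the given radius.

    torque = force * lever arm = (m * g) * r, scaled by a safety factor to
    leave headroom for friction and acceleration (an assumed rule of thumb).
    """
    return mass_kg * G * pulley_radius_m * safety_factor


def linear_distance_mm(steps: int, steps_per_rev: int,
                       pulley_radius_mm: float) -> float:
    """Belt travel for a number of motor steps, assuming a belt and pulley drive.

    One full revolution moves the belt by one pulley circumference (2 * pi * r).
    """
    revolutions = steps / steps_per_rev
    return revolutions * 2 * math.pi * pulley_radius_mm
```

As a usage example with made-up numbers, `holding_torque_nm(2.0, 0.01)` estimates the torque for lifting a 2 kg axis with a 10 mm pulley, and `linear_distance_mm(200, 200, 10)` gives the travel for one full revolution of a 200 step motor.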

Then, before buying anything, I made a previz inside Fusion360 and animated the joints in order to get a sense of how things would work together. This saved me a lot of time and money, even though in the end there are some things which are really, really complex to foresee when you're designing in a virtual space.

echosystems installation-previz from VVZ3N on Vimeo.

Future development

There are hundreds of directions in which I want to expand this project, but the most prominent ones are the audience experience and the aesthetics of the portraits.

I liked that the reactions from people coming to see the shootings were both joyful and surprised - seeing the facial expressions of people during the first burst of the gun was simply priceless. And even if the painting process was long and not very legible, most of them would still stick around to see what was going on. I want to research more ways to enhance this aspect of the installation and make it even more memorable.

Then I want to improve the resemblance of the portraits to the actual subjects, something which was initially a big personal disappointment because hey - I spent 2 months struggling with this machine and now it's just spitting balls of colour onto the wall. It's not that the aesthetics are the focus of this work - it's just that this piece is about people, and I want people to see themselves in the portraits.

Given how complex it is to calibrate a custom-made CNC machine, I'm thinking of using drones in the future in order to move the gun around. : )

Self evaluation

I can now finally say - without feeling too depressed - that in a way this piece is just a beginning. It's not mature, not polished or perfectly completed. But it's a proud first step.

I learned a lot and I'm happy. I struggled a lot and this too was part of the artistic journey.

I wish I had dedicated more time to it, but in the end it was the result of achieving a personal balance between spending money and earning money. If I were to start from the beginning, I would for sure ask for the help of an engineer or an experienced CNC maker in order to avoid many of the stressful issues that I encountered and which made me lose a lot of time.

I also feel lucky to have learned so much just by watching people engage with the installation, and to have had real friends helping me. There are plenty of things that couldn't be described in books that now make sense to me after having observed countless reactions and emotions, and I think this is the truly precious baggage that I'll carry with me into the future.

A happy visitor proudly standing next to his portrait

References

“Portrait drawing by Paul the robot”, ResearchGate, accessed on 18/09/2018: https://www.researchgate.net/publication/256937942_Portrait_drawing_by_Paul_the_robot

“Facade Printer”, designboom, accessed on 18/09/2018: https://www.designboom.com/design/facade-printer/

“Adam and Jamie Paint the Mona Lisa in 80 Milliseconds!”, YouTube, accessed on 18/09/2018: https://www.youtube.com/watch?v=WmW6SD-EHVY

Github repository of the project, accessed on 18/09/2018: https://github.com/vvzen/paintball-portraits

“Openframeworks”, official website, accessed on 18/09/2018: http://openframeworks.cc

“Arduino”, official website, accessed on 18/09/2018: http://arduino.cc