What constitutes true intimacy in the context of performance? With the aid of digital technologies, how can we foster a sense of audience-performer as well as inter-audience intimacy in what our research group came to call “remote environments”? These are the main questions our group is concerned with.

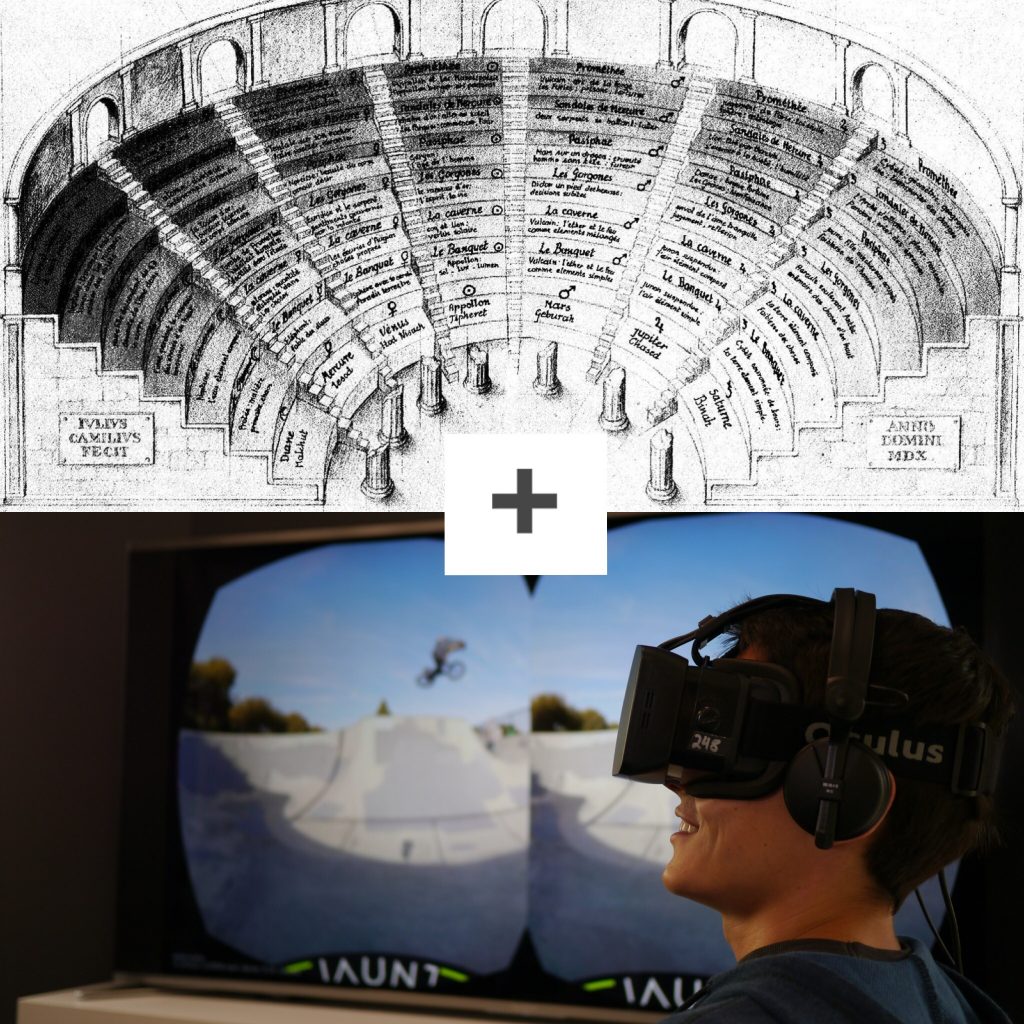

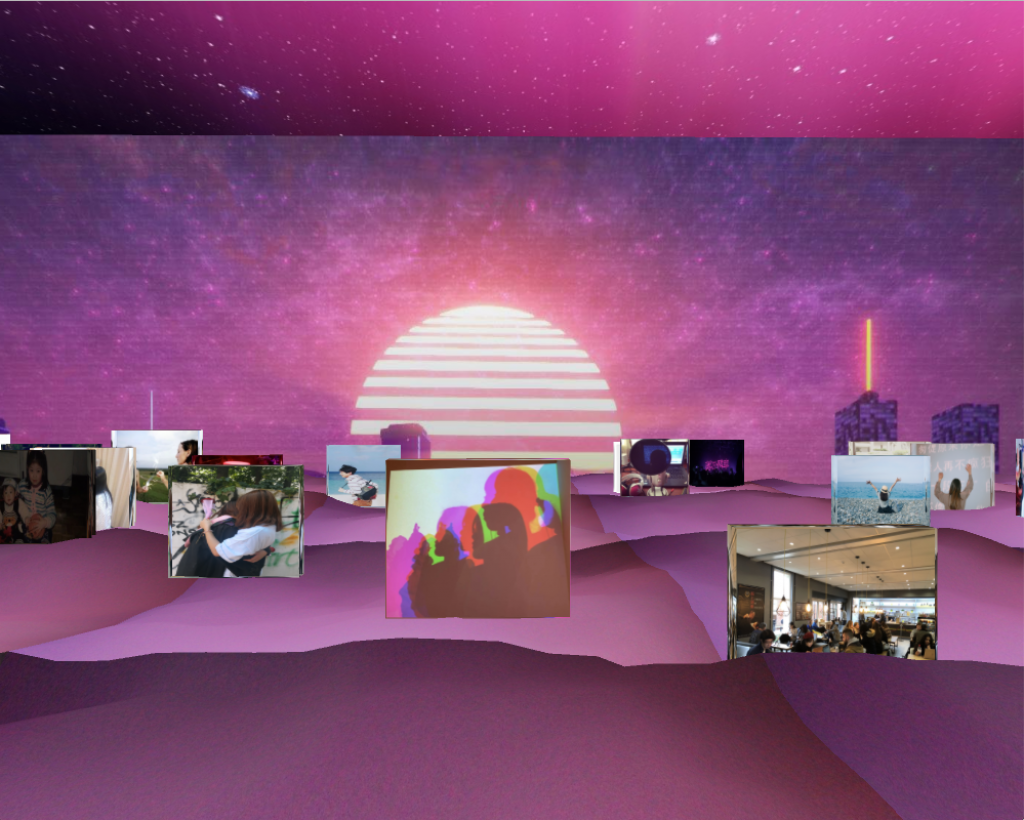

We came to define a “remote environment” in performance as one where the audience is physically removed from the location of the spectacle they are observing. This is not only the case in “digital” environments such as VR or MMORPGs, where the user is obviously physically isolated, while their “digital self” or avatar participates in the experience. For us, a live music concert or theatre performance also constitutes a “remote environment”: the audience is condemned to a physical distance from the performers, who are usually on a stage.

Our hypothesis is that a sense of true intimacy can still be established between an audience and a performer, as well as between audience members themselves, not by attempting to lessen the physical distance, but by creating strong emotional connections between all parties.

One example we looked into, where intimacy is created between an audience and a performer despite the physical distance, is the case of Hatsune Miku, the famous Vocaloid software. Despite not having a physical dimension at all, she has a devoted fan base who will attend her concerts just to get a glimpse of her performing “live” (in reality, as a hologram). We tried to dissect the ways in which Miku’s creators built this bond between her and her fans. In “Human-Machine Reconfigurations: Plans and Situated Actions,” Lucy Suchman unpacks ideas such as “what it means to be humanlike,” as well as embodiment: in what ways are machines “figured,” meaning represented, anthropomorphically or anthropocentrically, and to what effect? In this case, why is the digital element, Miku, “figured” as a teenage girl? It would appear that her creators have attempted to represent her as a young female pop star, not unlike the human ones who dominate today’s music charts. This way, fans can emotionally “invest” in her as much as they would in a human performer. After all, when attending a human performer’s concert, the audience has no way of interactively or haptically verifying the performer’s physical dimension. How is such a performer any different from Miku?

For our artefact, we attempted to quantify the element of “emotional connection” during remote experiences by using an Arduino-powered heart-rate monitor to record the heart rates of a sample group in real time as they experienced a performance through a VR headset. More specifically, our aim was to use the biometric data collected in the study to understand the viewer’s engagement with the performance: for example, does their heart rate pick up when something new and exciting happens?
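One way to frame the engagement question above is to compare a participant’s average heart rate shortly before and shortly after a notable moment in the performance. The following is only a minimal sketch under stated assumptions, not the analysis we ran: the function name `heart_rate_change`, the `(timestamp, bpm)` sample format, and the 10-second window are all hypothetical choices made for illustration.

```python
from statistics import mean


def heart_rate_change(samples, event_time, window=10.0):
    """Mean BPM in the window after an event minus the mean BPM before it.

    samples: iterable of (timestamp_seconds, bpm) pairs (assumed format).
    event_time: time of the moment of interest, in the same time base.
    window: length in seconds of each comparison window (assumed value).
    Returns None when either window contains no samples.
    """
    samples = list(samples)
    before = [bpm for t, bpm in samples if event_time - window <= t < event_time]
    after = [bpm for t, bpm in samples if event_time <= t < event_time + window]
    if not before or not after:
        return None
    return mean(after) - mean(before)


# Made-up readings: one sample every 5 seconds, with an event at t = 10 s.
readings = [(0.0, 70.0), (5.0, 72.0), (10.0, 80.0), (15.0, 82.0)]
change = heart_rate_change(readings, event_time=10.0)
```

A positive value would suggest the heart rate rose after the event, although movement, breathing, and sensor noise can produce similar changes, so a single difference is weak evidence on its own.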

We were also interested in looking for patterns across different participants’ heart-rate data: do they show any evidence of syncing? According to “Science of the opera,” a study carried out by Stephen Fry and Alan Davies, audience members’ hearts seemed to start syncing during the performance of an opera. This could imply some correlation between biometric activity and the feeling of connection and intimacy one might experience during a show.
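A simple first check for syncing between two participants is the Pearson correlation of their heart-rate series. The sketch below assumes both series have already been resampled onto the same time points; the function name `pearson` and the sample values are hypothetical, and this is one possible measure rather than the method used in the study mentioned above.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length series.

    Returns a value between -1 and 1, or None if either series is constant
    (in which case the correlation is undefined).
    """
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("series must have the same length (at least 2)")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return None
    return cov / sqrt(var_x * var_y)


# Made-up BPM readings for two participants at the same time points.
participant_a = [68.0, 70.0, 75.0, 80.0, 78.0]
participant_b = [72.0, 73.0, 79.0, 85.0, 82.0]
sync_score = pearson(participant_a, participant_b)
```

A score close to 1 would indicate that the two heart rates rise and fall together. That alone would not show a shared feeling of intimacy, since both participants are reacting to the same stimulus, so a comparison against pairs who watched at different times would be a sensible control.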

Unforeseen, persistent technical difficulties with the various heart-rate monitoring equipment we tried and tested over the span of a few weeks left us unable to collect enough results to draw a definitive conclusion, but we plan on continuing our research. The work so far has also led us to new lines of inquiry. For example, if our future findings support the theory about syncing heart rates, what would be the effect of externalising this data for all audiences and performers to see? What if a performer were to interact with this data? We believe that the externalisation of otherwise “invisible” biometric data during a performance would be a big step toward creating greater audience-performer as well as inter-audience intimacy.

References

- Bollen, Johan, Herbert Van de Sompel, Aric Hagberg, Luis Bettencourt, Ryan Chute, Marko A. Rodriguez, and Lyudmila Balakireva. ‘Clickstream Data Yields High-Resolution Maps of Science’. Edited by Alan Ruttenberg. PLoS ONE 4, no. 3 (11 March 2009): e4803. https://doi.org/10.1371/journal.pone.0004803.

- Breaker, Jeanine. ‘The Complexion of Two Bodies. Part One: Nuance Drawn Out’. Leonardo 46, no. 5 (October 2013): 425–31. https://doi.org/10.1162/LEON_a_00636.

- Leach, James, and Scott deLahunta. ‘Dance Becoming Knowledge: Designing a Digital “Body”’. Leonardo 50, no. 5 (October 2017): 461–67. https://doi.org/10.1162/LEON_a_01074.

- Marks, Laura U. The Skin of the Film: Intercultural Cinema, Embodiment, and the Senses. Durham: Duke University Press, 2000.

- Pongrac, Helena, Jan Leupold, Stephan Behrendt, Berthold Färber, and Georg Färber. ‘Human Factors for Enhancing Live Video Streams with Virtual Reality: Performance, Situation Awareness, and Feeling of Telepresence’. PRESENCE: Teleoperators and Virtual Environments 16, no. 5 (2007): 488–508. https://doi.org/10.1162/pres.16.5.488.

- Shoda, Haruka, Mayumi Adachi, and Tomohiro Umeda. ‘How Live Performance Moves the Human Heart’. PLoS ONE 11, no. 4 (22 April 2016). https://doi.org/10.1371/journal.pone.0154322.

- Spiess, Klaus, Lucie Strecker, Michael Kimmel, Melanie Martes, and Peter Pietschmann. ‘Modeling the Immune System with Gestures: A Choreographic View of Embodiment in Science’. Leonardo 51, no. 5 (October 2018): 509–16. https://doi.org/10.1162/leon_a_01464.